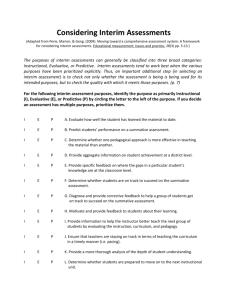

Can Interim Assessments be Used for Instructional Change?
