APPLICATIONS OF SATELLITE AND AIRBORNE IMAGE DATA TO COASTAL MANAGEMENT

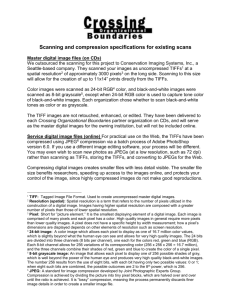
