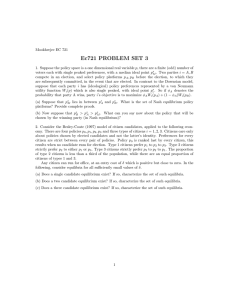

Answers to Midterm Examination EC 703 Spring 2016


1. (a) The easiest approach is to divide the Nash equilibria into two cases: those where s1 + s2 ≤ 10 and those where s1 + s2 > 10.

What would have to be true for an equilibrium in the first case? First, s1 ≥ 0 and s2 ≥ 0, since demanding 0 guarantees a payoff of 0, which is better than getting something negative. Second, we must have s1 + s2 = 10; otherwise, each player has an incentive to increase his demand. It is not hard to see that any (s1, s2) ∈ S1 × S2 satisfying s1 ≥ 0, s2 ≥ 0, and s1 + s2 = 10 is an equilibrium.

Now consider s1 + s2 > 10. When would such a pair of strategies form an equilibrium? It must be better for each player to have no agreement than to compromise and "accept" the other person's demand. For player 1, "accepting" 2's demand (that is, changing to s1 = 10 − s2) must be no better than nothing, so 10 − s2 ≤ 0, or s2 ≥ 10. Similarly, s1 ≥ 10. It is not hard to see that any (s1, s2) ∈ S1 × S2 with s1 ≥ 10 and s2 ≥ 10 is an equilibrium.

(b) Demanding 0 or less is dominated. Demanding 0 ensures a payoff of zero, while demanding .01 sometimes gives 0 and sometimes gives .01; hence .01 is better. Similarly, demanding −.01 gives either 0 or −.01 and so is dominated by demanding .01. On the other hand, any strictly positive demand is undominated. To see this, fix any demand s > 0. If the opponent chooses 10 − s, then s is the unique best reply, so s cannot be dominated. Hence the set of dominated strategies is the set of si ≤ 0. Removing these changes the set of Nash equilibria only slightly: the equilibria (0, 10) and (10, 0) are eliminated, but all the other equilibria remain.

(c) Once we eliminate demands of 0 or less, demands of 10 or more become dominated.
To see this, note that a demand of 10 or more necessarily yields a payoff of 0, since the opponent never demands less than .01. Demanding .01 is better, since it sometimes yields a payoff of .01. Again, no other strategy is dominated: for any s strictly between 0 and 10, the opponent may demand 10 − s, and since s is the unique best reply to this, s cannot be dominated. Eliminating demands of 10 or more eliminates all the Nash equilibria in which the total demand exceeds 10. Hence we are left only with the (s1, s2) satisfying .01 ≤ si ≤ 9.99 and s1 + s2 = 10.

The most common mistakes on this question were:

- missing some of the equilibria in (a);
- missing the fact that 0 is dominated in the first round, and therefore not eliminating 10 in the second round;
- eliminating strategies above 10 in the first round.

2. The normal form is as follows. Player 1 chooses the row, player 2 the column, and player 3 the table (f or g).

Player 3 plays f:

|   | d | e |
|---|---|---|
| a | 4, 2, 2 | −3, 0, 1 |
| b | −2, 0, 3 | 1, 1, 0 |
| c | 3, 4, −3 | 3, 4, −3 |

Player 3 plays g:

|   | d | e |
|---|---|---|
| a | 4, 2, 2 | 0, 3, 0 |
| b | −2, 0, 3 | 2, 1, 1 |
| c | 3, 4, −3 | 3, 4, −3 |

The pure strategy Nash equilibria are (a, d, f), (c, e, f), and (c, e, g). Since the only subgame is the game itself, these are also the pure strategy subgame perfect equilibria.

To see that (a, d, f) is a weak perfect Bayesian equilibrium, take 2's belief to put probability 1 on the left-hand node of his information set, and take 3's belief to put probability 1 on the left-hand node of his information set. Weak consistency forces 2's belief to be as specified and allows 3's to be as specified. The strategies are sequentially rational given these beliefs. For 2, d gives a payoff of 2 versus 0 from e. For 3, f gives 1 versus 0 from g.

For (c, e, f), use the same belief as above for 3. For 2, take the belief to put probability 1 on the right-hand node. Since neither information set is reached, weak consistency allows these beliefs. With these beliefs, the strategies are sequentially rational. For 2, e gives 1 versus 0 from d. For 3, f gives 1 versus 0 from g. Hence this is a weak perfect Bayesian equilibrium.
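
As a mechanical check on the normal form above, here is a brute-force sketch in Python. The dictionary layout and the function names (`is_pure_nash`, `value2`, `value3`, `sequentially_rational`) are illustrative, not part of the exam. The code encodes the two tables and lists the pure strategy Nash equilibria. It then tests whether 2's and 3's actions are best replies at given beliefs `mu2` and `mu3`, the probabilities each puts on the left-hand node (the one following a) of his information set. The tree it assumes is inferred from the tables and the belief arguments in this answer:

- 2's information set follows a or b;
- 3's information set follows (a, e) or (b, e).

```python
from itertools import product

# Payoffs (player 1, player 2, player 3) from the two tables above.
# Player 1 picks a/b/c, player 2 picks d/e, player 3 picks f/g.
PAYOFFS = {
    ("a", "d", "f"): (4, 2, 2),   ("a", "e", "f"): (-3, 0, 1),
    ("b", "d", "f"): (-2, 0, 3),  ("b", "e", "f"): (1, 1, 0),
    ("c", "d", "f"): (3, 4, -3),  ("c", "e", "f"): (3, 4, -3),
    ("a", "d", "g"): (4, 2, 2),   ("a", "e", "g"): (0, 3, 0),
    ("b", "d", "g"): (-2, 0, 3),  ("b", "e", "g"): (2, 1, 1),
    ("c", "d", "g"): (3, 4, -3),  ("c", "e", "g"): (3, 4, -3),
}
ACTIONS = (("a", "b", "c"), ("d", "e"), ("f", "g"))


def is_pure_nash(profile):
    """True if no player gains from a unilateral deviation."""
    for i, options in enumerate(ACTIONS):
        current = PAYOFFS[profile][i]
        for alt in options:
            deviation = profile[:i] + (alt,) + profile[i + 1:]
            if PAYOFFS[deviation][i] > current:
                return False
    return True


def value2(x, s3, mu2):
    """Player 2's expected payoff from x, with probability mu2 on the node
    after a (left) and 1 - mu2 on the node after b (right); 3 plays s3."""
    return mu2 * PAYOFFS[("a", x, s3)][1] + (1 - mu2) * PAYOFFS[("b", x, s3)][1]


def value3(y, mu3):
    """Player 3's expected payoff from y; his nodes follow (a, e) and (b, e)."""
    return mu3 * PAYOFFS[("a", "e", y)][2] + (1 - mu3) * PAYOFFS[("b", "e", y)][2]


def sequentially_rational(profile, mu2, mu3):
    """Check that 2's and 3's actions in `profile` are best replies to their beliefs."""
    _, s2, s3 = profile
    ok2 = all(value2(s2, s3, mu2) >= value2(x, s3, mu2) for x in ACTIONS[1])
    ok3 = all(value3(s3, mu3) >= value3(y, mu3) for y in ACTIONS[2])
    return ok2 and ok3


pure_nash = [p for p in product(*ACTIONS) if is_pure_nash(p)]
print(pure_nash)  # [('a', 'd', 'f'), ('c', 'e', 'f'), ('c', 'e', 'g')]
print(sequentially_rational(("a", "d", "f"), mu2=1, mu3=1))  # True
print(sequentially_rational(("c", "e", "f"), mu2=0, mu3=1))  # True
```

The last two lines use the beliefs proposed above for (a, d, f) and (c, e, f). The same function can be applied to any other assessment, for example one in which 2 and 3 are forced to share a common belief.
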

Finally, for (c, e, g), assume 2 and 3 each put probability 1 on the right-hand node in their information sets. Again, weak consistency allows this, and again the strategies are sequentially rational given these beliefs. Hence this is also a weak perfect Bayesian equilibrium.

Turning to sequential equilibrium, the key observation is that for any consistent belief, the probability 2 assigns to the left-hand node in his information set must equal the probability 3 assigns to the left-hand node in his. To see this, fix any sequence of totally mixed strategies. Let $p_n$ be the probability that 1 plays a in this sequence, $q_n$ the probability that 1 plays b, and $r_n$ the probability that 2 plays e. Then Bayes' rule says that the probability assigned to the left-hand node in 2's information set is

$$\frac{p_n}{p_n + q_n},$$

while the probability assigned to the left-hand node in 3's information set is

$$\frac{p_n r_n}{(p_n + q_n) r_n} = \frac{p_n}{p_n + q_n}.$$

Given this, it is easy to show that (a, d, f) and (c, e, g) are sequential equilibria but (c, e, f) is not.

To construct totally mixed strategies for (a, d, f), take 1's totally mixed strategy to put probability 1/n on b and 1/n on c, and take 2's to put probability 1/n on e. For 2, Bayes' rule says that the probability of the left-hand node in his information set is

$$\frac{1 - 2/n}{1 - 1/n},$$

which converges to 1 as n → ∞. As shown above, the same is then true for 3. Thus the beliefs used above for weak perfect Bayesian equilibrium are consistent, so this is a sequential equilibrium.

For (c, e, g), take 1's totally mixed strategy to put probability 1/n² on a and 1/n on b, and let 2's totally mixed strategy put probability 1/n on d. From Bayes' rule, the probability 2 puts on the right-hand node in his information set must be

$$\frac{1/n}{1/n^2 + 1/n} = \frac{n}{1 + n},$$

which converges to 1 as n → ∞. From the reasoning above, 3's probability on the right-hand node in his information set also converges to 1.
Hence, again, the beliefs used for weak perfect Bayesian equilibrium for this strategy profile are consistent, so this is a sequential equilibrium.

To see that (c, e, f) cannot be part of a sequential equilibrium, suppose to the contrary that there is a consistent belief for which these strategies are sequentially rational. Let $\mu$ be the probability 2 assigns to the left-hand node in his information set. By the observation above, $\mu$ must also be the probability 3 assigns to the left-hand node in his information set. For 2's strategy of e to be sequentially rational, we must have

$$(0)\mu + (1)(1 - \mu) \ge 2\mu, \quad\text{or}\quad \mu \le 1/3.$$

For 3's strategy of f to be sequentially rational, we need

$$\mu \ge 1 - \mu, \quad\text{or}\quad \mu \ge 1/2.$$

Hence there is no such $\mu$.

The most common mistakes on this question concerned showing consistency:

- Sometimes the Bayes' rule calculation was done incorrectly.
- More often, a profile was said not to be part of a sequential equilibrium because one particular totally mixed strategy did not converge to the right beliefs.
- A similar error was to show that one particular belief supporting sequential rationality was not consistent.

To show that a strategy profile is part of a sequential equilibrium, you must find one belief that makes it sequentially rational and prove that this belief is consistent; any belief satisfying these two conditions will do. Thus, to show that a strategy profile is not part of a sequential equilibrium, you must show that no belief which makes the strategies sequentially rational is consistent. That is, no sequence of totally mixed strategies generates beliefs, via Bayes' rule, that converge to a belief which makes the strategies sequentially rational.

3. First, consider pooling equilibria. Fix some m∗ ∈ [0, ∞) and suppose 1's strategy is m(ta) = m(tb) = m∗. In this case, 2's belief in response to m∗ must be the prior, 1/2 on each type.
Hence the only sequentially rational response for 2 to m = m∗ is β, since (1/2)(1) < (1/2)(3). Depending on 2's beliefs in response to other messages, either α or β could be optimal there. If some m′ < m∗ also leads 2 to play β, 1 would deviate to it, since the action would be the same and the "cost" of the message would be lower. Similarly, if some m′ < m∗ leads 2 to play α, 1 would deviate to it, since he prefers α to β and would also save on the cost. If m∗ > 0, the message 0 < m∗ is always available, so we must have m∗ = 0.

Also, suppose 1 could deviate to some other message m′ that would lead 2 to play α. Type ta would want to deviate if 2 − m′ > 0, or m′ < 2. Similarly, tb would want to deviate if 1 − m′ > 0, or m′ < 1. Hence there can't be an m′ < 2 that leads 2 to play α.

Summarizing, here are the pooling equilibria:

- 1 plays m(ta) = m(tb) = 0.
- 2 plays a(m) = β at least for all m < 2, and could respond to any higher message with either action.
- 2's belief can be 1/2 on each type in response to any message that leads him to play β, and could be, say, probability 1 on ta in response to any message that leads him to play α.

Turning to separating equilibria, let ma = m(ta) and mb = m(tb), where ma ≠ mb. If this is an equilibrium, then 2's belief in response to ma must put probability 1 on ta, so we must have a(ma) = α. Similarly, 2's belief in response to mb must put probability 1 on tb, so we must have a(mb) = β. As above, 2's response to other messages can be either α or β; we'll need to work out which.

When is mb optimal for tb? It must be at least as good for tb as ma, so we must have 1 − ma ≤ −mb, or ma − mb ≥ 1. Note for future reference that this implies ma > 0. What else must be true? If some message m < mb leads 2 to play α, then tb would deviate to it. The same is true if some message m < mb leads 2 to play β. Hence we must have mb = 0.

When is ma optimal for ta?
It must be at least as good for ta as mb = 0, so we must have 2 − ma ≥ −mb = 0, or ma ≤ 2. Also, there cannot be any m < ma that leads 2 to play α, or else ta would deviate to it.

Let's put this together. Fix a value for ma. From the above, ma must lie in the interval [1, 2], and 2 must play β in response to any m < ma. So take 1's strategy to be m(ta) = ma and m(tb) = 0. Take 2's strategy to be a(m) = β for any m < ma and a(m) = α for m = ma. For any larger m, we can take 2's response to be either α or β. For beliefs, there are many things we could specify. The simplest is to have 2 believe t = ta with probability 1 in response to any m for which a(m) = α, and believe t = tb with probability 1 in response to any m with a(m) = β.

Common mistakes: Almost no one fully specified 2's strategy for any of the equilibria. A number of people lost sight of the fact that you can't evaluate 1's payoff to a given m without saying how 2 responds to that message. This led to logical errors of many varieties. For example, it led many people to conclude there are no separating equilibria in this game, and some to conclude there are no equilibria at all.
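
For question 3, the equilibrium conditions can be spot-checked on a finite grid of messages. The sketch below assumes the payoffs implied by the inequalities in the answer:

- Type ta values α at 2 and tb values α at 1; both value β at 0; each type pays its message m as a cost.
- Player 2 gets 1 from α against ta, 3 from β against tb, and 0 otherwise. These values are an assumption, reconstructed from the comparison (1/2)(1) < (1/2)(3).

The function names and the grid of multiples of 1/4 are hypothetical. Deviations are checked only on that grid, so this illustrates the characterization rather than proving it.

```python
from fractions import Fraction

# Assumed payoffs (reconstructed from the inequalities in the answer).
SENDER = {"ta": {"alpha": 2, "beta": 0}, "tb": {"alpha": 1, "beta": 0}}  # minus m
RECEIVER = {"ta": {"alpha": 1, "beta": 0}, "tb": {"alpha": 0, "beta": 3}}
HALF = Fraction(1, 2)


def receiver_best(p_ta):
    """Set of 2's best actions when he puts probability p_ta on type ta."""
    values = {x: p_ta * RECEIVER["ta"][x] + (1 - p_ta) * RECEIVER["tb"][x]
              for x in ("alpha", "beta")}
    top = max(values.values())
    return {x for x, v in values.items() if v == top}


def is_equilibrium(strategy, action, belief, grid):
    """strategy: type -> message; action: message -> 2's action;
    belief: message -> probability on ta. Deviations are checked on `grid` only."""
    # On-path beliefs must follow Bayes' rule; with the 1/2-1/2 prior this is
    # the share of ta among the types sending m.
    for m in set(strategy.values()):
        senders = [t for t, sent in strategy.items() if sent == m]
        if belief(m) != Fraction(senders.count("ta"), len(senders)):
            return False
    # 2 must best-respond to his belief at every message considered.
    for m in list(grid) + list(strategy.values()):
        if action(m) not in receiver_best(belief(m)):
            return False
    # No type of 1 can gain by switching to another message on the grid.
    for t, sent in strategy.items():
        on_path = SENDER[t][action(sent)] - sent
        if any(SENDER[t][action(m)] - m > on_path for m in grid):
            return False
    return True


def pooling(threshold):
    """Both types send 0; 2 plays beta below `threshold` and alpha from it on."""
    def action(m):
        return "alpha" if m >= threshold else "beta"

    def belief(m):
        return Fraction(1) if action(m) == "alpha" else HALF

    return {"ta": Fraction(0), "tb": Fraction(0)}, action, belief


def separating(m_a):
    """ta sends m_a, tb sends 0; 2 plays alpha at every m >= m_a
    (alpha above m_a is one of the allowed choices)."""
    def action(m):
        return "alpha" if m >= m_a else "beta"

    def belief(m):
        return Fraction(1) if action(m) == "alpha" else Fraction(0)

    return {"ta": m_a, "tb": Fraction(0)}, action, belief


GRID = [Fraction(k, 4) for k in range(13)]  # messages 0, 1/4, ..., 3
print(is_equilibrium(*pooling(Fraction(2)), GRID))     # True
print(is_equilibrium(*pooling(Fraction(3, 2)), GRID))  # False: ta deviates to 3/2
print([str(m) for m in GRID if is_equilibrium(*separating(m), GRID)])
# ['1', '5/4', '3/2', '7/4', '2']
```

On this grid, the pooling profile survives only when α is withheld below 2. The separating profiles survive exactly for ma in [1, 2], in line with the characterization above.
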