T) (CL rem Theo

advertisement

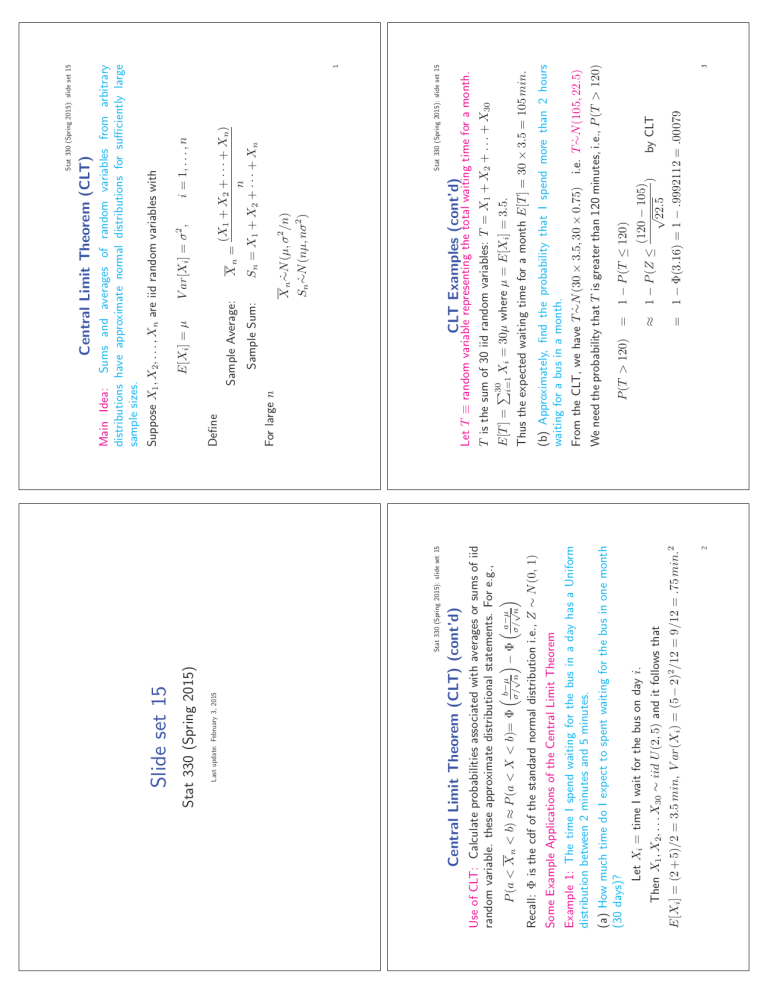

Stat 330 (Spring 2015): Slide set 15
Last update: February 3, 2015

Central Limit Theorem (CLT)

Main Idea: Sums and averages of random variables from arbitrary distributions have approximately normal distributions for sufficiently large sample sizes.

Suppose $X_1, X_2, \ldots, X_n$ are iid random variables with

$$E[X_i] = \mu, \qquad Var[X_i] = \sigma^2, \qquad i = 1, \ldots, n.$$

Define

Sample Sum: $S_n = X_1 + X_2 + \cdots + X_n$
Sample Average: $\bar{X}_n = \dfrac{X_1 + X_2 + \cdots + X_n}{n}$

For large $n$,

$$\bar{X}_n \mathrel{\dot\sim} N(\mu, \sigma^2/n), \qquad S_n \mathrel{\dot\sim} N(n\mu, n\sigma^2),$$

where $\dot\sim$ means "is approximately distributed as".

Central Limit Theorem (CLT) (cont'd)

Use of CLT: Calculate probabilities associated with averages or sums of iid random variables using these approximate distributional statements. For example, with $X \sim N(\mu, \sigma^2/n)$,

$$P(a < \bar{X}_n < b) \approx P(a < X < b) = \Phi\left(\frac{b - \mu}{\sigma/\sqrt{n}}\right) - \Phi\left(\frac{a - \mu}{\sigma/\sqrt{n}}\right).$$

Recall: $\Phi$ is the cdf of the standard normal distribution, i.e., of $Z \sim N(0, 1)$.

Some Example Applications of the Central Limit Theorem

Example 1: The time I spend waiting for the bus in a day has a Uniform distribution between 2 minutes and 5 minutes.

(a) How much time do I expect to spend waiting for the bus in one month (30 days)?

Let $X_i$ = time I wait for the bus on day $i$. Then $X_1, X_2, \ldots, X_{30} \sim$ iid $U(2, 5)$, and it follows that

$$E[X_i] = \frac{2 + 5}{2} = 3.5 \text{ min}, \qquad Var(X_i) = \frac{(5 - 2)^2}{12} = \frac{9}{12} = 0.75 \text{ min}^2.$$

Let $T \equiv$ random variable representing the total waiting time for a month. $T$ is the sum of 30 iid random variables, $T = X_1 + X_2 + \cdots + X_{30}$, so

$$E[T] = \sum_{i=1}^{30} E[X_i] = 30\mu, \quad \text{where } \mu = E[X_i] = 3.5.$$

Thus the expected waiting time for a month is $E[T] = 30 \times 3.5 = 105$ min.

CLT Examples (cont'd)

(b) Approximately, find the probability that I spend more than 2 hours waiting for a bus in a month.

From the CLT, we have $T \mathrel{\dot\sim} N(30 \times 3.5,\ 30 \times 0.75)$, i.e., $T \mathrel{\dot\sim} N(105, 22.5)$.

We need the probability that $T$ is greater than 120 minutes, i.e., $P(T > 120)$:

$$
\begin{aligned}
P(T > 120) &= 1 - P(T \le 120) \\
&\approx 1 - P\left(Z \le \frac{120 - 105}{\sqrt{22.5}}\right) \quad \text{(by CLT)} \\
&= 1 - \Phi(3.16) = 1 - .9992112 = .00079.
\end{aligned}
$$
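As a quick numerical check of Example 1, the sketch below evaluates the CLT approximation $S_n \mathrel{\dot\sim} N(n\mu, n\sigma^2)$ directly and compares it with a small Monte Carlo simulation. $\Phi$ is computed from Python's `math.erf` via $\Phi(z) = \tfrac{1}{2}(1 + \operatorname{erf}(z/\sqrt{2}))$; the helper names `normal_cdf` and `clt_sum_tail`, the random seed and the number of simulated months are illustrative choices, not part of the slides.

```python
import math
import random

def normal_cdf(z: float) -> float:
    """Standard normal cdf Phi(z), computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def clt_sum_tail(n: int, mu: float, var: float, t: float) -> float:
    """CLT approximation of P(S_n > t), treating S_n as N(n*mu, n*var)."""
    z = (t - n * mu) / math.sqrt(n * var)
    return 1.0 - normal_cdf(z)

# Example 1: X_i ~ U(2, 5) for n = 30 days.
a, b, n = 2.0, 5.0, 30
mu = (a + b) / 2          # E[X_i] = 3.5 min
var = (b - a) ** 2 / 12   # Var(X_i) = 0.75 min^2

expected_total = n * mu                    # E[T] = 30 * 3.5 min
p_clt = clt_sum_tail(n, mu, var, 120.0)    # CLT approximation of P(T > 120)

# Monte Carlo check (illustrative): simulate many months, count totals above 120 min.
random.seed(330)
trials = 50_000
hits = sum(
    sum(random.uniform(a, b) for _ in range(n)) > 120.0
    for _ in range(trials)
)
p_sim = hits / trials

print(f"E[T] = {expected_total:.1f} min")
print(f"CLT approximation P(T > 120) = {p_clt:.5f}")
print(f"Simulated         P(T > 120) = {p_sim:.5f}")
```

Because a sum of 30 uniforms is bounded (it can never exceed 150 minutes), its upper tail is somewhat lighter than the normal tail, so the simulated value may come out a little below the CLT figure; both indicate that waiting more than 2 hours in a month is very unlikely.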
CLT Big Example

An astronomer wants to measure the distance, $d$, from the observatory to a star. Due to the variation of atmospheric conditions and imperfections in the measurement method, a single measurement will not produce the exact distance $d$. The astronomer takes $n$ measurements of the distance and uses the sample average to estimate the true distance. From past records of these measurements, the astronomer knows the variance of a single measurement is 4 parsec². How many measurements should the astronomer make so that the chance that the estimate differs from $d$ by more than .5 parsecs is at most .05?

CLT Big Example (cont'd)

Let $X_i$ be the $i$th measurement. The astronomer assumes that $X_1, X_2, \ldots, X_n$ are iid with $E[X_i] = d$ and $Var[X_i] = 4$. The estimate of $d$ is

$$\bar{X}_n = \frac{X_1 + X_2 + \cdots + X_n}{n},$$

and from the CLT we have that $\bar{X}_n \mathrel{\dot\sim} N(d, 4/n)$.

We want to find the number of measurements $n$ so that

$$P(|\bar{X}_n - d| > .5) \le .05.$$

We know that

$$P(|\bar{X}_n - d| > .5) = P(\bar{X}_n - d > .5) + P(\bar{X}_n - d < -.5).$$

We use the CLT to approximate each of the probabilities on the right. Standardizing by $\sqrt{4/n}$, and using $.5/\sqrt{4/n} = \sqrt{n}/4$ and $\Phi(-z) = 1 - \Phi(z)$:

$$
\begin{aligned}
P(|\bar{X}_n - d| > .5) &= P\left(\frac{\bar{X}_n - d}{\sqrt{4/n}} > \frac{.5}{\sqrt{4/n}}\right) + P\left(\frac{\bar{X}_n - d}{\sqrt{4/n}} < \frac{-.5}{\sqrt{4/n}}\right) \\
&\approx P\left(Z > \frac{.5}{\sqrt{4/n}}\right) + P\left(Z < \frac{-.5}{\sqrt{4/n}}\right) \\
&= 1 - \Phi(\sqrt{n}/4) + \Phi(-\sqrt{n}/4) \\
&= 2\left(1 - \Phi(\sqrt{n}/4)\right).
\end{aligned}
$$

CLT Big Example (cont'd)

• We need to find an integer $n$ so that $2(1 - \Phi(\sqrt{n}/4))$ is just less than or equal to .05.
• We will set $2(1 - \Phi(\sqrt{n^*}/4)) = .05$, solve for $n^*$, and take the required number of measurements to be the smallest integer greater than or equal to $n^*$.
• Observe that $2(1 - \Phi(\sqrt{n}/4)) = .05$ implies that $\Phi(\sqrt{n}/4) = .975$.
• Using the Normal cdf tables, this gives $\sqrt{n}/4 = 1.96$; thus $n^* = (4 \times 1.96)^2 = 61.47$.
• Thus the astronomer must take at least 62 measurements to have the accuracy specified above.
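The sample-size calculation above can be sketched in a few lines, assuming Python 3.8+ for `statistics.NormalDist`. It generalizes $\sqrt{n}/4$ to $\varepsilon\sqrt{n}/\sigma$ with $\sigma = 2$ (since $Var[X_i] = 4$) and $\varepsilon = .5$; the function names `miss_probability` and `required_n` are hypothetical. It uses the exact quantile $z_{.975} \approx 1.95996$ instead of the table value 1.96, which gives $n^* \approx 61.46$ rather than 61.47 but the same answer of 62.

```python
import math
from statistics import NormalDist

def miss_probability(n: int, sigma: float, eps: float) -> float:
    """CLT approximation of P(|Xbar_n - d| > eps) = 2 * (1 - Phi(eps * sqrt(n) / sigma))."""
    z = eps * math.sqrt(n) / sigma
    return 2.0 * (1.0 - NormalDist().cdf(z))

def required_n(sigma: float, eps: float, alpha: float) -> int:
    """Smallest integer n >= n*, where n* = (z * sigma / eps)^2 solves miss_probability = alpha."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # about 1.96 when alpha = 0.05
    n_star = (z * sigma / eps) ** 2
    return math.ceil(n_star)

sigma, eps, alpha = 2.0, 0.5, 0.05   # sd 2 parsec, accuracy 0.5 parsec, risk 0.05
n = required_n(sigma, eps, alpha)
print(n)                                   # 62
print(miss_probability(n - 1, sigma, eps))  # just above 0.05
print(miss_probability(n, sigma, eps))      # at most 0.05
```

Checking `n - 1` and `n` confirms that 62 is the smallest number of measurements meeting the requirement under the CLT approximation.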
CLT Example from Baron

Example 4.13 (Allocation of Disk Space): A disk has free space of 330 megabytes. Is it likely to be sufficient for 300 independent images, if each image has an expected size of 1 megabyte with a standard deviation of 0.5 megabytes?

We have $n = 300$, $\mu = 1$ and $\sigma = 0.5$. The number of images $n$ is large, so the CLT applies. Then

$$P(\text{sufficient space}) = P(S_n \le 330) = P\left(\frac{S_n - n\mu}{\sigma\sqrt{n}} \le \frac{330 - (300)(1)}{0.5\sqrt{300}}\right) \approx \Phi(3.46) = .9997.$$

Since this probability is very high, the available disk space is very likely to be sufficient.

Normal approximation to the Binomial

For large $n$, the binomial distribution $B_{n,p}$ is approximately normal $N_{np,\,np(1-p)}$. Why?

Let $Y$ be a variable with a $B_{n,p}$ distribution. We know that $Y$ is the number of successes in $n$ independent Bernoulli experiments with $P(\text{success}) = p$. Write $Y$ as the sum of $n$ iid Bernoulli variables, each with $\mu = E(X_i) = p$ and $\sigma^2 = Var(X_i) = p(1 - p)$:

$$Y = X_1 + X_2 + \cdots + X_n.$$

Applying the CLT result for $S_n$, we have that $Y \mathrel{\dot\sim} N(n\mu, n\sigma^2)$, where $\mu = p$ and $\sigma^2 = p(1 - p)$. That is, $Y \mathrel{\dot\sim} N(np, np(1 - p))$.

Use this approximation only when $np$ and $n(1 - p)$ are both $> 5$; the approximation is pretty good when $np$ and $n(1 - p)$ are both $> 20$. When either $np$ or $n(1 - p)$ is $< 20$, a continuity correction is needed (see Baron p.94).
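The sketch below reproduces the disk-space calculation and then compares the normal approximation to the Binomial with the exact Binomial cdf, with and without a continuity correction. It assumes Python 3.8+ (`statistics.NormalDist`, `math.comb`). The Binomial case $n = 40$, $p = 0.3$, $k = 10$ is a hypothetical illustration chosen so that $np = 12 < 20$; the correction shown (evaluating the normal cdf at $k + 0.5$ for $P(Y \le k)$) is the usual form, assumed here to match the one referenced on Baron p.94. Helper names are hypothetical.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf   # standard normal cdf

# Example 4.13: 300 images, mean 1 MB, sd 0.5 MB, 330 MB of free space.
n_img, mu, sigma, space = 300, 1.0, 0.5, 330.0
z_disk = (space - n_img * mu) / (sigma * math.sqrt(n_img))
p_sufficient = PHI(z_disk)   # CLT approximation of P(S_n <= 330)

def binom_cdf(k: int, n: int, p: float) -> float:
    """Exact P(Y <= k) for Y ~ B(n, p), summing the binomial pmf."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def normal_approx_cdf(k: int, n: int, p: float, continuity: bool) -> float:
    """Approximate P(Y <= k) by N(np, np(1-p)); with continuity=True evaluate at k + 0.5."""
    x = k + 0.5 if continuity else k
    return PHI((x - n * p) / math.sqrt(n * p * (1 - p)))

# Hypothetical Binomial illustration: np = 12 < 20, so a continuity correction is advised.
n, p, k = 40, 0.3, 10
exact = binom_cdf(k, n, p)
plain = normal_approx_cdf(k, n, p, continuity=False)
corrected = normal_approx_cdf(k, n, p, continuity=True)

print(f"Disk: z = {z_disk:.2f}, P(sufficient) = {p_sufficient:.4f}")
print(f"P(Y <= {k}): exact {exact:.4f}, normal {plain:.4f}, corrected {corrected:.4f}")
```

In this case the uncorrected approximation noticeably understates $P(Y \le 10)$, because it effectively drops about half of the probability mass at $Y = 10$; shifting to $k + 0.5$ recovers most of it.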