Stat 330 (Spring 2015): Homework 5

Due: February 23, 2015

Show all of your work, and please staple your assignment if you use more than one sheet. Write your name, the course number and the section on every sheet. Problems marked with * will be graded and one additional randomly chosen problem will be graded. Show all work for full credit.

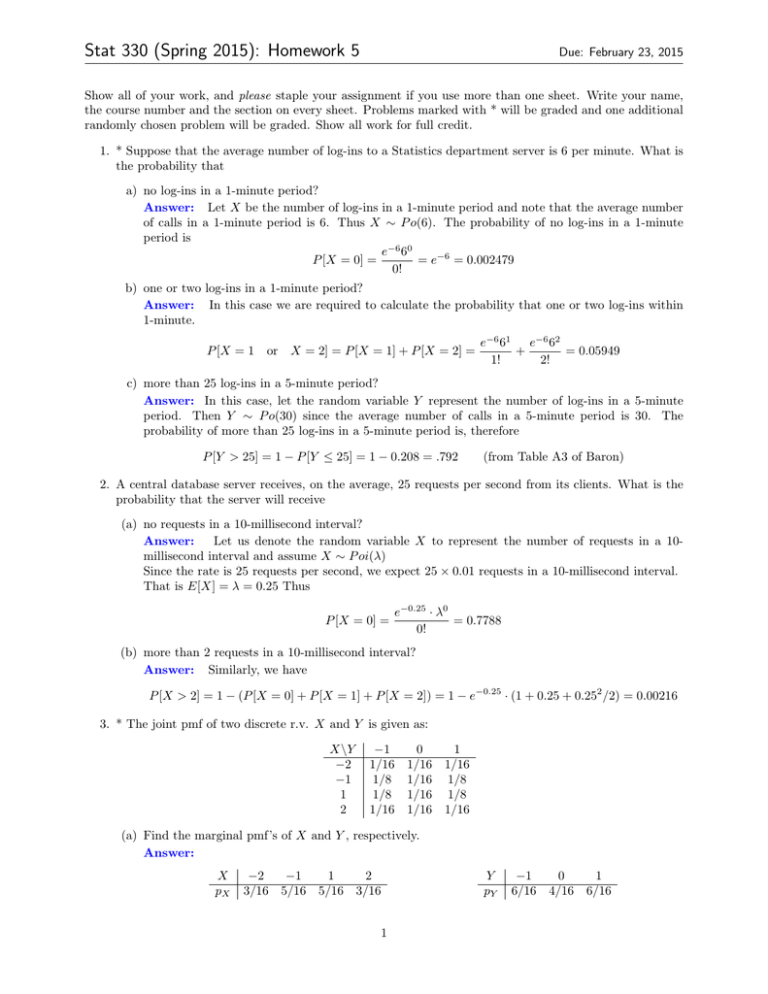

1. * Suppose that the average number of log-ins to a Statistics department server is 6 per minute. What is the probability of

a) no log-ins in a 1-minute period?

Answer: Let X be the number of log-ins in a 1-minute period, and note that the average number of log-ins in a 1-minute period is 6. Thus X ∼ Po(6). The probability of no log-ins in a 1-minute period is

$$P[X = 0] = \frac{e^{-6}\, 6^{0}}{0!} = e^{-6} = 0.002479.$$

b) one or two log-ins in a 1-minute period?

Answer: In this case we need the probability of one or two log-ins within 1 minute:

$$P[X = 1 \text{ or } X = 2] = P[X = 1] + P[X = 2] = \frac{e^{-6}\, 6^{1}}{1!} + \frac{e^{-6}\, 6^{2}}{2!} = 0.05949.$$
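Parts (a) and (b) can be checked numerically. The following is a minimal Python sketch using only the standard library; the helper name `poisson_pmf` is an illustrative choice, not something taken from the course materials.

```python
import math

def poisson_pmf(k: int, lam: float) -> float:
    """P[X = k] for X ~ Po(lam), evaluated directly from the pmf formula."""
    return math.exp(-lam) * lam**k / math.factorial(k)

lam = 6  # average number of log-ins per minute, from the problem statement

p_none = poisson_pmf(0, lam)                               # part (a)
p_one_or_two = poisson_pmf(1, lam) + poisson_pmf(2, lam)   # part (b)

print(f"P[X = 0]          = {p_none:.6f}")
print(f"P[X = 1 or X = 2] = {p_one_or_two:.5f}")
```

Part (b) adds the two pmf values because the events X = 1 and X = 2 are disjoint.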

c) more than 25 log-ins in a 5-minute period?

Answer: In this case, let the random variable Y represent the number of log-ins in a 5-minute period. Then Y ∼ Po(30), since the average number of log-ins in a 5-minute period is 6 × 5 = 30. The probability of more than 25 log-ins in a 5-minute period is therefore

$$P[Y > 25] = 1 - P[Y \le 25] = 1 - 0.208 = 0.792$$

(from Table A3 of Baron).
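The table lookup can be reproduced by summing the Poisson pmf from 0 to 25. This is a sketch under the assumption that a plain cumulative sum is accurate enough here; the function name `poisson_cdf` is hypothetical.

```python
import math

def poisson_cdf(k: int, lam: float) -> float:
    """P[Y <= k] for Y ~ Po(lam), summing pmf terms from 0 to k."""
    term = math.exp(-lam)      # pmf at 0
    total = term
    for i in range(1, k + 1):
        term *= lam / i        # pmf(i) = pmf(i - 1) * lam / i
        total += term
    return total

lam = 6 * 5  # 6 log-ins per minute over a 5-minute period

p_at_most_25 = poisson_cdf(25, lam)
p_more_than_25 = 1 - p_at_most_25

print(f"P[Y <= 25] = {p_at_most_25:.3f}")
print(f"P[Y > 25]  = {p_more_than_25:.3f}")
```

The recurrence avoids computing large powers and factorials separately, which keeps the intermediate values well within floating-point range.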

2. A central database server receives, on the average, 25 requests per second from its clients. What is the

probability that the server will receive

(a) no requests in a 10-millisecond interval?

Answer:

Let the random variable X denote the number of requests in a 10-millisecond interval, and assume X ∼ Po(λ).

Since the rate is 25 requests per second, we expect 25 × 0.01 = 0.25 requests in a 10-millisecond interval.

That is, E[X] = λ = 0.25. Thus

$$P[X = 0] = \frac{e^{-0.25}\, \lambda^{0}}{0!} = e^{-0.25} = 0.7788.$$

(b) more than 2 requests in a 10-millisecond interval?

Answer: Similarly, we have

$$P[X > 2] = 1 - \left(P[X = 0] + P[X = 1] + P[X = 2]\right) = 1 - e^{-0.25}\left(1 + 0.25 + \frac{0.25^{2}}{2}\right) = 0.00216.$$
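The rate conversion and both answers can be verified with a short sketch (standard library only; the variable names are illustrative assumptions):

```python
import math

rate_per_second = 25
interval_seconds = 0.010                   # 10 milliseconds
lam = rate_per_second * interval_seconds   # expected requests per interval

def poisson_pmf(k: int, lam: float) -> float:
    """P[X = k] for X ~ Po(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)

p_zero = poisson_pmf(0, lam)                                      # part (a)
p_more_than_two = 1 - sum(poisson_pmf(k, lam) for k in range(3))  # part (b)

print(f"lambda   = {lam}")
print(f"P[X = 0] = {p_zero:.4f}")
print(f"P[X > 2] = {p_more_than_two:.5f}")
```

The key step is rescaling the rate to the length of the interval before applying the Poisson formula.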

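3. * The joint pmf of two discrete r.v. X and Y is given as (columns are values of X, rows are values of Y):

| Y \ X | −2 | −1 | 1 | 2 |
|---|---|---|---|---|
| −1 | 1/16 | 1/8 | 1/8 | 1/16 |
| 0 | 1/16 | 1/16 | 1/16 | 1/16 |
| 1 | 1/16 | 1/8 | 1/8 | 1/16 |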

(a) Find the marginal pmf’s of X and Y , respectively.

Answer:

| x | −2 | −1 | 1 | 2 |
|---|---|---|---|---|
| pX(x) | 3/16 | 5/16 | 5/16 | 3/16 |

| y | −1 | 0 | 1 |
|---|---|---|---|
| pY(y) | 6/16 | 4/16 | 6/16 |
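The marginals are obtained by summing the joint pmf over the other variable. A minimal sketch with exact fractions follows; the dictionary layout and the names `joint`, `p_x` and `p_y` are assumptions made for illustration.

```python
from fractions import Fraction

x_values = [-2, -1, 1, 2]
# Numerators over 16, keyed by the value of Y and listed for x = -2, -1, 1, 2.
numerators = {-1: [1, 2, 2, 1], 0: [1, 1, 1, 1], 1: [1, 2, 2, 1]}

joint = {
    (x, y): Fraction(n, 16)
    for y, row in numerators.items()
    for x, n in zip(x_values, row)
}
assert sum(joint.values()) == 1  # a valid pmf sums to one

# Marginal of X: sum over y; marginal of Y: sum over x.
p_x = {x: sum(p for (xi, _), p in joint.items() if xi == x) for x in x_values}
p_y = {y: sum(p for (_, yi), p in joint.items() if yi == y) for y in numerators}

for x, p in p_x.items():
    print(f"pX({x}) = {p}")
for y, p in p_y.items():
    print(f"pY({y}) = {p}")
```

`Fraction` reduces automatically, so 6/16 prints as 3/8 and 4/16 as 1/4.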

(b) Find the following probabilities:

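i. P(X ≥ 2)

Answer: P(X ≥ 2) = P(X = 2) = 1/16 + 1/16 + 1/16 = 3/16.

ii. P(X > Y)

Answer: X > Y holds for the pairs (x, y) = (1, −1), (1, 0), (2, −1), (2, 0) and (2, 1), so P(X > Y) = 1/8 + 1/16 + 1/16 + 1/16 + 1/16 = 6/16.

iii. P(Y > 0)

Answer: P(Y > 0) = P(Y = 1) = 1/16 + 1/8 + 1/8 + 1/16 = 6/16.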

(c) Are X and Y independent?

Answer: X and Y are not independent because pX,Y(1, 1) = 1/8 ≠ 5/16 · 6/16 = pX(1) · pY(1).
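Parts (b) and (c) both reduce to sums over cells of the joint pmf, so they can be checked mechanically. The sketch below uses a hypothetical helper `prob` that sums the pmf over all pairs satisfying a condition.

```python
from fractions import Fraction

x_values = [-2, -1, 1, 2]
# Numerators over 16, keyed by the value of Y and listed for x = -2, -1, 1, 2.
numerators = {-1: [1, 2, 2, 1], 0: [1, 1, 1, 1], 1: [1, 2, 2, 1]}
joint = {
    (x, y): Fraction(n, 16)
    for y, row in numerators.items()
    for x, n in zip(x_values, row)
}

def prob(event):
    """Sum the joint pmf over all (x, y) pairs for which event(x, y) is true."""
    return sum(p for (x, y), p in joint.items() if event(x, y))

p_x_ge_2 = prob(lambda x, y: x >= 2)   # part (b) i
p_x_gt_y = prob(lambda x, y: x > y)    # part (b) ii
p_y_gt_0 = prob(lambda x, y: y > 0)    # part (b) iii

def p_x(x0):
    return prob(lambda x, y: x == x0)

def p_y(y0):
    return prob(lambda x, y: y == y0)

# Independence requires p(x, y) == pX(x) * pY(y) in every cell.
independent = all(p == p_x(x) * p_y(y) for (x, y), p in joint.items())

print(p_x_ge_2, p_x_gt_y, p_y_gt_0, independent)
```

A single cell that violates the product rule is enough to rule out independence, which is why the written answer only needs the cell (1, 1).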

(d) Are X and Y uncorrelated?

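Answer: We need to check whether the correlation between X and Y is zero; if it is, the variables are uncorrelated.

The definition of correlation is

$$\mathrm{corr}(X, Y) = \frac{\mathrm{Cov}(X, Y)}{\sqrt{\mathrm{Var}(X)\,\mathrm{Var}(Y)}}.$$

Therefore, we need the variances and the covariance, and for those we need the expected values of X and Y.

The expected values follow from the marginal probability mass functions calculated in part (a):

$$E[X] = (-2)\cdot\tfrac{3}{16} + (-1)\cdot\tfrac{5}{16} + 1\cdot\tfrac{5}{16} + 2\cdot\tfrac{3}{16} = 0,$$

$$E[Y] = (-1)\cdot\tfrac{6}{16} + 0\cdot\tfrac{4}{16} + 1\cdot\tfrac{6}{16} = 0.$$

The variances then are:

$$\mathrm{Var}[X] = (-2 - 0)^{2}\cdot\tfrac{3}{16} + (-1)^{2}\cdot\tfrac{5}{16} + 1^{2}\cdot\tfrac{5}{16} + 2^{2}\cdot\tfrac{3}{16} = \frac{12 + 5 + 5 + 12}{16} = \frac{34}{16},$$

$$\mathrm{Var}[Y] = (-1)^{2}\cdot\tfrac{6}{16} + 0^{2}\cdot\tfrac{4}{16} + 1^{2}\cdot\tfrac{6}{16} = \frac{12}{16}.$$

The covariance between X and Y is defined as E[(X − E[X])(Y − E[Y])]. Since E[X] = E[Y] = 0, it reduces to E[X · Y], summed over the four values of X in turn:

$$\begin{aligned}
\mathrm{Cov}(X, Y) = E[X \cdot Y] &= (-2)(-1)\cdot\tfrac{1}{16} + (-2)\cdot 0\cdot\tfrac{1}{16} + (-2)\cdot 1\cdot\tfrac{1}{16} \\
&\quad + \tfrac{1}{8} + 0 - \tfrac{1}{8} \\
&\quad - \tfrac{1}{8} + 0 + \tfrac{1}{8} \\
&\quad - \tfrac{2}{16} + 0 + \tfrac{2}{16} = 0.
\end{aligned}$$

Since the covariance is 0, the correlation is 0 as well. Therefore, the variables are uncorrelated.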
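The moments in part (d) are all expectations of a function of (X, Y) under the joint pmf, so one helper covers them. This is a sketch; the name `expect` is an illustrative assumption.

```python
import math
from fractions import Fraction

x_values = [-2, -1, 1, 2]
# Numerators over 16, keyed by the value of Y and listed for x = -2, -1, 1, 2.
numerators = {-1: [1, 2, 2, 1], 0: [1, 1, 1, 1], 1: [1, 2, 2, 1]}
joint = {
    (x, y): Fraction(n, 16)
    for y, row in numerators.items()
    for x, n in zip(x_values, row)
}

def expect(g):
    """E[g(X, Y)] under the joint pmf."""
    return sum(g(x, y) * p for (x, y), p in joint.items())

mean_x = expect(lambda x, y: x)
mean_y = expect(lambda x, y: y)
var_x = expect(lambda x, y: (x - mean_x) ** 2)
var_y = expect(lambda x, y: (y - mean_y) ** 2)
cov_xy = expect(lambda x, y: (x - mean_x) * (y - mean_y))
corr_xy = float(cov_xy) / math.sqrt(float(var_x * var_y))

print(mean_x, mean_y, var_x, var_y, cov_xy, corr_xy)
```

Together with part (c), this gives a concrete case of variables that are uncorrelated but not independent.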

(e) Calculate E[W] and Var[W] where W = 2X − 3Y.

Answer:

$$E[W] = E[2X - 3Y] = 2E[X] - 3E[Y] = 0.$$

From Theorem 2.2.5, Var[aX + bY] = a² Var[X] + b² Var[Y] + 2ab Cov(X, Y). But X and Y are uncorrelated, i.e. Cov(X, Y) = 0. This gives

$$\mathrm{Var}[W] = \mathrm{Var}[2X - 3Y] = 4\,\mathrm{Var}[X] + 9\,\mathrm{Var}[Y] = 4\cdot\tfrac{34}{16} + 9\cdot\tfrac{12}{16} = 15.25.$$
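The variance formula can be applied directly to the values found in part (d). A small sketch, where the function name `var_linear` is hypothetical:

```python
from fractions import Fraction

def var_linear(a, b, var_x, var_y, cov_xy):
    """Var[aX + bY] = a^2 Var[X] + b^2 Var[Y] + 2ab Cov(X, Y)."""
    return a**2 * var_x + b**2 * var_y + 2 * a * b * cov_xy

# W = 2X - 3Y, so a = 2 and b = -3; the moments are those from part (d).
var_w = var_linear(2, -3, Fraction(34, 16), Fraction(12, 16), Fraction(0))

print(var_w, float(var_w))
```

Note that b = −3 is squared in the variance term; the sign would only matter through the cross term 2ab Cov(X, Y), which vanishes here.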

4. (Baron's book): 3.24

Answer:

By the Bayes rule,

$$P\{H \mid N\} = \frac{P\{N \mid H\}\, P\{H\}}{P\{N \mid H\}\, P\{H\} + P\{N \mid L\}\, P\{L\}} = \frac{(0.368)(0.2)}{(0.368)(0.2) + (0.905)(0.8)} = 0.0923.$$
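The Bayes rule computation of P{H|N} above can be reproduced with a two-hypothesis helper. This is a sketch only: the function name `posterior` is an assumption, and the likelihoods 0.368 and 0.905 and the prior 0.2 are taken as given from the displayed formula.

```python
def posterior(prior_h: float, like_h: float, like_l: float) -> float:
    """P{H | E} for two hypotheses H and L, with P{L} = 1 - P{H}."""
    numerator = like_h * prior_h
    return numerator / (numerator + like_l * (1 - prior_h))

# Values as they appear in the formula: P{N|H} = 0.368, P{N|L} = 0.905, P{H} = 0.2.
p_h_given_n = posterior(prior_h=0.2, like_h=0.368, like_l=0.905)

print(f"P{{H|N}} = {p_h_given_n:.4f}")
```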

3.30 We have a new event here, T = {no accidents in 3 years} = {X = 0}, where X is the number of accidents during 3 years. This variable X has Poisson distribution with parameter 3 for high-risk drivers and with parameter 0.3 for the low-risk group. From Table A3,

$$P\{T \mid H\} = 0.050 \quad \text{and} \quad P\{T \mid L\} = 0.741.$$
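Because T is the event that a Poisson variable equals 0, each table value above is simply e^{−λ}. A quick check, as a sketch using only the standard library (the variable names are illustrative):

```python
import math

# P{X = 0} for a Poisson variable is exp(-lambda).
p_t_given_h = math.exp(-3)     # high-risk drivers, parameter 3
p_t_given_l = math.exp(-0.3)   # low-risk drivers, parameter 0.3

print(f"P{{T|H}} = {p_t_given_h:.3f}")
print(f"P{{T|L}} = {p_t_given_l:.3f}")
```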

Then, by the Bayes rule,

$$P\{H \mid T\} = \frac{P\{T \mid H\}\, P\{H\}}{P\{T \mid H\}\, P\{H\} + P\{T \mid L\}\, P\{L\}} = \frac{(0.050)(0.2)}{(0.050)(0.2) + (0.741)(0.8)} = 0.0166.$$

Of course, this conditional probability must be considerably lower than in Exercise 3.29.

5. (Baron's book): 3.31

Answer:

3.31

(a) Let X be the number of components that pass the inspection. It is the number of successes in 20 Bernoulli trials, thus it has Binomial distribution with n = 20 and p = 0.8. From Table A2,

$$P\{X \ge 18\} = 1 - F_X(17) = 1 - 0.7939 = 0.2061.$$

(b) Let Y be the number of components that must be inspected until a component that passes inspection is found. It is the number of trials needed to see the first success, thus it has Geometric distribution with p = 0.8.

$$E(Y) = \frac{1}{p} = 1.25 \text{ components}.$$
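Both parts of 3.31 can be checked without the table. A minimal sketch, assuming only the standard library; `binomial_pmf` is a hypothetical helper name.

```python
import math

def binomial_pmf(k: int, n: int, p: float) -> float:
    """P{X = k} for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

n, p = 20, 0.8

# Part (a): upper tail, summed directly instead of using 1 - F(17).
p_at_least_18 = sum(binomial_pmf(k, n, p) for k in range(18, n + 1))

# Part (b): mean of a Geometric(p) count of trials up to the first success.
expected_trials = 1 / p

print(f"P{{X >= 18}} = {p_at_least_18:.4f}")
print(f"E(Y)       = {expected_trials}")
```

Summing the three tail terms directly gives the same value as the complement 1 − F(17) used with the table.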

3.32 Let X be the number of crashed computers. This is the number of “successes” (crashed computers) out of 4,000 “trials” (computers), with the probability of success 1/800. Thus, it has Binomial distribution with parameters n = 4,000 (large) and p = 1/800 (small), which is approximately Poisson with

$$\lambda = np = 5.$$

Using Table A3 with parameter 5,

(a) P{X < 10} = F(9) = 0.968

(b) P{X = 10} = F(10) − F(9) = 0.986 − 0.968 = 0.018
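To see how good the Poisson approximation is here, the exact Binomial values can be computed alongside it. This is a sketch for comparison only, not part of the textbook solution; the helper names are assumptions.

```python
import math

n, p = 4000, 1 / 800
lam = n * p  # Poisson parameter for the approximation

def poisson_pmf(k: int, lam: float) -> float:
    """P{X = k} for X ~ Po(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def binomial_pmf(k: int, n: int, p: float) -> float:
    """P{X = k} for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

poisson_below_10 = sum(poisson_pmf(k, lam) for k in range(10))     # part (a)
poisson_equal_10 = poisson_pmf(10, lam)                            # part (b)
binomial_below_10 = sum(binomial_pmf(k, n, p) for k in range(10))
binomial_equal_10 = binomial_pmf(10, n, p)

print(f"P{{X < 10}}:  Poisson {poisson_below_10:.4f}, Binomial {binomial_below_10:.4f}")
print(f"P{{X = 10}}: Poisson {poisson_equal_10:.4f}, Binomial {binomial_equal_10:.4f}")
```

With n large and p small, the two sets of values should agree to about three decimal places, which is the precision of the table.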
