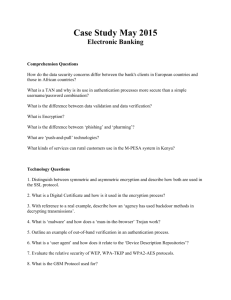

No. 325

Encryption technology encodes computer files so that
