Runge-Kutta Methods (fun for everyone) Dave Goulet



goulet@rose-hulman.edu

Mathematics, RHIT

May 15, 2013


Outline

Introduction

Calculus and Differential Equations

Trees and Combinatorics

Linear Algebra, Complex Variables, & Geometry

Continuous Optimization

Algorithms

Beyond Runge-Kutta


Runge-Kutta and Runge-Kutta-like Methods

High Quality Solvers:

- Matlab: ode45, ode23, ode23s∗
- Fortran: DOPRI, ROS∗, RODAS∗, many others
- Mathematica (NDSolve): ExplicitRungeKutta, ImplicitRungeKutta, SymplecticPartitionedRungeKutta
- Maple (dsolve, type=numeric): rkf45, ck45, dverk78, rosenbrock∗

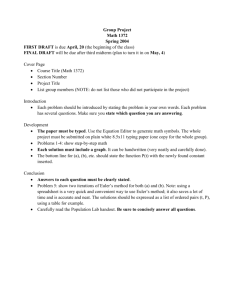

Pedagogical Solvers:

- Maple’s classical uses six low quality textbook RK methods.

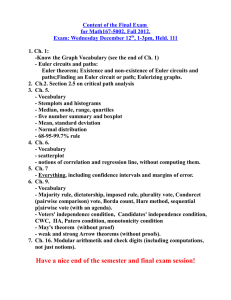

What’s wrong with ode45 or dsolve?

Consider the problem $y' = -50(y - 1)$ with $y(0) = 0$.

[Figure: $y$ versus $t$ for $0 \le t \le 20$, comparing the exact solution with the ode45 solution.]

ode45 is adaptive. What values for the time steps did it choose?

Explicit RK Methods are Not Suitable for All Problems

ode45 used 325 steps and 2077 function evaluations.

[Figure: three stacked panels versus $t$ for $0 \le t \le 20$: the error, the solution $y$, and the step size $h$.]

Multiply work by $10^6$ or $10^9$ for many realistic problems.

Calculus & Differential Equations

Euler’s Method: Approximation of Derivatives

Begin with a first order ODE.

$$y'(x) = f(x, y(x))$$

Approximate slopes in a simple way.

$$y'(x_n) \approx \frac{y(x_{n+1}) - y(x_n)}{x_{n+1} - x_n} \approx \frac{y_{n+1} - y_n}{x_{n+1} - x_n} = \frac{y_{n+1} - y_n}{h}$$

Replace the ODE with a difference equation.

$$\frac{y_{n+1} - y_n}{h} = f(x_n, y_n)$$

The Forward Euler Method (1768):

$$y_{n+1} = y_n + h f(x_n, y_n), \qquad x_{n+1} = x_n + h$$
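The update rule above can be sketched in a few lines of Python. This is only an illustration: the function name `forward_euler` and the test problem $y' = -y$, $y(0) = 1$ are assumptions made here, not part of the talk.

```python
import math

def forward_euler(f, x0, y0, h, n_steps):
    """Integrate y' = f(x, y) with n_steps Forward Euler steps of size h."""
    xs, ys = [x0], [y0]
    for _ in range(n_steps):
        # y_{n+1} = y_n + h f(x_n, y_n),  x_{n+1} = x_n + h
        ys.append(ys[-1] + h * f(xs[-1], ys[-1]))
        xs.append(xs[-1] + h)
    return xs, ys

# Illustrative test problem (an assumption): y' = -y, y(0) = 1, exact solution e^{-x}.
xs, ys = forward_euler(lambda x, y: -y, 0.0, 1.0, 0.01, 100)
euler_error = abs(ys[-1] - math.exp(-1.0))
print(xs[-1], ys[-1], euler_error)
```

With 100 steps of size 0.01 the computed value at $x = 1$ should agree with $e^{-1}$ to roughly two or three digits, which is what a first order method would be expected to deliver.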


Euler’s Method: Approximation of Integrals (skip)

Begin with a first order ODE, integrating both sides.

$$y'(x) = f(x, y(x)) \implies y(x_n + h) - y(x_n) = \int_{x_n}^{x_n + h} f(x, y(x))\,dx$$

Approximate the integral in a simple way.

$$\int_{x_n}^{x_n + h} f(x, y(x))\,dx \approx h f(x_n, y(x_n))$$

Replace the ODE with a difference equation.

$$y_{n+1} - y_n = h f(x_n, y_n)$$

The Forward Euler Method:

$$y_{n+1} = y_n + h f(x_n, y_n), \qquad x_{n+1} = x_n + h$$

How Good is Forward Euler?

- Accuracy: If FWE is applied to the exact solution, $y(x_n)$, how large will the error, $|y_{n+1} - y(x_{n+1})|$, be?
- Stability: Does FWE amplify small errors in an undesirable way? Do decaying solutions decay as they should?
- Quality: As FWE iterates, does it provide information on and control over accuracy and stability? For a given accuracy, how computationally efficient is FWE?

Accuracy of Forward Euler: Taylor Polynomials

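Apply FWE to an autonomous ODE, $y' = f(y)$, starting from the exact value $y_n = y(x_n)$. Compute the error.

$$
\begin{aligned}
y_{n+1} - y(x_{n+1}) &= y_n + h f(y_n) - \left( y(x_n) + h y'(x_n) + \frac{h^2}{2} y''(c) \right) \\
&= h\left(f(y_n) - y'(x_n)\right) - \frac{h^2}{2} y''(c) \\
&= -\frac{h^2}{2} y''(c) \\
&= O(h^2)
\end{aligned}
$$

Forward Euler is a first order method.

A method is $p$th order if its truncation error is $O(h^{p+1})$.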
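The order can also be checked numerically. The sketch below is an illustration under assumed choices (test problem $y' = -y$, $y(0) = 1$, and the helper names are made up here): halving $h$ should divide the one-step error by about 4 and the accumulated error at a fixed time by about 2.

```python
import math

def one_step_error(h):
    """Error of a single Forward Euler step started on the exact solution at x = 0."""
    return abs((1.0 - h) - math.exp(-h))

def euler_final_error(n_steps):
    """Accumulated error at x = 1 for y' = -y, y(0) = 1, using n_steps Euler steps."""
    h = 1.0 / n_steps
    y = 1.0
    for _ in range(n_steps):
        y += h * (-y)
    return abs(y - math.exp(-1.0))

# Ratios of errors when the step size is halved.
local_ratio = one_step_error(0.02) / one_step_error(0.01)     # expected near 2**2
global_ratio = euler_final_error(50) / euler_final_error(100)  # expected near 2**1
print(local_ratio, global_ratio)
```

The one-step error behaves like $h^{p+1}$ and the error after many steps like $h^p$, here with $p = 1$.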


Stability of Forward Euler: The Dahlquist Test Problem

The ODE $y' = \lambda y$ has exponentially decaying solutions when $\Re(\lambda) < 0$.

$$y(x + h) = e^{h\lambda} y(x) = e^{z} y(x)$$

Apply FWE to the ODE.

$$y_{n+1} = y_n + h\lambda y_n = (1 + h\lambda) y_n \equiv (1 + z) y_n$$

The numerical solution decays iff $|1 + z| < 1$. A circle is not a half plane!
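A small experiment makes the decay condition concrete. The sketch below is illustrative only (function names and sample values of $z$ are chosen here): it iterates $y_{n+1} = (1 + z) y_n$ and compares the outcome with the test $|1 + z| < 1$.

```python
def forward_euler_test_problem(z, n_steps, y0=1.0):
    """Apply Forward Euler to y' = lambda*y; each step multiplies y by (1 + z)."""
    ys = [y0]
    for _ in range(n_steps):
        ys.append((1 + z) * ys[-1])
    return ys

def fwe_is_stable(z):
    """True when z = h*lambda lies inside the disc |1 + z| < 1."""
    return abs(1 + z) < 1

# Sample values of z (an arbitrary selection, including one complex value).
for z in (-0.5, -1.5, -2.5, complex(-1.0, 0.5)):
    final = abs(forward_euler_test_problem(z, 20)[-1])
    print(z, fwe_is_stable(z), final)
```

For $z = -2.5$ the exact solution decays, yet the iterates grow by a factor of 1.5 per step in magnitude.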


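Stability Region for Forward Euler

[Figure: stability regions in the complex $z$-plane. Exact: the left half plane. FWE: the disc $|1 + z| < 1$, centered at $-1$ and meeting the real axis at $-2$ and $0$.]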

Numerical Solutions Using Forward Euler

[Figure: solutions of $dy/dt = \lambda y$ for $0 \le t \le 1$: the exact solution and Forward Euler with $z = -2.5$, $z = -1.5$, and $z = -0.5$.]

Quality of Forward Euler

- Without the exact solution, how can you gauge accuracy?
- Without the exact solution, how can you detect instability?
- How can you control step size to achieve accuracy and stability?
- Is your implementation efficient?

The Butcher Table: 2-stage examples

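Idea: estimate $\Delta y$ using slope estimates in multiple locations.

Heun’s Method (1900):

$$
\begin{aligned}
k_1 &= h f(x_n, y_n) \\
k_2 &= h f(x_n + h, y_n + k_1) \\
y_{n+1} &= y_n + \tfrac{1}{2} k_1 + \tfrac{1}{2} k_2
\end{aligned}
$$

Generic 2-Stage Method:

$$
\begin{aligned}
k_1 &= h f(x_n + c_1 h, y_n + a_{11} k_1 + a_{12} k_2) \\
k_2 &= h f(x_n + c_2 h, y_n + a_{21} k_1 + a_{22} k_2) \\
y_{n+1} &= y_n + b_1 k_1 + b_2 k_2
\end{aligned}
$$

Butcher tables organize the coefficients (Heun on the left, the generic method on the right).

$$
\begin{array}{c|cc}
0 & 0 & 0 \\
1 & 1 & 0 \\ \hline
 & \tfrac{1}{2} & \tfrac{1}{2}
\end{array}
\qquad\qquad
\begin{array}{c|cc}
c_1 & a_{11} & a_{12} \\
c_2 & a_{21} & a_{22} \\ \hline
 & b_1 & b_2
\end{array}
$$

Where $c_i = a_{i1} + a_{i2}$, the $i$th row sum of $A$.

$x_n + c_i h$ is the location where the $i$th slope estimate is evaluated.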

The Butcher Table: General Case

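An $s$-stage method.

$$
\begin{array}{c|c}
c & A \\ \hline
 & b^T
\end{array}
\quad = \quad
\begin{array}{c|cccc}
c_1 & a_{11} & a_{12} & \cdots & a_{1s} \\
c_2 & a_{21} & a_{22} & \cdots & a_{2s} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
c_s & a_{s1} & a_{s2} & \cdots & a_{ss} \\ \hline
 & b_1 & b_2 & \cdots & b_s
\end{array}
$$

Where $c_i = \sum_j a_{ij} = (A\mathbf{1})_i$.

$$k = h f(x_n + hc,\; y_n \mathbf{1} + Ak)$$

$$y_{n+1} = y_n + b^T k$$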
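For explicit methods ($A$ strictly lower triangular) the tableau translates directly into a loop over stages. The following is a minimal sketch for a scalar ODE; the function name `explicit_rk_step` and the list-based layout of `A`, `b`, `c` are choices made here for illustration.

```python
def explicit_rk_step(f, x, y, h, A, b, c):
    """One step of an explicit s-stage RK method for a scalar ODE y' = f(x, y).

    A is assumed strictly lower triangular, so k_i uses only k_1 .. k_{i-1}.
    """
    s = len(b)
    k = [0.0] * s
    for i in range(s):
        y_stage = y + sum(A[i][j] * k[j] for j in range(i))
        k[i] = h * f(x + c[i] * h, y_stage)
    return y + sum(b[i] * k[i] for i in range(s))

# Heun's method written as a Butcher table.
HEUN_A = [[0.0, 0.0], [1.0, 0.0]]
HEUN_B = [0.5, 0.5]
HEUN_C = [0.0, 1.0]

# One step of size h = 0.1 on the sample problem y' = -y, y(0) = 1.
y1 = explicit_rk_step(lambda x, y: -y, 0.0, 1.0, 0.1, HEUN_A, HEUN_B, HEUN_C)
print(y1)
```

For this linear sample problem one Heun step reproduces $1 - h + h^2/2$, the second order Taylor polynomial of $e^{-h}$. Implicit methods would need a solver for the coupled stage equations, which this sketch does not attempt.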


Accuracy: Computing Truncation Errors with Calculus

Apply an RK method to $y' = f(y)$. Compute the truncation error.

$$y_{n+1} - y(x_n + h) = y_n + \sum_j b_j k_j - \sum_j \frac{h^j}{j!} y^{(j)}(x_n)$$

Derivatives can be computed successively:

$$
\begin{aligned}
y' &= f(y) \\
y'' &= f'(y) y' = f'f \\
y''' &= f''ff + f'f'f \\
y^{(4)} &= f'''fff + f''f'ff + f''ff'f + f''ff'f + f'f''ff + f'f'f'f
\end{aligned}
$$

Can be automated, but still tedious.

Expansion of $k_j$ in Taylor series is hard.

Accuracy: Tedious Taylor Series Expansions. (skip)

Example: 2-stage explicit method (easiest case).

$$
\begin{aligned}
y_{n+1} &= y_n + b_1 k_1 + b_2 k_2 \\
&= y_n + b_1 h f + b_2 h f(y + a_{21} h f) \\
&= y_n + h(b_1 + b_2) f + h^2 b_2 a_{21} f'f + \frac{h^3}{2} b_2 a_{21}^2 f''f^2 + \dots
\end{aligned}
$$

$$y(x + h) = y_n + h f + \frac{h^2}{2} f'f + \frac{h^3}{6}\left(f''f^2 + (f')^2 f\right) + \dots$$

Order 1 requires $b_1 + b_2 = 1$. Order 2 requires $b_2 a_{21} = 1/2$. Order 3 isn’t possible.

$$y_{n+1} - y(x + h) = \frac{h^3}{12}\left((3a_{21} - 2) f''f^2 - 2(f')^2 f\right) + \dots$$

Deriving order conditions this way is hard.

Order 14 methods with 35 stages have been constructed.

Graph Theory & Combinatorics

Order Conditions: Rooted Trees

$$
\begin{aligned}
y' &= f \\
y'' &= f'f \\
y''' &= f''ff + f'f'f \\
y^{(4)} &= f'''fff + 3f''ff'f + f'f''ff + f'f'f'f
\end{aligned}
$$

Butcher (1963) utilized correspondences between rooted trees and elementary differentials.

[Figure: the eight rooted trees with up to four vertices, labeled with their elementary differentials $f$, $f'f$, $f'f'f$, $f''ff$, $f'f'f'f$, $f'f''ff$, $f''ff'f$, and $f'''fff$.]

Butcher’s idea: Use combinatorial techniques to compute tree data and Taylor series expansions. Prove equivalences.

Order Conditions: Butcher’s Theorem

The tree densities, $\gamma$, and elementary weights, $\Phi$, satisfy $\Phi(t) = \dfrac{1}{\gamma(t)}$.

| Elementary differential | $\gamma(t)$ | $\Phi(t)$ |
|---|---|---|
| $f$ | 1 | $\sum b_i$ |
| $f'f$ | 2 | $\sum b_i c_i$ |
| $f'f'f$ | 6 | $\sum b_i a_{ij} c_j$ |
| $f''ff$ | 3 | $\sum b_i c_i^2$ |
| $f'f'f'f$ | 24 | $\sum b_i a_{ij} a_{jk} c_k$ |
| $f'f''ff$ | 12 | $\sum b_i a_{ij} c_j^2$ |
| $f''ff'f$ | 8 | $\sum b_i a_{ij} c_i c_j$ |
| $f'''fff$ | 4 | $\sum b_i c_i^3$ |

- Conditions easily derivable by hand or by CAS (Sofroniou 1994).
- Solving multivariable polynomial equations is hard.
- Solving algorithms exist, e.g., using a Gröbner basis (Sofroniou 2004).
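Checking the conditions for a given tableau is much easier than solving them. As a hedged illustration, the sketch below evaluates the eight elementary weights for the classical RK4 tableau (chosen here as a test case) in exact rational arithmetic and compares each with $1/\gamma(t)$. The helper names are made up for this example.

```python
from fractions import Fraction as F

# Classical RK4 tableau, used here as a test case.
A = [[F(0), F(0), F(0), F(0)],
     [F(1, 2), F(0), F(0), F(0)],
     [F(0), F(1, 2), F(0), F(0)],
     [F(0), F(0), F(1), F(0)]]
b = [F(1, 6), F(1, 3), F(1, 3), F(1, 6)]
s = len(b)
c = [sum(row) for row in A]  # c_i is the i-th row sum of A

def matvec(M, v):
    """Matrix-vector product M v."""
    return [sum(M[i][j] * v[j] for j in range(s)) for i in range(s)]

def weight(v):
    """Return sum_i b_i v_i."""
    return sum(bi * vi for bi, vi in zip(b, v))

ones = [F(1)] * s
Ac = matvec(A, c)
c2 = [ci ** 2 for ci in c]

# (elementary weight Phi(t), density gamma(t)) for the eight trees of order <= 4
conditions = {
    "f":       (weight(ones), 1),
    "f'f":     (weight(c), 2),
    "f'f'f":   (weight(Ac), 6),
    "f''ff":   (weight(c2), 3),
    "f'f'f'f": (weight(matvec(A, Ac)), 24),
    "f'f''ff": (weight(matvec(A, c2)), 12),
    "f''ff'f": (weight([ci * x for ci, x in zip(c, Ac)]), 8),
    "f'''fff": (weight([ci ** 3 for ci in c]), 4),
}
satisfied = {name: phi == F(1, gamma) for name, (phi, gamma) in conditions.items()}
print(satisfied)
```

Using `Fraction` avoids rounding, so each comparison is an exact test of the corresponding order condition.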


Example: An 8th Order Condition. (skip)

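[Figure: a rooted tree $t$ with 8 vertices, each labeled with the number of vertices in its subtree: 8 at the root, then 4, 3, 2, and 1 at each of the four leaves.]

$$\gamma(t) = 8 \cdot 4 \cdot 3 \cdot 2 \cdot 1 \cdot 1 \cdot 1 \cdot 1 = 192$$

$$\Phi(t) = \sum_{i,j,k,l} b_i \left(a_{ij} c_j^2\right)\left(a_{ik}\left(c_k\left(a_{kl} c_l\right)\right)\right)$$

$$\sum_{i,j,k,l} b_i a_{ij} a_{ik} a_{kl} c_j^2 c_k c_l = \frac{1}{192}$$

Only 114 more 8th order conditions to go!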

Example: 3-stage 3rd Order Explicit Method

General case and an example.

$$
\begin{array}{c|ccc}
0 & 0 & 0 & 0 \\
c_2 & a_{21} & 0 & 0 \\
c_3 & a_{31} & a_{32} & 0 \\ \hline
 & b_1 & b_2 & b_3
\end{array}
$$

[Table: Butcher tableau of the example method.]

- Order 0: $c_2 = a_{21}$, $c_3 = a_{31} + a_{32}$
- Order 1: $b_1 + b_2 + b_3 = 1$
- Order 2: $b_2 c_2 + b_3 c_3 = 1/2$
- Order 3: $b_2 c_2^2 + b_3 c_3^2 = 1/3$, $b_3(a_{31} c_1 + a_{32} c_2) = 1/6$

4 equations in 6 unknowns: use free variables to improve quality.

The example satisfies several important additional criteria.

How much accuracy is possible? (skip)

Butcher’s approach reveals limitations on s-stage order p methods.

Figure summarizes many important theorems.

[Figure: two panels plotted against the number of stages (0 to 10) for explicit (exp.) and implicit (imp.) methods: maximum order, and number of free parameters.]

Linear Algebra, Complex Variables, & Geometry

Stability Functions

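RK methods are of the form

$$k = h f(x_n + hc,\; y_n \mathbf{1} + Ak), \qquad y_{n+1} = y_n + b^T k$$

Apply to the test problem, $y' = \lambda y$ with $\Re(\lambda) < 0$.

$$k = h\lambda\,(y_n \mathbf{1} + Ak), \qquad y_{n+1} = y_n + b^T k$$

Define $z = h\lambda$. Solving the stage equations gives $k = z(I - zA)^{-1}\mathbf{1}\,y_n$, so

$$
\begin{aligned}
y_{n+1} &= \left(1 + z b^T (I - zA)^{-1} \mathbf{1}\right) y_n \\
&= \Phi(z)\, y_n
\end{aligned}
$$

$\Phi(z)$ is the Stability Function.

Exact update would be $y_{n+1} = e^{z} y_n$. How does $\Phi(z)$ compare to $e^{z}$?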

Stability Functions: Padé Approximations

The stability function is rational (proof by Cramer’s Rule).

$$\Phi(z) = 1 + z b^T (I - zA)^{-1} \mathbf{1} = \frac{\det(I - zA + z\mathbf{1}b^T)}{\det(I - zA)}$$

Idea: Choose $\Phi(z)$ to be a Padé approximation of $e^z$:

| Approx | Method | Order | Stages | Type |
|---|---|---|---|---|
| $1 + z$ | Forward Euler | 1 | 1 | explicit |
| $1 + z + z^2/2$ | Heun | 2 | 2 | explicit |
| $\dfrac{1}{1 - z}$ | Backward Euler | 1 | 1 | implicit |
| $\dfrac{2 + z}{2 - z}$ | Midpoint Rule | 2 | 1 | implicit |
| $\dfrac{6 + 2z}{6 - 4z + z^2}$ | Radau IIa (1969) | 3 | 2 | implicit |
| $\dfrac{12 + 6z + z^2}{12 - 6z + z^2}$ | Gauss | 4 | 2 | implicit |
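The table can be explored numerically. The sketch below is an illustration with arbitrarily chosen sample points: it evaluates each approximation near the origin, where accuracy matters, and far into the left half plane, where decay matters. The dictionary name `PADE` and the helper are assumptions of this example.

```python
import cmath

# Stability functions from the table, as functions of z = h*lambda.
PADE = {
    "Forward Euler":  lambda z: 1 + z,
    "Heun":           lambda z: 1 + z + z**2 / 2,
    "Backward Euler": lambda z: 1 / (1 - z),
    "Midpoint Rule":  lambda z: (2 + z) / (2 - z),
    "Radau IIa":      lambda z: (6 + 2*z) / (6 - 4*z + z**2),
    "Gauss":          lambda z: (12 + 6*z + z**2) / (12 - 6*z + z**2),
}

def approximation_error(name, z):
    """|Phi(z) - e^z| for the named method."""
    return abs(PADE[name](z) - cmath.exp(z))

small_z = -0.1   # sample point near the origin (arbitrary choice)
large_z = -20.0  # sample point far into the left half plane (arbitrary choice)
for name in PADE:
    print(name, approximation_error(name, small_z), abs(PADE[name](large_z)))
```

Near the origin the error shrinks as the order grows. At $z = -20$ the two polynomial approximations are large in magnitude, while the four rational ones stay below 1.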


Padé Approximations

[Figure: the six approximations (F. Euler, Heun, B. Euler, Midpoint, Radau IIa, Gauss) plotted for $-20 \le z \le 0$, with the vertical axis running from $-1$ to $1$.]

Stability Functions

When applied to $y' = \lambda y$, how alike are the exact and numerical solutions?

$$y(x_n + h) = e^{z} y(x_n), \qquad y_{n+1} = \Phi(z) y_n$$

Geometric Method 1: Stability Regions

- $S = \{z \in \mathbb{C} : |\Phi(z)| < 1\}$ is the Stability Region, i.e., the region where the numerical solution will decay.
- Compare this to the left half plane, $|e^{z}| < 1$ for $\Re(z) < 0$.

Geometric Method 2: Order Stars

- Ideally, $|e^{z}| \approx |\Phi(z)|$, so that numerical solutions and exact solutions decay at similar rates.
- $\Phi(z)e^{-z}$ is the Order Star. Study the set $\{z \in \mathbb{C} : |\Phi(z)e^{-z}| > 1\}$.
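Membership in a stability region is a one-line test once $\Phi$ is known. The sketch below is illustrative: it uses the stability polynomial of classical RK4 (the degree-4 Taylor polynomial of $e^z$) as a sample $\Phi$, and the scanning helper with its step size is an assumption made here rather than a standard routine.

```python
def rk4_stability_function(z):
    """Phi(z) for classical RK4: the degree-4 Taylor polynomial of e^z."""
    return 1 + z + z**2 / 2 + z**3 / 6 + z**4 / 24

def in_stability_region(phi, z):
    """True when |Phi(z)| < 1, i.e. the numerical solution decays."""
    return abs(phi(z)) < 1

def real_axis_stability_limit(phi, step=1e-3, z_min=-50.0):
    """Scan left from the origin; return the last real z found inside S."""
    z = -step
    while z > z_min and in_stability_region(phi, z):
        z -= step
    return z + step

limit = real_axis_stability_limit(rk4_stability_function)
print(limit)
```

The scan stops near $z \approx -2.79$, so for a real negative $\lambda$ this method needs roughly $h < 2.79/|\lambda|$ for the numerical solution to decay.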


Stability Regions and Order Stars: An Example

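Consider RK4 (Kutta, 1901), $\Phi = 1 + z + \tfrac{1}{2} z^2 + \tfrac{1}{6} z^3 + \tfrac{1}{24} z^4$.

$$
\begin{aligned}
k_1 &= h f(x_n, y_n) \\
k_2 &= h f(x_n + h/2, y_n + k_1/2) \\
k_3 &= h f(x_n + h/2, y_n + k_2/2) \\
k_4 &= h f(x_n + h, y_n + k_3) \\
y_{n+1} &= y_n + (k_1 + 2k_2 + 2k_3 + k_4)/6
\end{aligned}
$$

$$
\begin{array}{c|cccc}
0 & 0 & 0 & 0 & 0 \\
\tfrac{1}{2} & \tfrac{1}{2} & 0 & 0 & 0 \\
\tfrac{1}{2} & 0 & \tfrac{1}{2} & 0 & 0 \\
1 & 0 & 0 & 1 & 0 \\ \hline
 & \tfrac{1}{6} & \tfrac{1}{3} & \tfrac{1}{3} & \tfrac{1}{6}
\end{array}
$$

[Figure: two panels in the complex $z$-plane, both axes from $-6$ to $6$: the stability region and the order star of RK4.]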

Geometry of Padé Approximations: Stability Regions

[Figure: stability regions in the complex $z$-plane, one panel each for F. Euler, B. Euler, Midpoint, Heun, Radau, and Gauss; axes run from $-6$ to $6$.]

Geometry of Padé Approximations: Order Stars

[Figure: order stars in the complex $z$-plane, one panel each for F. Euler, B. Euler, Midpoint, Heun, Radau, and Gauss; axes run from $-6$ to $6$.]

The Geometric Perspective

Accuracy and stability ←→ geometry of approximations to $e^z$.

Theorem (Wanner et al., 1978)

- The number of lobes in the order star meeting at the origin is equal to the order of the method.
- If the poles of $\Phi$ are in the right half plane and if the order star doesn’t intersect the imaginary axis, then the stability region contains the entire left half plane.

[Figure: two panels in the complex $z$-plane, axes from $-6$ to $6$, for Dormand & Prince, 1980 (Matlab’s ode45).]

Accuracy and Stability are not Independent

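Define $B = \operatorname{diag}(b)$ and $C = \operatorname{diag}(c)$ with

$$
A = \begin{pmatrix} a_{11} & \cdots & a_{1s} \\ \vdots & & \vdots \\ a_{s1} & \cdots & a_{ss} \end{pmatrix},
\quad
b = \begin{pmatrix} b_1 \\ \vdots \\ b_s \end{pmatrix},
\quad
\mathbf{1} = \begin{pmatrix} 1 \\ \vdots \\ 1 \end{pmatrix},
\quad
c = \begin{pmatrix} c_1 \\ \vdots \\ c_s \end{pmatrix} = A\mathbf{1}
$$

The order conditions up to 4th:

$$
\begin{aligned}
b^T \mathbf{1} &= 1 & b^T A \mathbf{1} &= 1/2 \\
b^T C^2 \mathbf{1} &= 1/3 & b^T A^2 \mathbf{1} &= 1/6 \\
b^T C^3 \mathbf{1} &= 1/4 & b^T C A^2 \mathbf{1} &= 1/8 \\
b^T A C^2 \mathbf{1} &= 1/12 & b^T A^3 \mathbf{1} &= 1/24
\end{aligned}
$$

Theorem

$$\Phi = 1 + z\, b^T \mathbf{1} + z^2\, b^T A \mathbf{1} + z^3\, b^T A^2 \mathbf{1} + z^4\, b^T A^3 \mathbf{1} + \dots$$

Order conditions cause $\Phi(z)$ to resemble $e^z$, for small $z$.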
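The series in the theorem can be evaluated directly from a tableau. The sketch below is an illustration restricted to explicit methods, where $A^s = 0$ and the series terminates. It uses exact fractions and takes classical RK4 as an assumed test case; the function name is made up here.

```python
from fractions import Fraction as F

def stability_polynomial(A, b):
    """Coefficients [1, b^T 1, b^T A 1, b^T A^2 1, ...] of Phi(z) in powers of z.

    For an explicit s-stage method A^s = 0, so the series stops after z^s.
    """
    s = len(b)
    v = [F(1)] * s          # holds A^m 1, starting with m = 0
    coeffs = [F(1)]
    for _ in range(s):
        coeffs.append(sum(bi * vi for bi, vi in zip(b, v)))
        v = [sum(A[i][j] * v[j] for j in range(s)) for i in range(s)]
    return coeffs

# Classical RK4 as a test case.
A = [[F(0), F(0), F(0), F(0)],
     [F(1, 2), F(0), F(0), F(0)],
     [F(0), F(1, 2), F(0), F(0)],
     [F(0), F(0), F(1), F(0)]]
b = [F(1, 6), F(1, 3), F(1, 3), F(1, 6)]
rk4_coeffs = stability_polynomial(A, b)
print(rk4_coeffs)
```

For RK4 the coefficients come out as $1, 1, 1/2, 1/6, 1/24$, matching the Taylor coefficients of $e^z$ through degree 4, as the four conditions involving only powers of $A$ require.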


Implicit Methods can be Very Stable. (skip)

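Theorem

If $A$ is singular, then

$$\lim_{\Re(z) \to -\infty} |\Phi(z)| \neq 0$$

If $A$ is nonsingular, then the Laurent series expansion of $\Phi(z)$ is

$$\Phi(z) = 1 - b^T A^{-1} \mathbf{1} - \sum_{n=1}^{\infty} \frac{1}{z^n}\, b^T (A^{-1})^{n+1} \mathbf{1}$$

and

$$\lim_{\Re(z) \to -\infty} |\Phi(z)| = 0 \quad \text{if and only if} \quad b^T A^{-1} \mathbf{1} = 1$$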

Explicit Methods Lack Stability. (skip)

Theorem

When the method is explicit, $A$ is singular, and the stability function is a polynomial.

$$\Phi(z) = \frac{\det(I - zA + z\mathbf{1}b^T)}{\det(I - zA)} = \det(I - zA + z\mathbf{1}b^T)$$

so

$$\lim_{\Re(z) \to -\infty} |\Phi(z)| = \infty$$

Instability is guaranteed.

How far can the stability boundary be extended?

Continuous Optimization


Optimizing the Stability Region: Adding Stages. (skip)

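[Figure: stability regions in the complex $z$-plane for explicit methods with different numbers of stages $s$ and orders $p$. Labels: $s = p$; $(s,p) = (2,2), (2,3), (3,3)$; $s = p + 1$. The real axis extends to $-8$ in the upper panels and to $-32$ in the lower panel.]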

Optimizing Stability Regions. (skip)

- Fehlberg, 1970: 6 stages, 5th order, explicit (Maple’s default)
- Bogacki & Shampine, 1996: 8 stages, 5th order, explicit

[Figure: one panel per method in the complex $z$-plane, axes from $-6$ to $6$.]

Optimizing the CFL Condition. (skip)

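Nonlinear Optimization Problem

Find an explicit RK method with maximal positive CFL number given:

1. order = $p$ and stages = $s$.
2. The method satisfies certain efficiency constraints.
3. The method is Total Variation Diminishing.

Theorem (Ruuth and Spiteri, 2004)

- Heun is the optimal 2nd order 2-stage method.
- RK3TVD is the optimal 3rd order 3-stage method.
- There is no optimal $n$th order $n$-stage method for $n \ge 4$.

Solution: Add more stages!

Butcher tables for Heun (left) and RK3TVD (right):

$$
\begin{array}{c|cc}
0 & 0 & 0 \\
1 & 1 & 0 \\ \hline
 & \tfrac{1}{2} & \tfrac{1}{2}
\end{array}
\qquad\qquad
\begin{array}{c|ccc}
0 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 \\
\tfrac{1}{2} & \tfrac{1}{4} & \tfrac{1}{4} & 0 \\ \hline
 & \tfrac{1}{6} & \tfrac{1}{6} & \tfrac{2}{3}
\end{array}
$$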

Optimizing the CFL Condition: Explicit SSP

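Ruuth and Spiteri’s SSP(9,5) with CFL number 2.695.

$$
\begin{array}{c|ccccccccc}
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0.234 & 0.234 & 0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0.285 & 0.110 & 0.174 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0.297 & 0.050 & 0.079 & 0.167 & 0 & 0 & 0 & 0 & 0 & 0 \\
0.611 & 0.143 & 0.227 & 0.240 & 0 & 0 & 0 & 0 & 0 & 0 \\
0.546 & 0.045 & 0.071 & 0.143 & 0.298 & 0.013 & 0 & 0 & 0 & 0 \\
0.760 & 0.058 & 0.093 & 0.109 & 0.227 & -0.010 & 0.281 & 0 & 0 & 0 \\
0.714 & 0.114 & 0.180 & 0.132 & 0.107 & -0.129 & 0.133 & 0.175 & 0 & 0 \\
0.914 & 0.096 & 0.151 & 0.111 & 0.090 & -0.108 & 0.112 & 0.147 & 0.312 & 0 \\ \hline
 & 0.088 & 0.102 & 0.111 & 0.158 & -0.060 & 0.197 & 0.071 & 0.151 & 0.179
\end{array}
$$

[Figure: plot in the complex $z$-plane, real axis from $-8$ to $0$, imaginary axis from $-4$ to $4$.]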

Choosing the Right Method is Important

ode45 struggles because of stability problems.

[Figure: for each solver, a plot in the complex $z$-plane (axes from $-6$ to $6$) and a plot over $0 \le t \le 10$.]

| | ode45 | similar to ode23s |
|---|---|---|
| Type | Explicit RK | Implicit Rosenbrock |
| Order | 5th | 4th |
| Function evals. | 2077 | 528 |
| $h$ chosen for | stability | accuracy |

Algorithms


High Quality RK Methods Require High Quality Algorithms

“The numerical solution of ordinary differential equations is an old topic and, perhaps surprisingly, methods discovered around the turn of the century are still the basis of the most effective, widely used codes for this purpose. Great improvements in efficiency have been made, but it is probably fair to say that the most significant achievements have been in reliability, convenience, and diagnostic capabilities.” - Shampine and Gear, 1979

Efficiency: Embedding and the FSAL Property

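Embedded: two different reconstructions from the same $k$ values.

First Same As Last (FSAL): an $s$-stage method is effectively $s-1$ stages.

Sofroniou, 2004 (Mathematica: ExplicitRungeKutta 2,1):

$$
\begin{array}{c|ccc}
0 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 \\
1 & \tfrac{1}{2} & \tfrac{1}{2} & 0 \\ \hline
\text{2nd order} & \tfrac{1}{2} & \tfrac{1}{2} & 0 \\
\text{1st order} & 1 & -\tfrac{1}{6} & \tfrac{1}{6}
\end{array}
$$

Bogacki-Shampine, 1989 (Matlab: ode23):

$$
\begin{array}{c|cccc}
0 & 0 & 0 & 0 & 0 \\
\tfrac{1}{2} & \tfrac{1}{2} & 0 & 0 & 0 \\
\tfrac{3}{4} & 0 & \tfrac{3}{4} & 0 & 0 \\
1 & \tfrac{2}{9} & \tfrac{1}{3} & \tfrac{4}{9} & 0 \\ \hline
\text{3rd order} & \tfrac{2}{9} & \tfrac{1}{3} & \tfrac{4}{9} & 0 \\
\text{2nd order} & \tfrac{7}{24} & \tfrac{1}{4} & \tfrac{1}{3} & \tfrac{1}{8}
\end{array}
$$

$k_s = h f(y_{n+1})$ so that $k_1$ of the next time step is already computed.

The lower order method helps with error estimation and control.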

Closer Look at FSAL

Suppose the $n$th set of $k$ values is computed, and the solution updated.

$$
\begin{aligned}
k_1 &= h f(y_n) \\
k_2 &= h f(y_n + k_1) \\
k_3 &= h f(y_n + k_1/2 + k_2/2) \\
y_{n+1} &= y_n + k_1/2 + k_2/2
\end{aligned}
$$

When the next set of $k$ values is computed, the new $k_1$ will be the old $k_3$.

$$
\begin{aligned}
k_1 &= h f(y_{n+1}) \\
&= h f(y_n + k_1/2 + k_2/2) \\
&= k_3
\end{aligned}
$$

A $k$ value is reused, so an $n$ stage method is effectively $n - 1$ stages.
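The saving shows up when function calls are counted. The sketch below illustrates the three-stage scheme above with a fixed step size (an assumption made for simplicity; with a variable step the reused value would need rescaling by the ratio of step sizes). The function name and the call counter are introduced here for illustration.

```python
def integrate_fsal(f, y0, h, n_steps):
    """Fixed-step integration of y' = f(y) with the 3-stage FSAL scheme above.

    Returns the final y and the number of calls to f.
    """
    calls = 0

    def counted_f(y):
        nonlocal calls
        calls += 1
        return f(y)

    y = y0
    k1 = h * counted_f(y)            # only the very first step pays for k1
    for _ in range(n_steps):
        k2 = h * counted_f(y + k1)
        y_next = y + k1 / 2 + k2 / 2
        k3 = h * counted_f(y_next)   # last stage, evaluated at y_{n+1}
        y, k1 = y_next, k3           # reuse k3 as the next step's k1
    return y, calls

# Sample problem (an assumption): y' = -y, y(0) = 1, ten steps of size 0.1.
y_end, n_calls = integrate_fsal(lambda y: -y, 1.0, 0.1, 10)
print(y_end, n_calls)
```

Ten steps cost 21 evaluations of $f$ instead of 30, that is, two per step plus one to start.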


Reliability: Adjusting Step Size for Accuracy

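Use an order $p + 1$ method with an embedded order $p$ method.

$$
\begin{aligned}
y_{n+1} - y(x_n + h) &= a h^{p+1} + O(h^{p+2}) \\
\bar{y}_{n+1} - y(x_n + h) &= O(h^{p+2})
\end{aligned}
$$

Subtract

$$y_{n+1} - \bar{y}_{n+1} = a h^{p+1} + O(h^{p+2})$$

Suppose $\hat{h}$ achieves the optimal error tolerance.

$$E_{tol} = a \hat{h}^{p+1} + O(\hat{h}^{p+2})$$

Divide

$$\frac{E_{tol}}{y_{n+1} - \bar{y}_{n+1}} = \left(\frac{\hat{h}}{h}\right)^{p+1} + O(h + \hat{h})$$

Adjust

$$\hat{h} = h \left| \frac{E_{tol}}{y_{n+1} - \bar{y}_{n+1}} \right|^{\frac{1}{p+1}}$$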
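The adjustment formula can be tried on a small embedded pair. The sketch below is an illustration, not a production controller: it pairs Heun (order 2) with Forward Euler (order 1), so $p = 1$, and it adds a safety factor and growth limits, which are common practical additions assumed here rather than taken from the derivation. The stiff test problem with $\lambda = -50$ is also an assumed choice.

```python
def adaptive_heun_euler(f, x0, y0, x_end, etol, h0=0.1, safety=0.9):
    """Integrate y' = f(x, y) with the embedded Heun (order 2) / Euler (order 1) pair.

    The step size follows h_new = h * (etol / err)**(1/(p+1)) with p = 1.
    The safety factor and the clamp on the growth factor are assumptions.
    """
    p = 1
    x, y, h = x0, y0, h0
    accepted, rejected = [], 0
    while x < x_end:
        h = min(h, x_end - x)
        k1 = h * f(x, y)
        k2 = h * f(x + h, y + k1)
        y_low = y + k1                 # Forward Euler
        y_high = y + (k1 + k2) / 2     # Heun
        err = abs(y_high - y_low)
        if err <= etol:
            x, y = x + h, y_high
            accepted.append(h)
        else:
            rejected += 1
        factor = safety * (etol / max(err, 1e-16)) ** (1.0 / (p + 1))
        h *= min(5.0, max(0.2, factor))
    return x, y, accepted, rejected

# A stiff sample problem with lambda = -50, decaying towards y = 1.
x, y, steps, rejected = adaptive_heun_euler(
    lambda x, y: -50.0 * (y - 1.0), 0.0, 0.0, 2.0, 1e-4)
print(len(steps), rejected, min(steps), max(steps), y)
```

The accepted steps are tiny during the initial transient and grow afterwards. Because the pair is explicit, the growth is eventually capped by stability rather than accuracy.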

Step Size Adjustment is an I Controller

Consider the step size control formula.

$$\hat{h} = h \left| \frac{E_{tol}}{y_{n+1} - \bar{y}_{n+1}} \right|^{\frac{1}{p+1}}$$

Compute logarithms and rearrange.

$$\log(\hat{h}) - \log(h) = \frac{1}{p+1}\left(\log|E_{tol}| - \log|y_{n+1} - \bar{y}_{n+1}|\right)$$

Controller Change = (Integral Gain) (Set Point − Process Variable)

$$u[n] - u[n-1] = K_i\, e[n]$$

Making it a PI Controller can provide better convergence.

$$u[n] - u[n-1] = K_i\, e[n] + K_p\,(e[n] - e[n-1])$$

Gustafsson, 1991:

$$\hat{h} = h \left| \frac{E_{tol}}{y_{n+1} - \bar{y}_{n+1}} \right|^{\frac{0.7}{p+1}} \left| \frac{y_n - \bar{y}_n}{E_{tol}} \right|^{\frac{0.4}{p+1}}$$
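Both controllers are short enough to write out. The sketch below is illustrative: the function names and argument order are choices made here, and `err` and `err_prev` stand for the current and previous values of $|y - \bar{y}|$.

```python
def i_controller(h, err, etol, p):
    """Integral controller: h * |etol / err|**(1/(p+1))."""
    return h * (etol / abs(err)) ** (1.0 / (p + 1))

def pi_controller(h, err, err_prev, etol, p):
    """PI controller using the exponents 0.7 and 0.4 from the formula above."""
    k = p + 1
    return h * (etol / abs(err)) ** (0.7 / k) * (abs(err_prev) / etol) ** (0.4 / k)

# Sample values (arbitrary): two consecutive errors ten times larger than the tolerance.
h_i = i_controller(0.1, 1e-3, 1e-4, 4)
h_pi = pi_controller(0.1, 1e-3, 1e-3, 1e-4, 4)
print(h_i, h_pi)
```

When the error sits steadily above the tolerance, the PI formula cuts the step less sharply than the I formula, which is one way it damps oscillations in the step size sequence.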

PI Control of DOPRI54

[Figure: comparison of three solvers: ode45; dopri54 with I control; dopri54 with PI control.]

Diagnostic: Detecting the Stability Border

Hairer & Wanner, 1996: For DOPRI54, the quantity

$$\left| \frac{h\left(-0.08536 k_1 + 0.088 k_2 - 0.0096 k_3 + 0.0052 k_4 + 0.00576 k_5 - 0.004 k_6\right)}{y_{n+1} - \bar{y}_{n+1}} \right|$$

is $< 1$ near the stability border and $> 1$ away from it.

[Figure: $z$ hovers near the border of the smaller stability region.]

switching → 223 function evals.

Summary

- Software packages provide general purpose solvers.
- Pick the method which is right for your problem.
- Custom methods can be superior.
- There are tradeoffs between accuracy and stability.
- Efficiency, reliability, diagnostics, and control are key.

Parallel Peer Schemes

RK schemes do this:

$$k_n = h f(y_n \mathbf{1} + A k_n), \qquad y_{n+1} = y_n + b^T k_n$$

The $k_{n,i}$ are computed in serial.

Parallel Peer schemes do something like this:

$$k_n = h f(y_n \mathbf{1} + A k_{n-1}), \qquad y_{n+1} = y_n + b^T k_n$$

$k_{n,i}$ is computed independently of $k_{n,j}$, so parallelization is natural.

$$k_{n,i} = h f(y_n + a_{i1} k_{n-1,1} + \dots + a_{i,s} k_{n-1,s})$$

- Potential for high number of stages, $s$ = number of nodes.
- Can be made very stable with no implicit equations to solve.
- Can be made superconvergent, $p > s$ (Weiner et al. 2008).

Rosenbrock Methods: How Implicit is Implicit Enough?

Implicit methods require too much computation. One stage example:

$$k = h f(y_n + a_{11} k)$$

If $f$ is costly to evaluate, iterative methods of solution, e.g., Newton’s method, are expensive.

Rosenbrock (1963): $k$ is usually small, so use a Taylor polynomial.

$$k = h f(y_n) + h f'(y_n)\, a_{11} k$$

- Easy to generalize, easy to derive order conditions.
- Implicit, but linear. Linear solves are cheap.
- Stability regions are the same as implicit methods.
- Parallel Peer versions exist (Weiner et al. 2005).
- Generalizations approximate $f'$, e.g., Matlab’s ode23s (1997).

Multistage Rosenbrock Methods

Start with

$$k_1 = h f(y_n) + h f'(y_n)\, \gamma_1 k_1$$

Collect $k_1$ on the left.

$$(1 - h\gamma_1 f'(y_n))\, k_1 = h f(y_n)$$

Add more stages.

$$
\begin{aligned}
(1 - h\gamma_2 f'(y_n))\, k_2 &= h f(y_n + a_{21} k_1) + d_{21} k_1 \\
(1 - h\gamma_3 f'(y_n))\, k_3 &= h f(y_n + a_{31} k_1 + a_{32} k_2) + d_{31} k_1 + d_{32} k_2
\end{aligned}
$$

General case:

$$(1 - h\gamma_i f'(y_n))\, k_i = h f(y_n + a_i^T k) + d_i^T k$$

For systems, each stage requires one linear solve and each time step one Jacobian computation.

$$(I - h\gamma_i J)\, k_i = h f(y_n + a_i^T k) + d_i^T k$$

Choosing all $\gamma_i$ equal means only one LU decomposition per time step.
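The one-stage case already shows the benefit. The sketch below is an illustration for a scalar autonomous ODE, with $\gamma = 1$ and weight $b_1 = 1$ assumed, so that the scheme becomes the linearly implicit Euler method. It is compared with Forward Euler on a stiff sample problem with $\lambda = -50$ and a step size far outside Forward Euler's stability disc. All names here are made up for the example.

```python
def rosenbrock_one_stage(f, dfdy, y0, h, n_steps, gamma=1.0):
    """One-stage Rosenbrock method for a scalar autonomous ODE y' = f(y).

    Each step solves the linear equation (1 - h*gamma*f'(y_n)) k = h f(y_n).
    """
    y = y0
    for _ in range(n_steps):
        k = h * f(y) / (1.0 - h * gamma * dfdy(y))
        y = y + k
    return y

def forward_euler_autonomous(f, y0, h, n_steps):
    """Plain Forward Euler for comparison."""
    y = y0
    for _ in range(n_steps):
        y = y + h * f(y)
    return y

f = lambda y: -50.0 * (y - 1.0)   # stiff sample problem, exact solution tends to 1
dfdy = lambda y: -50.0            # its derivative with respect to y
h = 0.1                           # z = h*lambda = -5
y_ros = rosenbrock_one_stage(f, dfdy, 0.0, h, 20)
y_fwe = forward_euler_autonomous(f, 0.0, h, 20)
print(y_ros, y_fwe)
```

No nonlinear iteration is needed: each step costs one evaluation of $f$, one of $f'$, and one division (a linear solve for systems). The Rosenbrock iterate settles at 1 while the Forward Euler iterate blows up.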


Rosenbrock Example

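Example used in the talk. (4th/3rd order embedded)

$$
\begin{aligned}
k_1 &= h f(y_n) + h f'(y_n)\, k_1/2 \\
k_2 &= h f(y_n + k_1) - 2k_1 + h f'(y_n)\, k_2/2 \\
k_3 &= h f(y_n + (3k_1 + k_2)/8) - k_1/4 + h f'(y_n)\, k_3/2 \\
k_4 &= h f(y_n + (3k_1 + k_2)/8) + 3k_1/4 - k_2/8 - k_3/2 + h f'(y_n)\, k_4/2
\end{aligned}
$$

Coefficients $c_i$, $a_{ij}$ and the two weight rows of the embedded pair:

$$
\begin{array}{c|cccc}
0 & 0 & 0 & 0 & 0 \\
1 & 1 & 0 & 0 & 0 \\
\tfrac{1}{2} & \tfrac{3}{8} & \tfrac{1}{8} & 0 & 0 \\
\tfrac{1}{2} & \tfrac{3}{8} & \tfrac{1}{8} & 0 & 0 \\ \hline
 & \tfrac{1}{6} & \tfrac{1}{6} & 0 & \tfrac{2}{3} \\
 & \tfrac{1}{6} & \tfrac{1}{6} & \tfrac{2}{3} & 0
\end{array}
$$

Every stage uses $\gamma_i = \tfrac{1}{2}$.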