Multivariate Data & GLM Advanced Biostatistics Dean C. Adams Lecture 6

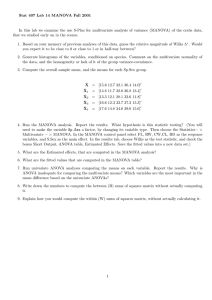

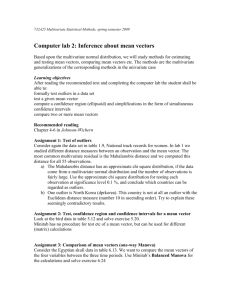

EEOB 590C

Univariate Versus Multivariate Analyses
• Univariate statistics: assess variation in a single Y (obtain a scalar result)
• Multivariate statistics: assess variation in multiple Y simultaneously
• Multivariate methods are mathematical generalizations of univariate methods (ACTUALLY, univariate methods are special cases of multivariate!)

Why Jump to Multivariate?
• More complete description of pattern
• Biological data are often multivariate, so treat them as such
• Separate univariate analyses miss the covariation signal*

[Figure: ANOVAs on y1 and y2 separately would fail to identify group differences from covariation]

*Covariation IS biology, so one must think multivariately!

Rao's Paradox (Curse of Dimensionality)
• Increasing the # of dimensions of the data (Y) means more information
• However, for a given n the statistical power decreases
• Eventually, there are too few n for the # of variables in Y
• How large should the sample size be?
• Many suggestions:
  • $n = 2 \times \#vars$
  • $n = 4 \times \#vars$
  • $n = \#vars^2$
  • $n_{gp} = 2 \times \#vars$
  • $n_{gp} = 4 \times \#vars$

Identifying Patterns from the Y-Matrix
• Several ways to identify patterns in $\mathbf{Y}_{n \times p}$ (Y-matrix of n objects × p variables)
  1: Linear models: MANOVA/regression to assess patterns
  2: R-mode analyses: summarize by columns (VCV matrix of variables)
  3: Q-mode analyses: summarize by rows (distance matrix for objects)*
• First one needs to DESCRIBE the multivariate data!

*Many Q-mode & R-mode methods yield identical results: PCA (R-mode) vs. PCoA on $D_{Euclid}$ (Q-mode)

Descriptors of Multivariate Data
• Describing multivariate data = understanding it. Data are dots in space, so the goal is to describe the point cloud
• VCV (S): covariance matrix of variances and covariances ('multivariate variance')
• Correlation matrix (R): matrix of pairwise variable correlations (standardized covariance matrix)

$$\mathbf{S} = \begin{bmatrix} s_{11} & & \\ s_{21} & s_{22} & \\ s_{31} & s_{32} & s_{33} \end{bmatrix} \qquad \mathbf{R} = \begin{bmatrix} 1 & & \\ r_{21} & 1 & \\ r_{31} & r_{32} & 1 \end{bmatrix}$$

Multivariate Correlations

| | totlen | wingext | wgt |
|---|---|---|---|
| totlen | 1.0000 | 0.7106 | 0.5839 |
| wingext | 0.7106 | 1.0000 | 0.5775 |
| wgt | 0.5839 | 0.5775 | 1.0000 |

[Figure: scatterplot matrix of totlen, wingext, and wgt]

• Bivariate correlation plots are also useful

Multivariate Distances
• Data are 'dots' in multivariate data space
• Distances between objects describe similarity (or difference). Small distance = similar
• The distance (or similarity) measure used depends on the type of data
• NOTE: Distances (D) can be converted to similarities (S) and vice-versa. When scaled to 0–1, the relationship is: $D = 1 - S$, or $D = \sqrt{1 - S}$, or $D = \sqrt{1 - S^2}$

Similarity/Distance From Binary Data
• Data are 0/1
• Generate a 2×2 frequency table for each pair of specimens

| | Specimen 1: 1 | Specimen 1: 0 |
|---|---|---|
| Specimen 2: 1 | a | b |
| Specimen 2: 0 | c | d |

• Similarity/distance is based on a, b, c, d (# traits in each category)
  • Simple matching coefficient: $S = \frac{a + d}{a + b + c + d}$
  • Jaccard's coefficient: $S = \frac{a}{a + b + c}$
  • Hamming distance: $D = b + c$ (# differences)
• Choice depends on data and assumptions (e.g., are shared absences (0,0) meaningful?)
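The binary coefficients above can be sketched in a few lines of base R. This is a minimal illustration only: the two specimens and their trait scores are made-up values, and the variable names (`n_a`, `n_b`, `n_c`, `n_d`) are hypothetical stand-ins for the cells a, b, c, d of the 2×2 table.

```r
# Two hypothetical specimens scored for 8 binary traits (made-up data)
spec1 <- c(1, 1, 0, 0, 1, 0, 1, 0)
spec2 <- c(1, 0, 0, 1, 1, 0, 0, 0)

# Cells of the 2x2 frequency table (Specimen 1 in columns, Specimen 2 in rows)
n_a <- sum(spec2 == 1 & spec1 == 1)  # a: shared presences
n_b <- sum(spec2 == 1 & spec1 == 0)  # b: present in specimen 2 only
n_c <- sum(spec2 == 0 & spec1 == 1)  # c: present in specimen 1 only
n_d <- sum(spec2 == 0 & spec1 == 0)  # d: shared absences

S_simple  <- (n_a + n_d) / (n_a + n_b + n_c + n_d)  # simple matching
S_jaccard <- n_a / (n_a + n_b + n_c)                # Jaccard (ignores shared absences)
D_hamming <- n_b + n_c                              # Hamming (# differences)

# Base R cross-checks: dist(method = "binary") returns 1 - Jaccard,
# and the Manhattan distance on 0/1 data equals the Hamming distance
D_jaccard_base <- as.numeric(dist(rbind(spec1, spec2), method = "binary"))
D_hamming_base <- as.numeric(dist(rbind(spec1, spec2), method = "manhattan"))
```

Note that the simple matching coefficient counts the shared absences (d) as agreement while Jaccard's does not, which is exactly the choice flagged in the last bullet.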
For S ↔ D conversions see Legendre & Legendre (1998)

Similarity/Distance From Multi-State Data
• Multi-state data require different S/D measures

| Trait | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Spec 1 | 9 | 3 | 4 | 6 | 2 | 1 | 6 | 8 | 7 | 8 |
| Spec 2 | 5 | 3 | 3 | 4 | 3 | 1 | 6 | 4 | 6 | 8 |
| $X_{agree}$ | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 |

• Percent matching: $S_4 = \frac{\sum X_{agree}}{\mathrm{length}(X)}$ (here 4/10 = 0.4)
• Note: all binary descriptors can be extended in this fashion
• Gower's general similarity: $S_5 = \frac{1}{p}\sum_{j=1}^{p} s_{12j}$
• Contribution of each trait ($s_j$) is 0/1 for binary OR multi-state data

For S ↔ D conversions see Legendre & Legendre (1998)

Similarity/Distance From Continuous Data
• Continuous data are common in morphometrics
• MANY possible distance measures
  • Euclidean distance: $D_{Euclid} = \sqrt{\sum_j (y_{1j} - y_{2j})^2} = \sqrt{(\mathbf{Y}_1 - \mathbf{Y}_2)(\mathbf{Y}_1 - \mathbf{Y}_2)^t}$
  • Manhattan distance: $D_{Manhat} = \sum_j |y_{1j} - y_{2j}|$
  • Canberra distance: $D_{Canberra} = \sum_j \frac{|y_{1j} - y_{2j}|}{y_{1j} + y_{2j}}$ (note: double zeros must be removed)
  • Mahalanobis distance: $D^2_{Mahal} = (\mathbf{Y}_1 - \mathbf{Y}_2)\,\mathbf{S}^{-1}(\mathbf{Y}_1 - \mathbf{Y}_2)^t$
• Some distances (e.g., $D_{Euclid}$) generate a METRIC space

Combining Data Types
• All distance measures require data in commensurate units
  • $D_{Euclid}$ requires that all Y are continuous
  • $D_{Hamming}$ requires that all Y are 0/1
• Researchers sometimes combine data types
  • Y = SVL, # bristles, presence of nose (0/1)
  • Y = elevation, # individuals/km, presence of competitor (0/1)
• THIS IS GIGO!!!
  • A program may calculate the distance, but it has no meaning (variables in incommensurate units, not weighted properly, etc.)
  • Could convert characters to a common unit & combine, but the weighting problem remains
• Generally not advisable to combine data types for obtaining distances

Metrics vs. Measures
• Not all distance measures are the same: they fall into different classes
• Metric: a distance is a metric IFF:
  1: Minimal: the minimum distance is zero ($d_{11} = 0$)
  2: Symmetry: $d_{12} = d_{21}$
  3: Triangle inequality: $d_{12} + d_{13} \ge d_{23}$
• Semimetric (pseudometric): triangle inequality not satisfied (e.g., Bray-Curtis distance & Sørensen's similarity)
• Nonmetric: distances can be negative (e.g., Kulczynski's coefficient)
• Some distance measures are metric (e.g., $D_{Euclid}$, $D_{Manhat}$), others are not (see above)

For discussion of common ecological distance/similarity coefficients, see Legendre & Legendre (1998), Numerical Ecology

Euclidean (Metric) Spaces
• Euclidean spaces are defined by the Euclidean metric ($D_{Euclid}$)
• Euclidean spaces satisfy:
  • The 3 metric space conditions: 1: $d_{11} = 0$; 2: $d_{12} = d_{21}$; 3: $d_{12} + d_{13} \ge d_{23}$
  • Axis perpendicularity: if $\sum x_i y_i = 0$, x & y are perpendicular (orthogonal)
• In Euclidean spaces, distances, directions, and angles can be defined
• Thus they can be examined and compared for biological interpretation
• NOTE: most multivariate studies assume a metric (typically Euclidean) geometry

Jump to Multivariate GLM
• ALL previous models (ANCOVA, factorial, nested, multiple regression, etc.) can be done as GLM in matrix form
• Thus, GLM with matrices is extremely general, and covers much of our roadmap of inferential statistics

| | 1 Categorical X | >1 Categorical X | 1 Continuous X | >1 Continuous X | Both |
|---|---|---|---|---|---|
| 1 Continuous Y | ANOVA | Factorial ANOVA | Regression | Multiple Regression | ANCOVA |
| >1 Continuous Y | MANOVA | Factorial MANOVA | Multivariate Regression | Multivariate Multiple Regression | MANCOVA |

(All cells of the table are GLM.)

• Since univariate GLM is 1 equation, jumping to MULTIVARIATE is easily accomplished (add columns to Y)

Multivariate GLM
• Multivariate GLM is easy: add columns to the Y-matrix

$$\mathbf{B} = (\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$$

• b is found the same way, but is now a matrix
• Problem: $SS_B$ & $SS_W$ are now matrices (SSCP matrices), so there is no univariate F-ratio
• Need to summarize the variation explained by these matrices
• Several solutions:
  • Wilks' lambda: $\Lambda = \frac{|SSCP_{err}|}{|SSCP_{Model} + SSCP_{err}|} = \frac{|\mathbf{E}|}{|\mathbf{H} + \mathbf{E}|}$
  • Pillai's trace: $\mathrm{tr}\left[(SSCP_{Model} + SSCP_{err})^{-1} SSCP_{Model}\right] = \mathrm{tr}\left[(\mathbf{H} + \mathbf{E})^{-1}\mathbf{H}\right]$
  • Roy's largest root: $\lambda_{max}\left(SSCP_{err}^{-1}\, SSCP_{Model}\right)$
  • Hotelling's trace: $\mathrm{tr}\left(SSCP_{err}^{-1}\, SSCP_{Model}\right)$
• Pillai's trace is more robust to unbalanced designs and violations of the model
• Test statistics can be converted to an F-ratio:

$$F = \frac{1 - \Lambda}{\Lambda} \cdot \frac{N - s - p}{p}$$

N = total sample size, s = # X variables in the reduced model, and p = # Y variables (Wilks' Λ: lower is more significant)

Testing Group Differences: MANOVA
• Compares variation within groups to variation between groups
• X = independent variable (group labels)
• Y = dependent variables

$$\mathbf{Y} = \begin{bmatrix} Y_{11} & \cdots & Y_{p1} \\ \vdots & & \vdots \\ Y_{1n} & \cdots & Y_{pn} \end{bmatrix} \qquad \mathbf{X} = \begin{bmatrix} 1 & Gp_1 \\ 1 & Gp_1 \\ 1 & Gp_2 \\ 1 & Gp_2 \end{bmatrix}$$

• Solve for b (components of means): $\mathbf{B} = (\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$
• $SSCP_{err} = (\mathbf{Y} - \mathbf{XB})^t(\mathbf{Y} - \mathbf{XB})$
• Significance from a multivariate test statistic
  • Wilks' lambda: $\Lambda = \frac{|\mathbf{E}|}{|\mathbf{H} + \mathbf{E}|}$
  • Pillai's trace: $\mathrm{tr}\left[(\mathbf{H} + \mathbf{E})^{-1}\mathbf{H}\right]$
• Pillai's trace is more robust to unbalanced designs and moderate violations of the model

Post Hoc Tests I: $D_{Mahal}$
• Pairwise comparisons using the Generalized Mahalanobis Distance ($D^2$ or $D$)
• Convert $D^2 \rightarrow T^2 \rightarrow F$ to test

$$D^2 = (\bar{\mathbf{Y}}_1 - \bar{\mathbf{Y}}_2)^t\,\mathbf{S}^{-1}(\bar{\mathbf{Y}}_1 - \bar{\mathbf{Y}}_2)$$

$$T^2 = \frac{n_1 n_2}{n_1 + n_2} D^2$$

$$F = \frac{N - g - p - 1}{(N - g)\,p}\, T^2$$

df1 = p, df2 = (N − g − p − 1); N = total sample size, g = # groups, p = # response variables

• For the experiment-wise error rate, adjust using Bonferroni: $\alpha_{exp} = \alpha / \#\,comparisons$

Post Hoc Tests II: Randomization
• Resampling method for pairwise comparisons
  1. Estimate group means
  2. Calculate matrix of $D_{Euclid}$
  3. Shuffle specimens into groups
  4. Estimate means and $D_{rand}$
  5. Assess $D_{obs}$ vs. $D_{rand}$
  6. Repeat

$$D_{obs} = D_{Euclid} = \sqrt{(\bar{\mathbf{Y}}_i - \bar{\mathbf{Y}}_j)(\bar{\mathbf{Y}}_i - \bar{\mathbf{Y}}_j)^t}$$

MANOVA Example: Bumpus Sparrow Data
• After a bad winter storm (Feb. 1, 1898), Bumpus retrieved 136 sparrows in Rhode Island (about ½ died)
• Collected the following measurements on each: 1) Alive/dead, 2) Weight, 3) Total length, 4) Wing extent, 5) Beak-head length, 6) Humerus, 7) Femur, 8) Tibiotarsal, 9) Skull, 10) Keel-sternum, 11) Male/female
• To investigate natural selection, examined whether there was a difference between alive and dead birds

Bumpus, H. C. 1898. Woods Hole Mar. Biol. Sta. 6:209-226.
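As a hedged sketch of the matrix computations on the Multivariate GLM and MANOVA slides (B, the SSCP matrices, Wilks' lambda, Pillai's trace), the base R code below works through them by hand and cross-checks against `summary(manova())`. The data are simulated for illustration and are not the Bumpus measurements. The F conversion is shown only for the two-group case, under the assumption that s = 1 (intercept-only reduced model).

```r
# Simulated two-group data (hypothetical; not the Bumpus measurements)
set.seed(1)
n <- 40                                   # total sample size
p <- 3                                    # number of Y variables
grp <- factor(rep(c("A", "B"), each = n / 2))
Y <- matrix(rnorm(n * p), nrow = n, ncol = p)
Y[grp == "B", 1] <- Y[grp == "B", 1] + 1  # shift group B on variable 1

X  <- model.matrix(~ grp)                 # full design: intercept + group
X0 <- matrix(1, nrow = n, ncol = 1)       # reduced design: intercept only

# B = (X^t X)^{-1} X^t Y: a matrix with one column per Y variable
B  <- solve(t(X) %*% X) %*% t(X) %*% Y
B0 <- solve(t(X0) %*% X0) %*% t(X0) %*% Y

# SSCP matrices: E = error; H = model (reduced-model error minus full-model error)
E <- t(Y - X %*% B) %*% (Y - X %*% B)
H <- t(Y - X0 %*% B0) %*% (Y - X0 %*% B0) - E

wilks  <- det(E) / det(H + E)             # |E| / |H + E|
pillai <- sum(diag(solve(H + E) %*% H))   # tr[(H + E)^{-1} H]

# F conversion of Wilks' lambda, assuming s = 1 (two-group case)
F_wilks <- ((1 - wilks) / wilks) * ((n - 1 - p) / p)

# Cross-check against R's built-in MANOVA
fit_pillai <- summary(manova(Y ~ grp))$stats
fit_wilks  <- summary(manova(Y ~ grp), test = "Wilks")$stats
```

With a single-df effect such as two groups, H has rank 1, so Pillai's trace equals 1 − Λ and all four statistics lead to the same F.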
Bumpus Data: MANOVA
• Single-factor MANOVA

    > summary(manova(bumpus.data~sex))
        Df  Pillai approx F num Df den Df    Pr(>F)
    sex  1 0.46652   12.243      9    126 9.166e-14 ***

• Factorial MANOVA

    > summary(manova(bumpus.data~sex*surv))
             Df  Pillai approx F num Df den Df    Pr(>F)
    sex       1 0.47143  12.2882      9    124 9.520e-14 ***
    surv      1 0.34256   7.1788      9    124 2.442e-08 ***
    sex:surv  1 0.09718   1.4831      9    124    0.1613

• Dead birds appear to be slightly larger, as do males

MANOVA: Post-Hoc Tests
• Group comparisons with Euclidean distance ($D_{Euclid}$ below the diagonal & $P_{rand}$ above)

| | Fem Dead | Fem Surv | Male Dead | Male Surv |
|---|---|---|---|---|
| Fem Dead | 0 | 0.300 (NS) | 0.013 | 0.001 |
| Fem Surv | 0.0319 | 0 | 0.008 | 0.005 |
| Male Dead | 0.0576 | 0.0831 | 0 | 0.026 |
| Male Surv | 0.0542 | 0.0649 | 0.0423 | 0 |

• Conclusions from MANOVA:
  • Significant sexual dimorphism (males slightly larger)
  • Significant survival status (nonsurvivors slightly larger)
  • All pairwise comparisons significant, except within females

Describing The Data

[Tables and figure: S (VCV matrix), R (correlation matrix), coefficients of variation ($s_Y / \bar{Y} \times 100$), and scatterplot matrix for TL, AE, WT, BHL, HL, FL, TTL, SW, SKL]

• Humerus, femur, tibiotarsal, & skull have the most variation (in log-units)

[Figure: trait-by-trait group comparisons (♂ vs. ♀) for ln(totlen) and ln(wingext). NOTE: plots miss covariation]

Visualizing Group Differences: PCA*

[Figure: PC1 vs. PC2. Female: red; Male: blue; Alive: circle; Dead: triangle]

*Will learn this next time

Testing for Covariation: Regression
• Relates variation in shape to variation in a covariate
• X = independent variable (continuous)
• Y = dependent variables

$$\mathbf{Y} = \begin{bmatrix} Y_{11} & \cdots & Y_{p1} \\ \vdots & & \vdots \\ Y_{1n} & \cdots & Y_{pn} \end{bmatrix} \qquad \mathbf{X} = \begin{bmatrix} 1 & X_{11} \\ \vdots & \vdots \\ 1 & X_{1n} \end{bmatrix}$$

• Solve for b (components of means): $\mathbf{B} = (\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$
• $SSCP_{err} = (\mathbf{Y} - \mathbf{XB})^t(\mathbf{Y} - \mathbf{XB})$
• Significance from a multivariate test statistic
  • Wilks' lambda: $\Lambda = \frac{|\mathbf{E}|}{|\mathbf{H} + \mathbf{E}|}$
  • Pillai's trace: $\mathrm{tr}\left[(\mathbf{H} + \mathbf{E})^{-1}\mathbf{H}\right]$
• Pillai's trace is more robust to unbalanced designs and moderate violations of the model

Bumpus Data: Regression
• Allometry

    > summary(manova(Y~TotalLength))
                Df  Pillai approx F num Df den Df    Pr(>F)
    TotalLength  1 0.55629   19.903      8    127 < 2.2e-16 ***

• Significant allometry (relative to total length)
• Note: challenging to visualize patterns

Visualizing Multivariate Regressions
• Represent Y by some summary axis
  • PC1 vs. X: PC1 may not align with the direction of covariation
  • Regression score vs. X: $\mathbf{s} = \mathbf{Y}\beta^t(\beta\beta^t)^{-0.5}$ (Drake and Klingenberg (2008) Evolution)
  • Predicted values vs. X: $\hat{\mathbf{Y}} = \mathbf{X}\beta = \mathbf{X}(\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$, with $P_1 = \mathrm{SVD}(\hat{\mathbf{Y}})$ (Adams and Nistri (2010) BMC Evol Biol)

Testing Groups and Covariates: MANCOVA
• Relates variation in shape to variation in a covariate
• X = independent variables (groups & continuous)
• Y = dependent variables

$$\mathbf{Y} = \begin{bmatrix} Y_{11} & \cdots & Y_{p1} \\ \vdots & & \vdots \\ Y_{1n} & \cdots & Y_{pn} \end{bmatrix} \qquad \mathbf{X} = \begin{bmatrix} 1 & X_{11} \\ \vdots & \vdots \\ 1 & X_{1n} \end{bmatrix}$$

• Solve for b (components of means): $\mathbf{B} = (\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$
• $SSCP_{err} = (\mathbf{Y} - \mathbf{XB})^t(\mathbf{Y} - \mathbf{XB})$
• NOTE: MANCOVA is a sequential procedure
  • Test interactions first (group-specific slopes)
  • If NS, remove them and compare groups (while accounting for the covariate)

** Implementation point: the covariate must be the first variable in the X-matrix, as R uses Type I SS for H

Bumpus Data: MANCOVA
• Full MANCOVA

    > summary(manova(lm(Y~TotalLength*sex*surv)))
                     Df  Pillai approx F num Df den Df    Pr(>F)
    TotalLength       1 0.63862  26.7287      8    121 < 2.2e-16 ***
    sex               1 0.41791  10.8590      8    121 1.924e-11 ***
    surv              1 0.26227   5.3771      8    121 8.593e-06 ***
    TotalLength:sex   1 0.09667   1.6186      8    121    0.1263
    TotalLength:surv  1 0.02795   0.4348      8    121    0.8981
    sex:surv          1 0.09295   1.5499      8    121    0.1471

• Compare groups while accounting for allometry

    > summary(manova(lm(Y~TotalLength+sex*surv)))
                Df  Pillai approx F num Df den Df    Pr(>F)
    TotalLength  1 0.62878  26.2545      8    124 < 2.2e-16 ***
    sex          1 0.40635  10.6098      8    124 2.859e-11 ***
    surv         1 0.26117   5.4791      8    124 6.315e-06 ***
    sex:surv     1 0.08125   1.3708      8    124    0.2157

Visualizing MANCOVA
• Represent Y by some summary axis (by group)
  • PC1 vs. X
  • Regression score vs. X: $\mathbf{s} = \mathbf{Y}\beta^t(\beta\beta^t)^{-0.5}$ (Drake and Klingenberg (2008) Evolution). NOTE: X = cov+gps: see MorphoJ help file
  • Predicted values vs. X: $\hat{\mathbf{Y}} = \mathbf{X}\beta = \mathbf{X}(\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$, with $P_1 = \mathrm{SVD}(\hat{\mathbf{Y}})$ (Adams and Nistri (2010) BMC Evol Biol). Note: X = cov+gps

[Figure: red = female; blue = male; circles = alive; triangles = dead]

Example II: Salamander Foot Ontogeny
• Italian Hydromantes inhabit caves
• Climb walls & ceilings (strong ecological selection)

[Map legend: H. genei, H. flavus, H. italicus, H. strinatii, H. ambrosii, H. imperialis, H. sarrabusensis, H. supramontis]

• Ho: Adult foot morphology adapted for climbing (e.g., Lanza, 1991)
• a) never tested empirically, b) ignores developmental influences
• Is there evidence for this hypothesis?

Adams & Nistri (2010) BMC Evol. Biol.

Foot Shape Ontogeny Results
• Significant foot shape allometry (and convergence)

[Figure: foot landmark configuration (landmarks 1-9) and shape ontogeny results. Adams & Nistri (2010) BMC Evol. Biol.]

Multivariate GLM: Challenges I
• As p ↑, power ↓

[Figure: power of parametric GLM regression vs. effect size for N = 10 and p = 2, 10, 15, 20, 30; power declines with increasing dimensionality. Adams (unpublished).]

[Figure: power (PGLS = PIC) vs. input covariation for p = 2, 4, 6, 8, 10, 15, 20, 30; power declines with increasing dimensionality. Adams (2014) Evolution.]

• Recommendations:
  • Increase N (when possible)
  • Use distance-based MANOVA

Multivariate GLM: Challenges II
• The 'large P to small N' problem
• When P ≥ N, covariance matrices are singular: |SSCP| = 0
• $SSCP^{-1}$ can't be computed (divide by zero)
• GLM statistics are undefined and the analysis cannot be completed
• Can be a common problem for high-dimensional data

[Figure: power of parametric GLM regression vs. effect size for N = 10; power = 0.0 at p = 30. Adams (unpublished).]

[Figure: power (PGLS = PIC) vs. input covariation for p = 10, 15, 20, 30. Adams (2014) Evolution.]

Large P to Small N: Solutions
• Evaluate significance via a generalized inverse ($SSCP^{-}$ instead of $SSCP^{-1}$)
  • The generalized inverse is called the Moore-Penrose inverse
  • Conceptually simple, but not all software allows this (must 'code' the solution)
• Evaluate significance via randomization
  • Use a test statistic that does not require an inverse: $\mathrm{tr}(SSCP_{model})$, $D_{gp1,gp2}$, etc.
  • Conceptually simple, but requires programming to implement
• Use distance-based permutational-MANOVA

Solution 3: Distance-Based Approaches
• Test significance based on distances between objects
• Relies on the covariance matrix - distance matrix equivalency (Gower, 1966)

[Diagram: Y → VCV → PCA; Y → Dist → PCoA]

• GLM is covariance based
• Its 'dual' (permutational-MANOVA) is distance-based

*NOTE: ANY distance measure can be used for this!!!

Gower (1966). Biometrika.

Permutational-MANOVA: Computations
• Permutational-MANOVA partitions variation in distances
• $SS_{Btwn}$ and $SS_{Err}$ are found from distances
  1. Obtain $SS_B$, $SS_W$: estimate $F_{obs}$
  2. Shuffle data; estimate $F_{rand}$
  3. Compare $F_{obs}$ vs. $F_{rand}$
  4. Repeat

$$SS_T = \frac{1}{N}\sum_{i=1}^{N-1}\sum_{j=i+1}^{N} d_{ij}^2$$

$$SS_W = \frac{1}{n}\sum_{i=1}^{N-1}\sum_{j=i+1}^{N} d_{ij}^2\, e_{ij}$$

$$F = \frac{(SS_T - SS_W)/(a - 1)}{SS_W/(N - a)}$$

Same group: $e_{ij} = 1$; different group: $e_{ij} = 0$ (N = total sample size, n = sample size per group, a = # groups)

• Doesn't require inverting the covariance matrix, so it is a general solution

Examples: Permutational-MANOVA
• Factorial MANOVA

    > summary(manova(bumpus.data~sex*surv))
             Df  Pillai approx F num Df den Df    Pr(>F)
    sex       1 0.47143  12.2882      9    124 9.520e-14 ***
    surv      1 0.34256   7.1788      9    124 2.442e-08 ***
    sex:surv  1 0.09718   1.4831      9    124    0.1613

• Permutational-MANOVA*

    > bumpus.dist<-dist(bumpus.data)   #generate distance matrix
    > adonis(bumpus.dist~sex*surv)
             Df SumsOfSqs  MeanSqs F.Model      R2 Pr(>F)
    sex       1   0.10568 0.105679 10.3739 0.07043  0.001 ***
    surv      1   0.04607 0.046069  4.5224 0.03070  0.013 *
    sex:surv  1   0.00399 0.003985  0.3912 0.00266  0.776

*The function procD.lm in the geomorph package is preferable, as it allows residual randomization

Multivariate GLM: Challenges III
• Multivariate data are not always continuous (matrix of binary traits, presence/absence, counts, etc.)
• Not legitimate to 'force' such data into GLM
• Recommendation: use permutational-MANOVA with a distance measure appropriate for the data (see Legendre and Legendre 1998 for many)

Summary: General Linear Models
• Assess variation in Y as explained by linear models: $Y = b_0 + b_1X_1 + b_2X_2 + b_3X_3 + \dots$
  • MANOVA: categorical X
  • M-Regression: continuous X
  • MANCOVA: combination of the two
• Matrix formulation is most straightforward: $\mathbf{B} = (\mathbf{X}^t\mathbf{X})^{-1}\mathbf{X}^t\mathbf{Y}$
• Think of the model as univariate, then 'remember' Y is multivariate
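The permutational-MANOVA computations ($SS_T$ and $SS_W$ from squared distances, then a permutation test on F) can be sketched in base R as follows. This is an illustrative sketch under stated assumptions, not the vegan or geomorph implementation: the data are simulated, `perm_F` is a hypothetical helper name, and group labels (rather than residuals) are shuffled. For Euclidean distances the distance-based F should match the F built from traces of the SSCP matrices, which the last lines compute for comparison.

```r
# Simulated data: 3 groups, 10 specimens each, 4 variables (hypothetical)
set.seed(42)
a <- 3
n_per <- 10
N <- a * n_per
grp <- factor(rep(seq_len(a), each = n_per))
Y <- matrix(rnorm(N * 4), nrow = N, ncol = 4)
Y[grp == 3, ] <- Y[grp == 3, ] + 1.5          # shift group 3 on all variables

D2 <- as.matrix(dist(Y))^2                     # squared Euclidean distances

# Hypothetical helper: F-ratio computed from a squared-distance matrix
perm_F <- function(D2, grp) {
  N <- nrow(D2)
  a <- nlevels(grp)
  SST <- sum(D2[upper.tri(D2)]) / N            # (1/N) * sum of d_ij^2 over i < j
  SSW <- sum(sapply(levels(grp), function(g) {
    idx <- grp == g
    sum(D2[idx, idx]) / (2 * sum(idx))         # within-group pairs, divided by n
  }))
  ((SST - SSW) / (a - 1)) / (SSW / (N - a))
}

F_obs  <- perm_F(D2, grp)
F_rand <- replicate(999, perm_F(D2, sample(grp)))   # shuffle group labels
p_val  <- (sum(F_rand >= F_obs) + 1) / (length(F_rand) + 1)

# Comparison: trace-based F from the linear model (Euclidean case only)
SSE   <- sum(resid(lm(Y ~ grp))^2)
SSTot <- sum(scale(Y, scale = FALSE)^2)
F_trace <- ((SSTot - SSE) / (a - 1)) / (SSE / (N - a))
```

Because no matrix inverse appears anywhere, the same function runs unchanged when p exceeds N, and `D2` could be built from any other distance measure appropriate for the data.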