Adaptive Array Processing Adaptive beamforming Dynamic adaptive beamforming

advertisement

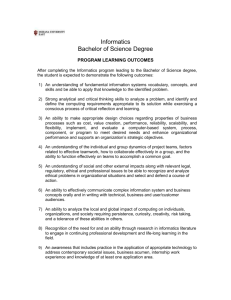

UNIVERSITY OF OSLO

Adaptive Array Processing

Adaptive beamforming

The algorithm’s characteristics depend on the data it receives.

Dynamic adaptive beamforming

Algorithms that update themselves as each observation is acquired.

DEPARTMENT OF INFORMATICS

J&D 7, Oct 2000 SH/1


Estimation Theory

Measurement: y, Parameter: ξ

Bayes’s rule:

$$p_{\xi|y}(\xi|y) = \frac{p_{y,\xi}(y,\xi)}{p_y(y)} = \frac{p_{y|\xi}(y|\xi)\,p_\xi(\xi)}{p_y(y)}$$

1. A priori density: $p_\xi(\xi)$

2. A posteriori density: $p_{\xi|y}(\xi|y)$

3. Joint probability density: $p_{y,\xi}(y,\xi)$

4. Likelihood function: $p_{y|\xi}(y|\xi)$

Minimum mean-square error estimator

The parameter’s a posteriori expected value:

$$\hat{\xi}_{\mathrm{MMSE}}(y) = E[\xi|y]$$
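As a numerical illustration of Bayes’s rule and the MMSE estimator, the sketch below evaluates the a posteriori density on a grid and takes its mean. The scalar Gaussian model (y = ξ + n, with ξ ~ N(0, σ_ξ²) and n ~ N(0, σ_n²)) and all numbers are assumptions for illustration, not taken from the slides; for this model the posterior mean has the known closed form σ_ξ²/(σ_ξ² + σ_n²)·y, which serves as a check.

```python
import numpy as np

# Assumed toy model: y = xi + n, xi ~ N(0, s_xi^2), n ~ N(0, s_n^2).
s_xi, s_n = 2.0, 1.0
y = 1.5                                   # a single observed measurement

xi = np.linspace(-20.0, 20.0, 40001)      # uniform grid over the parameter
prior = np.exp(-0.5 * (xi / s_xi) ** 2)                 # p_xi(xi), unnormalised
likelihood = np.exp(-0.5 * ((y - xi) / s_n) ** 2)       # p_{y|xi}(y|xi), unnormalised

# Bayes's rule: posterior = likelihood * prior / p_y(y). On a uniform grid the
# normalisation p_y(y) is just the sum of the joint (the grid spacing cancels).
joint = likelihood * prior
posterior = joint / joint.sum()

xi_mmse = np.sum(xi * posterior)          # a posteriori expected value E[xi | y]
xi_closed_form = s_xi**2 / (s_xi**2 + s_n**2) * y
print(xi_mmse, xi_closed_form)            # the two values should agree closely
```

The grid approach works for any prior and likelihood; the Gaussian case is chosen only because its answer is known in closed form.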

Maximum likelihood estimator

If ξ is not a random variable, or its a priori density is unknown, choose the value of ξ that maximizes the likelihood function: $\hat{\xi}_{\mathrm{ML}}(y) = \arg\max_\xi p_{y|\xi}(y|\xi)$.
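A minimal sketch of the maximum likelihood estimator, assuming N independent measurements y_k = ξ + n_k with Gaussian noise and ξ an unknown deterministic constant (an illustrative model, not from the slides). The log-likelihood is maximized by brute force on a grid; for this model the maximizer is known to be the sample mean, which the grid search should reproduce.

```python
import numpy as np

# Assumed toy model: y_k = xi + n_k, n_k ~ N(0, s_n^2), xi deterministic (no prior).
rng = np.random.default_rng(0)
xi_true, s_n, N = 3.0, 1.0, 50
y = xi_true + s_n * rng.standard_normal(N)

# Log-likelihood log p_{y|xi}(y|xi), up to an additive constant, on a grid of xi values.
xi_grid = np.linspace(0.0, 6.0, 60001)
loglik = -0.5 * np.sum((y[None, :] - xi_grid[:, None]) ** 2, axis=1) / s_n**2

xi_ml = xi_grid[np.argmax(loglik)]        # maximise the likelihood
print(xi_ml, y.mean())                    # Gaussian noise: ML estimate equals the sample mean
```

Working with the log-likelihood rather than the likelihood itself avoids numerical underflow when many measurements are multiplied together.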


Conventional Beamforming

Beamformer output: $z(t, \vec{\zeta}) = e' W Y$

Output power: $P(e) = e' W R W' e$

$W = \mathrm{diag}(w_1, \ldots, w_M)$ - weights

$e$ - steering vector

$Y$ - vector of observations

$R$ - estimate of correlation matrix

$(\cdot)'$ - conjugate (Hermitian) transpose

• Single frequency, no noise model assumed; $e$ is determined from the array geometry only.

• For spatially white noise, $K_n = \sigma_n^2 I$, and a single signal present, it can be shown that the conventional beamformer with $W = I$ is the maximum likelihood estimator of direction and power: $P = e' R e$

• Beampattern: $P(e)$ when the data $Y$, i.e. $\hat{R}$, varies. The steered direction is fixed.

• Steered response: $P(e)$ when the assumed direction, i.e. $e(\vec{\zeta})$, varies. The data are fixed.
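The steered response of the conventional beamformer can be sketched as follows. This assumes a uniform linear array with half-wavelength spacing (the helper `steering` is hypothetical, not from the slides), one simulated source at 20 degrees in spatially white noise, and $W = I$. The output power $P(e) = e'WRW'e$ is evaluated with the data fixed while the steering direction is scanned.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical steering vector for a uniform linear array with
    half-wavelength spacing; element m has phase pi * m * sin(theta)."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

# Simulated data (assumed): one source at 20 degrees in spatially white noise.
rng = np.random.default_rng(1)
M, N = 8, 200
theta_src = np.deg2rad(20.0)
amp = rng.standard_normal(N) + 1j * rng.standard_normal(N)
noise = 0.5 * (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
Y = np.outer(steering(theta_src, M), amp) + noise    # M x N array of snapshots
R_hat = Y @ Y.conj().T / N                            # sample correlation matrix

W = np.eye(M)                                         # conventional beamformer, W = I

def output_power(e, R, W):
    """P(e) = e' W R W' e, where ' is the conjugate transpose."""
    return (e.conj() @ W @ R @ W.conj().T @ e).real

# Steered response: fixed data, steering direction scanned over [-90, 90] degrees.
scan_deg = np.linspace(-90.0, 90.0, 721)
P = np.array([output_power(steering(np.deg2rad(t), M), R_hat, W) for t in scan_deg])
theta_hat = scan_deg[np.argmax(P)]
print(theta_hat)                                      # expected to lie close to 20 degrees
```

Keeping the steering direction fixed and instead varying the source direction in the simulated data would trace out the beampattern.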


Minimum variance beamforming

Capon (1967), Applebaum (1976), Howells (1976); also called ‘MLM’ (maximum likelihood method)

Principle: minimize the output power of the beamformer, subject to a gain of 1 in the look direction:

$$\min_w E[|w'y|^2] = w'Rw \quad \text{subject to} \quad \mathrm{Re}[e'w] = 1$$

Weight vector: $$w = \frac{R^{-1}e}{e'R^{-1}e}$$

Output power: $$P_{MV} = w'Rw = [e'R^{-1}e]^{-1}$$
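A minimal sketch of the minimum variance weights and output power, assuming an exact correlation matrix for a uniform linear array (hypothetical `steering` helper, half-wavelength spacing) with a unit-power look-direction signal at 0 degrees, a strong interferer at 30 degrees and noise variance 0.1. It checks the unit-gain constraint and the identity $w'Rw = [e'R^{-1}e]^{-1}$, and prints the response towards the interferer, which the minimization drives close to zero.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector, half-wavelength element spacing."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

def mv_weights(R, e):
    """w = R^{-1} e / (e' R^{-1} e); solve() avoids forming R^{-1} explicitly."""
    Rinv_e = np.linalg.solve(R, e)
    return Rinv_e / (e.conj() @ Rinv_e)

def mv_power(R, e):
    """P_MV(e) = [e' R^{-1} e]^{-1}."""
    return 1.0 / (e.conj() @ np.linalg.solve(R, e)).real

# Assumed exact correlation matrix: noise variance 0.1, unit-power signal at
# 0 degrees, interferer with power 10 at 30 degrees.
M = 10
e_look, e_int = steering(0.0, M), steering(np.deg2rad(30.0), M)
R = (0.1 * np.eye(M)
     + np.outer(e_look, e_look.conj())
     + 10.0 * np.outer(e_int, e_int.conj()))

w = mv_weights(R, e_look)
print(e_look.conj() @ w)                              # unit gain in the look direction
print((w.conj() @ R @ w).real, mv_power(R, e_look))   # w'Rw equals [e'R^{-1}e]^{-1}
print(abs(e_int.conj() @ w))                          # small: the interferer is suppressed
```

Scanning `mv_power` over a grid of steering directions gives the MV spatial spectrum, in the same way as the conventional steered response.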


Linear prediction beamforming

A time-series method adapted to an array: an element’s output is predicted as a weighted linear sum of the other elements:

$$Y_{m_0} = -\sum_{m \neq m_0} w_m^* Y_m$$

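Minimize the mean-square prediction error subject to unity gain on the predicted element ($\delta_{m_0}$ is the unit vector with a 1 in position $m_0$):

$$\min_w E[|w'y|^2] = w'Rw \quad \text{subject to} \quad \delta_{m_0}' w = 1$$

Solution: $$w = \frac{R^{-1}\delta_{m_0}}{\delta_{m_0}' R^{-1} \delta_{m_0}}$$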

The $\{w_m\}$ are not beamformer weights, so LP cannot be used as a beamformer, only as a spatial spectrum estimator:

Output power: $$P_{LP}(e) = \frac{\delta_{m_0}' R^{-1} \delta_{m_0}}{|\delta_{m_0}' R^{-1} e|^2}$$

Predicted element $m_0$: any element, typically the end or center element.
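A sketch of the linear prediction weights and spatial spectrum, assuming an exact correlation matrix for one unit-power source at 20 degrees in white noise (variance 0.1) on a uniform linear array; the `steering` helper and the scenario are illustrative assumptions. Because $\delta_{m_0}' x$ simply picks element $m_0$ of $x$, both the weights and $P_{LP}$ need only linear solves with $R$.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector, half-wavelength element spacing."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

def lp_weights(R, m0):
    """w = R^{-1} delta_m0 / (delta_m0' R^{-1} delta_m0), so that w[m0] = 1."""
    Rinv_d = np.linalg.solve(R, np.eye(R.shape[0])[:, m0])
    return Rinv_d / Rinv_d[m0]            # delta_m0' x just picks element m0 of x

def lp_power(R, e, m0):
    """P_LP(e) = (delta' R^{-1} delta) / |delta' R^{-1} e|^2."""
    num = np.linalg.solve(R, np.eye(R.shape[0])[:, m0])[m0].real
    den = abs(np.linalg.solve(R, e)[m0]) ** 2
    return num / den

# Assumed exact correlation matrix: one unit-power source at 20 degrees, noise variance 0.1.
M, m0 = 8, 0                              # predict the end element
e_src = steering(np.deg2rad(20.0), M)
R = 0.1 * np.eye(M) + np.outer(e_src, e_src.conj())

w = lp_weights(R, m0)
pred_coeffs = -np.delete(w, m0).conj()    # Y_m0 is predicted as sum(pred_coeffs * Y_m), m != m0

scan_deg = np.linspace(-90.0, 90.0, 721)
P = np.array([lp_power(R, steering(np.deg2rad(t), M), m0) for t in scan_deg])
theta_hat = scan_deg[np.argmax(P)]
print(w[m0], theta_hat)
```

Here `pred_coeffs` are the prediction coefficients of the other elements, which illustrates why the $\{w_m\}$ act as a predictor rather than as beamformer weights.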


Properties of correlation matrix

• Hermitian symmetry: $R = (R^*)^t = R'$

• Positive definite: $x'Rx > 0$ for all $x \neq 0$

This implies that

• The eigenvalues are positive

• The eigenvectors form an orthonormal set.

$$R = \sum_{i=1}^{M} \lambda_i v_i v_i', \qquad R^{-1} = \sum_{i=1}^{M} \lambda_i^{-1} v_i v_i'$$
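These properties can be checked numerically on a sample correlation matrix. The sketch below assumes random complex snapshots (illustrative data only) and uses NumPy's `eigh`, which is intended for Hermitian matrices and returns real eigenvalues in ascending order. It then rebuilds $R$ and $R^{-1}$ from the eigen-expansions.

```python
import numpy as np

# Assumed data: a sample correlation matrix from random complex snapshots.
rng = np.random.default_rng(2)
M, N = 6, 100
Y = rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))
R = Y @ Y.conj().T / N

lam, V = np.linalg.eigh(R)            # Hermitian eigensolver: real eigenvalues, ascending

hermitian = np.allclose(R, R.conj().T)                 # R = R'
positive = np.all(lam > 0)                             # positive definite
orthonormal = np.allclose(V.conj().T @ V, np.eye(M))   # eigenvectors orthonormal

# R = sum_i lam_i v_i v_i'  and  R^{-1} = sum_i lam_i^{-1} v_i v_i'
R_rebuilt = (V * lam) @ V.conj().T    # scales column i of V by lam_i
R_inv = (V / lam) @ V.conj().T
print(hermitian, positive, orthonormal,
      np.allclose(R_rebuilt, R), np.allclose(R_inv @ R, np.eye(M)))
```

The inverse expansion is the form used later for the MV, EV and MUSIC spectra, where only some of the terms are kept.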


Eigenanalysis

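M-dimensional spatial correlation matrix: $R = K_n + S C S'$, where the columns of $S$ are the signals’ steering vectors and $C$ is the signal correlation matrix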

No signals, spatially white noise:

$$R = K_n = \sigma_n^2 I = \sum_{i=1}^{M} \lambda_i v_i v_i'$$

All $M$ eigenvalues are equal to the noise variance $\sigma_n^2$.

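1 signal:

$$R = \sigma_n^2 I + A^2 e e' = \sum_{i=1}^{M} \lambda_i v_i v_i'$$

$\lambda_1 = \sigma_n^2 + M A^2$, $v_1 = e/\sqrt{M}$ (for unit-magnitude elements of $e$, so that $e'e = M$); the remaining $M - 1$ eigenvalues equal $\sigma_n^2$.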

2 incoherent signals, $C = \mathrm{diag}[A_1^2, A_2^2]$:

$$R = \sigma_n^2 I + A_1^2 e_1 e_1' + A_2^2 e_2 e_2' = \sum_{i=1}^{M} \lambda_i v_i v_i'$$

S+N subspace $\{v_1, v_2\}$ (the two largest eigenvalues) contains $\{e_1, e_2\}$
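A numerical check of these eigenstructure statements, assuming exact correlation matrices for a uniform linear array with unit-magnitude steering elements (hypothetical `steering` helper) and illustrative powers. For one signal it compares the largest eigenvalue with $\sigma_n^2 + MA^2$ and the principal eigenvector with $e/\sqrt{M}$. For two incoherent signals it measures how much of each $e_k$ lies outside $\mathrm{span}\{v_1, v_2\}$.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector; unit-magnitude elements, so e'e = M."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

M, s2 = 8, 0.5                                  # assumed array size and noise variance

# One signal: R = s2 I + A^2 e e'
A2 = 2.0
e = steering(np.deg2rad(30.0), M)
R1 = s2 * np.eye(M) + A2 * np.outer(e, e.conj())
lam, V = np.linalg.eigh(R1)                     # ascending order: largest eigenvalue is last
print(lam[-1], s2 + M * A2)                     # lambda_1 = s2 + M A^2
print(lam[:-1])                                 # the other M - 1 eigenvalues equal s2
print(abs(V[:, -1].conj() @ e) / np.sqrt(M))    # close to 1: v_1 = e / sqrt(M) up to a phase

# Two incoherent signals, C = diag[1, 3]: span{v1, v2} contains e1 and e2.
e1, e2 = steering(np.deg2rad(-10.0), M), steering(np.deg2rad(15.0), M)
R2 = s2 * np.eye(M) + 1.0 * np.outer(e1, e1.conj()) + 3.0 * np.outer(e2, e2.conj())
_, V2 = np.linalg.eigh(R2)
Vs = V2[:, -2:]                                 # eigenvectors of the two largest eigenvalues
residuals = [np.linalg.norm(ek - Vs @ (Vs.conj().T @ ek)) for ek in (e1, e2)]
print(residuals)                                # both are zero up to rounding error
```

Note that $v_1$ and $v_2$ are generally not equal to $e_1$ and $e_2$ themselves; only the subspaces coincide.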


MUSIC and EV methods

$$P_{MV}(e) = [e'R^{-1}e]^{-1} = \Big[\sum_{i=1}^{M} \lambda_i^{-1} |e'v_i|^2\Big]^{-1}$$

Signal+noise subspace + noise subspace, with $N_s$ signals and the eigenvalues in decreasing order:

$$P_{MV}(e) = \Big[\sum_{i=1}^{N_s} \lambda_i^{-1} |e'v_i|^2 + \sum_{i=N_s+1}^{M} \lambda_i^{-1} |e'v_i|^2\Big]^{-1}$$

Eigenvector method, Johnson & DeGraaf 1982:

$$R_{EV}^{-1} = \sum_{i=N_s+1}^{M} \lambda_i^{-1} v_i v_i', \qquad P_{EV}(e) = [e' R_{EV}^{-1} e]^{-1}$$

MUltiple SIgnal Classification (MUSIC), Schmidt 1979, Bienvenu & Kopp 1979:

$$R_{MUSIC}^{-1} = \sum_{i=N_s+1}^{M} v_i v_i', \qquad P_{MUSIC}(e) = [e' R_{MUSIC}^{-1} e]^{-1}$$
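A sketch of the EV and MUSIC spectra, assuming simulated data from a uniform linear array (hypothetical `steering` helper) with two incoherent unit-power sources at -10 and 15 degrees and noise variance 0.1; the peak picker `two_largest_peaks` is likewise an illustrative helper. Both spectra keep only the noise-subspace terms $i = N_s+1, \ldots, M$. EV weights them by $\lambda_i^{-1}$, whereas MUSIC uses equal weights.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector, half-wavelength element spacing."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

def noise_subspace(R, Ns):
    """Eigenpairs i = Ns+1..M in decreasing order of eigenvalue. eigh returns
    ascending eigenvalues, so these are the first M - Ns."""
    lam, V = np.linalg.eigh(R)
    return lam[:-Ns], V[:, :-Ns]

def p_ev(R, e, Ns):
    lam_n, Vn = noise_subspace(R, Ns)
    return 1.0 / np.sum(np.abs(Vn.conj().T @ e) ** 2 / lam_n)   # [e' R_EV^{-1} e]^{-1}

def p_music(R, e, Ns):
    _, Vn = noise_subspace(R, Ns)
    return 1.0 / np.sum(np.abs(Vn.conj().T @ e) ** 2)            # [e' R_MUSIC^{-1} e]^{-1}

def two_largest_peaks(grid, P):
    """Locations of the two highest local maxima of P."""
    idx = np.flatnonzero((P[1:-1] > P[:-2]) & (P[1:-1] > P[2:])) + 1
    return np.sort(grid[idx[np.argsort(P[idx])[-2:]]])

# Simulated data (assumed): two incoherent unit-power sources at -10 and 15 degrees.
rng = np.random.default_rng(3)
M, N, Ns = 10, 500, 2
S = np.column_stack([steering(np.deg2rad(a), M) for a in (-10.0, 15.0)])
amps = (rng.standard_normal((Ns, N)) + 1j * rng.standard_normal((Ns, N))) / np.sqrt(2)
noise = np.sqrt(0.05) * (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N)))
Y = S @ amps + noise
R_hat = Y @ Y.conj().T / N

scan_deg = np.linspace(-90.0, 90.0, 1441)
P_mu = np.array([p_music(R_hat, steering(np.deg2rad(t), M), Ns) for t in scan_deg])
P_ev = np.array([p_ev(R_hat, steering(np.deg2rad(t), M), Ns) for t in scan_deg])
print(two_largest_peaks(scan_deg, P_mu), two_largest_peaks(scan_deg, P_ev))
```

The number of signals $N_s$ is assumed known here; in practice it has to be estimated, for example from the eigenvalue distribution.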


Coherent signals

The signal+noise subspace has lower rank than the number of signals, because the signal correlation matrix $C$ is singular for coherent signals.

Subarray averaging

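A linear array of M sensors is divided into M − Ms + 1 overlapping subarrays, each with Ms sensors, and the subarray correlation matrices are averaged. The averaged matrix recovers the full signal rank if the number of subarrays, M − Ms + 1, is at least Ns (with Ms > Ns so that a noise subspace remains), but resolution is lost because the effective aperture shrinks to Ms sensors.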
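A sketch of subarray averaging (forward spatial smoothing) restoring the signal rank. It assumes an exact correlation matrix for a uniform linear array (hypothetical `steering` helper) with two fully coherent, in-phase signals from -10 and 15 degrees, so that $C$ has rank 1. With M = 10 and Ms = 8 there are 3 subarrays for Ns = 2. The helper `signal_rank` counts eigenvalues above the known noise floor and is an illustrative assumption.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector, half-wavelength element spacing."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

def subarray_average(R, Ms):
    """Average the M - Ms + 1 overlapping Ms x Ms diagonal blocks of R."""
    L = R.shape[0] - Ms + 1
    return sum(R[k:k + Ms, k:k + Ms] for k in range(L)) / L

def signal_rank(R, s2, tol=1e-8):
    """Number of eigenvalues above the noise floor s2."""
    return int(np.sum(np.linalg.eigvalsh(R) > s2 + tol))

# Assumed scenario: one waveform arriving from -10 and 15 degrees (fully coherent).
M, Ms, s2 = 10, 8, 0.1
a = steering(np.deg2rad(-10.0), M) + steering(np.deg2rad(15.0), M)
R = s2 * np.eye(M) + np.outer(a, a.conj())

print(signal_rank(R, s2))                        # 1: rank deficient, although Ns = 2
print(signal_rank(subarray_average(R, Ms), s2))  # 2: rank restored by the averaging
```

The averaged Ms × Ms matrix can then be passed to MV, EV or MUSIC, with steering vectors of length Ms.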

Forward-backward averaging

Only for two coherent signals and a linear array. Average $R$ and $R_B = J R^* J$, where $J$ is the exchange matrix with ones on the anti-diagonal: $R_{FB} = \frac{1}{2}(R + J R^* J)$.
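A sketch of forward-backward averaging, assuming an exact correlation matrix for an 8-element uniform linear array (hypothetical `steering` helper) with two fully coherent signals from -10 and 15 degrees. Pre- and post-multiplying by the exchange matrix $J$ just reverses the row and column order, which the code confirms against an explicit $J$. The helper `signal_rank` is illustrative.

```python
import numpy as np

def steering(theta, M):
    """Hypothetical ULA steering vector, half-wavelength element spacing."""
    return np.exp(1j * np.pi * np.arange(M) * np.sin(theta))

def forward_backward(R):
    """R_FB = (R + J R* J) / 2 with J the exchange (anti-diagonal) matrix.
    J X J reverses both the row and the column order of X."""
    return 0.5 * (R + R.conj()[::-1, ::-1])

def signal_rank(R, s2, tol=1e-8):
    """Number of eigenvalues above the noise floor s2."""
    return int(np.sum(np.linalg.eigvalsh(R) > s2 + tol))

# Assumed scenario: the same waveform arrives from -10 and 15 degrees (coherent).
M, s2 = 8, 0.1
a = steering(np.deg2rad(-10.0), M) + steering(np.deg2rad(15.0), M)
R = s2 * np.eye(M) + np.outer(a, a.conj())

J = np.eye(M)[::-1]                                   # explicit exchange matrix
print(np.allclose(J @ R.conj() @ J, R.conj()[::-1, ::-1]))
print(signal_rank(R, s2), signal_rank(forward_backward(R), s2))
```

Unlike subarray averaging, this keeps the full M-element aperture, but on its own it can decorrelate at most two coherent signals.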
