Red paper

IBM® WebSphere®

Robert Blackburn

Jonathan Collins

Jamie Farmer

Mark Gambino

Colette Manoni

Joshua Wisniewski

Pushing Data Performance with IBM WebSphere Transaction Cluster Facility

Executive overview

Online transaction processing (OLTP) has been used for decades and remains the cornerstone by which most businesses do business. It involves gathering input information, processing that information, and updating data to reflect the new information. Whether a business handles worldwide commerce through the Internet or runs reservation systems for major airlines, the core of its IT environment is OLTP.

As the world grows more interconnected, OLTP is becoming more complex and has new demands on the systems that support it. In large applications, efficient OLTP can depend on transaction management software or database optimization tactics to facilitate the processing of large numbers of concurrent updates to an OLTP-oriented database. For more challenging, decentralized database systems, software can distribute transaction processing among multiple computers on a network while it is closely integrated into a service-oriented architecture (SOA) and web services. These approaches can be successful for easily partitioned workloads.

However, for more demanding workloads with interrelated data at high transaction rates, these solutions often lead to data inconsistency, scalability, and performance issues.

This IBM® Redpaper™ publication, which was written for architects, designers, system engineers, and software developers, explores a unique and innovative software solution that solves the challenges of OLTP. This solution is called IBM WebSphere® Transaction Cluster Facility.

This paper presents a case study of a large customer in the travel and tourism industry that handles airline reservations. This case study highlights the unparalleled scalability and performance characteristics of WebSphere Transaction Cluster Facility to handle workload challenges today and into the future. In addition, this paper examines the importance and power of the WebSphere Transaction Cluster Facility schema design and how it differs from traditional relational database designs. It includes analysis of the hierarchical network data model for WebSphere Transaction Cluster Facility.

ibm.com/redbooks © Copyright IBM Corp. 2013. All rights reserved.

Introduction to WebSphere Transaction Cluster Facility

IBM WebSphere Transaction Cluster Facility is designed for applications that have large, centralized databases with frequent read and update requirements, where data must be globally consistent. It is ideal for applications that are common in many industries where performance, scalability, and availability are paramount.

IBM WebSphere Transaction Cluster Facility provides a high-performance, continuously available, and highly scalable offering for large-volume OLTP. WebSphere Transaction Cluster Facility is designed for applications that have large, centralized databases with demanding and frequent read and update requirements where data is not easily partitioned and must be globally consistent. Typical uses for WebSphere Transaction Cluster Facility include reservation, payment, and gambling systems.

WebSphere Transaction Cluster Facility is built on IBM DB2® Enterprise Server Edition to provide a highly available database solution. It also uses the IBM DB2 pureScale® feature for DB2 Enterprise Server Edition. This feature provides superior performance and near-linear scalability for a centralized database in a clustered, distributed system. WebSphere Transaction Cluster Facility is a distributed system-based solution that is modeled on proven IBM OLTP database technology to provide world-class application performance, availability, and scalability. This combination of IBM middleware solutions provides the infrastructure that is needed for demanding transaction processing applications.

The WebSphere Transaction Cluster Facility database architecture and middleware are based on the industry-proven IBM z/Transaction Processing Facility Enterprise Edition (z/TPF). z/TPF has been the premier mainframe-based transaction processing solution at the core of reservation and financial systems around the world for more than 50 years. The advent of the DB2 pureScale Coupling Facility made it possible to develop a distributed, high-performance, continuously available, and highly scalable offering (WebSphere Transaction Cluster Facility) for large-volume OLTP on IBM Power Systems™ servers.

The DB2 pureScale feature uses a high-speed InfiniBand data network to synchronize database workloads across servers in the cluster. Through this interconnection, the DB2 pureScale feature can achieve cross-server update times of just microseconds. This capability is key for WebSphere Transaction Cluster Facility to scale in a near-linear fashion as more servers are added to the cluster to support growing workloads. WebSphere Transaction Cluster Facility is a modern solution with a rich history that addresses the most extreme demands of today’s transaction processing environments.

WebSphere Transaction Cluster Facility takes a unique approach to the challenge of a centralized, nonpartitionable database architecture. WebSphere Transaction Cluster Facility uses DB2 in a nonrelational manner to implement a customized network or hierarchical model database with a flexible, many-to-many relationship between collections of heterogeneous customer-defined records. This highly customized approach to defining a database allows for high performance data traversal, reads and updates that use hash-based indexing, minimized index collisions, and data consolidation that can minimize I/O operations. The unique approach to database architecture design used by WebSphere Transaction Cluster Facility results in a large scale, high performance database solution.

WebSphere Transaction Cluster Facility ensures data integrity by using customer-designed database metadata. This way, WebSphere Transaction Cluster Facility can automatically perform type checking, input validation, and data verification through generated code. It can ensure data consistency at all levels of the database hierarchy and throughout the customer code. WebSphere Transaction Cluster Facility maintains transaction integrity across the various members of clustered systems by storing all data on disk, not in memory.

Because WebSphere Transaction Cluster Facility uses DB2 as a persistent data store, WebSphere Transaction Cluster Facility users can take advantage of many features of DB2. Some of these features include transaction recoverability, excellent reliability, availability, and serviceability (RAS), database backup and restore, advanced data caching algorithms, redundancy for high availability and failover, and disaster recovery.

The object-oriented C++ and Java interface of WebSphere Transaction Cluster Facility helps enhance application performance and programmer productivity in an IBM AIX® development environment. WebSphere Transaction Cluster Facility stores and retrieves defined data objects in the database without costly marshalling and demarshalling of data. Such marshalling is typical of relational database solutions, for example those that use object-to-relational mapping. Avoiding it helps achieve maximum read and update performance.

WebSphere Transaction Cluster Facility supports multitenancy, which is an architectural principle of virtualization and cloud computing where multiple customers, or tenants, can share hardware and software resources in an isolated and controlled fashion. This method helps to maximize the utilization of your physical resources. It also helps to reduce the ongoing development and maintenance costs of supporting multiple customer databases.

WebSphere Transaction Cluster Facility is equipped with and works with the following tools:

Graphical and Eclipse-based, the WebSphere Transaction Cluster Facility Toolkit helps with database layout and record content design. The WebSphere Transaction Cluster Facility Toolkit Configuration Assistant generates object classes for the traversal, read, and update of the data structures in the database. It simplifies code development, enhances the maintainability of the customer code, and helps improve application time to market.

WebSphere Transaction Cluster Facility provides several middleware features, such as a high performance cache manager for read-intensive data with synchronization across members in the cluster, and tools to ensure the integrity of the database.

By using IBM Tivoli® Monitoring, an agent allows specific WebSphere Transaction Cluster Facility measurements to be monitored in real time.

IBM WebSphere Application Server is an industry-leading runtime environment for Java applications that provides ease of integration for WebSphere Transaction Cluster Facility into a SOA.

Data model and schema design of WebSphere Transaction Cluster Facility

The schema design in WebSphere Transaction Cluster Facility is different from a traditional relational database design. WebSphere Transaction Cluster Facility uses a hierarchical network data model that is proven to scale by the travel and transportation industry for extreme I/O rates, while it maintains data consistency and integrity. With a network model, no table join operations occur when retrieving data because the design or layout of the data is optimized for the specific use cases that are required by the application. In addition to use cases that require fast retrieval of data, the network data model of WebSphere Transaction Cluster Facility provides efficient and scalable transactions that require consistent updates to multiple logical pieces of data. WebSphere Transaction Cluster Facility can scale these update-type use cases by minimizing the number of locks and the number of I/O operations that are required to access the data.

The performance of WebSphere Transaction Cluster Facility depends on the layout or design of the data. The following sections highlight an example of a customer in the travel and transportation industry. They illustrate how the following questions or considerations influence the layout of your data:

How many different logical groupings of data do you have?

What records are closely related? If you need to access one record, are you likely to need to access another related record that contains different information?

In what ways do you find a record? Which methods of finding a record must be optimized?

What types of indexes are needed?

When do you need to duplicate data versus just linking to it?

Database model

The case study in this paper involves a large customer in the travel and transportation industry that handles airline reservations. A reservation is the pairing of customer information with specific airline flight information. A customer can have multiple reservations, such as when a business traveler or frequent flyer has multiple trips that are booked in advance. A single reservation can contain multiple flight segments, such as for connecting flights to reach a destination, or for a round trip.

Cabinets, folders, and records

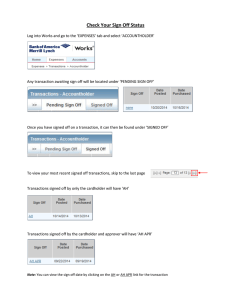

The data model for WebSphere Transaction Cluster Facility consists of a hierarchy of cabinets, folders, and records (Figure 1) that are much like their physical counterparts.

Figure 1 Simplified data model hierarchy for WebSphere Transaction Cluster Facility (a cabinet holds folders, and each folder holds records)

Related pieces of paper (records) are stored in folders, and folders are kept in cabinets according to an organization methodology, such as alphabetically by drawer. To find information, you locate the folder, open it, and look for the specific record that you need.

Folders can contain different types of records, and depending on the size of the records, they can sometimes all be retrieved with a single I/O. For transactions that need to update multiple pieces of information, this organization is efficient in the number of I/Os that are required and in the number of locks that need to be held to perform the update.

Based on the application requirements, the case-study data model entails two logical groupings of data: customer information and reservation information. The basic information in each grouping contained the following elements:

Customer information

– Name

– Address

– Phone number

– Form of ID (for example, driver’s license or passport)

– Credit card

– Frequent flyer number

Reservation information

– Name

– Flight number

– Date

– Origin and Destination

– Ticket number

– Payment

You can modify this organization for other use cases.

Logical groupings of data are typically organized in cabinets, as shown in Figure 2. This example has two cabinets, one for customer information and one for reservation information.

Figure 2 Cabinet organization for the use case (a CustomerInfo cabinet and a Reservation cabinet)

All use cases in the study involve accessing a reservation, so we look closer at the folders and records in the reservation cabinet. As stated earlier, a single reservation can be for multiple people, such as a family that is traveling together, and multiple flights. Therefore, you organize the information into multiple records, for example by passenger names, flight information, ticket number, and payment. If more flights are added to the reservation, you add a record. When you retrieve a reservation, you likely need to see the passenger name, flight information, and payment status. Keeping related records in the same folder is efficient because folder contents are kept together in the database.

The next consideration is how many different reservations to place in the same folder. Considering that you locate information by first locating the folder, if multiple reservations are placed in the same folder, you compare each record to determine whether it is part of the reservation that you are trying to access. However, the actions of looping through records and doing compares are inefficient.

In this case study, a single reservation (also called a passenger name record (PNR)) is placed into a folder that contains a record for each of the following items:

Passenger name

Flight segment

Ticket number

Frequent Flyer number

ID information (for example, type of ID and ID number)

Payment information (for example, form of payment and credit card number)

Phone number

Email address
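The cabinet, folder, and record hierarchy described above can be sketched in a few lines of Python. This is a minimal in-memory illustration only; the class names `Cabinet`, `Folder`, and `Record`, the folder ID `PNR-1`, and all field values are hypothetical and are not part of the WebSphere Transaction Cluster Facility API.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    kind: str   # record type, for example "PassengerName" or "FlightSegment"
    data: dict  # record content


@dataclass
class Folder:
    records: list = field(default_factory=list)

    def add(self, kind, **data):
        # A folder can hold records of different types side by side.
        self.records.append(Record(kind, data))

    def of_kind(self, kind):
        return [r for r in self.records if r.kind == kind]


@dataclass
class Cabinet:
    name: str
    folders: dict = field(default_factory=dict)  # folder ID -> Folder

    def new_folder(self, folder_id):
        self.folders[folder_id] = Folder()
        return self.folders[folder_id]


# One reservation (PNR) per folder, with one record per item.
reservations = Cabinet("Reservation")
pnr = reservations.new_folder("PNR-1")
pnr.add("PassengerName", name="A. Traveler")
pnr.add("FlightSegment", flight="XY100", date="2013-05-01")
pnr.add("FlightSegment", flight="XY200", date="2013-05-08")
pnr.add("TicketNumber", number="T-1001")
```

Adding a flight to the reservation is then a single `pnr.add("FlightSegment", ...)` call against one folder, which mirrors the point that related records are kept, read, and locked together.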

Indexes and references

Next, you set up indexes and fast access paths for the most frequent use cases. In this case study, several tests contain different use cases. Table 1 shows these use cases and how frequently they occur.

Table 1 Use-case tests

| Use case | Frequency |
|---|---|
| Create, query, modify, and delete a reservation by using the reservation (PNR) ID | 35% |
| Query or update a reservation by using a form of ID | 32% |
| Query or update a reservation by using the ticket number | 18% |
| Query a reservation by using a frequent flyer number | 8% |
| Query or update a reservation by using the flight number and date | 5% |
| Query or update a reservation by using an agent ID and date | 1% |
| Query or update a reservation by using a phone number | 1% |

To build the data model, we used mixed-message workload definitions (which contain all transaction types), as typically seen in a production environment, to show how the data can be optimized.

The most frequent use case is to access a reservation by the reservation ID. In the data model, this use case received the most direct optimization. From a logical data organization standpoint, all records that are associated with a PNR were placed in a single folder. WebSphere Transaction Cluster Facility provides a way to save the location of the folder. When you use the physical cabinet analogy (Figure 3), finding the PNR folder is the same as locating the sixth folder in drawer #2. You do not have to think about how the folders are organized. Instead, you go directly to the specified folder.

Figure 3 Logical data organization (the PNR ID locates the PNR folder in the Reservation cabinet)

Although the use case that finds reservations based on a ticket number is not the next most frequent use case, that use case provides a good example of how to set up a simple index.

In WebSphere Transaction Cluster Facility, indexes are defined by using root cabinets. The index, which in this example is based on the ticket number, is used as a search key to locate a folder in the root cabinet. The folder contains a record that associates the search key value with a reference to the item that you want to index. This case study involves associating the ticket number with a reference to the PNR folder.

The model in Figure 4 shows a root cabinet, a ticket folder, and a ticket record.

Figure 4 Using the ticket number as an index to get the PNR (Ticket root cabinet, Tickets folder, and Ticket # record that references the PNR)

Although the data is organized this way, the application needs only to make one call, using the ticket number as an index, to get the reference to the PNR. The reference is then used to read the specific records in the PNR folder.
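As a rough illustration of this index pattern, the following Python sketch models a root cabinet as a fixed number of folders that are selected by hashing the ticket number. The names (`ticket_root_cabinet`, `index_ticket`, `pnr_ref_by_ticket`), the CRC-32 hash, and the folder count are assumptions made for the example and do not reflect the product's actual interface or hashing algorithm.

```python
import zlib

# Hypothetical stand-in for the Reservation cabinet: PNR reference -> records.
reservation_cabinet = {
    "PNR-1": [("PassengerName", "A. Traveler"), ("TicketNumber", "T-1001")],
    "PNR-2": [("PassengerName", "B. Flyer"), ("TicketNumber", "T-1002")],
}

NUM_TICKET_FOLDERS = 8  # assumed size of the root cabinet's hash table
ticket_root_cabinet = [[] for _ in range(NUM_TICKET_FOLDERS)]


def folder_for(ticket_number):
    # The search key selects one folder in the root cabinet.
    return zlib.crc32(ticket_number.encode()) % NUM_TICKET_FOLDERS


def index_ticket(ticket_number, pnr_ref):
    # The index record pairs the search key value with a reference to the PNR folder.
    ticket_root_cabinet[folder_for(ticket_number)].append((ticket_number, pnr_ref))


def pnr_ref_by_ticket(ticket_number):
    # A single call: locate the folder, then match the key among its records.
    for key, pnr_ref in ticket_root_cabinet[folder_for(ticket_number)]:
        if key == ticket_number:
            return pnr_ref
    return None


index_ticket("T-1001", "PNR-1")
index_ticket("T-1002", "PNR-2")
records = reservation_cabinet[pnr_ref_by_ticket("T-1002")]
```

The returned reference is then used to read the records in the PNR folder directly, without searching the Reservation cabinet.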

For the use case that involves forms of ID, access is more complicated than accessing the PNR by ticket number because the ID is more closely related to the specific customer than a specific reservation.

You can set up an index similar to ticket number, but there is a trade-off. That is, when a PNR is removed from the system, the customer information (and the index) is also removed. By separating the customer information into its own cabinet, you can reuse the customer information when you make new reservations. This consideration is significant for performance when you add new reservations because all of the customer-related index information is in place after the customer makes their first reservation.

Similar to the reservation information, the customer information is stored in multiple records within a folder. Some data duplication occurs between the customer information and what is stored in the reservation for performance reasons. When a reservation is retrieved by using ticket information, you do not need to go into the customer cabinet because the information is in the reservation. The data that is duplicated is infrequently updated. It is more expensive to update information if it is in multiple places. If the data needs to be updated frequently, it might be better to keep one copy and use references to access it from multiple places.

In Figure 5, the customer folder has a segment record (highlighted) that contains a reference to a PNR folder.

Figure 5 Using a segment record containing a reference to a PNR (CustomerInfo cabinet; Customer folder with Name, FF #, Form of ID, Segment, Credit Card, Phone, and e-mail records; the Segment record references the PNR)

As stated earlier, a customer can have multiple reservations, and a single reservation can contain multiple flights. For these use cases, multiple segment records might exist, where two segment records can point to different PNRs (multiple reservations) or to the same PNR (one reservation that involves two flights).

With this structure for the CustomerInfo cabinet, you can now set up an index to retrieve a PNR based on a customer’s form of ID. Similar to the ticket index, a root cabinet is defined with the form of ID as the search key, and the ID value is associated with a reference to the customer folder.

Using the form of ID as a method to retrieve the PNR involves an extra step, compared to using a ticket number. The form of ID is used as an index, but you get a reference to the customer folder, not a reference to the PNR. Therefore, the segment record must be read to obtain the reference to the PNR, as shown in Figure 6. The path length to access the PNR by using a form of ID is longer than when you use the ticket index. However, the path length to add the PNR to the form-of-ID-related index is similar to adding the ticket index because, when the customer exists, you need to add only the segment reference.

Figure 6 Reading a segment record to obtain the PNR reference (Form of ID root cabinet, IDs folder, and ID Value record; CustomerInfo cabinet, Customer folder, and Segment record that references the PNR)

You can set up indexes for other customer-related information, such as frequent flyer number or phone number, in a similar way to what was done here for the form-of-ID-related index.
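The two-step lookup through the customer folder can be sketched as follows. The dictionaries and the names `id_index`, `customer_cabinet`, `pnrs_by_form_of_id`, and `add_reservation` are hypothetical stand-ins chosen for the example, not product interfaces.

```python
# Hypothetical CustomerInfo cabinet: customer reference -> customer folder.
customer_cabinet = {
    "CUST-7": {
        "Name": "A. Traveler",
        "FormOfID": ("passport", "P1234567"),
        # One segment record per flight; two segments can share one PNR.
        "Segment": ["PNR-1", "PNR-1", "PNR-4"],
    }
}

# Hypothetical form-of-ID root cabinet: ID value -> customer folder reference.
id_index = {("passport", "P1234567"): "CUST-7"}


def pnrs_by_form_of_id(id_type, id_value):
    # Step 1: the index yields a reference to the customer folder, not to the PNR.
    customer_ref = id_index.get((id_type, id_value))
    if customer_ref is None:
        return []
    # Step 2: read the segment records to obtain the PNR references.
    segments = customer_cabinet[customer_ref]["Segment"]
    return list(dict.fromkeys(segments))  # drop duplicates, keep order


def add_reservation(customer_ref, pnr_ref):
    # For an existing customer, only a segment reference is added.
    customer_cabinet[customer_ref]["Segment"].append(pnr_ref)
```

The read path takes two hops, but adding a reservation for a known customer touches only the customer folder, because the form-of-ID index entry is already in place.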

Distribution methods

The use cases to query or update a reservation by using an agent ID and date or by using a phone number depend on the selection of a reasonable distribution method. The folders in a root cabinet are placed into a hash table by using the search key as a hash value. The hash value, the size of the hash table (maximum number of folders), and the algorithm that is used for hashing together determine the distribution of the records across all of the folders in the root cabinet. Determining the best size and algorithm is a balance between space requirements and performance. If space is not an issue, you choose a distribution algorithm that can spread your data so that you can have, at most, one record per folder in the root cabinet. This approach can eliminate collisions and the need to compare the record against the search key value.
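The trade-off between table size and collisions can be explored with a small experiment. The sketch below is illustrative only: it uses CRC-32 as the hashing algorithm and synthetic ticket-style keys, both of which are assumptions and not what WebSphere Transaction Cluster Facility uses.

```python
import zlib
from collections import Counter


def folder_occupancy(keys, num_folders):
    # Number of records that land in each folder of the root cabinet.
    return Counter(zlib.crc32(k.encode()) % num_folders for k in keys)


def collisions(keys, num_folders):
    # Records that share a folder with an earlier record and therefore
    # need a key comparison when the folder is searched.
    occupancy = folder_occupancy(keys, num_folders)
    return sum(count - 1 for count in occupancy.values() if count > 1)


keys = [f"T-{i}" for i in range(1000)]
for size in (64, 1024, 65536):
    print(size, collisions(keys, size))
```

A smaller table saves space but forces more records into each folder. A larger table approaches one record per folder at the cost of many empty folders.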

For a large database, such as the one in this case study with the number of PNRs defined to be 100 million, an enormous amount of space would be consumed for each index. Consider the Agent ID example. The case study defined the number of agents to be 100,000 with reservations kept in the system for 365 days. Using the Agent ID and date as the search key would result in 36.5 million unique values (100,000 agents multiplied by 365 days). Because the frequency of the Agent ID-based query or update is only 1%, the improved performance was not worth the space needed for the large number of entries that are required.

The alternative solution is to create a multilevel index structure as shown in Figure 7.

Figure 7 Using a multilevel index structure (Agent root cabinet, Agent ID, AgentDate, Date, Reservation, and PNR)

It takes longer to access the data by using multiple indexes, but this method requires less space. The first-level index is used to find data that is associated with a particular agent based on the agent’s ID. The second-level index is set up by date. Date folders are created only when an agent creates a reservation on that particular date, which minimizes the number of folders that are created as part of the second-level index.
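A multilevel index of this kind can be approximated with nested dictionaries, where the second level is created lazily. The names `agent_root_cabinet`, `index_reservation`, and `pnrs_by_agent_and_date` and the sample IDs are hypothetical and serve only to illustrate the idea.

```python
# First level: agent ID -> that agent's date folders.
# Second level: date -> list of PNR references.
agent_root_cabinet = {}


def index_reservation(agent_id, date, pnr_ref):
    date_folders = agent_root_cabinet.setdefault(agent_id, {})
    # A date folder comes into existence only when the agent
    # creates a reservation on that date.
    date_folders.setdefault(date, []).append(pnr_ref)


def pnrs_by_agent_and_date(agent_id, date):
    # Two lookups instead of one, in exchange for far fewer index entries.
    return agent_root_cabinet.get(agent_id, {}).get(date, [])


index_reservation("AG-1", "2013-05-01", "PNR-1")
index_reservation("AG-1", "2013-05-01", "PNR-2")
index_reservation("AG-1", "2013-05-03", "PNR-3")
```

In this sketch, agent `AG-1` has only two date folders, even though the retention period spans many more dates.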

The last use case involves accessing the PNR by using a flight number and date. The number of flights or the size of the data is not a factor as it was for the use case with the Agent ID and date as input. Instead, it is more a consideration of the data to use as a hash value. Not all data is ideal as a hash value.

WebSphere Transaction Cluster Facility offers the DirectOrdinalSpecification option, where access to folders is based on an ordinal number rather than a search key value. By using the DirectOrdinalSpecification option, you can create your own method for finding and placing data into root cabinet folders. It works well for a fixed or managed set of data values (such as flight numbers and dates) that can be mapped easily to an ordinal number.

In this use case (Figure 8), each combination of flight number and date is assigned to an ordinal number in a table. To access the PNR by using a flight number and date, a binary search is done on the table to find the ordinal number that is assigned to the flight and date. The ordinal number is then used to access the flight record that contains references to all of the PNRs that are associated with that flight.

Figure 8 The DirectOrdinalSpecification option in WebSphere Transaction Cluster Facility (Flights root cabinet, FlightOrdinal, and FlightRes; Reservation cabinet and PNR)
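The table lookup described for this use case can be sketched with Python's `bisect` module. The sorted table, the flight numbers, and the helper names (`ordinal_for`, `add_pnr`, `pnrs_for_flight`) are assumptions made for illustration. The sketch shows only the mapping from flight and date to an ordinal number, not how the DirectOrdinalSpecification option itself is configured.

```python
from bisect import bisect_left

# Managed, sorted table of (flight number, date); the position is the ordinal.
flight_table = sorted([
    ("XY100", "2013-05-01"),
    ("XY100", "2013-05-02"),
    ("XY200", "2013-05-01"),
])

# One root cabinet folder per ordinal, holding references to PNRs.
flight_folders = [[] for _ in flight_table]


def ordinal_for(flight_number, date):
    # Binary search on the table to find the assigned ordinal number.
    key = (flight_number, date)
    i = bisect_left(flight_table, key)
    if i < len(flight_table) and flight_table[i] == key:
        return i
    raise KeyError(key)


def add_pnr(flight_number, date, pnr_ref):
    flight_folders[ordinal_for(flight_number, date)].append(pnr_ref)


def pnrs_for_flight(flight_number, date):
    # The ordinal goes straight to the folder; no hashing or key comparison.
    return flight_folders[ordinal_for(flight_number, date)]


add_pnr("XY200", "2013-05-01", "PNR-1")
add_pnr("XY200", "2013-05-01", "PNR-2")
```

Because every flight and date pair has its own ordinal, no two pairs share a folder, so collisions do not arise for this index.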

Making updates

When you make updates to WebSphere Transaction Cluster Facility data, by adding new records or by modifying existing ones, WebSphere Transaction Cluster Facility holds locks on the folders where updates are being made. For example, if an update is being made to information in a PNR, WebSphere Transaction Cluster Facility locks that specific PNR folder.

In the example data model, each PNR is in its own folder, so that there is no contention when you update the PNR. However, updating information in a PNR that is used as an index involves more than just updating the PNR. For example, when you update a ticket number, the PNR and the index records that use the ticket number need to be updated. After the PNR is updated, a new index is created based on the new ticket number. After the new ticket number index is created, the old index can be removed.

When processing indexes, WebSphere Transaction Cluster Facility minimizes lock contention by holding the lock on the folder that contains the new ticket number only long enough to create the index. Yet a large amount of contention can still occur if the data is distributed poorly, such as when many ticket numbers go to the same folder. In this case, updating any ticket number in the folder requires the folder lock to be held for a longer time. It also increases the likelihood that another transaction needs the same folder lock and must wait (lock contention), which impacts overall system performance. By mapping the ticket numbers to different folders, updates to different ticket numbers can proceed with minimal lock contention, resulting in better overall system performance.
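The update sequence and the short-lived folder locks can be modeled with one lock per index folder. This is a simplified sketch under stated assumptions: the locks are ordinary `threading.Lock` objects, the hash is CRC-32, and every name in the code is hypothetical. Actual locking in WebSphere Transaction Cluster Facility is handled by the product and the database.

```python
import threading
import zlib

NUM_FOLDERS = 16  # assumed number of folders in the ticket root cabinet
index_folders = [{} for _ in range(NUM_FOLDERS)]
folder_locks = [threading.Lock() for _ in range(NUM_FOLDERS)]


def folder_of(ticket_number):
    return zlib.crc32(ticket_number.encode()) % NUM_FOLDERS


def add_index(ticket_number, pnr_ref):
    i = folder_of(ticket_number)
    with folder_locks[i]:  # held only while the index record is created
        index_folders[i][ticket_number] = pnr_ref


def remove_index(ticket_number):
    i = folder_of(ticket_number)
    with folder_locks[i]:
        index_folders[i].pop(ticket_number, None)


def lookup(ticket_number):
    return index_folders[folder_of(ticket_number)].get(ticket_number)


def change_ticket_number(pnr, pnr_lock, new_ticket):
    with pnr_lock:                    # 1. update the PNR folder itself
        old_ticket = pnr["ticket"]
        pnr["ticket"] = new_ticket
    add_index(new_ticket, pnr["id"])  # 2. create the new index entry
    remove_index(old_ticket)          # 3. then remove the old entry


pnr = {"id": "PNR-1", "ticket": "T-1001"}
pnr_lock = threading.Lock()
add_index("T-1001", "PNR-1")
change_ticket_number(pnr, pnr_lock, "T-2002")
```

Each index step takes and releases one folder lock on its own, so two updates contend only when their ticket numbers map to the same folder. This is why a good distribution of ticket numbers across folders matters.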

Data model summary

The WebSphere Transaction Cluster Facility data model uses a hierarchy of cabinets, folders, and records, as shown in Figure 9. Logical groupings of data form cabinets that hold folders. Data is stored in records, and related records are placed into the same folder for efficient retrieval and update. Indexes are defined by using root cabinets that provide fast access to folders. The index-based distribution method that is used with root cabinets is an important factor to minimize contention for updates and to ensure performance for retrieval.

Figure 9 Complete WebSphere Transaction Cluster Facility data model hierarchy (Form of ID, Ticket, Flights, and Agent root cabinets with their IDs, Tickets, FlightOrdinal, and Agent ID folders and their ID Value, Ticket #, FlightRes, AgentDate, and Date records; Customer folder with Segment record; Reservation cabinet with PNR ID and PNR)

Performance tests

The performance tests involve the same large customer in the travel and tourism industry that handles airline reservations. All information that is related to a reservation is kept in a PNR document. A reservation can contain multiple passengers and multiple flight segments. Some transactions work with a single PNR, such as creating a reservation or updating an existing reservation. Other transactions involve multiple PNRs, such as showing all reservations for a customer or generating a passenger manifest for a flight.

An agent or customer can locate a PNR in multiple ways. The query PNR transaction allows the following forms of input among other forms:

PNR record locator (also referred to as the PNR ID)

Ticket number

Form of ID (driver’s license, passport, or other government issued ID)

Credit card number and type

Phone number

Email address

Passenger name, flight number, and date

Passenger name, departure city, and date

Similarly, the update PNR transaction can have multiple variations such as the following examples:

Adding or changing a phone number

Updating nonindexed data such as a PNR history record

Adding a flight segment

Canceling an existing flight for one or more passengers

Changing a flight (adding a new flight and canceling an old flight)

Creating or updating a reservation can require updating multiple database records (changes must be persisted to a storage device). These records must be updated in the same transaction scope, including the PNR, the customer database records for each passenger, and the inventory and manifest records for each flight.

The test environment consisted of five WebSphere Transaction Cluster Facility server nodes, each running on its own 20-core IBM POWER7® (P780) box. The WebSphere Transaction Cluster Facility database contained 100 million PNRs, 50 million unique customers, and 1 million flights. A client load generator generated transaction request messages and sent them across the network to the server. The client load generator was cluster unaware. That is, any transaction for any PNR could be sent to any of the WebSphere Transaction Cluster Facility server boxes. The environment was as close to a real production system as possible, including high availability (HA) of the server and database layers. It was also a fully functional, indexed database that could handle multiple transaction types.

The following terminology is used in the test descriptions:

Response time: The amount of time it takes WebSphere Transaction Cluster Facility to process a transaction. This is the time between when WebSphere Transaction Cluster Facility receives a request message from the network and when WebSphere Transaction Cluster Facility sends the response to that message.

Full message mix: A workload in which the ratio of PNR query, update, create, and delete actions is 100:10:1:1. In a full message mix, for every 112 transactions, 100 transactions are to query a PNR, 10 transactions are to update a PNR, one transaction is to create a PNR, and one transaction is to delete a PNR.
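To make the 100:10:1:1 ratio concrete, the following sketch derives the share of each transaction type and the rate of each type at a given total message rate. The function name `expected_rates` and the sample rate of 11,200 transactions per second are illustrative assumptions and are not figures from the tests.

```python
from fractions import Fraction

# Full message mix as defined above: query : update : create : delete.
MIX = {"query": 100, "update": 10, "create": 1, "delete": 1}
TOTAL = sum(MIX.values())  # 112 transactions per cycle

# Exact share of each transaction type, for example 100/112 for queries.
shares = {kind: Fraction(count, TOTAL) for kind, count in MIX.items()}


def expected_rates(total_tps):
    # Transactions per second of each type at a given total rate.
    return {kind: total_tps * count / TOTAL for kind, count in MIX.items()}


rates = expected_rates(11200)  # hypothetical total rate, chosen for round numbers
```

Queries make up roughly 89% of a full message mix (100 out of 112), so the mix is strongly read-dominated.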

Test results: The results of the tests are specific to the test environment, test data, network, and other variables for this paper. These results will vary depending on your specific circumstances.

Test 1: Query PNR mix

The goal of this test was to show that WebSphere Transaction Cluster Facility can handle high query request volumes, because querying a PNR is the most frequent type of transaction. In the test environment, query PNR-type transactions were sent to WebSphere Transaction Cluster Facility at a rate of 100,000 transactions per second. At this sustained rate, the average response time remained constant and was less than 3 milliseconds.

Test 2: Update PNR mix

The goal of this test was to show that WebSphere Transaction Cluster Facility can handle high database update rates. In the test environment, update PNR-type transactions were sent to WebSphere Transaction Cluster Facility at a rate of 18,000 transactions per second. At this sustained rate, the average response time remained constant and was less than 18 milliseconds.

Test 3: Transaction scalability

The goal of this test was to show that, as message rates increase, WebSphere Transaction Cluster Facility can be scaled by adding more nodes to the cluster. The test began with one active WebSphere Transaction Cluster Facility node, to which traffic was sent by using the full message mix at increasing rates until the maximum capacity of the node was reached. This message rate is called M1. A second node was then activated, and traffic was increased until the maximum capacity of both nodes was reached. This message rate is called M2. This procedure was repeated by adding third, fourth, and fifth nodes. Message rate M5 represents the message rate with all five nodes active.

Throughput scaled in a near-linear fashion as nodes were added. For example, the throughput of two nodes (M2) was 1.99 times the throughput of one node (M1). The throughput of five nodes (M5) was 4.97 times the throughput of one node.
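One way to read these multiples is as scaling efficiency, that is, the measured speedup divided by the ideal linear speedup for the same number of nodes. The Python sketch below assumes only the two ratios reported above (M2 and M5 relative to M1). The names REPORTED_SPEEDUP and scaling_efficiency are illustrative, and ratios for three and four nodes are omitted because they are not stated in this paper.

```python
# Throughput multiples reported for Test 3, relative to the one-node rate M1.
REPORTED_SPEEDUP = {1: 1.00, 2: 1.99, 5: 4.97}


def scaling_efficiency(nodes, speedup):
    """Fraction of ideal linear scaling achieved with `nodes` active nodes."""
    return speedup / nodes


for nodes, speedup in REPORTED_SPEEDUP.items():
    efficiency = scaling_efficiency(nodes, speedup)
    print(f"{nodes} node(s): {efficiency:.1%} of linear scaling")
```

With the reported figures, both the two-node and five-node configurations retain more than 99% of ideal linear throughput.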

Figure 10 illustrates the throughput as nodes were added.

Figure 10 Throughput as extra nodes are added (vertical axis marked from N to 5N; horizontal axis: number of WTCF nodes, 1 to 5)


Test 4: Database scalability

The goal of this test was to show that no increase in response time occurs as the size of the WebSphere Transaction Cluster Facility database increases. The first part of the test involved running the full message mix at a certain message rate against a smaller database that contained 100,000 PNRs. The second part involved running the full message mix at the same message rate against a larger database that contained 100 million PNRs. Even though the database was 1,000 times larger, the average response time in the second part of the test was identical to the average response time in the first part.

Test 5: Burst of transactions

The goal of this test was to show that, even when you process a heavy workload, WebSphere Transaction Cluster Facility can handle large increases or spikes in message rates. The initial setup involved the full message mix at a rate of 21,000 transactions per second, with the system running steady at that rate for a predefined period.

Next, the message rate was increased to a steady 31,000 transactions per second, and WebSphere Transaction Cluster Facility immediately reacted to the increase in traffic. At time T, WebSphere Transaction Cluster Facility was processing 21,000 transactions per second. At time T plus one second, WebSphere Transaction Cluster Facility was processing 31,000 transactions per second and remained at that rate during the test. The average response time remained constant from time T until the end of the test.

Figure 11 shows the transaction rates and average response times during the test.

Figure 11 Rates and response times during bursts of transactions (plotted series: transaction rate and average response time; horizontal axis: time in seconds, marked Start, T, T+1, End)

Test 6: Stability

The goal of this test was to show that WebSphere Transaction Cluster Facility can process high transaction workloads consistently for long periods of time. The full message mix was run at 50,000 transactions per second for 18 hours. The average response time was less than 5 milliseconds and remained consistent throughout the test.


Even higher transaction rates

The tests that are described in the previous sections were for a large volume PNR workload. Yet these tests demonstrated only a small fraction of the full capabilities of WebSphere Transaction Cluster Facility. WebSphere Transaction Cluster Facility can scale vertically by adding more cores to a node. It can scale horizontally by adding more nodes to the cluster.

The full message mix PNR workload in this test environment showed a result of 50,000 transactions per second across five WebSphere Transaction Cluster Facility nodes, each with 20 cores and running at only 75% of processor capacity. Considering the observed scalability characteristics of WebSphere Transaction Cluster Facility, a projection was made for this test environment. The projection is that 128 nodes, with 80 cores each (plus sufficient DASD, disk control units, and network bandwidth), can process more than 4 million transactions per second in the full message mix PNR workload.
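The paper does not state how the projection was calculated. As a rough plausibility check only, the Python sketch below assumes that throughput scales in direct proportion to the total core count at the same 75% processor utilization. That assumption and the function name linear_projection are illustrative and are not the authors' method.

```python
def linear_projection(base_tps, base_nodes, base_cores_per_node,
                      nodes, cores_per_node):
    """Scale a measured rate in proportion to the total number of cores.

    Assumes perfectly linear scaling, which is a simplification.
    """
    per_core_tps = base_tps / (base_nodes * base_cores_per_node)
    return per_core_tps * nodes * cores_per_node


# Measured: 50,000 transactions per second on 5 nodes with 20 cores each.
measured_tps = 50_000
projected = linear_projection(measured_tps, 5, 20, 128, 80)
print(f"{projected:,.0f} transactions per second")
```

Under this purely proportional assumption, the result is about 5.1 million transactions per second, so the stated figure of more than 4 million leaves some margin for less-than-linear scaling.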

Summary

The demands of OLTP will continue to challenge existing database solutions. IBM WebSphere Transaction Cluster Facility provides a continuously available, high-performance, scalable solution for large-volume online transaction processing. The DB2 pureScale feature enables WebSphere Transaction Cluster Facility to synchronize database workloads across the servers in the cluster. It also allows WebSphere Transaction Cluster Facility to achieve cross-server update times on the order of microseconds, which is key to the product’s ability to scale in a near-linear fashion to support expanding workloads. Data integrity is ensured through metadata that automatically performs data type checking, and transaction integrity is maintained across clustered systems by storing all data on disk and not in memory.

For workloads where the data cannot be partitioned, the powerful WebSphere Transaction Cluster Facility networked data model provides a number of advantages over relational technologies that rely on partitioning. The unique benefit of this data model lies in its ability to efficiently handle transactions that require consistent updates to multiple logical pieces of data. WebSphere Transaction Cluster Facility is able to scale these update-type use cases by minimizing the number of locks and the number of I/Os required for data operations.

WebSphere Transaction Cluster Facility uses a highly customized, networked data model that defines the relationships between collections of heterogeneous customer-defined records. This database definition approach allows for high performance data traversal, reads, and updates that minimize index collisions and consolidate data for minimal I/O operations.

The airline case study demonstrates that WebSphere Transaction Cluster Facility is a viable option for a large, high-volume online transaction system. WebSphere Transaction Cluster Facility successfully met and exceeded the various requirements, showcasing the characteristics of scalability, reliability, consistency, and stability that are required in any mission-critical system. WebSphere Transaction Cluster Facility provided a single view of a database that contains 100 million PNRs from a multinode complex. Projections from the measured results also indicate that it can process more than 4 million transactions per second in a larger environment. This case study showed that WebSphere Transaction Cluster Facility can handle enormous workloads, such as those of an airline, both today and for many years to come. Explore how this distinctive technology can help you meet your short-term and long-term transaction processing needs.

For more information about WebSphere Transaction Cluster Facility, go to: http://www.ibm.com/software/webservers/wtcf


The team who wrote this paper

This paper was produced by a team of specialists from around the world at the International Technical Support Organization (ITSO), Raleigh Center.

Robert Blackburn is a Senior Statistician with IBM in Poughkeepsie, New York (NY). He has more than 30 years of experience in the field of performance analysis. His specialties include probability, queueing theory, and applied statistics. Robert is the performance analyst for the z/TPF and WebSphere Transaction Cluster Facility products and consults with z/TPF customers on matters of performance and design. He also works with the IBM hardware design teams to enable optimum performance in processor and storage systems. Robert has a doctorate degree in mathematics from The Graduate Center at The City University of New York.

Jonathan Collins is a Product Line Manager for the WebSphere Transaction Cluster Facility, Airline Control System, and Transaction Processing Facility products in the IBM Software Group. Jonathan handles financial management, acquisitions, pricing, marketing, new business opportunities, partner enablement, vendor relationships, strategy, and product direction. He ensures that the EPS Industries portfolio of products meets the transaction processing needs of C-level and LOB executives. His background includes software development and management in several fields, and he has written extensively about the value of IBM transaction processing products. Jonathan has a Bachelor of Arts degree from Union College with a major in English and minor in Computer Science.

Jamie Farmer is a Senior Software Engineer at IBM in Poughkeepsie, NY. Jamie is a lead developer for two leading high volume transaction processing platforms: z/TPF and IBM WebSphere Transaction Cluster Facility. He has over 15 years of experience in designing and developing components of these platforms, including network communications, cryptography and encryption, database management, and enterprise integration. Jamie has a Bachelor of Science degree in computer science from Ithaca College and a master's degree in computer science from Marist College.

Mark Gambino is a Senior Technical Staff Member at IBM in Poughkeepsie, NY. He is a senior architect for the Transaction Processing Facility and WebSphere Transaction Cluster Facility high-end transaction processing product lines. Mark has over 24 years of experience in designing, developing, and testing numerous components of these platforms.

Colette Manoni is a Senior Technical Staff Member and Architect for the Transaction Processing Facility family of products in IBM Software Group. With over 28 years of experience in developing large-scale transaction systems, Colette is responsible for the requirements, functional designs, and overall product solutions. She is also a Master Inventor and holds over 20 patents in the US, Japan, China, and several other countries. Colette has a Bachelor of Science degree from Rensselaer Polytechnic Institute.

Joshua Wisniewski is a Senior Software Engineer at IBM in Poughkeepsie, NY. He has over 12 years of experience in designing, developing, and maintaining various features for the high-volume transaction processing platforms of z/TPF and IBM WebSphere Transaction Cluster Facility. He was a technical lead, customer liaison, developer, architect, and project manager for z/TPF debugger technologies, various z/TPF tools, and WebSphere Transaction Cluster Facility applications development. Joshua has a Bachelor of Science degree from Clarkson University with a major in computer engineering.




Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used. Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not grant you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM websites are provided for convenience only and do not in any manner serve as an endorsement of those websites. The materials at those websites are not part of the materials for this IBM product and use of those websites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.

Any performance data contained herein was determined in a controlled environment. Therefore, the results obtained in other operating environments may vary significantly. Some measurements may have been made on development-level systems and there is no guarantee that these measurements will be the same on generally available systems. Furthermore, some measurements may have been estimated through extrapolation. Actual results may vary. Users of this document should verify the applicable data for their specific environment.

Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products.

All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.

© Copyright International Business Machines Corporation 2013. All rights reserved.

Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.


This document REDP-4910-00 was created or updated on March 13, 2013.

Send us your comments in one of the following ways:

Use the online Contact us review Redbooks form found at:

ibm.com/redbooks

Send your comments in an email to: redbooks@us.ibm.com

Mail your comments to:

IBM Corporation, International Technical Support Organization

Dept. HYTD Mail Station P099

2455 South Road

Poughkeepsie, NY 12601-5400 U.S.A.


Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

AIX®

DB2®

IBM®

Power Systems™

POWER7®

pureScale®

Redbooks®

Redpaper™

Redbooks (logo)®

Tivoli®

WebSphere®

The following terms are trademarks of other companies:

Java, and all Java-based trademarks and logos are trademarks or registered trademarks of Oracle and/or its affiliates.

Other company, product, or service names may be trademarks or service marks of others.
