Assessing Structure in Monetary Policy Models Ragnar Nymoen University of Oslo ∗

Assessing Structure in Monetary Policy Models∗

Ragnar Nymoen

University of Oslo

2 May 2005.

Abstract

Structural models carry positive connotations in economics. Hence proponents of alternative theories and modeling strategies compete for the right to

label their models as structural. When policy decisions hinge on model properties, the debate about ‘structure’ has potentially important consequences.

The consensus view today is to define “structural models” as synonymous with

systems of equations that are lifted from modern Walrasian macroeconomics.

In this paper we discuss a wider operational definition of a model’s structural
properties. We define structural property as a many-faceted model feature,

e.g., theory content, explanatory power, stability and robustness to regime

shifts. It follows that structural properties, and a structural representation of

the economy, are not guaranteed by a close connection to microfoundations. If

the modelling purpose is to assist monetary policy, a stand must be taken on

issues relating to wage and price making behaviour. Therefore, our examples

are i) the New Keynesian Phillips curve, and ii) a non-Walrasian approach

to inflation modelling based on the idea that in modern economies the rents
generated by the operation of the firm are shared between workers and firms.

1 Introduction

Different monetary policy models are often characterized along a (general) theory-data dimension, as discussed in Pagan (2003). The trade-off at first appears to be evident: Direct translation of theoretical relationships to econometric specifications is likely to lead to misspecified models with inefficient estimates and unnecessarily bad forecasts. On the other hand, relevant theory is needed to get a clear interpretation of estimation results and of model properties.

The appeal of the postulated trade-off between theoretical and empirical coherence (Pagan’s terminology) is that both model builders and model consumers (e.g., those using models as an aid in policy decision making) can recognize the balance between theoretical input and data instigated formulation of the model’s equations. But the intuitive appeal of the Pagan-frontier can also be misleading, for example if it is used as a rationale for picking a particular model ‘along the frontier’. After all, the Pagan-frontier is just a construction, and there is no practical way of telling whether a particular structural VAR or a particular dynamic stochastic general equilibrium model, DSGE, is ‘on the frontier’, or whether the only difference between them is the degree of data/theory coherency. In practice, the (real) reasons for choosing one model or the other are much more complex and include both established knowledge and subjective beliefs about what are the best theories and econometric techniques to use. Moreover, the preferences that model producers have about the theory-data balance (and other aspects of the model specification and evaluation) are in general not shared by the consumers of models, see Granger (1990).

∗ This paper is an extension of Nymoen (2002) and builds on a lecture given at the Economic Research Centre, Department of Economics, Middle East Technical University, Ankara, 7 April 2003. Thanks to Bjørn Naug for comments and discussion. It also draws on joint work with Gunnar Bårdsen, Øyvind Eitrheim and Eilev S. Jansen, see Bårdsen et al. (2005). The numerical results were produced by PcGive 10, Doornik and Hendry (2001) and EViews 5 (provided by Quantitative Micro Software). Please address correspondence to: Ragnar Nymoen, University of Oslo, Department of Economics, P.O. Box 1095 Blindern, N-0317 Oslo, Norway. Phone: +47 22 85 51 48. Fax: +47 22 50 35 35. Internet: ragnar.nymoen@econ.uio.no

A possible side-effect of Pagan’s trade-off figure is to encourage attitudes saying that ‘if only my theory model is good enough (state of the art)’ then ‘anything goes’ in terms of degree of empirical coherence. If the sole purpose of the model is to clearly express a theory, then perhaps the ‘anything goes’ position is all right, since a theory does not explain the observational data of the real world (philosophers of science would replace real world by ‘phenomenal system’, econometricians by data generating process, DGP), but the counterfactual data of an isolated and idealized physical system, see Davis (2000, pp. 207–208). However, it is plain that models used for monetary policy are not developed with pure theory testing in mind, but instead with the purpose of explaining the current economic situation, and of providing forecasts of the future rate of inflation in particular, as an aid to the policy decision process.

From this perspective, it is intriguing to note that a main development in

macroeconomics in recent years is that New Keynesian DSGE models have been

widely adopted as suitable models for monetary policy analysis. These models, though modified with nominal price rigidity, have a real business cycle model as their core. This means that what was originally offered as a normative (Ramsey) model, with infinitely lived representative agents with perfect foresight and perfectly competitive markets, has been completely “transformed into a model for interpreting last year’s and next year’s national accounts” (Hahn and Solow (1997, p. 2)).

In this paper, one of the main theses is that since the New Keynesian models are now used for explanation of real world observations, they are also open to the full set of modern econometric evaluation methods. However, until recently the evaluation of for example the New Keynesian Phillips curve, NPC, has been fairly limited and of a corroborative nature. A limited range of goodness-of-fit tests have been used, and the consensus view on that basis is that the NPC is a success: it provides economics with a model of inflation dynamics that scores high on theoretical coherence (of course), and also reasonably well in terms of degree of data coherence. In section 3 we review the results of more complete evaluation exercises. We conclude in a completely different way than the consensus view: the empirical NPCs that hitherto have been taken as support for the underlying theoretical model, are in fact without the claimed theoretical content. In terms of a terminology developed in

section 2, the NPC therefore scores low in terms of the structural properties that we

typically want in macroeconometric models (beside theoretical consistency: ability

to explain the data, stability and constancy, and encompassing earlier findings).

Figure 1: A modified Pagan Frontier

The New Keynesian Phillips curve (and full-blown DSGEs) are part of the reductionist programme of microfoundations to macroeconomics which has become so dominant that it is now ‘mainstream’, first in academic economics and increasingly also in central banks (and ministries?). This programme is Walrasian in its methodological orientation since it requires the model to be fully and correctly specified in

one step. In section 4 we ask whether it is reasonable to expect that econometric

models stemming from this programme will obtain a ‘high score’ in terms of structural properties. Based on the work of Kevin Hoover and others, the answer seems

to be negative. Thus if structural model properties are wanted, there is reason to

try to develop alternative modelling methodologies.

In section 5 we note that, ever since the invention of macroeconomics (Frisch

(1933)), there are traces of a parallel programme in macroeconomics which can be

labelled Marshallian because of its insistence on a high level of understanding of

the individual markets (detailed sector modelling), price making behaviour, and a

stepwise modelling approach to the (general equilibrium) macro model, cf. Hoover

(2004) and De Vroey (2004). In line with this view, section 6 presents a Marshallian

counterpart to the New Keynesian Phillips curve. It consists of a game theoretic model of nominal wage setting, together with a monopolistic price setting schedule, and delivers precise hypotheses about cointegration relationships. Given cointegration (and identification) the theoretical framework can be extended to the error-correction model of wage-price dynamics, with lines going back to Sargan (1964), and which has a range of interesting economic properties. For example, it implies a steady state where the real wage is constant at a given level of unemployment,

hence a natural rate of unemployment is not implied (and is not needed to avoid

destabilizing wage inflation), so there is a rationale for a more detailed modelling

of the real economy in addition to a wage-price core model. In an extended empirical example we show that this approach delivers a model with some structural

properties in place. For example, it explains the data reasonably well, and several important model features in terms of economic interpretation are constant across identifiable regime shifts. Finally, the post Walrasian model of the inflation spiral encompasses both old and new (Keynesian) Phillips curves.

We end this introduction by noting how the results and views of this paper could lead to a modification of Pagan’s figure of modelling-conflicts, which we referred to at the beginning of the Introduction. First, we agree that theoretical interpretation and clarity are important structural traits, so we would keep the same vertical axis as Pagan, labelled Degree of theoretical coherence in figure 1. But we discard the premise that there is only one admissible theoretical framework to build macroeconometric models from: there are at least two, and we represent them as Walrasian and Marshallian in the figure. Along the horizontal axis we have what we think of as an index of score on the different aspects of structure (apart from theoretical consistency of course).

The main message is that models that stem from the Walras programme are likely to score low on the horizontal axis, but by definition they should score high on theoretical coherence (but see the analysis of the NPC below). Marshallian models, as we envisage them, are bound to take a more eclectic approach to the theoretical framework of the macro model, so we cut them off from “maximum score” on the vertical axis. The score of Marshallian models on the horizontal structural property

axis will no doubt depend on the data generating process (some being more difficult

to model than others) and on econometric and statistical methods. It is of practical

importance however, that structural properties are relatively easy to evaluate within

the Marshallian framework, because of the stepwise modelling approach it brings

back into econometrics as a respectable route to model specification.

2 Structural interpretation and structural properties

To newcomers to the discipline, econometrics appears to be filled up with terminology, and even among professionals, confusion is sometimes caused by different

econometricians using different words for the same phenomena. Nevertheless, it more often happens that, because we have a relatively limited disciplinary vocabulary and a wide range of phenomena to describe, very different things pass under the same name, and therefore we are in danger of considering them as identical. One example is the word structure in relation to macroeconometric models. As recently as in the 1980s, most economists would associate the term structural equation simply with an equation in a system of simultaneous equations, as opposed to a reduced form equation in the reduced form (of the structural model).

Today, when the term structural model is used, the vast majority of economists will think of entirely different models than the systems-of-equations of the 1970s. Most likely the kind of model that springs to one’s mind is a model made up of equations that are derived from (or at least consistent with) modern macroeconomic theory of the representative agent, intertemporal optimizing type. In such a model, each equation is said to have a structural interpretation (with ‘deep structural parameters’ that are immune to the Lucas critique). This is unproblematic when the data that the model is used with are the counterfactual observations of an idealized theoretical system. Sometimes, however, and the current situation in monetary economics is such a time, the usage of theory models is extended to observations of the real world. In terms of Pagan’s frontier, this is what happens when a monetary authority chooses a model very close to the theory coherence axis.

We will argue that the new common understanding of the term structural model represents a wrong turn in the development of econometrics since it fails to grasp the distinction between a model’s postulated structural interpretation and its eventual structural properties, which are essential for understanding and forecasting observations generated by the real world economy (the ‘phenomenal system’ or DGP). Structural properties are the gold nuggets that we hope to sift out by ardent, repeated theory-data confrontations and modelling. They are not established by decree.

One example of the now common use of the terms structural equation and

structural model is found in a review of monetary policy and institutions in Norway, Svensson et al. (2002). The group, named Norges Bank Watch, specifically

recommended that the Norwegian Central Bank invest resources into the building

of structural models of the Norwegian economy, to be used for policy simulation and

forecasting. By structural models Norges Bank Watch means modern open economy

macroeconomics, see for example p. 7 and p. 56 of Svensson et al. (2002). In 2004,

the first version of the Norges Bank structural macroeconomic model was commissioned for use in the policy making process. It is a DSGE model, a real business cycle

model with a New Keynesian Phillips curve.

DSGE models are calibrated, or estimated under the assumption that the theoretical disturbances are identical to the residuals. This claim is never really argued but reflects an ambition in the radical research program initiated by Lucas, namely that the goal of economic theory is to produce models that accurately mimic the economy. However, as argued by David Hendry, Kevin Hoover and (I am sure) many others, the claim is quite incredible.1 Basically, because economic data are non-experimental, theoretical ceteris paribus clauses and simplifying assumptions cannot be trusted to carry over to the empirical analysis. As a result, model residuals do not reflect the assumed properties of the disturbances (making inference difficult), omitted variables induce bias in the estimates of the parameters of interest, and the econometric model becomes unstable with respect to extensions of the data set. Econometric techniques have been invented in order to rectify some of these problems, but the validity of these correction methods remains dependent on the initial assumption that the model is basically sound, and that, e.g., autocorrelated residuals are indeed a sign of autocorrelated disturbances of the true model and not of misspecification. This is an example of the strategy of handling a violation of an a priori assumption about the variance of the disturbance by respecifying the variance; it is based on ‘the axiom of correct specification’ (Leamer (1978)) and implicitly interprets the residual as the disturbance. In section 3 we show how this problem goes right to the heart of the new consensus model of monetary policy: the New Keynesian Phillips curve.

1 In the terminology of Spanos (1995), Lucas and Kydland and Prescott and their followers embrace the theory of errors as the basis of econometrics, and abandon the econometrics of Fisher’s experimental methods (and the way it was integrated in the Cowles Commission’s programme), see Hoover (2004).

A main thesis of the present paper is that all models that are used for explanation of the observations of the real macroeconomy should be made subject to

a broad evaluation, regardless of whether they originate close to or far from the

theory-axis of Pagan’s diagram. In particular we oppose the view that a model’s

structural interpretation is a valid argument for by-passing the evaluation stage, or

only doing very limited evaluation.

When the model’s purpose is to understand the real world, its eventual structural properties are conceptually different from its structural interpretation. Heuristically, we take a model to have a high degree of structural property if it explains the data well, is robust to shocks, and is able to fence off challenges from rival models. A model which is a structural representation of the economy does not disintegrate that easily! Structural properties are nevertheless relative to the history, nature and significance of regime shifts. There is always the possibility that the next shocks to the system may incur real damage to a model with high structural content hitherto.

Our definition implies that a model’s structural properties must be evaluated

along several dimensions, and the following seem particularly relevant:

1. Theoretical interpretation.

2. Ability to explain the data.

3. Ability to explain earlier findings, i.e., encompassing the properties of existing
models.

4. Robustness to new evidence in the form of updated/extended data series and

new economic analysis suggesting e.g., new explanatory variables.

Economic analysis (#1) is a main guidance in the formulation of econometric models. Clear interpretation also helps communication of ideas and results among researchers and it structures the debate. However, since economic theories are necessarily abstract and build on simplifying assumptions, it seems self evident that direct

translation of a theoretical relationship to an econometric model must lead to such

problems as biased coefficient estimates, wrong signs of coefficients, and/or residual

properties that contradict any initial assumption of white noise disturbances. The

main distinction seems to be between seeing theory as representing the correct specification, (a la leaving parameter estimation to the econometrician), and viewing

theory as a guideline in the specification of a model which also accommodates institutional features, attempts to accommodate heterogeneity among agents, addresses

the temporal aspects for the data set and so on, see e.g., Granger (1999).

Arguments against “largely empirical models” include, e.g., sample dependency, lack of invariance (the Lucas-critique), unnecessary complexity (in order to fit the data) and chance finding of “significant” variables. Yet, ability to characterize the data (#2) remains an essential quality of useful econometric models, and given the absence of theoretical truisms, the implications of economic theory have to be confronted with the data in a systematic way. Moreover, the mentioned pitfalls of empirically based models can be avoided, see e.g., Granger (1999), Hendry (2002). Due to recent advances in the theory and practice of data based model building, we know that by using general-to-specific (gets) algorithms a researcher stands a good chance of finding a close approximation to the data generating process, see Hoover and Perez (1999), and Hendry and Krolzig (1999), and that the danger of over-fitting is in fact (surprisingly) low.2 Conversely, acting as if the specification is given by theory alone,

with only coefficient estimates left to “fill in”, is bound to result in the econometric

problems noted above and which section 3 below will demonstrate.

There is usually controversy in macroeconometrics, so a new model’s capability of encompassing earlier findings is an important aspect of structure (#3). There

are many reasons for the coexistence of contested models for the same phenomena,

some of which may be viewed as inherent (limited number of data observations,

measurement problems, controversy about operational definitions, new theories).

Nevertheless, the continued use of corroborative evaluation (i.e., only addressing goodness of fit or predicting the stylized facts correctly) may inadvertently hinder the cumulation of evidence. One suspects that there would be huge gains

from a breakthrough for new standards of methodology and practice in the profession.

Ideally, empirical modelling is a cumulative process where models continuously

become overtaken by new and more useful ones. By useful we understand in particular models that are relatively invariant to changes elsewhere in the economy,

i.e., they contain autonomous parameters, see Haavelmo (1944), Johansen (1977),

Aldrich (1989), Hendry (1995b). Models with a high degree of autonomy represent

structural properties: They remain invariant to changes in economic policies and

other shocks to the economic system, as implied by #4 above.3 However, structure

is partial in two respects: First, autonomy is a relative concept, since an econometric

model cannot be invariant to every imaginable shock. Second, all parameters of

an econometric model are unlikely to be equally invariant, and only the parameters

with the highest degree of autonomy represent structure. Since elements of structure

typically will be grafted into equations that also contain parameters with a lower

degree of autonomy, forecast breakdown may frequently be caused by shifts in these

non-structural parameters.4

A strategy that puts a lot of emphasis on forecast behaviour, without a careful evaluation of the causes of forecast failure ex post, runs a risk of discarding models that actually contain important elements of structure. Hence, for example, Doornik and Hendry (1997) and Clements and Hendry (1999, Ch. 3) show that the main source of forecast failure is deterministic shifts in means (e.g., the equilibrium savings rate), and not shifts in such coefficients that are of primary concern in policy analysis (e.g., the propensity to consume). Therefore it may not be necessary to bin a whole model even if it has gone through a rough spell in terms of forecasting. However, failure to adapt to the new regime may be a sign of a deeper source of forecast failure; non-constant derivative coefficients is the most common case (the coefficient of unemployment in a Phillips curve equation would be one example).5 Conversely, models with high structural content will nevertheless lose regularly to simple forecasting rules, see e.g., Clements and Hendry (1999), Eitrheim et al. (1999). Hence different models may be optimal for forecasting and for policy analysis, which fits well with the often heard recommendation of a suite of monetary policy models.

2 Naturally, with a very liberal specification strategy, overfitting will result from gets modelling, but with “normal” requirements of levels of significance, robustness to sample splits etc, the chance of overfitting is small. Thus the documented performance of gets modelling now refutes the view that the axiom of correct specification must be invoked in applied econometrics, Leamer (1978). The real problem of empirical modelling may instead be to keep or discover an economically important variable that has yet to manifest itself strongly in the data, see Hendry and Krolzig (2001). Almost by implication, there is little evidence that gets leads to models that are prone to forecast failure, see Clements and Hendry (2002).

3 See e.g., Hendry (1995a, Ch. 2, 3 and 15.3) for a concise definition of structure as the invariant set of attributes of the economic mechanism.

4 This line of thought may lead to the following practical argument against large-scale empirical models: Since modelling resources are limited, and some sectors and activities are more difficult to model than others, certain equations of any given model are bound to have less structural content than others, i.e., the model as a whole is no better than its weakest (least structural) equation.

Structural breaks are always a main concern in econometric modelling, but

like any hypothesis of theory, the only way to judge the quality of a hypothesized

break is by confrontation with the evidence in the data. Moreover, given that an

encompassing approach is followed, a forecast failure is not only destructive but

represents a potential for improvement, if successful respecification follows in its

wake, cf. Eitrheim et al. (2002).

3 Evaluating a ‘structural’ inflation equation

There is usually a big gulf between measurable complexities of the real economy

and what an econometric model can hope to incorporate. Hence, starting with the

idea that theory is complete (or that we can act as if it is complete) does not solve

any of the problems of empirical modelling in economics, and specifically does not

guarantee a high degree of structural content.

The New Keynesian Phillips Curve, NPC, has emerged as a consensus theory

of inflation in modern monetary economics, largely because of its stringent theoretical derivation, see Galí and Gertler (1999) and Galí et al. (2001), hereafter GG

and GGL. In a short time it has become an integral part of the New Keynesian

Model of monetary policy, as a modernized and state of the art theoretically instigated aggregate supply equation. In terms of the previous section’s list of structural

characteristics, the NPC scores high on theory consistency (#1), as we show below.

However, tests of all the other structural properties show that the NPC has low

structural content.

3.1 The NPC model6

Let $p_t$ be the log of a price level index. The NPC states that inflation, defined as $\Delta p_t \equiv p_t - p_{t-1}$, is explained by $E_t \Delta p_{t+1}$, expected inflation one period ahead conditional upon information available at time $t$, and excess demand or marginal costs $x_t$ (e.g., output gap, the unemployment rate or the wage share in logs):

$$\Delta p_t = b_{p1} E_t \Delta p_{t+1} + b_{p2} x_t + \varepsilon_{pt}, \tag{1}$$

where $\varepsilon_{pt}$ is a stochastic error term. GG have given a specification of the NPC in line with Calvo’s work: They assume that a firm takes account of the expected future path of nominal marginal costs when setting its price, given the likelihood that its price may remain fixed for multiple periods. This leads to a version of the inflation equation (1), where the forcing variable $x_t$ is the representative firm’s real marginal costs (measured as deviations from its steady state value). They argue that the wage share (the labour income share) $ws_t$ is a plausible indicator for the average real marginal costs, which they use in the empirical analysis. A hybrid version of the NPC that uses both $E_t \Delta p_{t+1}$ and lagged inflation as explanatory variables is also provided. Its main rationale is that the hybrid model does not hinge on jump-behaviour of the rate of inflation for stability (see below). Our impression is that it is the hybrid version that is found in most DSGE models commissioned for monetary policy analysis.

5 See Nymoen (2004) for an analysis of a recent failure in inflation forecasting.

6 This part builds on Bårdsen et al. (2004) and Bårdsen et al. (2005, Chapter 7).

Equation (1) is incomplete as a model for inflation, since the status of $x_t$ is left unspecified. In order to analyse the dynamic implication of the NPC we therefore consider the following completing system of stochastic linear difference equations7

$$\Delta p_t = b_{p1} \Delta p_{t+1} + b_{p2} x_t + \varepsilon_{pt} - b_{p1} \eta_{t+1} \tag{2}$$

$$x_t = b_{x1} \Delta p_{t-1} + b_{x2} x_{t-1} + \varepsilon_{xt}, \qquad 0 \le |b_{x2}| < 1 \tag{3}$$

The first equation is adapted from (1), utilizing that $E_t \Delta p_{t+1} = \Delta p_{t+1} - \eta_{t+1}$, where $\eta_{t+1}$ is the expectation error. Equation (3) captures that there may be feed-back from inflation on the forcing variable $x_t$ (output-gap, the rate of unemployment or the wage share) in which case $b_{x1} \neq 0$.

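In order to discuss the dynamic properties of this system, re-arrange (2) to yield

$$\Delta p_{t+1} = \frac{1}{b_{p1}} \Delta p_t - \frac{b_{p2}}{b_{p1}} x_t - \frac{1}{b_{p1}} \varepsilon_{pt} + \eta_{t+1} \tag{4}$$

and substitute $x_t$ with the right hand side of equation (3). The characteristic polynomial for the system (3) and (4) is

$$p(\lambda) = \lambda^2 - \left[\frac{1}{b_{p1}} + b_{x2}\right] \lambda + \frac{1}{b_{p1}} \left[b_{p2} b_{x1} + b_{x2}\right]. \tag{5}$$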

The system has no stationary solution if either of the two roots is one in magnitude. If neither of the two roots is on the unit circle, a unique stationary solution exists, and it may be either a causal solution (a function of past values of the disturbances and of initial conditions) or a future dependent solution (a function of future values of the disturbances and of terminal conditions), see Brockwell and Davies (1991, Ch. 3), Gourieroux and Monfort (1997, Ch. 12).

7 Constant terms are omitted for ease of exposition.

The future dependent solution is a hallmark of the New Keynesian Phillips curve. Consider for example the case of $b_{x1} = 0$, so $x_t$ is a strongly exogenous forcing variable in the NPC. This restriction gives the two roots $\lambda_1 = b_{p1}^{-1}$ and $\lambda_2 = b_{x2}$. Given the restriction on $b_{x2}$ in (3), the second root is always less than one in magnitude, meaning that $x_t$ is a causal process that can be determined from the backward solution. However, since $\lambda_1 = b_{p1}^{-1}$ there are three possibilities for $\Delta p_t$: i) No stationary solution: $b_{p1} = 1$; ii) A backward solution: $b_{p1} > 1$; iii) A forward solution: $b_{p1} < 1$.
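The three cases can be checked numerically. The following is a minimal sketch, not part of the paper's own computations: it builds the coefficients of the characteristic polynomial (5), $\lambda^2 - (1/b_{p1} + b_{x2})\lambda + (b_{p2} b_{x1} + b_{x2})/b_{p1}$, and labels the outcome by the moduli of the roots. The function names `npc_roots` and `classify` and all parameter values are hypothetical, chosen only for illustration, and the labels follow the case distinction for $b_{x1} = 0$; they are a simplification when $b_{x1} \neq 0$.

```python
import numpy as np

def npc_roots(b_p1, b_p2, b_x1, b_x2):
    """Roots of lambda^2 - (1/b_p1 + b_x2)*lambda + (b_p2*b_x1 + b_x2)/b_p1."""
    coeffs = [1.0, -(1.0 / b_p1 + b_x2), (b_p2 * b_x1 + b_x2) / b_p1]
    return np.roots(coeffs)

def classify(roots, tol=1e-8):
    """Label the solution type from the moduli of the roots."""
    moduli = np.abs(roots)
    if np.any(np.abs(moduli - 1.0) < tol):
        return "no stationary solution"
    if np.all(moduli < 1.0):
        return "backward (causal) solution"
    return "forward (future dependent) solution"

# Illustrative values only. With b_x1 = 0 the forcing variable is exogenous,
# so b_p2 does not affect the roots.
for b_p1 in (1.0, 1.25, 0.9):
    roots = npc_roots(b_p1, b_p2=0.1, b_x1=0.0, b_x2=0.7)
    print(b_p1, np.sort(np.abs(roots)), classify(roots))
```

Calling `npc_roots` with a non-zero `b_x1` lets one explore how feedback from inflation to the forcing variable moves the roots, which is the case taken up next.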

If $b_{x1} \neq 0$, a stationary solution may exist even in the case of $b_{p1} = 1$. This is due to the multiplicative term $b_{p2} b_{x1}$ in (5). The economic interpretation of the term is the possibility of stabilizing interaction between price setting and product (or labour) markets, in fact in direct parallel to the (old) accelerationist Phillips curve which also hinges on the endogeneity of the rate of unemployment (or output gap) for stability.

If we consider the rate of inflation to be a jump variable, there may be a saddle-path equilibrium as suggested by the phase diagram in figure 2. The drawing is based on $b_{p2} < 0$, so we now interpret $x_t$ as the rate of unemployment. The line representing combinations of $\Delta p_t$ and $x_t$ consistent with $\Delta^2 p_t = 0$ is downward sloping. The set of pairs $\{\Delta p_t, x_t\}$ consistent with $\Delta x_t = 0$ is represented by the thick vertical line (this is due to $b_{x1} = 0$ as above). Point a is a stationary situation, but it is not globally stable. Suppose that there is a rise in $x$ represented by a rightward shift in the vertical curve, which is drawn with a thinner line. The arrows show a potential unstable trajectory towards the north-east away from the initial equilibrium. However, if we consider $\Delta p_t$ to be a jump variable and $x_t$ as a state variable, the rate of inflation may jump to a point such as b and thereafter move gradually along the saddle path connecting b and the new stationary state c.
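A worked equation may help make the jump concrete. As a sketch, assume $b_{x1} = 0$, $0 < b_{p1} < 1$, that $\varepsilon_{pt}$ and $\varepsilon_{xt}$ are unforecastable with zero mean, and that $b_{p1}^{j} E_t \Delta p_{t+j} \to 0$ as $j \to \infty$. These assumptions are added here for illustration and are not taken from the paper's empirical work. Iterating (1) forward and using $E_t x_{t+j} = b_{x2}^{j} x_t$, which follows from (3) when $b_{x1} = 0$, gives

$$\Delta p_t = b_{p2} \sum_{j=0}^{\infty} b_{p1}^{j} E_t x_{t+j} + \varepsilon_{pt} = \frac{b_{p2}}{1 - b_{p1} b_{x2}} x_t + \varepsilon_{pt}.$$

Under these assumptions inflation is tied to the current value of $x_t$, so an unanticipated shift in $x_t$ moves $\Delta p_t$ at once. This is the jump behaviour of the forward solution.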

Figure 2: Phase diagram for the system for the case of $b_{p1} < 1$, $b_{p2} < 0$ and $b_{x1} = 0$

The jump behaviour implied by models with forward expected inflation is at

odds with observed behaviour of inflation. This has led several authors to suggest

a “hybrid” model, by heuristically assuming the existence of both forward- and

backward-looking agents, see for example Fuhrer and Moore (1995).

In the same spirit as these authors, and with particular reference to the empirical assessment in Fuhrer (1997), GG also derive a hybrid Phillips curve that allows

a subset of firms to have a backward-looking rule to set prices. The hybrid model

contains the wage share as the driving variable and thus nests their version of the

NPC as a special case. This amounts to the specification

$$\Delta p_t = b^f_{p1} E_t \Delta p_{t+1} + b^b_{p1} \Delta p_{t-1} + b_{p2} x_t + \varepsilon_{pt}. \tag{6}$$

GG estimate (6) for the U.S. in several variants: using different inflation measures, different normalization rules for the GMM estimation, including additional lags of inflation in the equation and splitting the sample. They find that the overall

picture remains unchanged. Marginal costs have a significant impact on short run

inflation dynamics and forward looking behaviour is always found to be important.

In the same manner as above, equation (6) can be written as

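$$ \Delta p_{t+1} = \frac{1}{b^f_{p1}} \Delta p_t - \frac{b^b_{p1}}{b^f_{p1}} \Delta p_{t-1} - \frac{b_{p2}}{b^f_{p1}} x_t - \frac{1}{b^f_{p1}} \varepsilon_{pt} + \eta_{t+1} \tag{7} $$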

and combined with (3). The characteristic polynomial of the hybrid system is

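$$ p(\lambda) = \lambda^3 - \left[ \frac{1}{b^f_{p1}} + b_{x2} \right] \lambda^2 + \frac{1}{b^f_{p1}} \left[ b^b_{p1} + b_{p2} b_{x1} + b_{x2} \right] \lambda - \frac{b^b_{p1}}{b^f_{p1}} b_{x2}. \tag{8} $$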

Using typical results for the expectation and backward-looking parameters, bfp1 =

0.25, bbp1 = 0.75, together with the assumption of an exogenous ws process with

autoregressive parameter 0.7, we obtain the roots {3.0, 1.0, 0.7}.⁸ Thus, there is no

stationary solution (neither backward nor forward) for the rate of inflation in this

case.
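The roots quoted above can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the function name `hybrid_npc_roots` is ours, and the value passed for bp2 is a hypothetical placeholder (with bx1 = 0 it drops out of the polynomial (8), so it does not affect the roots).

```python
import numpy as np

def hybrid_npc_roots(bf, bb, bp2, bx1, bx2):
    """Roots of the characteristic polynomial (8), largest modulus first."""
    coeffs = [
        1.0,                          # lambda^3
        -(1.0 / bf + bx2),            # lambda^2
        (bb + bp2 * bx1 + bx2) / bf,  # lambda^1
        -(bb / bf) * bx2,             # constant term
    ]
    return sorted(np.roots(coeffs), key=abs, reverse=True)

# Parameter values from the text: bf = 0.25, bb = 0.75, bx1 = 0, bx2 = 0.7.
# bp2 = 0.1 is an arbitrary illustrative value (irrelevant when bx1 = 0).
roots = hybrid_npc_roots(bf=0.25, bb=0.75, bp2=0.1, bx1=0.0, bx2=0.7)
print(np.round(np.abs(roots), 2))
```

Under these assumptions one root lies outside the unit circle, one on it and one inside, which is the configuration the text describes as having no stationary solution.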

This seems to be a common result for the hybrid model as several authors

choose to impose the restriction

$$ b^f_{p1} + b^b_{p1} = 1, \tag{9} $$

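which forces a unit root upon the system. To see this, note first that a 1-1 reparameterization of (7) gives

$$ \Delta^2 p_{t+1} = \left[ \frac{1}{b^f_{p1}} - \frac{b^b_{p1}}{b^f_{p1}} - 1 \right] \Delta p_t + \frac{b^b_{p1}}{b^f_{p1}} \Delta^2 p_t - \frac{b_{p2}}{b^f_{p1}} x_t - \frac{1}{b^f_{p1}} \varepsilon_{pt} + \eta_{t+1}, $$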

so that if (9) holds, (7) reduces to

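$$ \Delta^2 p_{t+1} = -\frac{b_{p0}}{b^f_{p1}} + \frac{(1 - b^f_{p1})}{b^f_{p1}} \Delta^2 p_t - \frac{b_{p2}}{b^f_{p1}} x_t - \frac{1}{b^f_{p1}} \varepsilon_{pt} + \eta_{t+1}, \tag{10} $$

where bp0 is an intercept term that was left out of (6) and (7).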

Hence, the homogeneity restriction (9) turns the hybrid model into a model of the

change in inflation. Equation (10) is an example of a model that is cast in the

difference of the original variable, a so-called dVAR, only modified by the driving

variable xt . Consequently, it represents a generalization of the random walk model

of inflation that was implied by setting bfp1 = 1 in the original NPC. The result in

(10) will prove important in understanding the behaviour of the NPC in terms of

goodness of fit, see below.

If the process xt is strongly exogenous, the NPC in (10) can be considered on

its own. In that case (10) has no stationary solution for the rate of inflation. A

necessary requirement for stationarity is that there are equilibrating mechanisms elsewhere in the

system, specifically in the process governing xt (e.g., the wage share). As noted

above, this requirement parallels the case of dynamic homogeneity in the

backward-looking Phillips curve (i.e., the accelerationist model, with a vertical long-run

Phillips curve). In the present context the message is that statements about the

stationarity of the rate of inflation, and the nature of the solution (backward or

forward), require an analysis of the system.

The empirical results of GG and GGL differ from other studies in two respects.

First, bfp1 is estimated in the region (0.65, 0.85) whereas bbp1 is one third of bfp1 or less.

Second, GG and GGL succeed in estimating the hybrid model without imposing (9).

GGL (their Table 2) report the estimates {bfp1, bbp1} = {0.69, 0.27} and {0.88, 0.025} for two

different estimation techniques. The corresponding roots are {1.09, 0.70, 0.37} and

{1.11, 0.70, 0.03}, illustrating that as long as the sum of the weights is less than one

the forward solution prevails.

⁸ The full set of coefficient values is thus: bx1 = 0, bfp1 = 0.25, bbp1 = 0.75, bx2 = 0.7.

3.2 Evaluating the NPC as a model of observed inflation

The main tools for evaluation of the NPC on ‘Euroland’ (and US) data have been

the GMM test of validity of overidentifying restrictions (i.e., the χ²J-test below)

and measures and graphs of goodness-of-fit. In particular the closeness between the

fitted inflation of the NPC and actual inflation is taken as telling evidence of the

model’s relevance for understanding US and ‘Euroland’ inflation, see GG and GGL. We

therefore start with an examination of what goodness-of-fit can tell us about model

validity in this area. The answer appears to be: ‘very little’. In this section we also

review the results of other approaches. The studies we cite employ data both from

the two large economy data sets (euro area and US data), as well as data from two

open economies: the UK and Norway.

As mentioned above, GG and GGL use the formulation of the NPC (1) where

the forcing variable xt is the wage share wst . Since under rational expectations the

errors in the forecast of ∆pt+1 and wst are uncorrelated with information dated t − 1

and earlier, it follows from (1) that

$$ E\{(\Delta p_t - b_{p1} \Delta p_{t+1} - b_{p2} ws_t) z_{t-1}\} = 0 \tag{11} $$

where zt is a vector of instruments.
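To make the role of orthogonality conditions such as (11) concrete, the sketch below solves their sample analogue on simulated data. It is not the estimator used by GG or GGL: as a simplifying assumption it uses two-stage least squares (GMM with the weighting matrix that is appropriate under homoskedastic, serially uncorrelated errors) together with Sargan's overidentification statistic, and all data, names and parameter values are hypothetical.

```python
import numpy as np

def tsls_with_sargan(y, X, Z):
    """Two-stage least squares and Sargan's overidentification statistic.

    Solves the sample analogue of E[z (y - x'b)] = 0 with weight (Z'Z)^{-1}.
    """
    n = len(y)
    # First stage: project the regressors on the instrument set.
    X_hat = Z @ np.linalg.lstsq(Z, X, rcond=None)[0]
    # Second stage: use the projected regressors as instruments.
    beta = np.linalg.solve(X_hat.T @ X, X_hat.T @ y)
    resid = y - X @ beta
    # Sargan statistic n * u'P_Z u / u'u; asymptotically chi-square with
    # (number of instruments - number of regressors) degrees of freedom.
    resid_hat = Z @ np.linalg.lstsq(Z, resid, rcond=None)[0]
    sargan = n * (resid_hat @ resid_hat) / (resid @ resid)
    return beta, resid, sargan

# Simulated, purely illustrative data (not the GG/GGL data set).
rng = np.random.default_rng(0)
n = 500
Z = rng.normal(size=(n, 4))                    # four valid instruments
v = rng.normal(size=n)
x = Z @ np.array([1.0, 0.5, -0.5, 0.25]) + v   # endogenous regressor
u = 0.5 * v + rng.normal(size=n)               # error correlated with x
y = 0.9 * x + u                                # assumed true coefficient: 0.9
X = x[:, None]

beta, resid, sargan = tsls_with_sargan(y, X, Z)
print(beta, sargan)
```

In the NPC application y would be ∆pt, the columns of X would be ∆pt+1 and wst, and Z would hold the lagged instruments; with four instruments and one regressor, the statistic in this toy example has three degrees of freedom.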

The orthogonality conditions given in (11) form the basis for estimating the

model with generalized method of moments (GMM). The authors report results

which they record as being in accordance with a priori theory. Using their aggregate data for the Euro area,⁹ 1971.3–1998.1, we replicate the results for Europe in

GGL:¹⁰,¹¹

$$ \Delta p_t = \underset{(0.04)}{0.914} \Delta p_{t+1} + \underset{(0.04)}{0.088} ws_t + \underset{(0.06)}{0.14} \tag{12} $$

$$ \hat{\sigma} = 0.321, \qquad \chi^2_J(9) = 8.21\ [0.51], \qquad \chi^2_{RW}(2) = 4.55\ [0.10]. $$

The instruments used are five lags of inflation, and two lags of the wage share, output

gap (detrended output), and wage inflation. σ̂ denotes the estimated residual standard error, and χ²J is the test statistic for the validity of the overidentifying instruments

(Hansen, 1982).¹²

⁹ See the Data Appendix and Fagan et al. (2001).

¹⁰ Below, and in the following, square brackets, [..], contain p-values whereas standard errors are stated in parentheses, (..).

¹¹ I.e., equation (13) in GGL01. We are grateful to J. David López-Salido of the Bank of Spain, who kindly provided us with the data for the Euro area and a RATS-program used in GGL01.

¹² GGL01 report estimates of (1) for the US as well as for Euroland. Using US data for 1960:1 to 1997:4, they report

$$ \Delta p_t = \underset{(0.029)}{0.924} \Delta p_{t+1} + \underset{(0.118)}{0.250} ws_t. \tag{13} $$

In terms of the dynamic model (3)–(4) the implied roots are {1.08, 0.7} for

the US and {1.09, 0.7} for Europe. Thus, as asserted by GGL, there is a stationary

forward solution in both cases. However, since neither of the two studies contains

any information about temporal properties of the observed wage share data, the

roots obtained are based on the additional assumption of an exogenous wage share

(bx1 = 0) with autoregressive coefficient bx2 = 0.7.

The statistic χ²RW(2) reports the outcome of testing the joint hypothesis

bp1 = 1 and bp2 = 0,

and we see that the hypothesis that the model can be reduced to a random walk

cannot be rejected. Hence, although the point estimates give a unique solution,

there is no significant support for that claim once we take into account estimation

uncertainty. The insignificant χ²RW(2) statistic has another implication too, namely

that in terms of fit, the Euro-area NPC does as well (or as badly) as a simple random

walk.
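The p-value attached to the random-walk test can be verified by hand, because the upper-tail probability of a chi-square variable with two degrees of freedom has the closed form exp(−x/2). A minimal sketch using only the Python standard library (the function name is ours):

```python
import math

def chi2_sf_2df(stat):
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-stat / 2.0)

# Reported statistic for the joint hypothesis bp1 = 1 and bp2 = 0.
p_value = chi2_sf_2df(4.55)
print(round(p_value, 2))
```

The result agrees with the bracketed p-value of 0.10 reported with equation (12), so the random-walk restriction is not rejected at the 5 per cent level.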

We dwell on the NPC’s inability to explain inflation observations better than

a random walk exactly because several papers place conclusive emphasis on the

(reasonably) good fit of the NPC. Using US data, GG, though rejecting the pure

forward-looking model in favour of a hybrid model, nonetheless find that the baseline

model remains predominant. In the Abstract of GGL the authors state that “the

NPC fits Euro data very well, possibly better than US data”. Even more recently,

Galí (2003) writes

...while backward looking behaviour is often statistically significant,

it appears to have limited quantitative importance. In other words,

while the baseline pure forward looking model is rejected on statistical

grounds, it is still likely to be a reasonable first approximation to the

inflation dynamics of both Europe and the U.S. (Galí (2003, section

3.1)).

We think that this is unconditional declaration of success is completely unwarranted–

the only thing that can be said is that the NPC fits about as well as a random walk

(not always an easy model to beat of course, but we don’t think that this is what

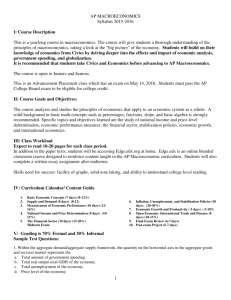

Gali had in mind.). Figure 3 illustrates the point by showing actual and fitted values

of (12) together with the fit of a random walk in the left-hand panel. The similarity

between the series is obvious, and the right-hand panel shows the cross-plot with

regression line of the fitted values.

[Figure 3, left panel: “Actual inflation and fit from the NPC” (series DP and NPCRATSFIT, 1975–1995). Right panel: “Fitted values from NPC vs. fitted values from random walk” (NPC-FIT against RW-FIT).]

Figure 3: Actual and fitted values of equation (14) together with the fit of a random

walk.

Our replication of the Euro-inflation hybrid NPC is given in (14):

$$ \Delta p_t = \underset{(0.073)}{0.681} \Delta p_{t+1} + \underset{(0.072)}{0.281} \Delta p_{t-1} + \underset{(0.026)}{0.19} ws_t + \underset{(0.069)}{0.063} \tag{14} $$

GMM, T = 107 (1971(3) to 1998(1))

$$ \chi^2_J(8) = 8.01\ [0.43] $$

Perhaps surprisingly, the same regress (from a structural model to a random walk)

applies to the hybrid model. The main intuition is that, since the sum of the coefficients of the

two inflation terms is close to unity (0.96) and the coefficient of the wage share is

neither statistically nor numerically significant, equation (14) is practically speaking

indistinguishable from a pure time series model of the dVAR type. From equation

(10), using the estimation results of the euro-area hybrid NPC, the fitted rate of

inflation (∆p̂t) is given by

$$ \Delta \hat{p}_t = 0.09 + 1.06 \Delta p_{t-1} + 0.41 \Delta^2 p_{t-1} + 0.02 ws_{t-1}. $$

Note first that, since the variance of ∆²pt−1 is of a lower order than that of ∆pt−1, the sum

1.06∆pt−1 + 0.41∆²pt−1 is dominated by the first term. Second, since the coefficient

of the forcing variable wst is only 0.02, the variability of wst−1 must be huge in order

to have a notable numerical influence on ∆p̂t. But remember that wst is the wage share (or its log), which means that its variability is relatively small (for example a

change of more than 0.01 must be counted as quite big) in normal times.
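The two inflation coefficients in the fitted equation follow from the reparameterization of (7): the weight on lagged inflation is 1 + (1 − bfp1 − bbp1)/bfp1 and the weight on the lagged change in inflation is bbp1/bfp1. The sketch below, with a function name of our own choosing, recomputes them from the point estimates in (14); it covers only these two coefficients.

```python
def dvar_coefficients(bf, bb):
    """Weights on lagged inflation and on its lagged change implied by the hybrid NPC."""
    level = 1.0 + (1.0 - bf - bb) / bf  # coefficient on the lagged inflation rate
    accel = bb / bf                     # coefficient on the lagged change in inflation
    return level, accel

# Point estimates of the forward and backward terms from equation (14).
level, accel = dvar_coefficients(bf=0.681, bb=0.281)
print(round(level, 2), round(accel, 2))
```

With these inputs the rounded values are 1.06 and 0.41, matching the fitted equation. When the two inflation coefficients sum exactly to one, the first weight is exactly one and the equation is a pure dVAR.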


It is interesting to note that when the NPC is applied to other data sets, i.e.,

to such diverse data as US, UK and Norwegian inflation rates, very similar parameter estimates are obtained, see Bårdsen et al. (2002). Is this a sign of structure?

GG and GGL, and other proponents of the NPC claim so. But the correct interpretation seems to be that the NPC is almost void of explanatory power, and that

it captures only one common feature among different countries’ data sets, namely

autocorrelation.

Hence, the NPC (as an empirical model) fails to corroborate the theoretical

message: that rational expectations transmit the movements of the forcing variable strongly onto the observed rate of inflation. Recently, it has been shown by

Fuhrer (2005) that the typical NPC fails to deliver the expected result that inflation persistence is ‘inherited’ from the persistence of the forcing variable. Instead,

the derived inflation persistence, using estimated NPCs, turns out to be completely

dominated by ‘intrinsic’ persistence (due to the accumulation of disturbances of the

NPC equation). Quite contrary to the consensus view, Fuhrer shows that the NPC

fails to explain actual inflation persistence by the persistence that inflation inherits

from the forcing variable. Fuhrer summarizes that the lagged inflation rate is not a

‘second order add on to the underlying optimizing behaviour of price setting firms,

it is the model’.

More evaluation of the NPC is provided by e.g., Bårdsen et al. (2004) and

Bårdsen et al. (2005, Ch. 7), which also include testing of parameter stability (over

sample periods), sensitivity (wrt estimation methodology), robustness (e.g., moving

one variable such as the output-gap from the set of overidentifying instruments to

the ‘structural’ part of the model) and encompassing (of pre-existing models on UK

and Norwegian data).¹³ Except for recursive stability, the results are disheartening

for those who believe that the NPC represents a data-coherent and theory-driven

model of price setting. As for recursive stability, it is more apparent than real since

the inherent fit of the model is so poor that statistical stability tests have low power,

and graphs of the sequence of recursive coefficient estimates of the forcing variable

show ‘stability around zero’.

Hence, after evaluation, the structural content of the NPC ‘disappears out

of the window’, leaving a huge question mark hovering over the New Keynesian

modelling programme: namely, how to model inflation? In terms of the

Pagan Frontier, the NPC model (and therefore also the DSGE model) is misplaced

by earlier authors who place it high up on the frontier. On the basis of the results

reviewed here, the relevant position is well inside the frontier and close to the origin

(since what fit there is stems from intrinsic persistence, which should not have been there according to

theory).

Luckily, if the task of modelling real world inflation is started from another position, with a theoretical framework that incorporates some important traits of real

life wage setting for example, no such “puzzles” arise. Section 6 below elaborates.

¹³ See also Bårdsen et al. (2002), which includes Norwegian data.

4 Can we expect structural properties from microfoundations?

The DSGE models in macroeconomics, of which the NPC is an integral part, are a continuation of the microfoundations programme in macroeconomics that started with

new classical macro models, rational expectations and the real business cycle model.

Macroeconomics as a discipline of economics was defined in the 1930s by Ragnar Frisch and others. In his paper on business cycles, Frisch was the first to use

the word “microeconomics” to refer to the study of single firms and industries and

“macroeconomics” to refer to the study of the aggregate economy.¹⁴ Interestingly,

according to De Vroey (2004), Frisch’s viewpoint was that macroeconomics belongs

to the domain of general equilibrium because macroeconomics is concerned with the

“whole economics system in its entirety”.¹⁵

Today, it is almost universally accepted among economists that macroeconomics is methodologically and ontologically reducible to microeconomics. This is

the essence of the program of microfoundations which aims to explain all macroeconomic phenomena of the economy, in principle at least, by reference to the

behaviour of rational economic agents as postulated by microeconomics. Nevertheless, those who have reflected at all deeply on the matter often express scepticism

towards the program of microfoundations, and take an anti-reductionist position on the

basis that macroeconomic institutions and variables can fruitfully be viewed as real

entities and that causal structures between macroeconomic variables

can be disclosed by the systematic application of macroeconomic methodology

and econometrics, for example.

A non-reductionist position is a potential starting point for setting up alternatives to the current dominance of representative agent macromodels. It can perhaps

be best presented by first considering the logical near impossibility and the practical

failures of the program of microfoundations (in a rather literal meaning). Microfoundations is typically associated with methodological individualism. Mark Blaug (1992)

defines methodological individualism as the principle that “asserts that explanation

of social, political, or economic phenomena can only be regarded as adequate if they

run in terms of beliefs, attitudes and decisions of individuals”. Hence methodological individualism is a doctrine about the priority of intentional (and hence individual)

explanations in economics, as opposed to causal and functional explanations, and

it is widely accepted, almost by default. However, there is a gulf between theory and

practice, since methodological individualism is not widely practised. The simple

reason is what Kevin Hoover (e.g., Hoover (1988, p. 135)) has dubbed the “Cournot

problem” after the nineteenth-century mathematician and economist: There are too many

individuals (firms and consumers) and too many goods to be handled by direct modelling. Consequently, we observe that few explanations of macroeconomic phenomena

have been successfully reduced to their microfoundations, see Blaug (1992, p. 46).

In fact, one example of the failure of microfoundations is provided by our review

of the poor structural properties of the NPC model of (real world) inflation dynamics.

¹⁴ In a few memorable paragraphs early in his acclaimed paper Frisch states that

¹⁵ After ‘propagation’, Frisch worked hard on business cycles right up to the war. But he never managed to formulate his paradigm as a set of equations, and in a way his macroeconomic research programme became a failure, see Bjerkholt and Lie (2001). We can only speculate why he fell short of formulating a macroeconomic system. Although Frisch of course knew Marshall very well, he also knew Walras (his formative stay in France), and Frisch seems to have ‘chosen’ Walras as his ideal for a macroeconomic system. (But this is my speculation.)

Following the innovative work of Clarida et al. (1999) and others, the NPC has

quickly acquired a position of a (new) consensus model of inflation dynamics, for

example as the model of the supply side of dynamic stochastic general equilibrium

models.¹⁶ This wide acceptance no doubt reflects the belief that the NPC represents

the end of the profession’s decade-long quest for a data-consistent, micro-based,

rational expectations model of price-setting. Alas, as we have seen, even on its

own premises the NPC is a complete failure.¹⁷

The fate of the NPC illustrates that the commitment among economists to

methodological individualism is not grounded in successful applications, see Hoover

(2001, p. 111). Rather, it appears to be based on an instinctive or habitual belief

that methodological individualism is the only way “to do” macroeconomic research

properly. As already hinted above, the approach underlying our modelling programme is non-reductionist and is founded on the view that macroeconomic variables

are “real” entities with an external objective existence and that a goal of modelling

is to chart out the causal structures (which need not be constant or invariant) between them. This view has recently been convincingly developed by Hoover (2001),

to which we have made several references already. A strong motivation for taking

a macroeconomic reality as a premise for macro modelling is the noted failures of

the reductionist programme. The reductionist programme does not seem tenable,

and we regard the Cournot problem as its fundamental obstacle. Despite paying lip-service to methodological individualism, the closest that macroeconomics has come

to microfoundations is the representative agent model. However, the representative

agent side-steps the whole problem of representing the behaviour of each individual

and building up macroeconomic aggregates from there. Hence the representative agent

framework can be seen as a concession to the impossibility of overcoming the central problem of the microfoundations program, namely how to link the postulated

behaviour of microeconomic agents to the measured behaviour of macroeconomic

variables, see Hoover (2001, page 285).

A commitment to a macroeconomic reality does not justify (or force upon

us) an atheoretical or wholly empirical and/or mechanical approach to macroeconometric modelling.¹⁸ To deny an essential connection between individual economic

behaviour and macroeconomic aggregates would be quite absurd. The general point

is that we regard the macroeconomic level as the right level for both specification and evaluation of any

formulated relationship. The discussion below takes up some of the

specific issues that figure prominently in the ‘theory’ versus ‘data’ debate.

¹⁶ The original NPC seems to have lost its credentials very quickly; the following remarks apply to the hybrid NPC, where the lagged rate of inflation is included.

¹⁷ In addition, economic theory develops over time, so the hallmark of structural interpretation changes its value over time, and the theory-instigated macro models face the possibility of non-constancy and regime shifts from within, so to say. For example, DSGE models build on Ricardian equivalence, and therefore imply that increased government expenditure reduces private consumption. In new DSGE models, a proportion of households are assumed to be non-Ricardian. This brings the model’s predictions closer to the evidence, but it also represents a regime shift in the model.

¹⁸ In Hoover (2001), the coexistence of a macroeconomic and microeconomic reality is sought to be established by the use of the philosophical theory of supervenience, see Chapter 5 in particular. Supervenience allows a macroeconomic configuration to be derived from a given configuration of microeconomic elements. But starting from a description of the macro level, there is no determined (unique) corresponding constellation of micro elements.

5 Back to square one: A Marshallian approach to macromodelling

Criticism of the reductionist approach to macroeconomics is usually met with the

answer that there are no alternatives, unless one wants to stay forever with the IS-LM model. Clearly, this is not true, and other macroeconomic programmes are now

being developed. Although the approaches differ in detail, there is also a wide common ground. For example: i) A resistance to the almost casual transformation of

a normative perfect foresight, intertemporal substitution, perfect competition model

into a model for interpreting real world macroeconomic observations. ii) A focus

on the large-scale economic pathologies (prolonged depression, mass unemployment,

persistent inflation), which are strictly speaking unmentionable in the new consensus model. iii) A clear stand on the behaviour of the labour market, which is

modelled consistently as noncompetitive, with information asymmetries and

non-market clearing. Consequently these “non-consensus” macro models do not accept the natural rate hypothesis in the form of an accelerationist Phillips curve, for

example. iv) The alternative (new) macroeconomic models are non-Walrasian in

that they account for why the labour market in particular fails to clear, and in that

they are critical of the postulate of a complete set of Arrow-Debreu markets in

general (again once the purpose is explanation).

The new alternative macro theory is also characterized by what we can call

stepwise modelling. A good example is the essay by Hahn and Solow (1997) where a

model of wage and price setting and the labour market is developed first, and then

it is grafted into what the authors refer to as a ‘prototype macroeconomic model’.

Apparently, this stepwise approach to macroeconomic modelling flies in the

face of current new classical macroeconomics, of which DSGE models are the latest

and most popular development. These models adhere to the Walrasian principle that

one needs to consider an entire economy at once, rather than a section of it. However,

imagine for a second that Walras never wrote his Elements and that, in the inter-war

years, those who wanted to build macroeconomic general equilibrium models

only had Marshall’s writings available.¹⁹ In such a setting, Walras’ principle about

studying an entire economy in one go would be counter-intuitive. Intuitively, one

would rather take a stepwise approach consisting of studying the functioning of one

isolated market (Model A in our terminology) in the first stage, and piecing together

the results of the practical analysis to a complete model (Model B) in the second

stage. This two-tier general equilibrium methodology can according to De Vroey

(2004) be considered as typically Marshallian in contrast to the one-shot Walrasian

methodology. The gist of the approach was often expressed by ‘the Keynesians’ for

example by Hicks:

¹⁹ This analysis is due to De Vroey (2004), section 3.2.

If a model of the whole economy is to be securely based, it must be

grounded in an intelligible account of how a single market is supposed

to work, Hicks (1965, p. 78)²⁰

In our view, one interesting topic to discuss is whether a modernized Marshallian approach to macroeconometric modelling is capable of establishing structural

properties in models that are intended for explanation and to aid policy. Of course

it has been a long-standing task of model builders to establish good practice and

develop operational procedures for econometric model building which ensure that

the end product of piecewise modelling is tenable and useful. Important contributions in the literature include Klein (1983), Klein et al. (1999), Christ (1966), and

the surveys in Bodkin et al. (1991) and Wallis (1994).

In Bårdsen et al. (2005) we supplement the existing literature by suggesting

the following stepwise operational procedure:²¹

1. By relevant choices of variables we define and analyse subsectors of the economy (this corresponds to marginalisation in econometrics).

2. By distinguishing between exogenous and endogenous variables we construct

(by conditioning) relevant partial models, which we will call models of type A.

3. Finally, we need to combine these submodels in order to obtain a model B for

the entire economy.

Our thesis in the book is that, given that Model A is a part of Model B, it is

possible to learn about Model B from Model A. Of course this stepwise approach to

macroeconomic modelling flies in the face of current new classical macroeconomics,

of which DSGE models are the latest and most popular development. However,

given the failure of that programme to establish structural properties for the model

of inflation dynamics for example, a radically different approach may be needed.

²⁰ In this Hicks would find himself in agreement with Milton Friedman, it seems. In an interview (Snowdon and Vane (1997)), Friedman was asked specifically about his methodological position: Question [to Friedman]: Kevin Hoover has drawn a methodological distinction between your work as Marshallian and that of Robert Lucas as Walrasian. Is that distinction valid? Answer: There is a great deal to that. On the whole I believe that it is probably true. I have always distinguished between the Marshallian approach and the Walrasian approach. I have been personally always a Marshallian (p. 202). Maybe De Vroey is right, and a Marshallian approach was ‘in the air’?

²¹ See Jansen (2002), reply to Søren Johansen (Johansen (2002)).

Examples of properties that can be discovered using the stepwise procedure

include cointegration in Model B. This follows from a corollary of the theory of

cointegrated systems: any non-zero linear combination of cointegrating vectors is

also a cointegrating vector. In the simplest case, if there are two cointegrating vectors in Model B, there always exists a linear combination of those cointegrating

vectors that “nets out” one of the variables. Cointegration analysis of the subset of

variables (i.e., Model A) excluding that variable will result in a cointegrating vector

corresponding to that linear combination. Thus, although cointegration is a property of Model

B, analysis of the subsystem (Model A) identifies one cointegrating

vector. Whether that identification is economically meaningful or not remains in

general an open issue, and any such claim must be substantiated in each separate

case. Our experience is that there are relatively few problems connected with identification of equilibrium relationships in subsectors (Model A). For example, having

completed the macro model, it is possible to check the validity of the implicit assumption underlying the cointegration analysis of the consumption function. Other

important properties of the full model that can be tested from subsystems include

the existence or not of a natural rate of unemployment, see Bårdsen and Nymoen

(2003), and the relevance or not of forward-looking terms in wage and price setting,

e.g. Bårdsen et al. (2004).
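The corollary on linear combinations of cointegrating vectors can be illustrated with a small worked example. The three-variable system and the constants a and b below are hypothetical and chosen only to show how one variable is netted out; they are not taken from any of the models discussed.

```latex
% Hypothetical three-variable illustration; a and b are assumed non-zero constants.
\begin{align*}
w_t &= (y_t,\; x_t,\; z_t)', \qquad
\beta_1 = (1,\; -1,\; -a)', \qquad
\beta_2 = (0,\; 1,\; -b)', \\
\beta_1' w_t &= y_t - x_t - a z_t \sim I(0), \qquad
\beta_2' w_t = x_t - b z_t \sim I(0), \\
b\,\beta_1 - a\,\beta_2 &= (b,\; -(a+b),\; 0)', \\
(b\,\beta_1 - a\,\beta_2)' w_t &= b\, y_t - (a+b)\, x_t \sim I(0).
\end{align*}
```

In this illustration the full system (Model B) contains all three variables, while a subsystem (Model A) in y and x alone would still display one cointegrating relationship, namely the combination with zero weight on z.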

As pointed out by Johansen (2002), there is a Catch 22 to the above procedure: a general theory for the three steps will contain criteria and conditions which

are formulated for the full system. This seems to cut post-Walrasian econometrics

off from anything but VARs with only a few variables, so that the full Johansen

procedure can be applied. However, piecewise modelling can in practice be seen as

a sort of gradualism, seeking to establish sub-models that represent partial structure: i.e., partial models that are invariant to extensions of the sample period, to

changes elsewhere in the economy (e.g., due to regime shifts) and remain the same

for extensions of the information set. The alternative to this thesis amounts to a

kind of creationism, i.e., unless macroeconometrics should be restricted to aggregate

models, see Bårdsen et al. (2005, Chapter 2).

A separate reason to focus on sub-models is that the modellers may have good

reasons to study some parts of the economy more carefully than other parts. For

a central bank that targets inflation, there is a strong case for getting the model

of the inflationary process right. This calls for careful modelling of the wage and

price formation conditional on institutional arrangements for the wage bargain, the

development in financial markets and the evolving real economy in order to answer

a number of important questions: Is there a natural rate (of unemployment) that

anchors unemployment as well as inflation? What is the importance of expectations

for inflation and how should it be modelled? What is the role of money in the inflationary

process? In line with this, the next section evaluates a post-Walrasian alternative to the

NPC.

6 Evaluating the structural properties of an econometric wage-price model

The modelling of inflation dynamics is important for monetary policy models, and

requires not only theoretical models, good data and efficient estimation routines.

One also needs to draw on knowledge about the characteristics of the labour market,

wage-setting and of other institutional traits that are specific to the economy under

study. If there is a trade off between estimation efficiency and the issue of getting the

economic institutions and mechanisms right, the practitioners of macroeconometric

modelling should give priority to the latter.

The clearest alternative to the NPC makes use of a framework that focuses on

wage setting, along with the price making of firms. An important underlying idea

is that workers and firms bargain over the distribution of rents created within the

firm. Although this is an almost trivial starting point, following the implications

leads to models that are quite unlike the NPC, or the conventional backward looking


Phillips curve for that matter. For example, there is no ‘need’ for unemployment

to stay close to a natural rate in order to avoid an accelerating price level (refuting

Phillips curve models), hence the average rate of unemployment is not pinned down

from wage and price setting alone (refuting a Layard-Nickell model); in the medium

run, real wages are predicted to be positively linked to productivity.

This approach to inflation modelling has a relatively long history and has been

tested repeatedly against real world data.²² The structure and theoretical

content has not been destroyed by testing, but it has evolved in the process.

6.1 Wage bargaining theory

A main advance in the modelling of labour markets rests on the perception that

firms and their (organized or unorganized) workers are engaged in a partly cooperative and partly conflictual sharing of the rents generated by the operation of the

firm. In line with these assumptions, nominal wages are modelled in a game-theoretic

framework which fits the comparatively high level of centralization and coordination in Norwegian wage setting (see e.g., Nymoen and Rødseth (2003a) and Barkbu

et al. (2003) for a discussion of the degree of coordination).

Our ability to model nominal wage setting in game theoretic terms is a main advance in terms of the theoretical underpinnings of macro models, and as one would expect of such a revolution, the approach has profound implications. Linked up with an assumption of monopolistically competitive firms, it represents a satisfactory theory of the supply-side in the medium run. However, in applications we must bridge the gap between the formal relationships of the model and the empirical relationships that may be present in the data. The main step here is to invoke the

assumptions of stochastic trends and to interpret the theoretically derived equations

as hypothesized cointegration relationships. From that premise, a dynamic model

of the inflation spiral in error-correction form follows logically.

There are a number of specialized models of “non competitive” wage setting. Our aim in this chapter is to represent the common features of these approaches in a theoretical model of wage bargaining and monopolistic competition, building on Rødseth (2000, Ch. 5.9) and Nymoen and Rødseth (2003a). We start with

the assumption of a large number of firms, each facing downward-sloping demand

functions. The firms are price setters and equate marginal revenue to marginal costs.

With labour being the only variable factor of production (and constant returns to

scale) we obtain the following price setting relationship

$$Q_i = \frac{El_Q Y}{El_Q Y - 1}\,\frac{W_i (1 + \tau_{1i})}{Z_i}$$

where $Z_i = Y_i/N_i$ is average labour productivity, $Y_i$ is output and $N_i$ is labour input. $W_i$ is the wage rate in the firm, and $\tau_{1i}$ is a payroll tax rate. $El_Q Y > 1$ denotes the absolute value of the elasticity of demand facing each firm $i$ with respect to the firm's own price. In general $El_Q Y$ is a function of relative prices, which provides a rationale for the inclusion of, e.g., the real exchange rate in aggregate price equations. However, it is a common simplification to assume that the elasticity is independent of other firms' prices and is identical for all firms. With constant returns technology aggregation is no problem, but for simplicity we assume that average labour productivity is the same for all firms and that the aggregate price equation is given by

$$Q = \frac{El_Q Y}{El_Q Y - 1}\,\frac{W (1 + \tau_1)}{Z} \tag{15}$$

22 In particular Sargan (1964, 1980). Bårdsen et al. (2005, Ch 3) trace the kind of model we use below, with an explicit distinction between theoretical relationships that apply to the steady state, and other dynamic equations that are intended to explain actual inflation, back to the Norwegian model of inflation, see Aukrust (1977).

The expression for real profits (π) is therefore

$$\pi = Y - \frac{W (1 + \tau_1)}{Q}\,N = \left(1 - \frac{W (1 + \tau_1)}{Q}\,\frac{1}{Z}\right) Y.$$
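As a purely illustrative check of the price setting rule (15) and the profit expression above, the following sketch computes the markup price and real profits for made-up numbers. The function names and all parameter values are hypothetical and not taken from the paper. Under markup pricing the producer real wage share equals $(El_Q Y - 1)/El_Q Y$, so real profits should come out as $Y/El_Q Y$.

```python
# Illustrative sketch of markup pricing, eq. (15), and the implied real profits.
# All numbers are hypothetical and chosen only for illustration.

def markup_price(elasticity, wage, payroll_tax, productivity):
    """Price set as a markup over unit labour costs; requires elasticity > 1."""
    if elasticity <= 1:
        raise ValueError("the demand elasticity must exceed 1")
    markup = elasticity / (elasticity - 1.0)
    return markup * wage * (1.0 + payroll_tax) / productivity

def real_profits(output, wage, payroll_tax, price, productivity):
    """Real profits: (1 - W(1 + tau1) / (Q Z)) * Y."""
    wage_share = wage * (1.0 + payroll_tax) / (price * productivity)
    return (1.0 - wage_share) * output

elasticity, wage, payroll_tax, productivity, output = 4.0, 100.0, 0.1, 2.0, 50.0
price = markup_price(elasticity, wage, payroll_tax, productivity)
profits = real_profits(output, wage, payroll_tax, price, productivity)
```

With these assumed values, `profits` coincides with `output / elasticity`, which is one way of seeing why a lower demand elasticity leaves more rents to be bargained over.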

We assume that the wage $W$ is settled in accordance with the principle of maximizing the Nash-product:

$$(\nu - \nu_0)^{f}\,\pi^{1-f} \tag{16}$$

where $\nu$ denotes union utility and $\nu_0$ denotes the fall-back utility or reference utility. The corresponding break-point utility for the firms has already been set to zero in (16), but for unions the utility during a conflict (e.g., strike, or work-to-rule) is nonzero because of compensation from strike funds. Finally, $f$ represents the relative bargaining power of unions.

Union utility depends on the consumer real wage of an unemployed worker and the aggregate rate of unemployment, thus $\nu(\frac{W}{P}, U, A_\nu)$, where $P$ denotes the consumer price index.23 The partial derivative with respect to wages is positive, and non-positive with respect to unemployment ($\nu'_W > 0$ and $\nu'_U \le 0$). $A_\nu$ represents other factors in union preferences. The fall-back or reference utility of the union depends on the overall real wage level and the rate of unemployment, hence $\nu_0 = \nu_0(\frac{\bar{W}}{P}, U)$, where $\bar{W}$ is the average level of nominal wages, which is one of the factors determining the size of strike funds. If the aggregate rate of unemployment is high, strike funds may run low, in which case the partial derivative of $\nu_0$ with respect to $U$ is negative ($\nu'_{0U} < 0$). However, there are other factors working in the other direction, for example that the probability of entering a labour market programme, which gives laid-off workers higher utility than open unemployment, is positively related to $U$. Thus, the sign of $\nu'_{0U}$ is difficult to determine from theory alone. However, we assume in the following that $\nu'_U - \nu'_{0U} < 0$.

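23 We abstract from a proportional income tax rate.

With these specifications of utility and break-points, the Nash-product, denoted $\mathcal{N}$, can be written as

$$\mathcal{N} = \left\{ \nu\left(\frac{W}{P}, U, A_\nu\right) - \nu_0\left(\frac{\bar{W}}{P}, U\right) \right\}^{f} \left\{ \left(1 - \frac{W (1 + \tau_1)}{Q}\,\frac{1}{Z}\right) Y \right\}^{1-f}$$

or

$$\mathcal{N} = \left\{ \nu\left(\frac{W_q}{P_q (1 + \tau_1)}, U, A_\nu\right) - \nu_0\left(\frac{\bar{W}}{P}, U\right) \right\}^{f} \left\{ \left(1 - W_q\,\frac{1}{Z}\right) Y \right\}^{1-f}$$

where $W_q = W (1 + \tau_1)/Q$ is the producer real wage and $P_q (1 + \tau_1) = P (1 + \tau_1)/Q$ is the so called real wage wedge (between the consumer and producer real wage).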
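The role of the wedge can be checked with a small numerical sketch: dividing the producer real wage by the wedge should give back the consumer real wage $W/P$. The numbers below are hypothetical, and reading $P_q$ as $P/Q$ is an inference from the definition of the wedge rather than something stated separately in the text.

```python
# Hypothetical numbers; only the identity between the ratios matters.
W, P, Q, tau1 = 100.0, 1.25, 1.0, 0.1

producer_real_wage = W * (1.0 + tau1) / Q   # W_q
wedge = P * (1.0 + tau1) / Q                # P_q (1 + tau1), reading P_q as P / Q
consumer_real_wage = W / P

# Producer real wage deflated by the wedge.
implied_consumer_real_wage = producer_real_wage / wedge
```

The two consumer real wage figures agree, which is why the union's utility can be written in terms of $W_q$ and the wedge in the second form of the Nash-product.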

It might be noted that the income tax rate is omitted from the analysis since it plays no role in the empirical model. This simplification is in accordance with previous studies of aggregate wage formation, see e.g. Calmfors and Nymoen (1990) and Nymoen and Rødseth (2003b), where no convincing evidence of important effects from the average income tax rate $\tau_2$ on wage growth could be found. Note also that, unlike many expositions of the ‘bargaining approach’, for example Layard et al. (1991, Chapter 7), there is no aggregate labour demand function (employment as a function of the real wage) subsumed in the Nash-product. In this we follow Hahn and Solow (1997, p 32), who point out that bargaining is usually over the nominal wage and not over the level of employment.24

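The first order condition for a maximum is given by $\mathcal{N}_{W_q} = 0$ or

$$f\,\frac{\nu'_W\left(\frac{W_q}{P_q (1 + \tau_1)}, U, A_\nu\right)}{\nu\left(\frac{W_q}{P_q (1 + \tau_1)}, U, A_\nu\right) - \nu_0\left(\frac{\bar{W}}{P}, U\right)} = (1 - f)\,\frac{\frac{1}{Z}}{\left(1 - W_q \frac{1}{Z}\right)}. \tag{17}$$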

In a symmetric equilibrium, $W = \bar{W}$, leading to $\frac{W_q}{P_q (1 + \tau_1)} = \frac{\bar{W}}{P}$ in equation (17), and the aggregate bargained real wage $W_q^b$ is defined implicitly as

$$W_q^b = F(P_q (1 + \tau_1), Z, f, U). \tag{18}$$
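To make the first order condition (17) concrete, the sketch below maximizes the log of the Nash-product numerically for one firm and checks that (17) holds at the maximum. The functional forms are assumptions made only for this illustration: union utility is taken to be the log of the consumer real wage, the fall-back utility is held fixed at a given number, and all parameter values are hypothetical. Under log utility, the derivative of $\nu$ with respect to $W_q$ is $1/W_q$.

```python
import math

# Assumed functional forms (not from the paper): nu = ln(consumer real wage),
# and a fixed fall-back utility NU0. All parameter values are hypothetical.
F_POWER = 0.5          # f: relative bargaining power of the union
Z = 1.0                # average labour productivity
WEDGE = 1.2            # P_q (1 + tau1)
NU0 = math.log(0.5)    # fall-back utility, taken as given by the firm

def log_nash_product(wq):
    """Log of the Nash-product, dropping the constant term (1 - f) ln Y."""
    union_gain = math.log(wq / WEDGE) - NU0
    profit_share = 1.0 - wq / Z
    return F_POWER * math.log(union_gain) + (1.0 - F_POWER) * math.log(profit_share)

def foc_residual(wq):
    """Left side minus right side of (17), with d nu / d wq = 1 / wq."""
    union_gain = math.log(wq / WEDGE) - NU0
    lhs = F_POWER * (1.0 / wq) / union_gain
    rhs = (1.0 - F_POWER) * (1.0 / Z) / (1.0 - wq / Z)
    return lhs - rhs

def maximize(func, lo, hi, iterations=200):
    """Ternary search for the maximum of a concave function on (lo, hi)."""
    for _ in range(iterations):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if func(m1) < func(m2):
            lo = m1
        else:
            hi = m2
    return 0.5 * (lo + hi)

eps = 1e-9
lower = WEDGE * math.exp(NU0) + eps   # below this the union gains nothing
upper = Z - eps                       # above this profits are negative
wq_star = maximize(log_nash_product, lower, upper)
```

The bargained producer real wage `wq_star` lies strictly between the wage at which the union is indifferent to conflict and the wage that exhausts profits, and `foc_residual(wq_star)` is numerically zero. A ternary search is used only to keep the sketch free of external libraries; any scalar optimizer would do.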

A log-linearization of (18), using the property of homogeneity of degree 1 in prices, gives:25

$$w = m_w + q + (1 - \delta_{12})(p - q) + \delta_{13} z - \delta_{15} u + \delta_{16} \tau_1, \tag{19}$$

with $0 \le \delta_{12} \le 1$, $0 < \delta_{13} \le 1$, $\delta_{15} \ge 0$, $0 \le \delta_{16} \le 1$.

Equation (19) is a general proposition about the bargaining outcome and its

determinants, and can serve as a starting point for describing wage formation in any

sector or level of aggregation of the economy.

Equation (15) already represents the normal price setting rule, and upon log-linearization we have

$$q = m_q + (w + \tau_1 - z). \tag{20}$$
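The step from (15) to (20) can be spelled out as follows. This is a sketch under two assumptions that are not stated explicitly at this point in the text: lower-case letters denote natural logarithms, and the payroll tax term is approximated by $\ln(1 + \tau_1) \approx \tau_1$ for a small tax rate.

```latex
\begin{align*}
Q &= \frac{El_Q Y}{El_Q Y - 1}\,\frac{W (1 + \tau_1)}{Z} \\
\ln Q &= \ln\frac{El_Q Y}{El_Q Y - 1} + \ln W + \ln(1 + \tau_1) - \ln Z \\
q &\approx m_q + (w + \tau_1 - z),
\qquad m_q = \ln\frac{El_Q Y}{El_Q Y - 1} .
\end{align*}
```

On this reading $m_q$ is the log of the markup, which is constant as long as the demand elasticity is constant.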

24 They also make the point that such a demand function represents an internal inconsistency, since the firms are price makers, not price takers, in the model.

25 Ideally, from the definition of the wedge, $(1 - \delta_{12}) = \delta_{16}$. However, in light of the entirely different time series properties of the empirical counterparts to $p - q$ and $\tau_1$, this restriction is not imposed. The role of the wedge as a source of wage pressure is contested in the literature. In part, this is because theory fails to produce general implications about the wedge coefficient $\omega$: it can be shown to depend on the exact specification of the utility functions $\nu$ and $\nu_0$, see e.g., Rødseth (2000, Chapter 8.5) for an exposition.


6.2 How does the theory apply in a macro model?

At this point we must ask how the two theoretical relationships can be translated into hypothesized relationships holding between actual time series. The answer is that we interpret (19) and (20) as two cointegration relationships. Equation (19) is then a hypothesis about how the wage rate of the total economy, when measured by an aggregated wage index $w_t$ (where $t$ denotes quarters), cointegrates with the empirical counterparts (time series) of $p - q$, $z$, $u$ and $\tau_1$. To be more precise, we assume that $w_t$, $p_t$, $q_t$ and $z_t$ are I(1), and cointegrated. $u_t$ and $\tau_{1t}$ are I(0) by assumption, and affect the mean of the cointegration relationship.26
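The cointegration idea can be illustrated with a small simulation: series that each follow a stochastic trend, but whose hypothesized linear combination is stationary. The sketch uses a deliberately simplified version of the wage relationship (no wedge, unemployment or tax terms), and the parameter values, the AR(1) coefficient and the function names are all hypothetical. The slope from regressing the first difference of a series on its lagged level is used as an informal indicator only; it is not a formal unit-root test with proper critical values.

```python
import random

def simulate(T=2000, seed=0):
    """Simulate a wage series sharing stochastic trends with q and z (hypothetical parameters)."""
    rng = random.Random(seed)
    m_w, delta13, phi = 0.5, 0.8, 0.5
    q = z = e = 0.0
    wages, ecm = [], []
    for _ in range(T):
        q += rng.gauss(0.0, 1.0)             # I(1): random walk
        z += rng.gauss(0.0, 1.0)             # I(1): random walk
        e = phi * e + rng.gauss(0.0, 0.5)    # I(0): stationary AR(1)
        w = m_w + q + delta13 * z + e
        wages.append(w)
        ecm.append(w - m_w - q - delta13 * z)  # deviation from the long-run relationship
    return wages, ecm

def df_slope(x):
    """OLS slope from regressing the first difference of x on its lagged level (with intercept)."""
    lagged = x[:-1]
    diff = [b - a for a, b in zip(x[:-1], x[1:])]
    mean_l = sum(lagged) / len(lagged)
    mean_d = sum(diff) / len(diff)
    cov = sum((l - mean_l) * (d - mean_d) for l, d in zip(lagged, diff))
    var = sum((l - mean_l) ** 2 for l in lagged)
    return cov / var

wages, ecm = simulate()
slope_level = df_slope(wages)   # close to zero for an I(1) series
slope_ecm = df_slope(ecm)       # clearly negative (roughly phi - 1) for an I(0) series
```

The wage level shows almost no tendency to revert to its own past, while the deviation from the long-run relationship is pulled back strongly. In applied work one would use a proper cointegration test rather than this informal comparison.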

Hence, as a first step, we re-write (19) and (20) as two hypothesised cointegration relationships:

$$w_t = m_w + q_t + (1 - \delta_{12})(p_t - q_t) + \delta_{13} z_t - \delta_{15} u_t + \delta_{16} \tau_{1t} + ecm_{b,t} \tag{21}$$

$$q_t = m_q + (w_t + \tau_{1t} - z_t) + ecm_{f,t} \tag{22}$$

where the subscript $t$ for time period has been introduced, and where the two disturbances $ecm_{b,t}$ and $ecm_{f,t}$ are both I(0) if the theory is correct.

At this stage it is worth mentioning one interpretation of (21) and (22) which is frequently made in the literature, but which represents a wrong turn: By making use of the homogeneity property, the two equations can be written as two conflicting equations of the real wage $w_t - q_t$:

$$w^{b}_{q,t} = m_w + (1 - \delta_{12})(p_t - q_t) + \delta_{13} z_t - \delta_{15} u_t + \delta_{16} \tau_{1t} + ecm_{b,t} \tag{23}$$

$$w^{f}_{q,t} = -m_q - \tau_{1t} + z_t - ecm_{f,t} \tag{24}$$

Next, take the mathematical expectation on both sides of both equations. The additional requirement that $E[w^{f}_{q,t}] = E[w^{b}_{q,t}]$ then allows us to solve for the ‘natural rate’ of unemployment (or ‘wage-curve’ NAIRU) from this partial system (of wage and price setting). If $\delta_{12} = 1$ the wedge drops out and the ‘natural rate’, call it $u^{w}$, is seen to depend only on factors from the supply side of the economy. However, from the dynamic model below it is straightforward to establish the general result that $u_t \to u^{ss} \neq u^{w}$, where $u^{ss}$ denotes the rate of unemployment in a steady state of a dynamic wage and price model, see Kolsrud and Nymoen (1998). Thus, there is in general no correspondence between the wage curve NAIRU $u^{w}$ and the steady state of the dynamic wage-price system which we develop next.27
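The solution for the wage-curve NAIRU can be sketched as follows. This is an illustrative derivation starting from (21) and (22), not a formula given in the text. It assumes zero-mean disturbances and $\delta_{15} > 0$, and treats $p_t - q_t$, $z_t$ and $\tau_{1t}$ as given.

```latex
\begin{align*}
E[w_t - q_t] &= m_w + (1 - \delta_{12})(p_t - q_t) + \delta_{13} z_t
               - \delta_{15} u_t + \delta_{16} \tau_{1t}
               && \text{from (21)} \\
E[w_t - q_t] &= -m_q - \tau_{1t} + z_t
               && \text{from (22)} \\
u^{w} &= \frac{m_w + m_q + (1 - \delta_{12})(p_t - q_t)
               - (1 - \delta_{13}) z_t + (1 + \delta_{16}) \tau_{1t}}{\delta_{15}}
\end{align*}
```

With $\delta_{12} = 1$ the term in $p_t - q_t$ vanishes, so that $u^{w}$ depends only on the constants, productivity and the payroll tax rate.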

26 Real-world data on the rate of unemployment also have non-linear traits, such as shifts in mean, but this non-linearity is probably better represented by regime shift dummies than by a unit-root.