Adaptive Bitrate Video Streaming over HTTP in Mobile Wireless Networks

Haakon Riiser

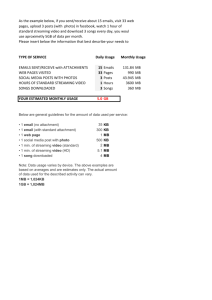
