MAT4701: Voluntary Assignment 2


Sindre Froyn

—————————————————————————————————

——————————————–

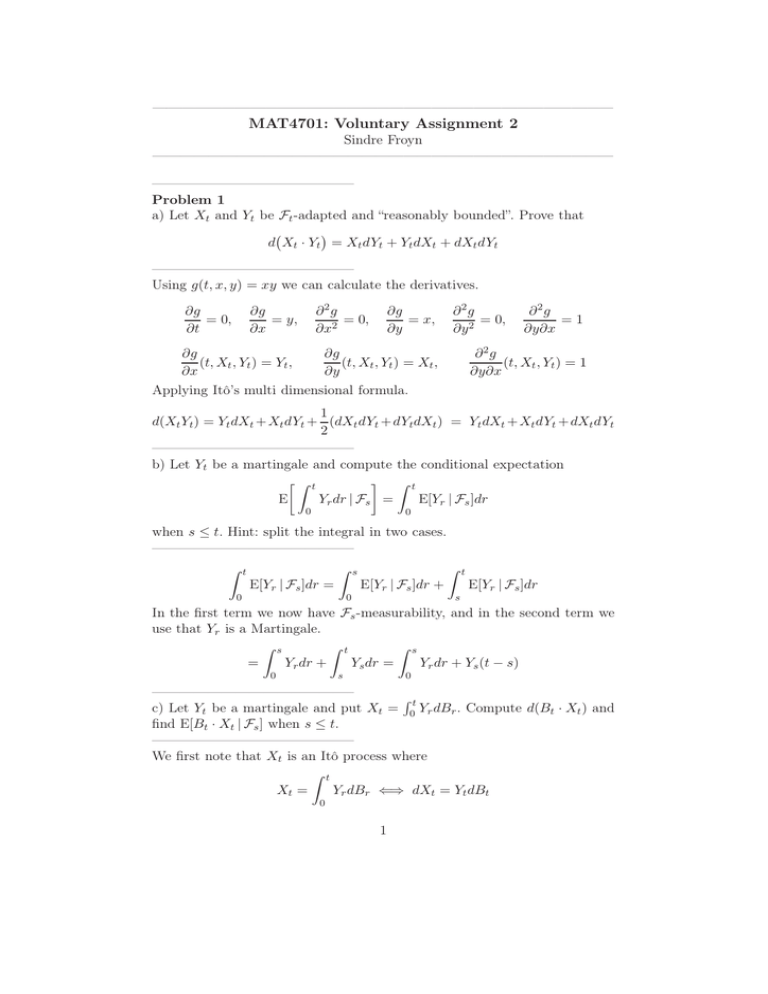

Problem 1

a) Let $X_t$ and $Y_t$ be $\mathcal{F}_t$-adapted and “reasonably bounded”. Prove that

$$d(X_t \cdot Y_t) = X_t\,dY_t + Y_t\,dX_t + dX_t\,dY_t$$

——————————————–

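Using $g(t, x, y) = xy$ we can calculate the derivatives.

$$\frac{\partial g}{\partial t} = 0, \quad \frac{\partial g}{\partial x} = y, \quad \frac{\partial g}{\partial y} = x, \quad \frac{\partial^2 g}{\partial x^2} = 0, \quad \frac{\partial^2 g}{\partial y^2} = 0, \quad \frac{\partial^2 g}{\partial y \partial x} = 1$$

$$\frac{\partial g}{\partial x}(t, X_t, Y_t) = Y_t, \quad \frac{\partial g}{\partial y}(t, X_t, Y_t) = X_t, \quad \frac{\partial^2 g}{\partial y \partial x}(t, X_t, Y_t) = 1$$

Applying the multidimensional Itô formula:

$$d(X_t Y_t) = Y_t\,dX_t + X_t\,dY_t + \frac{1}{2}(dX_t\,dY_t + dY_t\,dX_t) = Y_t\,dX_t + X_t\,dY_t + dX_t\,dY_t$$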
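The product rule has an exact discrete counterpart: for any increments, $(x + \Delta x)(y + \Delta y) - xy = x\,\Delta y + y\,\Delta x + \Delta x\,\Delta y$, so summing along a path reproduces $X_T Y_T - X_0 Y_0$ up to rounding. The following is a minimal Python sketch of that check on one simulated path. The dynamics chosen for $X$ and $Y$, the starting values, and the name `product_rule_gap` are illustrative assumptions, not part of the assignment.

```python
import math
import random


def product_rule_gap(n_steps=1000, t_end=1.0, seed=0):
    """Return |X_T*Y_T - X_0*Y_0 - sum(X dY + Y dX + dX dY)| on one path."""
    rng = random.Random(seed)
    dt = t_end / n_steps
    x, y = 1.0, 2.0            # arbitrary starting values (assumption)
    start_product = x * y
    total = 0.0
    for _ in range(n_steps):
        db1 = rng.gauss(0.0, math.sqrt(dt))
        db2 = rng.gauss(0.0, math.sqrt(dt))
        dx = 0.5 * x * dt + 0.3 * db1            # illustrative dynamics for X
        dy = -0.2 * dt + 0.4 * db1 + 0.1 * db2   # illustrative dynamics for Y
        # Discrete version of X dY + Y dX + dX dY.
        total += x * dy + y * dx + dx * dy
        x += dx
        y += dy
    return abs(x * y - start_product - total)


if __name__ == "__main__":
    print(product_rule_gap())
```

The gap is pure floating-point error, which shows that the cross term $dX_t\,dY_t$ cannot be dropped: without it the identity would fail already at the discrete level.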

——————————————–

b) Let $Y_t$ be a martingale and compute the conditional expectation

$$E\Big[\int_0^t Y_r\,dr \,\Big|\, \mathcal{F}_s\Big] = \int_0^t E[Y_r \mid \mathcal{F}_s]\,dr$$

when $s \le t$. Hint: split the integral in two cases.

——————————————–

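$$\int_0^t E[Y_r \mid \mathcal{F}_s]\,dr = \int_0^s E[Y_r \mid \mathcal{F}_s]\,dr + \int_s^t E[Y_r \mid \mathcal{F}_s]\,dr$$

In the first term $Y_r$ is $\mathcal{F}_s$-measurable (since $r \le s$), and in the second term we use that $Y_r$ is a martingale, so $E[Y_r \mid \mathcal{F}_s] = Y_s$ for $r \ge s$.

$$= \int_0^s Y_r\,dr + \int_s^t Y_s\,dr = \int_0^s Y_r\,dr + Y_s(t - s)$$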

——————————————–

c) Let $Y_t$ be a martingale and put $X_t = \int_0^t Y_r\,dB_r$. Compute $d(B_t \cdot X_t)$ and find $E[B_t \cdot X_t \mid \mathcal{F}_s]$ when $s \le t$.

——————————————–

We first note that $X_t$ is an Itô process where

$$X_t = \int_0^t Y_r\,dB_r \iff dX_t = Y_t\,dB_t$$

Using the result from a), we know that

$$d(B_t X_t) = X_t\,dB_t + B_t\,dX_t + dB_t\,dX_t$$

Substituting $Y_t\,dB_t$ for $dX_t$ and using that $(dB_t)^2 = dt$:

$$= X_t\,dB_t + B_t Y_t\,dB_t + Y_t\,dt = Y_t\,dt + (X_t + B_t Y_t)\,dB_t$$

Writing in integral form with $B_0 X_0 = 0$ since $B_0 = 0$:

$$B_t X_t = \int_0^t Y_r\,dr + \int_0^t (X_r + B_r Y_r)\,dB_r$$

Taking the conditional expectation:

$$E[B_t X_t \mid \mathcal{F}_s] = E\Big[\int_0^t Y_r\,dr \,\Big|\, \mathcal{F}_s\Big] + E\Big[\int_0^t (X_r + B_r Y_r)\,dB_r \,\Big|\, \mathcal{F}_s\Big]$$

The first term was calculated in b), and the second term is a pure stochastic integral, which means it is a martingale:

$$= \int_0^s Y_r\,dr + Y_s(t - s) + \int_0^s (X_r + B_r Y_r)\,dB_r$$

——————————————–

Problem 2

a) Let $\sigma(t, \omega)$ be any bounded $\mathcal{F}_t$-adapted stochastic process. Solve the initial value problem

$$dX_t = -2tX_t\,dt + \sigma(t, \omega)\,dB_t, \qquad X_0 = 1$$

and compute $E[X_t]$. $X_t$ and $B_t$ are 1-dimensional.

——————————————–

Introducing the integrating factor $e^{t^2}$.

$$d(e^{t^2} X_t) = 2te^{t^2} X_t\,dt + e^{t^2}\,dX_t = 2te^{t^2} X_t\,dt + e^{t^2}\big(-2tX_t\,dt + \sigma(t, \omega)\,dB_t\big)$$

$$= 2te^{t^2} X_t\,dt - 2te^{t^2} X_t\,dt + e^{t^2}\sigma(t, \omega)\,dB_t = e^{t^2}\sigma(t, \omega)\,dB_t$$

Writing in the integral form, and recalling that $X_0 = 1$:

$$e^{t^2} X_t = X_0 + \int_0^t e^{s^2}\sigma(s, \omega)\,dB_s$$

$$X_t = X_0 e^{-t^2} + \int_0^t e^{s^2 - t^2}\sigma(s, \omega)\,dB_s$$

$$X_t = e^{-t^2} + \int_0^t e^{s^2 - t^2}\sigma(s, \omega)\,dB_s$$

Taking the expectation:

$$E[X_t] = E\big[e^{-t^2}\big] + E\Big[\int_0^t e^{s^2 - t^2}\sigma(s, \omega)\,dB_s\Big] = e^{-t^2}$$

since $e^{-t^2}$ is a constant and the expectation of a stochastic integral with a bounded adapted integrand is 0.
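The result $E[X_t] = e^{-t^2}$ can be sanity-checked by simulation. The sketch below uses a plain Euler–Maruyama scheme and, as an assumption made only for illustration, the bounded adapted process $\sigma(t, \omega) = \sin(B_t)$; any other bounded adapted choice should give the same mean. The function name `mean_x`, the step count, and the number of paths are likewise hypothetical choices.

```python
import math
import random


def mean_x(t_end=1.0, n_steps=100, n_paths=4000, seed=1):
    """Monte Carlo estimate of E[X_t] for dX = -2 t X dt + sigma dB, X_0 = 1."""
    rng = random.Random(seed)
    dt = t_end / n_steps
    sqrt_dt = math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        x, b = 1.0, 0.0
        for k in range(n_steps):
            t = k * dt
            db = rng.gauss(0.0, sqrt_dt)
            sigma = math.sin(b)   # bounded and adapted: uses B only up to time t
            x += -2.0 * t * x * dt + sigma * db
            b += db
        total += x
    return total / n_paths


if __name__ == "__main__":
    print(mean_x(), math.exp(-1.0))
```

The estimate should land close to $e^{-1}$ for $t = 1$, up to Monte Carlo noise and a small time-discretization bias.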

——————————————–

b) Consider the 1-dimensional initial value problem

$$dX_t = b(t, X_t)\,dt + \sigma(t, X_t)\,dB_t, \qquad X_0 = x$$

Spell out in detail what conditions on $b$ and $\sigma$ are needed for a unique solution to exist.

——————————————–

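As per Theorem 5.2.1 from the text book, there must be a constant $C$ such that, for all $x$, $y$ and all $t$ in the time interval considered,

$$|b(t, x) - b(t, y)| \le C|x - y|$$

$$|\sigma(t, x) - \sigma(t, y)| \le C|x - y|$$

$$|b(t, x)| \le C(1 + |x|)$$

$$|\sigma(t, x)| \le C(1 + |x|)$$

Both $b$ and $\sigma$ need to be Lipschitz continuous in $x$ and they can have at most linear growth. The uniqueness depends on the Lipschitz property. Also, the initial value must satisfy $E[|x|^2] < \infty$.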

——————————————–

In the rest of this problem we will assume that $b : [0, \infty) \to \mathbb{R}$ is a continuous function (note: no dependence on $\omega$ in $b$). We further assume that the coefficients in the initial value problem

$$dX_t = b(t) \cdot X_t\,dt + \sigma(X_t)\,dB_t, \qquad X_0 = x$$

satisfy the conditions spelled out in b).

c) Find $E^x[X_t]$.

——————————————–

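We begin by solving the SDE, and do so by multiplying with the integrating factor $e^{-B(t)}$, where $B'(t) = b(t)$, or equivalently $B(t) = \int_0^t b(s)\,ds$. (Here $B(t)$ is this deterministic integral, not the Brownian motion $B_t$.)

$$d\big(e^{-B(t)} X_t\big) = -b(t)e^{-B(t)} X_t\,dt + e^{-B(t)}\,dX_t$$

$$= -b(t)e^{-B(t)} X_t\,dt + e^{-B(t)}\big(b(t)X_t\,dt + \sigma(X_t)\,dB_t\big) = e^{-B(t)}\sigma(X_t)\,dB_t$$

In integral form, using $B(0) = 0$:

$$e^{-B(t)} X_t = X_0 + \int_0^t e^{-B(s)}\sigma(X_s)\,dB_s$$

$$X_t = xe^{B(t)} + \int_0^t e^{B(t) - B(s)}\sigma(X_s)\,dB_s$$

Taking the expectation, the stochastic integral has mean zero, so

$$E^x[X_t] = xe^{B(t)}$$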

——————————————–

d) Let $h > 0$ and assume that $X_h(\omega)$ is known. Compute $E^x[X_{t+h} \mid \mathcal{F}_h]$.

——————————————–

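By the Markov property, and using that $f(x) = x$, we can restart the process at time $h$ from the known value $X_h$. Because the drift $b(t)$ depends on time, the restarted process runs with $b$ on the interval $[h, t+h]$, so the time-homogeneous identity $E^x[f(X_{t+h}) \mid \mathcal{F}_h] = E^{X_h}[f(X_t)]$ has to be applied with the shifted coefficient. Repeating the computation in c) on $[h, t+h]$,

$$X_{t+h} = e^{B(t+h) - B(h)} X_h + \int_h^{t+h} e^{B(t+h) - B(s)}\sigma(X_s)\,dB_s,$$

and the conditional expectation of the stochastic integral given $\mathcal{F}_h$ is 0, so

$$E^x[X_{t+h} \mid \mathcal{F}_h] = X_h e^{B(t+h) - B(h)} = X_h \exp\Big(\int_h^{t+h} b(s)\,ds\Big).$$

When $b$ is constant this reduces to $X_h e^{B(t)}$, i.e. $E^x[X_t]$ from c) evaluated at $x = X_h$.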

——————————————–

e) Prove that $Y_t = X_t \cdot e^{-\int_0^t b(s)\,ds}$ is a martingale.

——————————————–

We'll use $B(t) = \int_0^t b(s)\,ds$, so

$$Y_t = X_t e^{-B(t)} = x + \int_0^t e^{-B(s)}\sigma(X_s)\,dB_s.$$

There are three properties we must verify. First, $Y_t$ must be $\mathcal{F}_t$-measurable. This is trivial as we don't use information from the future. Secondly, $Y_t$ must be integrable, $E[|Y_t|] < \infty$, which follows from the assumptions we have on $\sigma(X_s)$ given in this exercise. Thirdly, $E[Y_t \mid \mathcal{F}_u] = Y_u$ for $u \le t$. This point needs some special consideration.

$$E[Y_t \mid \mathcal{F}_u] = E\Big[x + \int_0^t e^{-B(s)}\sigma(X_s)\,dB_s \,\Big|\, \mathcal{F}_u\Big]$$

$$= x + E\Big[\int_0^u e^{-B(s)}\sigma(X_s)\,dB_s \,\Big|\, \mathcal{F}_u\Big] + E\Big[\int_u^t e^{-B(s)}\sigma(X_s)\,dB_s \,\Big|\, \mathcal{F}_u\Big]$$

The first integral is $\mathcal{F}_u$-measurable, and the second is an Itô integral over $[u, t]$, whose conditional expectation given $\mathcal{F}_u$ is 0, so

$$= x + \int_0^u e^{-B(s)}\sigma(X_s)\,dB_s = Y_u.$$

The three properties are satisfied, and $Y_t$ is a martingale.

——————————————–

Problem 3

Consider the initial value problem

$$dX_t = rX_t\,dt + \sqrt{|X_t|}\,dB_t, \qquad X_0 = x$$

The coefficients in this equation do not satisfy the usual conditions for a unique solution to exist. Which condition fails? Prove your statement.

——————————————–

The stochastic part $\sigma(X_t) = \sqrt{|X_t|}$ does not satisfy the Lipschitz property. To show this, we first see what the property would require (as in equation 7.1.5 in the text book).

$$|\sigma(x) - \sigma(y)| \le C|x - y|$$

$$\big|\sqrt{|x|} - \sqrt{|y|}\big| \le C|x - y|.$$

Take $x, y \ge 0$ with $x \ne y$. If we define $a = \sqrt{|x|} \ge 0$ and $b = \sqrt{|y|} \ge 0$, we get $a^2 = x \ge 0$ and $b^2 = y \ge 0$, and

$$|a - b| \le C|a^2 - b^2| = C|(a + b)(a - b)| = C(a + b)|a - b| \implies \frac{1}{C} \le a + b$$

However, for any fixed $C$ we can choose $a$ and $b$ small enough that $1 > C(a + b)$, that is

$$\frac{1}{C} > a + b,$$

so the Lipschitz condition fails near $x = 0$, and we can't guarantee uniqueness.
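The failure near zero is easy to see numerically: with $y = 0$ the difference quotient $|\sqrt{|x|} - \sqrt{|y|}| / |x - y|$ equals $x^{-1/2}$, which exceeds any fixed constant as $x \to 0$. The following Python sketch tabulates the quotient; the helper name `lipschitz_ratio` and the sample points are illustrative assumptions.

```python
import math


def lipschitz_ratio(x, y):
    """Return |sqrt|x| - sqrt|y|| / |x - y| for x != y."""
    return abs(math.sqrt(abs(x)) - math.sqrt(abs(y))) / abs(x - y)


# Difference quotients against y = 0 for x = 1, 1e-2, 1e-4, ..., 1e-10.
ratios = [lipschitz_ratio(10.0 ** -k, 0.0) for k in range(0, 12, 2)]

if __name__ == "__main__":
    for k, ratio in zip(range(0, 12, 2), ratios):
        print(f"x = 1e-{k}: ratio = {ratio:.1f}")
```

The quotients grow without bound, so no single constant $C$ can work on a neighbourhood of the origin.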

——————————————–

Problem 4

Let $B_1$ and $B_2$ be two independent Brownian motions, and let $\tau_D$ be the first exit time of the diffusion $dX_t = (dB_1(t), dB_2(t))$ from the unit ball $D = \{x = (x_1, x_2) \mid x_1^2 + x_2^2 < 1\}$. Compute $E^x[\tau_D]$ when starting from a point inside $D$. Hint: Use Dynkin's formula on $f(x_1, x_2) = x_1^2 + x_2^2$.

——————————————–

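Dynkin's formula states that

$$E^x[f(X_\tau)] = f(x) + E^x\Big[\int_0^\tau Af(X_s)\,ds\Big].$$

We begin by finding the generator $Af(X_s)$, which is given by

$$Af(x) = \sum_i b_i(x)\frac{\partial f}{\partial x_i} + \frac{1}{2}\sum_{i,j}(\sigma\sigma^T)_{i,j}(x)\frac{\partial^2 f}{\partial x_i \partial x_j}.$$

Calculating the partial derivatives for $i = 1, 2$:

$$\frac{\partial f}{\partial x_i} = 2x_i, \quad \frac{\partial^2 f}{\partial x_i^2} = 2, \quad \frac{\partial^2 f}{\partial x_i \partial x_j} = 0 \ (i \ne j).$$

From the diffusion $dX_t$ we see that $b_i = 0$ and $\sigma_i = 1$ for $i = 1, 2$, so all the first derivatives are cancelled and $\sigma_i^T = \sigma_i$. This results in

$$Af(x) = \frac{1}{2}\sum_i \sigma_i^2(x)\frac{\partial^2 f}{\partial x_i^2} = \frac{1}{2}(1)(2) + \frac{1}{2}(1)(2) = 2$$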

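So $Af(X_s) = 2$. Using $\tau = \sigma_k = \min(k, \tau_D)$ and the function $f$ we have, when $(x_1, x_2)$ is the starting point in $D$,

$$E^x\big[B_1^2(\sigma_k) + B_2^2(\sigma_k)\big] = x_1^2 + x_2^2 + 2E^x\Big[\int_0^{\sigma_k} ds\Big] = x_1^2 + x_2^2 + 2E^x[\sigma_k]$$

Up to time $\sigma_k$ the process stays in the closed unit ball, so the left-hand side is at most 1 and

$$E^x[\sigma_k] \le \frac{1}{2}\big(1 - (x_1^2 + x_2^2)\big)$$

When $k \to \infty$ we get $\tau_D = \lim \sigma_k < \infty$ almost surely. At time $\tau_D$ the process is on the unit circle, so the left-hand side tends to 1 and

$$E^x[\tau_D] = \frac{1}{2}\big(1 - (x_1^2 + x_2^2)\big)$$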
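The formula $E^x[\tau_D] = \frac{1}{2}(1 - |x|^2)$ can be compared with a direct simulation of two independent Brownian motions. The sketch below is a rough check only: it monitors the path on a time grid, so exits between grid points are detected late and the estimate is biased slightly upward. The function name `mean_exit_time`, the step size, and the path count are assumptions made for illustration.

```python
import math
import random


def mean_exit_time(x1=0.0, x2=0.0, dt=1e-3, n_paths=2000, seed=2):
    """Monte Carlo estimate of the mean exit time of 2D Brownian motion from the unit disc."""
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        a, b, t = x1, x2, 0.0
        # Step both coordinates until the path leaves the open unit disc.
        while a * a + b * b < 1.0:
            a += rng.gauss(0.0, sqrt_dt)
            b += rng.gauss(0.0, sqrt_dt)
            t += dt
        total += t
    return total / n_paths


if __name__ == "__main__":
    print(mean_exit_time(), 0.5 * (1.0 - 0.0))
```

Starting from the origin the estimate should be near $\frac{1}{2}$; starting closer to the boundary it should shrink like $\frac{1}{2}(1 - x_1^2 - x_2^2)$.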

——————————————–

Problem 5

Let $dX_t = 3\,dt + 2\,dB_t$, $X_s = x$, $t \ge s$.

a) Write down $Lf(s, x)$ for the extended process $Y_t = (t, X_t)$.

——————————————–

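By definition, for some $f \in C^2$,

$$L_X f(x) = \sum_i b_i(x)\frac{\partial f}{\partial x_i} + \frac{1}{2}\sum_{i,j}(\sigma\sigma^T)_{i,j}(x)\frac{\partial^2 f}{\partial x_i \partial x_j}$$

$$= b(x)f'(x) + \frac{1}{2}\sigma^2(x)f''(x) = 3f'(x) + 2f''(x).$$

For the extended process,

$$L_Y f(s, x) = \frac{\partial f}{\partial s} + 3\frac{\partial f}{\partial x} + 2\frac{\partial^2 f}{\partial x^2}.$$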

——————————————–

b) Consider the optimal stopping problem

$$\Phi(s, x) = \sup_{\tau \ge s} E^{(s,x)}\big[e^{-2\tau} X_\tau\big].$$

Assume that this problem has a continuation region

$$D = \{(s, x) \mid -\infty < x < x_0\}$$

and use the verification theorem to determine $x_0$ and $\Phi(s, x)$.

——————————————–

By the verification theorem we have the requirement $L\phi + f = L\phi = 0$ in $D$ (since $f$ is 0 in this case). From a),

$$L\phi(s, x) = \frac{\partial \phi}{\partial s} + 3\frac{\partial \phi}{\partial x} + 2\frac{\partial^2 \phi}{\partial x^2} = 0$$

If we assume a solution of the form $\phi(s, x) = e^{-2s}\psi(x)$ we get:

$$L\phi(s, x) = -2e^{-2s}\psi(x) + 3e^{-2s}\psi'(x) + 2e^{-2s}\psi''(x) = 0$$

$$\implies -2\psi(x) + 3\psi'(x) + 2\psi''(x) = 0$$

We have a standard second-order linear differential equation. Using the quadratic formula on the characteristic equation $2r^2 + 3r - 2 = 0$ we find the roots $r_1 = \frac{1}{2}$ and $r_2 = -2$ and get

$$\psi(x) = C_1 e^{\frac{x}{2}} + C_2 e^{-2x}.$$

For $x \in D$ we see that as $x \to -\infty$, $C_2 e^{-2x}$ diverges unless $C_2 = 0$, whereas we require this term to vanish, so $C_2 = 0$. Since we need continuity at the border of $D$, we have the additional requirement $\psi(x_0) = x_0$.

$$\psi(x_0) = C_1 e^{\frac{x_0}{2}} = x_0 \implies C_1 = x_0 e^{-\frac{x_0}{2}}$$

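Since $\phi \in C^1(G)$ in the verification theorem we can determine $x_0$.

$$\phi(s, x) = \begin{cases} e^{-2s} x_0 e^{-\frac{x_0}{2}} e^{\frac{x}{2}} & \text{if } x \le x_0 \\ e^{-2s} x & \text{if } x > x_0 \end{cases}$$

$$\frac{\partial \phi(s, x)}{\partial x} = \begin{cases} e^{-2s} \frac{x_0}{2} e^{-\frac{x_0}{2}} e^{\frac{x}{2}} & \text{if } x \le x_0 \\ e^{-2s} & \text{if } x > x_0 \end{cases}$$

The two expressions for the derivative must agree at the point $x = x_0$ on the border of $D$, which determines $x_0$.

$$e^{-2s}\frac{x_0}{2} e^{-\frac{x_0}{2}} e^{\frac{x_0}{2}} = e^{-2s} \implies x_0 = 2$$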

By the verification theorem

$$\Phi(s, x) = 2e^{\frac{x}{2} - 2s - 1}$$

for $x < x_0 = 2$, while $\Phi(s, x) = e^{-2s}x$ for $x \ge 2$.
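The candidate from b) can be checked mechanically. With $\psi(x) = 2e^{x/2 - 1}$, the sketch below evaluates the residual of $-2\psi + 3\psi' + 2\psi'' = 0$ and the value-matching and smooth-fit conditions at $x_0 = 2$. The derivatives are entered by hand, and the function names are illustrative assumptions.

```python
import math

X0 = 2.0  # threshold found in b)


def psi(x):
    """psi(x) = 2 * exp(x/2 - 1), the x-part of the value function below X0."""
    return 2.0 * math.exp(x / 2.0 - 1.0)


def d_psi(x):
    """First derivative of psi."""
    return math.exp(x / 2.0 - 1.0)


def dd_psi(x):
    """Second derivative of psi."""
    return 0.5 * math.exp(x / 2.0 - 1.0)


def ode_residual(x):
    """Left-hand side of -2*psi + 3*psi' + 2*psi'' = 0."""
    return -2.0 * psi(x) + 3.0 * d_psi(x) + 2.0 * dd_psi(x)


if __name__ == "__main__":
    print(ode_residual(0.0), psi(X0), d_psi(X0))
```

Value matching corresponds to `psi(X0)` equalling $x_0$ and smooth fit to `d_psi(X0)` equalling 1, the slope of the reward $x$. Since $\psi$ is convex and tangent to the line $y = x$ at $x_0$, it also dominates the reward on the continuation region.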

——————————————–

c) Solve the problem in b) again using $dX_t = 2\,dB_t$, $X_s = x$, $t \ge s$. Prove that the exit region (the complement of $D$) is bigger than in b), and try to give an intuitive explanation for this.

——————————————–

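For this process the drift coefficient $b$ is 0, so the first-order $x$-derivative disappears from the generator.

$$L\phi = \frac{\partial \phi}{\partial s} + 2\frac{\partial^2 \phi}{\partial x^2}$$

Assuming $\phi(s, x) = e^{-2s}\psi(x)$:

$$-2e^{-2s}\psi(x) + 2e^{-2s}\psi''(x) = 0 \implies \psi''(x) - \psi(x) = 0$$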

The characteristic equation $r^2 - 1 = 0$ has the solutions $r_1 = 1$ and $r_2 = -1$, so we have

$$\psi(x) = C_1 e^x + C_2 e^{-x} = C_1 e^x$$

since $C_2 = 0$ because the second term diverges when $x \to -\infty$. By continuity we require $\psi(x_0) = C_1 e^{x_0} = x_0$, so $C_1 = x_0 e^{-x_0}$.

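We have established that

$$\phi(s, x) = \begin{cases} e^{-2s} x_0 e^{-x_0} e^x & \text{if } x \le x_0 \\ e^{-2s} x & \text{if } x > x_0 \end{cases}$$

We differentiate and set the two expressions equal at the transitional point $x_0$ so we can determine what it is.

$$\frac{\partial \phi(s, x)}{\partial x} = \begin{cases} e^{-2s} x_0 e^{-x_0} e^x & \text{if } x \le x_0 \\ e^{-2s} & \text{if } x > x_0 \end{cases}$$

$$e^{-2s} x_0 e^{-x_0} e^{x_0} = e^{-2s} \implies x_0 = 1$$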

By the verification theorem $\Phi(s, x) = e^{x - 2s - 1}$ for $x < 1$ (and $\Phi(s, x) = e^{-2s}x$ for $x \ge 1$). We have proved that the exit region with $x_0 = 1$, which is $[1, \infty)$, is bigger than the exit region from b), which is $[2, \infty)$.

The difference between the exit regions depends on the term $3\,dt$, which is only present in the process from b). This term provides us with a positive drift that counters the possible negative effects of the stochastic term. Because of this, it pays to wait longer before stopping in b): the process is stopped only once it reaches the higher level $x_0 = 2$.
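The comparison between b) and c) can be made systematic. For $dX_t = \mu\,dt + \sigma\,dB_t$ with reward $e^{-\rho\tau}X_\tau$, the same ansatz gives $\psi(x) = Ce^{rx}$ with $r$ the positive root of $\frac{1}{2}\sigma^2 r^2 + \mu r - \rho = 0$, and value matching plus smooth fit give $x_0 = 1/r$. The Python sketch below evaluates this, assuming $\sigma > 0$ and $\rho > 0$; the parameter names `mu`, `sigma`, `rho` and the function name `stopping_threshold` are hypothetical, and only the cases $\mu = 3$ and $\mu = 0$ with $\sigma = \rho = 2$ come from the assignment.

```python
import math


def stopping_threshold(mu, sigma=2.0, rho=2.0):
    """Smooth-fit threshold x0 = 1/r for dX = mu dt + sigma dB, reward exp(-rho*tau) * X_tau."""
    half_var = 0.5 * sigma ** 2
    # Positive root of half_var * r^2 + mu * r - rho = 0.
    r = (-mu + math.sqrt(mu ** 2 + 4.0 * half_var * rho)) / (2.0 * half_var)
    return 1.0 / r


if __name__ == "__main__":
    for mu in (0.0, 1.0, 2.0, 3.0):
        print(mu, stopping_threshold(mu))
```

The threshold increases with the drift, which matches the intuition above: the stronger the upward drift, the longer it is worth waiting, and the smaller the exit region becomes.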
