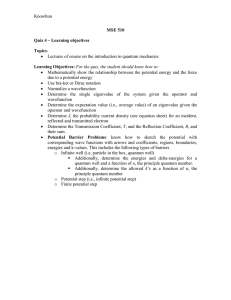


A system for variational Monte Carlo calculations

Project 1, FYS4410, Computational Physics 2,

spring 2009

Kyrre Ness Sjøbæk

April 14, 2009

Abstract

In this document I describe a program for calculating the energy of various atoms using Metropolis-Hastings Monte-Carlo, given an electron many-body wavefunction. I further describe how it may easily be generalized to any quantum system where the Hamiltonian acts only in position space and we have an even number of fermions.

I also describe methods for finding the wavefunction describing the ground state of the system. Given this, we can calculate properties such as the ground state energy to high accuracy, as well as the onebody or charge density.

Finally, I describe a technique for estimating the calculation error (blocking), as well as how to automate this calculation.

Contents

1 Introduction
1.1 Many-body quantum mechanics
1.1.1 Choice of test wavefunctions
1.1.2 Onebody- and charge density
1.2 Monte-Carlo integration
1.2.1 Importance sampling
1.2.2 Metropolis algorithm
1.2.3 Metropolis-Hastings algorithm
1.2.4 Use of Monte Carlo integration in Quantum physics
1.2.5 Error estimation through blocking
1.3 Variation of parameters
1.3.1 Sample and plot
1.3.2 Outline of the Conjugate Gradient Method (CGM)

2 Program
2.1 Basic structure
2.2 Wavefunctions
2.2.1 Stateless
2.2.2 Statefull
2.2.3 Support for Conjugate Gradient Method
2.2.4 Other features
2.3 Algorithms
2.3.1 Brute-force Metropolis
2.3.2 Importance sampling Metropolis
2.3.3 Blocking
2.3.4 CGM
2.4 Runner scripts
2.4.1 Grid sampling programs (vmc_*.cpp)
2.4.2 CGM (paramsearch_conjgrad.cpp)
2.4.3 Misc.
2.5 Parallelization
2.6 Ideas for improvement
2.6.1 Performance improvements
2.6.2 Better program structure

3 Results
3.1 Minimum of $\bar{E}[\vec{\alpha}]$
3.1.1 Helium
3.1.2 Beryllium
3.1.3 Neon
3.2 Value of the energy at the minimum
3.2.1 Blocking
3.2.2 Helium
3.2.3 Beryllium
3.2.4 Neon
3.3 Charge density profiles
3.3.1 Helium
3.3.2 Beryllium

4 Appendix A: Header files

1 Introduction

The goal of our calculation is to find an approximation to the exact solution of Schrödinger's equation (1). We work in the position basis, so the state vector ψ is represented by a wavefunction, and the Hamiltonian Ĥ is likewise written in the position basis.

$$\hat{H}\psi = E\psi \tag{1}$$

Equation (1) is an eigenvalue equation. The eigenvalue E belonging to the eigenfunction ψ of the Hamiltonian is the energy of the system, given that it is in the state ψ.

The solution we are after is called the ground state, $\psi_{gs}$, which has the energy $E_{gs}$. This is the solution of (1) with the lowest possible eigenvalue E, and thus the lowest possible energy. This state is interesting because the dynamics of the quantum system will probably take it toward this state via interactions (emission of photons etc.).

We will not try to solve (1) exactly, but instead try to find the best possible estimate for the wavefunction $\psi_{gs}$. To do this we make an ansatz wavefunction with tunable parameters, which we vary in order to find the lowest possible energy. This is called the variational method¹.

The method is then to calculate the energy for different sets of variational parameters, in order to find the set of parameters that yields the lowest energy. This is equivalent to sampling different points in the Hilbert space, as our parameter space is a subset of the full Hilbert space. It also means that by varying the set of parameters we pick up different energy eigenfunctions, as our test wavefunction² $\psi_T(\{\vec{r}\}; \vec{\alpha})$ may be written as a weighted sum over energy eigenstates $\psi_i$:

$$\psi_T = \sum_{i=gs}^{\infty} C_i \psi_i$$

As our «convergence criterion» is that we find a minimum in the energy as a function of the parameters³, this will quite reliably give us the ground state energy (given that we have a good ansatz wavefunction), but the wavefunction might not be as well described. The reason is that several eigenfunctions contribute (unless we have the true ground-state wavefunction), which could affect, for example, the shape of the onebody density (see section 1.1.2) and other observables. This is especially bad if we have a degenerate or nearly degenerate ground state.

¹ See for example D. J. Griffiths, 'Introduction to Quantum Mechanics' (Pearson 2005, 2nd ed., international edition), Chapter 7, 'The variational principle'.

² I have defined $\{\vec{r}\}$ as the set of single-particle positions, and $\vec{\alpha}$ is the set of variational parameters.

³ In other words, $\delta E = E(\delta\vec{\alpha}) \to 0$.

1.1 Many-body quantum mechanics

As most interesting physical systems are composed of several interacting particles, we need a formalism that accommodates this. Further, quantum particles have non-classical properties, such as being indistinguishable and being either fermions or bosons.

We specialize to fermions, as electrons are of this type (being spin 1/2). Fermions cannot share the same quantum state, and they fulfil the anti-symmetrisation requirement

$$\hat{P}\,\psi(\vec{r}_1, s_1; \ldots; \vec{r}_i, s_i; \ldots; \vec{r}_j, s_j; \ldots; \vec{r}_n, s_n) = \psi(\vec{r}_1, s_1; \ldots; \vec{r}_j, s_j; \ldots; \vec{r}_i, s_i; \ldots; \vec{r}_n, s_n) = -\psi(\vec{r}_1, s_1; \ldots; \vec{r}_i, s_i; \ldots; \vec{r}_j, s_j; \ldots; \vec{r}_n, s_n) \tag{2}$$

where $\hat{P}$ is an exchange operator interchanging $\vec{r}_i, s_i$ and $\vec{r}_j, s_j$, or more generally interchanging the quantum numbers of single-particle wavefunction i with those of single-particle wavefunction j⁴.

One way of writing a manybody wavefunction fulfilling (2) is by using a Slater determinant, as shown in (3).

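$$\psi(\vec{r}_1, s_1; \vec{r}_2, s_2; \cdots) = |\phi| = \begin{vmatrix} \varphi_1(\vec{r}_1, s_1) & \varphi_2(\vec{r}_1, s_1) & \cdots & \varphi_n(\vec{r}_1, s_1) \\ \varphi_1(\vec{r}_2, s_2) & \varphi_2(\vec{r}_2, s_2) & & \vdots \\ \vdots & & \ddots & \vdots \\ \varphi_1(\vec{r}_n, s_n) & \varphi_2(\vec{r}_n, s_n) & \cdots & \varphi_n(\vec{r}_n, s_n) \end{vmatrix} \tag{3}$$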

Exchange of any two coordinates is equivalent to interchanging two rows in

(3), and exchange of any two quantum numbers is equivalent to interchange

of two columns. This yields a sign change in the determinant (and thus the

wavefunction), which is exactly what we want for fermions.
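The sign change can be checked numerically. The following is a minimal sketch, not taken from the program described in this report: it assumes two same-spin particles occupying the spatial 1s and 2s orbitals listed in section 1.1.1, and the names `determinant`, `slater`, `phi_1s` and `phi_2s` are hypothetical helpers introduced only for illustration.

```python
import math

def phi_1s(r, alpha=2.0):
    """Hydrogen-like 1s orbital exp(-alpha*|r|), not normalized."""
    return math.exp(-alpha * math.sqrt(sum(c * c for c in r)))

def phi_2s(r, alpha=2.0):
    """Hydrogen-like 2s orbital (1 - alpha*r/2) exp(-alpha*r/2), not normalized."""
    d = math.sqrt(sum(c * c for c in r))
    return (1.0 - 0.5 * alpha * d) * math.exp(-0.5 * alpha * d)

def determinant(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(a[i][col]))
        if a[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for i in range(col + 1, n):
            factor = a[i][col] / a[col][col]
            for j in range(col, n):
                a[i][j] -= factor * a[col][j]
    return det

def slater(positions, orbitals):
    """Row i holds every orbital evaluated at the position of particle i."""
    return determinant([[phi(r) for phi in orbitals] for r in positions])

orbitals = [phi_1s, phi_2s]
r1, r2 = (0.3, 0.1, -0.2), (-0.5, 0.4, 0.9)
psi = slater([r1, r2], orbitals)
psi_swapped = slater([r2, r1], orbitals)  # exchange the two particles
print(psi, psi_swapped)
```

Exchanging the two positions swaps two rows, so the two printed values differ only in sign. Placing both particles at the same position makes two rows identical and the determinant vanish, which is the Pauli principle for same-spin particles.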

However, this means that for an N-body wavefunction we have to keep track of an N × N Slater matrix, which is quite heavy for a big system. Luckily, if the Hamiltonian is spin-independent and N is even, the Slater determinant of the wavefunction used as input to this operator may be split into two $\frac{N}{2} \times \frac{N}{2}$ matrices.

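We may see this by a simple example: helium. The ground state of helium has two electrons in the 1s orbital, which means the spins have to be anti-symmetric. This means we may write the Slater determinant as

$$|\phi| = \begin{vmatrix} \varphi_{1s}(\vec{r}_1)\chi_\uparrow(s_1) & \varphi_{1s}(\vec{r}_1)\chi_\downarrow(s_1) \\ \varphi_{1s}(\vec{r}_2)\chi_\uparrow(s_2) & \varphi_{1s}(\vec{r}_2)\chi_\downarrow(s_2) \end{vmatrix} = \varphi_{1s}(\vec{r}_1)\chi_\uparrow(s_1)\varphi_{1s}(\vec{r}_2)\chi_\downarrow(s_2) - \varphi_{1s}(\vec{r}_2)\chi_\uparrow(s_2)\varphi_{1s}(\vec{r}_1)\chi_\downarrow(s_1)$$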

⁴ We might write $\psi(\vec{r}_1, s_1; \vec{r}_2, s_2; \cdots)$ as $\langle \vec{r}_1, s_1; \vec{r}_2, s_2; \cdots | \varphi_1, \varphi_2, \varphi_3, \cdots \rangle$, where $\vec{r}_i, s_i$ are single-particle positions in position and spin space, while $\varphi_j$ are single-particle wavefunctions. This means that whether we choose to interchange the positions or the single-particle wavefunctions is irrelevant. We also see that the space the particles are described in (here: position and spin) does not matter.

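which means that

$$\hat{H}\left[\varphi_{1s}(\vec{r}_1)\chi_\uparrow(s_1)\varphi_{1s}(\vec{r}_2)\chi_\downarrow(s_2) - \varphi_{1s}(\vec{r}_2)\chi_\uparrow(s_2)\varphi_{1s}(\vec{r}_1)\chi_\downarrow(s_1)\right] = \left(\chi_\uparrow(s_1)\chi_\downarrow(s_2) - \chi_\uparrow(s_2)\chi_\downarrow(s_1)\right)\hat{H}\varphi_{1s}(\vec{r}_1)\varphi_{1s}(\vec{r}_2)$$

where $\varphi_{1s}(\vec{r}_1)\varphi_{1s}(\vec{r}_2)$ is the product of two (1 × 1) Slater matrices with identical space-only quantum numbers. This result generalizes to higher numbers of particles. For example, for beryllium (Z = 4) we get

$$|\phi| = \begin{vmatrix} \varphi_{1s}(\vec{r}_1)\chi_\uparrow(s_1) & \varphi_{1s}(\vec{r}_1)\chi_\downarrow(s_1) & \varphi_{2s}(\vec{r}_1)\chi_\uparrow(s_1) & \varphi_{2s}(\vec{r}_1)\chi_\downarrow(s_1) \\ \varphi_{1s}(\vec{r}_2)\chi_\uparrow(s_2) & \varphi_{1s}(\vec{r}_2)\chi_\downarrow(s_2) & \varphi_{2s}(\vec{r}_2)\chi_\uparrow(s_2) & \varphi_{2s}(\vec{r}_2)\chi_\downarrow(s_2) \\ \varphi_{1s}(\vec{r}_3)\chi_\uparrow(s_3) & \varphi_{1s}(\vec{r}_3)\chi_\downarrow(s_3) & \varphi_{2s}(\vec{r}_3)\chi_\uparrow(s_3) & \varphi_{2s}(\vec{r}_3)\chi_\downarrow(s_3) \\ \varphi_{1s}(\vec{r}_4)\chi_\uparrow(s_4) & \varphi_{1s}(\vec{r}_4)\chi_\downarrow(s_4) & \varphi_{2s}(\vec{r}_4)\chi_\uparrow(s_4) & \varphi_{2s}(\vec{r}_4)\chi_\downarrow(s_4) \end{vmatrix}$$

which may be written as the product of two 2 × 2 determinants

$$|\phi_1| \cdot |\phi_2| = \begin{vmatrix} \varphi_{1s}(\vec{r}_1) & \varphi_{2s}(\vec{r}_1) \\ \varphi_{1s}(\vec{r}_2) & \varphi_{2s}(\vec{r}_2) \end{vmatrix} \cdot \begin{vmatrix} \varphi_{1s}(\vec{r}_3) & \varphi_{2s}(\vec{r}_3) \\ \varphi_{1s}(\vec{r}_4) & \varphi_{2s}(\vec{r}_4) \end{vmatrix}$$

when calculating the energy with a spin-independent Hamiltonian. The same holds for neon (Z = 10), where we instead get 5 × 5 matrices when the Hamiltonian does not act on spin.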

Unfortunately⁵, Slater matrices are not all there is to manybody quantum mechanics. A quantum system is fundamentally different from a classical system in that it is more than the sum of its parts: there are multi-particle correlations that need to be taken care of. To do this, we use a Jastrow factor which takes into account two-particle correlations, as we only have two-particle interactions. This may schematically be written as

$$J = \prod_{i<j} g(r_{ij}) \tag{4}$$

where $g(r_{ij})$ is some function of the separation distance $r_{ij}$, and the product runs over all possible pairs of particles.

1.1.1 Choice of test wavefunctions

For the best possible convergence to the ground state, our parametrized test wavefunction must span the part of Hilbert space containing the true ground state, or at least get close to it. Further, we need to avoid infinities in the integrals.

To satisfy the last condition, we need to obey the so-called cusp condition⁶. This avoids the infinities that arise when the Hamiltonian includes terms of the form $\frac{Z}{r_i}$ and/or $\frac{1}{r_{ij}}$. It may be done by demanding that the radial part of the wavefunction is of the form

$$R_T \propto e^{-\frac{\alpha}{l+1} r}$$

where α ≈ Z.⁷ Similarly, for the Jastrow factors $g(r_{ij})$,

$$g(r_{ij}) \propto \exp\left(\frac{r_{ij}}{2(l+1)}\right).$$

⁵ If Slater determinants were all there is, we would rather use Hartree-Fock methods, which are much faster but can only handle systems described by a Slater determinant.

⁶ M. Hjorth-Jensen, 'Computational Physics', University of Oslo, 2009, unpublished.

Since we here are interested in atomic systems, a good choice of trial

wavefunctions is probably to use the Hydrogen wavefunctions, as they are

exact solutions to a similar problem, and are quite easy to handle (at least

for low quantum numbers) both numerically and analytically. We have used

the (non-normalized) single-particle wavefunctions listed below. I have also

listed the gradients and the laplacians, as they are needed for the kinetic

energy, and also for the Metropolis-Hastings algorithm described in sections

1.2.3 and 1.2.4.

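$$\varphi_{1s}(r) = e^{-\alpha r}$$

$$\vec{\nabla}\varphi_{1s}(\vec{r}) = -\frac{\alpha\vec{r}}{r}\, e^{-\alpha r}$$

$$\nabla^2\varphi_{1s}(r) = \frac{\alpha}{r}\left(\alpha r - 2\right) e^{-\alpha r}$$

$$\varphi_{2s}(r) = \left(1 - \frac{\alpha r}{2}\right) e^{-\frac{\alpha}{2} r}$$

$$\vec{\nabla}\varphi_{2s}(\vec{r}) = -\frac{\alpha\vec{r}}{2r}\left(2 - \frac{\alpha r}{2}\right) e^{-\frac{\alpha}{2} r}$$

$$\nabla^2\varphi_{2s}(r) = -\frac{\alpha}{2r}\left(4 - 5\,\frac{\alpha r}{2} + \left(\frac{\alpha r}{2}\right)^2\right) e^{-\frac{\alpha}{2} r}$$

$$\varphi^x_{2p}(\vec{r}) = \alpha x\, e^{-\frac{\alpha}{2} r}$$

$$\vec{\nabla}\varphi^x_{2p}(\vec{r}) = \alpha\left[\left(1 - \frac{\alpha x^2}{2r}\right)\hat{i} - \frac{\alpha x y}{2r}\,\hat{j} - \frac{\alpha x z}{2r}\,\hat{k}\right] e^{-\frac{\alpha}{2} r}$$

$$\nabla^2\varphi^x_{2p}(\vec{r}) = -\frac{\alpha^2 x}{2r}\left(4 - \frac{\alpha r}{2}\right) e^{-\frac{\alpha}{2} r}$$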

For the 2p state, we actually have three states, corresponding to the magnetic quantum numbers m = {0, ±1}. For our observables we may use so-called real solid harmonics instead. Thus $\varphi^x_{2p}$ in the list above actually represents three wavefunctions: one for x (the one listed), one for y, and one for z.
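Analytic Laplacians like the ones listed are easy to get wrong by a sign or a factor, so a finite-difference comparison is a useful safeguard. The sketch below is an illustration only and is not part of the program described here; the helper `numeric_laplacian`, the test point and the value of α are arbitrary assumptions.

```python
import math

def radius(p):
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)

def phi_1s(p, alpha):
    return math.exp(-alpha * radius(p))

def laplacian_1s(p, alpha):
    r = radius(p)
    return alpha / r * (alpha * r - 2.0) * math.exp(-alpha * r)

def phi_2s(p, alpha):
    u = 0.5 * alpha * radius(p)
    return (1.0 - u) * math.exp(-u)

def laplacian_2s(p, alpha):
    r = radius(p)
    u = 0.5 * alpha * r
    return -alpha / (2.0 * r) * (4.0 - 5.0 * u + u * u) * math.exp(-u)

def phi_2px(p, alpha):
    return alpha * p[0] * math.exp(-0.5 * alpha * radius(p))

def laplacian_2px(p, alpha):
    r = radius(p)
    return -alpha ** 2 * p[0] / (2.0 * r) * (4.0 - 0.5 * alpha * r) * math.exp(-0.5 * alpha * r)

def numeric_laplacian(f, p, alpha, h=1e-3):
    """Second-order central differences along x, y and z."""
    total = -6.0 * f(p, alpha)
    for axis in range(3):
        for sign in (-1.0, 1.0):
            q = list(p)
            q[axis] += sign * h
            total += f(q, alpha)
    return total / (h * h)

point, alpha = (0.4, -0.3, 0.5), 1.7  # arbitrary point away from the origin
checks = [(phi_1s, laplacian_1s), (phi_2s, laplacian_2s), (phi_2px, laplacian_2px)]
errors = [abs(numeric_laplacian(f, point, alpha) - lap(point, alpha)) for f, lap in checks]
print(errors)
```

The printed differences should be small compared with the Laplacians themselves; the remaining discrepancy comes from the truncation error of the finite-difference formula.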

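For our Jastrow factor, we choose

$$g(r_{ij}) = \exp\left(\frac{a\, r_{ij}}{1 + \beta r_{ij}}\right)$$

where a = 1/2 for opposite spins, and a = 1/4 for equal spins. The gradient and Laplacian of the exponent of the Jastrow factor, $\ln g(r_{ij})$, with respect to the coordinates of particle i are then:

$$\vec{\nabla}_i \ln g(r_{ij}) = \frac{a(\vec{r}_i - \vec{r}_j)}{r_{ij}(1 + \beta r_{ij})^2}$$

$$\nabla^2_i \ln g(r_{ij}) = \frac{2a}{r_{ij}(1 + \beta r_{ij})^3}$$

⁷ Approximately, due to charge-screening effects, which make the effective charge seen by the electrons smaller than the real Z.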
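As a cross-check of the two expressions for the Jastrow factor, the sketch below compares them with central finite differences of the exponent $a r_{ij}/(1 + \beta r_{ij})$, differentiated with respect to the coordinates of particle i. It is an illustration under assumed values of a, β and the two positions; the function names are hypothetical and not taken from the program.

```python
import math

def exponent(ri, rj, a, beta):
    """Exponent of the Jastrow factor, a*r/(1 + beta*r) with r = |ri - rj|."""
    r = math.dist(ri, rj)
    return a * r / (1.0 + beta * r)

def grad_exponent(ri, rj, a, beta):
    r = math.dist(ri, rj)
    scale = a / (r * (1.0 + beta * r) ** 2)
    return [scale * (p - q) for p, q in zip(ri, rj)]

def laplacian_exponent(ri, rj, a, beta):
    r = math.dist(ri, rj)
    return 2.0 * a / (r * (1.0 + beta * r) ** 3)

def numeric_derivatives(ri, rj, a, beta, h=1e-3):
    """Central differences with respect to the coordinates of particle i."""
    centre = exponent(ri, rj, a, beta)
    grad, lap = [], 0.0
    for axis in range(3):
        plus, minus = list(ri), list(ri)
        plus[axis] += h
        minus[axis] -= h
        up = exponent(plus, rj, a, beta)
        down = exponent(minus, rj, a, beta)
        grad.append((up - down) / (2.0 * h))
        lap += (up + down - 2.0 * centre) / (h * h)
    return grad, lap

ri, rj, a, beta = (0.2, 0.7, -0.4), (-0.6, 0.1, 0.3), 0.5, 0.35
num_grad, num_lap = numeric_derivatives(ri, rj, a, beta)
grad_error = max(abs(n - g) for n, g in zip(num_grad, grad_exponent(ri, rj, a, beta)))
lap_error = abs(num_lap - laplacian_exponent(ri, rj, a, beta))
print(grad_error, lap_error)
```

Since $g = e^{u}$ with u the exponent, the corresponding quantities for g itself follow as $\vec{\nabla}_i g / g = \vec{\nabla}_i u$ and $\nabla_i^2 g / g = \nabla_i^2 u + |\vec{\nabla}_i u|^2$.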

1.1.2 Onebody- and charge density

Another experimental⁸ quantity we are interested in (in addition to the ground state energy) is the charge density of the atom. For single-particle wavefunctions this is simply given by equation (5), while for N-particle systems it is generally given by equation (6).

$$\rho(\vec{r}) = Z \cdot |\psi(\vec{r})|^2 \tag{5}$$

$$\rho(\vec{r}) = Z \cdot \int_V |\psi(\{\vec{r}\})|^2\, d^3\vec{r}_2 \ldots d^3\vec{r}_n \tag{6}$$

We see that eq. (5) is just a special case of eq. (6). Further, we define the onebody density as

$$f(\vec{r}) = \frac{\rho(\vec{r})}{Z} \tag{7}$$

The variational calculation may be used to get an estimate of the ground-state wavefunction, which may then be plugged into (6) to get the charge density. However, we are still limited by the fact that the variational calculation may not give a good estimate of the ground-state wavefunction, as discussed in section 1.

We also see that the onebody density has a normalization criterion

$$\int_V f(\vec{r})\, d^3\vec{r} = \int_V \int_V \cdots \int_V |\psi(\{\vec{r}\})|^2\, d^3\vec{r}\, d^3\vec{r}_2 \cdots d^3\vec{r}_n = \int_V |\psi(\{\vec{r}\})|^2\, d^3\vec{r}_1 \cdots d^3\vec{r}_n = 1 \tag{8}$$

as the last integral is identical to the normalization integral for the wavefunction.

⁸ When doing scattering experiments, we often get a «form factor» $F(\vec{q}^{\,2})$ (where $\vec{q}^{\,2}$ is the square of the momentum transfer) that modifies the main part of the differential cross section. This form factor is in turn given by the Fourier transform of the charge distribution, i.e.

$$F(\vec{q}^{\,2}) = \int e^{i\vec{q}\cdot\vec{x}/\hbar} f(\vec{x})\, d^3\vec{x}$$

where $f(\vec{x})$ is the onebody density. See for example Povh, Rith, Scholz and Zetsche, 'Particles and Nuclei', 5th ed., Springer 2006, chapter 5.4.

It is often useful (especially for wavefunctions where $f(\vec{r})$ is spherically symmetric) not to plot the onebody density, but instead to use the radial density, which for a spherically symmetric wavefunction is defined as

$$R(r) = f(r) \cdot r^2 \tag{9}$$

This is proportional to «how much» of f(r) is found at the distance r from the center, as it takes into account that the volume of the infinitely thin spherical shell the PDF f(r) lives on is ∝ r². The radial density (if assumed to be spherically symmetric) then has the normalization condition

$$1 = \int_V f(r)\, d^3\vec{r} = \int_0^\infty \int_\Omega f(r)\, r^2\, dr\, d\Omega = 4\pi \int_0^\infty R(r)\, dr \quad\Rightarrow\quad \int_0^\infty R(r)\, dr = \frac{1}{4\pi} \tag{10}$$

1.2 Monte-Carlo integration

When integrating multidimensional functions, as we do when calculating the energy of a manybody quantum system

$$E = \langle\psi|\hat{H}|\psi\rangle = \int \psi^*(\{\vec{r}\})\,\hat{H}\,\psi(\{\vec{r}\})\, d^3\vec{r}_1 \cdots d^3\vec{r}_n$$

the most efficient method is Monte-Carlo (MC) integration, where the error in the result scales approximately⁹ as $N^{-1/2}$ regardless of the dimension, while for quadrature-based methods the error scales as $N^{-k/d}$, where N is the number of function evaluations, k is the order of the method, and d is the dimension of the hypercube we are integrating over.

This method is based on statistics, where the expectation value of an observable O depending on a stochastic variable x defined on an interval x ∈ [a, b] is given as

$$\langle O \rangle = \int_a^b O(x) X(x)\, dx$$

where X(x) is the probability distribution function (PDF) of x. This may be approximated (for example when doing an experiment, where you have a finite sample size) as

$$\bar{O} = \frac{1}{N} \sum_{i=1}^{N} O(x_i)$$

where the $x_i$ (and thus the $O(x_i)$) are picked according to the underlying probability distribution X(x).

⁹ See M. Hjorth-Jensen, 'Computational Physics', chapter 8, 'Outline of the Monte-Carlo strategy' (as of 20/11 2007).

The simplest form of MC integration of a function f(x) is to take the flat PDF $X(x) = \frac{1}{b-a}$, where a and b are the limits of integration, so that the observable is $O(x) = (b-a) f(x)$. This yields a brute-force approach, where

$$\int_a^b f(x)\, dx \approx \frac{b-a}{N} \sum_{i=1}^{N} f(x_i) \tag{11}$$

and the $x_i$ are picked according to the flat PDF $X(x) = \frac{1}{b-a}$.

This might work well if f(x) is approximately flat within the limits of integration. However, if the limits are very large (such as ±∞ or [0, ∞)), or the function is not at all flat, we will need very many cycles to achieve a reasonable accuracy. What we may then do is to sample according to another PDF than the simple flat one, preferably one that follows the function we are trying to integrate quite closely. This may be seen as factorizing the function we are trying to integrate, f(x), into a PDF and an observable

$$f(x) = X(x) O(x) \tag{12}$$
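A minimal sketch of the brute-force estimate (11) follows. It is not taken from the program described in this report; the function name `mc_integrate_uniform`, the seed and the test integrand sin(x) on [0, π] (exact value 2) are assumptions chosen for illustration.

```python
import math
import random

def mc_integrate_uniform(f, a, b, n, rng):
    """Estimate the integral of f over [a, b] from n uniformly drawn points."""
    total = 0.0
    for _ in range(n):
        total += f(rng.uniform(a, b))
    # Average of f times the length of the interval.
    return (b - a) * total / n

rng = random.Random(2009)
estimate = mc_integrate_uniform(math.sin, 0.0, math.pi, 100_000, rng)
print(estimate)  # should lie close to the exact value 2
```

The statistical error of such an estimate falls off as $N^{-1/2}$, so each extra digit of accuracy costs roughly a hundred times more samples.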

1.2.1 Importance sampling

The most direct way of doing the factorization (12) is if we have an analytical expression for the PDF X(x) contained in f(x). For example, this may be an exponential distribution $X(x) = e^{-x}$, x ∈ [0, ∞), as is the case with the integrals we have to solve to get the one-body densities.

As a practical example, we may take a function $f(x) = e^{-ax} g(x)$. We take $X(x) = n e^{-ax}$ to be our PDF, where n is a normalization constant which can be shown to be n = a, so that the observable is O(x) = g(x)/a.

However, we cannot sample directly from an exponential distribution, as our random generators only give us numbers from the uniform distribution. Thus we make a change of variables x → y, where y is a uniformly distributed random variable on the interval y ∈ [0, 1]. Conservation of probability yields

$$a e^{-ax}\, dx = 1\, dy$$

and integrating this yields the cumulative probability distributions

$$\int_0^x a e^{-ax'}\, dx' = a \cdot \frac{-1}{a}\left(e^{-ax} - e^{0}\right) = 1 - e^{-ax} = \int_0^y dy' = y$$

Using this we finally arrive at the change of variables we need:

$$x = \frac{-1}{a} \ln(1 - y) \tag{13}$$

This means our integral may be written as

$$\int_0^\infty f(x)\, dx = \int_0^\infty a e^{-ax}\, \frac{g(x)}{a}\, dx = \int_0^1 \frac{g\left(\frac{-1}{a}\ln(1 - y)\right)}{a}\, dy \tag{14}$$

which may then be approximated using (11). If the new integrand, g as a function of y, is quite flat, we may need vastly fewer cycles to achieve the same level of accuracy, and we sample x ∈ [0, ∞) without making a cutoff.
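The change of variables (13) and the estimate (14) can be sketched as follows. This is an illustration only: the choice g(x) = cos(x), for which the exact integral is $a/(a^2+1)$, and the names `sample_exponential` and `integrate_importance` are assumptions and not part of the program.

```python
import math
import random

def sample_exponential(a, rng):
    """Map a uniform y in [0, 1) to x distributed as a*exp(-a*x), eq. (13)."""
    y = rng.random()
    return -math.log(1.0 - y) / a

def integrate_importance(g, a, n, rng):
    """Estimate the integral of exp(-a*x)*g(x) over [0, inf) as the mean of g(x)/a."""
    total = 0.0
    for _ in range(n):
        total += g(sample_exponential(a, rng)) / a
    return total / n

rng = random.Random(14)
a = 2.0
estimate = integrate_importance(math.cos, a, 100_000, rng)
exact = a / (a * a + 1.0)
print(estimate, exact)
```

Note that no cutoff in x is needed: the sampled points cover the whole half-line, with most of them placed where the exponential factor is large.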

1.2.2 Metropolis algorithm

If we cannot get an analytical expression for the inverse of the cumulative distribution, such as (13), we may still do importance sampling. One way of doing this is by simulating the probability distribution using the Metropolis algorithm.

In the Metropolis algorithm, one simulates the diffusion equation to move the sampling point around in the space of integration points. The PDF we are sampling from is then taken to be the steady-state distribution of a diffusion process. The sampling point then moves about according to this PDF.

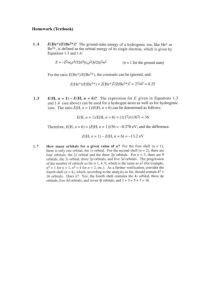

This leads to¹⁰ the algorithm shown in figure 1, given the factorization (12). In the Metropolis algorithm the ratio

$$R = \frac{X(x_{i+1})}{X(x_i)} \tag{15}$$

plays a vital role, as it decides whether the jump is made or not. As the random number r ∈ [0, 1], we see that a move to a more probable position will always be accepted, as this yields R > 1. However, there is a nonzero probability R for accepting a move to a less probable position. This means that we will reach the most likely state of the system (equilibrium), while all points in space may still be sampled (ergodicity).

We therefore run the algorithm for many cycles while collecting statistics of functions distributed according to the PDF (there may be several), basically using eq. (11). But before we start collecting statistics, we should do a small number ($O(10^4)$) of cycles in order to reach the most likely state of the system. This is called thermalization.

When choosing the stepsize h of the algorithm we must be a bit careful and not choose too large a stepsize, as this will lead to large fluctuations in the value of the wavefunction between one step and the next. This in turn means that the probability for accepting a move is very low (especially for a PDF shaped approximately as a Gaussian or an exponential function, which is much larger at the center and then tapers off into nothing when we leave this area). This leads to highly biased statistics, as we will barely move at all. A good rule of thumb for efficient calculation is to set the stepsize so that about 50% of our moves are accepted.

Figure 1: Schematic overview of the Metropolis algorithm. Steps shown in the flowchart: start at a point x0; propose a move to a new point x1 = x0 ± h; calculate the ratio R = X(x1)/X(x0); generate a uniformly distributed random variable r; compare R and r (if R < r, go back to x = x0; if R > r, accept the move, x = x1); collect statistics (add up the sums of the functions to be integrated, which are distributed according to X(x)); repeat many times (number of MC cycles); store the statistics.

¹⁰ See (for example) M. Hjorth-Jensen, 'Computational Physics', for more details.
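The loop of figure 1 can be sketched in a few lines. The example below is a one-dimensional illustration under assumptions of my own choosing (an unnormalized Gaussian target, the observable x², a stepsize of 2.5, fixed seed); it is not the implementation used in the program.

```python
import math
import random

def gaussian_pdf(x):
    """Unnormalized target PDF X(x); the normalization cancels in the ratio R."""
    return math.exp(-0.5 * x * x)

def metropolis(pdf, observable, x0, step, n_cycles, n_thermal, rng):
    x = x0
    total = 0.0
    accepted = 0
    for cycle in range(n_thermal + n_cycles):
        x_new = x + step * (2.0 * rng.random() - 1.0)  # propose x1 = x0 +- h
        ratio = pdf(x_new) / pdf(x)                    # R = X(x1)/X(x0)
        if ratio > rng.random():                       # accept if R > r
            x = x_new
            if cycle >= n_thermal:
                accepted += 1
        if cycle >= n_thermal:                         # skip thermalization cycles
            total += observable(x)
    return total / n_cycles, accepted / n_cycles

rng = random.Random(42)
mean_x2, acceptance = metropolis(gaussian_pdf, lambda x: x * x,
                                 0.0, 2.5, 100_000, 10_000, rng)
print(mean_x2, acceptance)
```

For this target the exact value of ⟨x²⟩ is 1. A rejected move still contributes a sample at the old position, which is essential for sampling the right distribution.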

1.2.3 Metropolis-Hastings algorithm

The Metropolis-Hastings algorithm also utilizes a variant of the diffusion equation, called the Fokker-Planck equation, which reads

$$\frac{\partial P}{\partial t} = \sum_i D \frac{\partial}{\partial x_i}\left(\frac{\partial}{\partial x_i} - F_i(x_i)\right) P \tag{16}$$

This differs from the usual diffusion equation in that it incorporates a drift term $F_i(x_i)$. The Fokker-Planck equation may be simulated using the Langevin equation

$$\frac{d\vec{x}}{dt} = D F(x(t)) + \eta \tag{17}$$

which describes the time evolution of the position of a single particle undergoing Brownian motion. Here η is a Gauss-distributed random variable. Integrating using Euler's method yields

$$\vec{x}(t_i + \Delta t) = \vec{x}(t_i) + D F(x(t_i))\Delta t + \eta' \sqrt{\Delta t} \tag{18}$$

where η' is a normally distributed random variable, and Δt is the finite timestep from Euler's method.

When we have moved our «sampler particle», we must check whether we accept the move or not. The condition on the movement of the sampler is that we are in equilibrium (which again necessitates thermalization steps), which yields¹¹

$$P(x(t) \to x(t + \Delta t)) = P(x(t + \Delta t) \to x(t))$$

$$\Rightarrow W(x(t + \Delta t)|x(t))\, X(x(t)) = W(x(t)|x(t + \Delta t))\, X(x(t + \Delta t))$$

where W(x(t + ∆t)|x(t)) is the Green's function for moving from (x(t), t) to (x(t + ∆t), t + ∆t). Writing W as the product W = W̃ · A of a transition probability W̃ and an acceptance probability A, this yields (in the style of the «brute-force» Metropolis algorithm, where the ratio of the W̃ is 1) that the probability for making a move is

$$R' = \frac{A(x(t + \Delta t)|x(t))}{A(x(t)|x(t + \Delta t))} = \frac{\tilde{W}(x(t)|x(t + \Delta t))\, X(x(t + \Delta t))}{\tilde{W}(x(t + \Delta t)|x(t))\, X(x(t))} = \frac{\tilde{W}(x(t)|x(t + \Delta t))}{\tilde{W}(x(t + \Delta t)|x(t))} \cdot R \tag{19}$$

where A(x(t + ∆t)|x(t)) is the (a priori unknown) probability of accepting a move from x(t) to x(t + ∆t), and W̃(x(t + ∆t)|x(t)) is the transition probability for proposing a move from (x(t), t) to (x(t + ∆t), t + ∆t). R is the ratio defined in (15), the same as in the «brute-force» Metropolis method.

This transition probability can be calculated to be

$$\tilde{W}(\vec{x}', \vec{x}, \delta t) = \frac{1}{(4\pi D \delta t)^{3N/2}} \exp\left[-\frac{\left(\vec{x}' - \vec{x} - D\delta t\, \vec{F}(\vec{x})\right)^2}{4 D \delta t}\right]$$

where N is the number of particles¹², and we have assumed that the change in $\vec{F}(\vec{x})$ is small for a small timestep δt. If we know the diffusion constant D and the external force $\vec{F}(\vec{x})$, which depend on the PDF, we now know enough to calculate the new position and to decide whether to accept the move or not.

¹¹ For 1-dimensional motion; the generalization to more dimensions is straightforward.

¹² The vectors $\vec{x}, \vec{x}'$ are thus vectors in 3N dimensions.

Thus, the PDF we are simulating from here enters both in the determination of R from eq. (15) (as in the brute-force Metropolis algorithm) and in the external force $\vec{F}(\vec{x})$, which yields better jumps.

Ideally the timestep ∆t should be as small as possible, so what we might do is to make several calculations with different ∆t, and then make a fit of O[∆t] to find the limit ∆t → 0, where O is the observable following the PDF we are simulating.
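A one-dimensional sketch of a Langevin proposal followed by a Metropolis-Hastings test is given below. It assumes a Gaussian proposal with variance 2D∆t around the drifted position, a target PDF ∝ ψ² with the illustrative choice ψ = exp(−x²/2) (so that the drift 2ψ'/ψ equals −2x), and D = 1/2; none of the names are taken from the program.

```python
import math
import random

def drift(x):
    """Drift force F = 2 psi'/psi for psi = exp(-x^2/2)."""
    return -2.0 * x

def log_pdf(x):
    """Logarithm of the unnormalized target PDF psi^2 = exp(-x^2)."""
    return -x * x

def langevin_metropolis(n_cycles, n_thermal, dt, rng, d=0.5):
    x = 0.0
    total = 0.0
    accepted = 0
    for cycle in range(n_thermal + n_cycles):
        f_old = drift(x)
        # Euler step of the Langevin equation: drift plus Gaussian noise.
        x_new = x + d * f_old * dt + rng.gauss(0.0, 1.0) * math.sqrt(2.0 * d * dt)
        f_new = drift(x_new)
        # Log of the ratio of Gaussian proposal densities (reverse / forward).
        log_green = 0.5 * (f_old + f_new) * (0.5 * d * dt * (f_old - f_new) + x - x_new)
        log_ratio = log_green + log_pdf(x_new) - log_pdf(x)
        if log_ratio >= 0.0 or rng.random() < math.exp(log_ratio):
            x = x_new
            if cycle >= n_thermal:
                accepted += 1
        if cycle >= n_thermal:
            total += x * x
    return total / n_cycles, accepted / n_cycles

rng = random.Random(3)
mean_x2, acceptance = langevin_metropolis(50_000, 1_000, 0.5, rng)
print(mean_x2, acceptance)
```

For this target ⟨x²⟩ = 1/2 exactly. Without the acceptance test, the same Euler steps with ∆t = 0.5 would settle on a distribution with ⟨x²⟩ = 2/3, which illustrates why the test (or an extrapolation ∆t → 0) is needed.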

1.2.4 Use of Monte Carlo integration in Quantum physics

In writing this program, we are interested in simulating from a quantum PDF, which is the absolute value of the wavefunction squared. For a non-normalized wavefunction $\psi_T$ this means

$$X(\{\vec{x}\}) = \frac{|\psi_T|^2}{\int |\psi_T|^2\, d\vec{r}_1 \cdots d\vec{r}_n} \tag{20}$$

where the integral in the denominator is simply the normalization integral.

The main observable of interest for us is the energy, which is normally calculated as (for a normalized wavefunction ψ)

$$\bar{E} = \langle H \rangle = \int \psi^* \hat{H} \psi\, d\vec{r}_1 \cdots d\vec{r}_n$$

However, we want the function under integration, $\psi^* \hat{H} \psi$, to be of the form of equation (12), where X(x) should be the quantum PDF (20). This can be done through a simple rewrite of the equation for $\bar{E}$,

$$\bar{E} = \int \psi^* \hat{H} \psi\, d\vec{r}_1 \cdots d\vec{r}_n = \int \psi^* \frac{\psi}{\psi} \hat{H} \psi\, d\vec{r}_1 \cdots d\vec{r}_n = \int |\psi|^2 \frac{\hat{H}\psi}{\psi}\, d\vec{r}_1 \cdots d\vec{r}_n = \int P(\{\vec{r}\}) \cdot E_L(\{\vec{r}\})\, d\vec{r}_1 \cdots d\vec{r}_n \tag{21}$$

where $P(\{\vec{r}\}) = |\psi|^2$ is the PDF (20) and the «local energy» $E_L = \hat{H}\psi/\psi$ is our new observable, following the wanted PDF. We might also define more observables, such as the distance $r_{12} = |\vec{r}_1 - \vec{r}_2|$, the variance in energy $\langle\sigma_E^2\rangle = \langle E^2 \rangle - \langle E \rangle^2$, etc.

A good thing about the Metropolis and Metropolis-Hastings algorithms is that the PDF in use does not need to be normalized. To understand this, we observe that the PDF enters only through the ratio R defined in (15). This means that any normalization constants cancel.

For the Metropolis-Hastings algorithm, it may be shown that the force $\vec{F}$ is the quantum force¹³

$$\vec{F} = \frac{2\vec{\nabla}\psi}{\psi} \tag{22}$$

where the vectors are in 3N dimensions¹⁴. The diffusion constant D is in this case D = 1/2.

We may show that in this case the ratio R' (defined in eq. (19)) needed in the Metropolis algorithm may be calculated as

$$P(\text{accept}) = R' = \exp\left\{\frac{1}{2}\left[\vec{F}(\vec{x}) + \vec{F}(\vec{x}')\right] \cdot \left[\vec{x} - \vec{x}' + \frac{1}{2}\left(\vec{F}(\vec{x}) - \vec{F}(\vec{x}')\right) D\Delta t\right]\right\} R$$

where we are considering making a move from $\vec{x}$ to $\vec{x}'$.

¹³ Assuming that we are in a stationary state, $\Rightarrow \frac{\partial|\psi|^2}{\partial t} = 0$.
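To make the local-energy estimate (21) concrete, the sketch below runs a brute-force Metropolis walk for a single electron with the test wavefunction ψ = exp(−αr) and the Hamiltonian −∇²/2 − 1/r, for which the local energy is (α − 1)/r − α²/2 and the exact expectation value is α²/2 − α. This hydrogen case, the stepsize, the seed and the function names are assumptions made for illustration; the atoms treated in this report need the full many-body machinery.

```python
import math
import random

def local_energy(r, alpha):
    """E_L = (H psi)/psi for psi = exp(-alpha*r), H = -laplacian/2 - 1/r."""
    return (alpha - 1.0) / r - 0.5 * alpha * alpha

def vmc_energy(alpha, n_cycles, n_thermal, step, rng):
    pos = [1.0, 0.0, 0.0]
    r = 1.0
    total = 0.0
    for cycle in range(n_thermal + n_cycles):
        trial = [c + step * (2.0 * rng.random() - 1.0) for c in pos]
        r_trial = math.sqrt(sum(c * c for c in trial))
        # R = |psi(trial)|^2 / |psi(pos)|^2; no normalization needed.
        ratio = math.exp(-2.0 * alpha * (r_trial - r))
        if ratio > rng.random():
            pos, r = trial, r_trial
        if cycle >= n_thermal:
            total += local_energy(r, alpha)
    return total / n_cycles

rng = random.Random(1)
energy_08 = vmc_energy(0.8, 50_000, 2_000, 1.0, rng)
energy_10 = vmc_energy(1.0, 50_000, 2_000, 1.0, rng)
print(energy_08, energy_10)
```

At α = 1 the test wavefunction is the exact ground state, so the local energy equals −0.5 at every sampled point and the estimate has zero variance. This zero-variance property is a useful check of any local-energy implementation.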

1.2.5 Error estimation through blocking

When we have calculated observables using some Monte-Carlo method, it is important to be able to say something about the errors of our estimates. To do this, we note that we might view each observation of the observable as an experiment, and calculate the variance of the observations. However, this has a couple of problems: the observable might carry its own intrinsic variance, and the experiments are not independent. This is explicitly true when we are using Metropolis Monte-Carlo (also known as Markov chain Monte-Carlo), but also when using pseudorandom numbers in «normal» importance/brute-force sampling.

Instead of calculating the variance of repeated single observations, we may repeat the whole experiment several times and calculate the estimator each time. If we have set this up correctly, we will then have several independent observations of the estimator whose error we are trying to determine. This yields a distribution of the estimator. If we assume that the estimators are Gauss-distributed around the real expectation value, the variance of the estimator is given by

$$\bar{\sigma}^2 = \frac{\sigma^2_{\langle O \rangle}}{N - 1}$$

where N is the number of measurements of the estimator $\bar{O}$, and $\sigma^2_{\langle O \rangle}$ is the variance of these measurements. We define the error in the estimator as

$$\mathrm{err}_{\bar{O}} = \sqrt{\bar{\sigma}^2} = \sqrt{\frac{\sigma^2_{\langle O \rangle}}{N - 1}} = \sqrt{\frac{\overline{O^2} - (\bar{O})^2}{N - 1}} \tag{23}$$

However, we do not want to actually perform many experiments, and even our separate Monte-Carlo «experiments» are not guaranteed to be uncorrelated. But what we do know is that as the Markov chain moves the sampling walker about, the correlations decrease. Further, if we make a number of moves comparable to the correlation time¹⁵ τ between samples, the sampled values may be taken to be uncorrelated.

Thus, we may divide the experiment into «blocks» of sizes on the order of τ. Each of these blocks may then be taken to be a separate, uncorrelated experiment, and we may then use equation (23) to estimate the error in the total average.

¹⁴ It may be shown that equation (22) factorizes into N independent 3-vectors if and only if the wavefunction ψ factorizes into N independent single-particle wavefunctions.

Now we need a method to estimate the optimal block size. It must be larger than τ in order to have truly uncorrelated blocks, but not too large, as this leads to fewer blocks and thus worse statistics when calculating (23). One way to do this is to plot $\mathrm{err}_{\bar{O}}$ as a function of the block size b. We will then see that as b increases, $\mathrm{err}_{\bar{O}}$ does as well. This continues until one reaches some value of b where $\mathrm{err}_{\bar{O}}$ plateaus for a while, before just becoming noise. This happens when b ≈ τ, so the correct value of $\mathrm{err}_{\bar{O}}$ is the value at the plateau. I developed an automatic method for finding the position of the plateau, and thus for automatically calculating the error of the estimator; see section 2.3.3.
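The blocking estimate can be sketched as below. This is not the automatic plateau finder of section 2.3.3: the function `block_error` simply applies eq. (23) to block averages, and the correlated test data (a synthetic autoregressive series with coefficient 0.9) is an assumption standing in for real Monte-Carlo output.

```python
import math
import random

def block_error(data, block_size):
    """Error of the mean from eq. (23), applied to averages over blocks."""
    n_blocks = len(data) // block_size
    means = [sum(data[k * block_size:(k + 1) * block_size]) / block_size
             for k in range(n_blocks)]
    mean = sum(means) / n_blocks
    mean_sq = sum(m * m for m in means) / n_blocks
    variance = max(mean_sq - mean * mean, 0.0)  # guard against rounding below zero
    return math.sqrt(variance / (n_blocks - 1))

# Synthetic correlated data: each value remembers 90% of the previous one.
rng = random.Random(7)
x, series = 0.0, []
for _ in range(2 ** 14):
    x = 0.9 * x + rng.gauss(0.0, 1.0)
    series.append(x)

errors = {b: block_error(series, b) for b in (1, 4, 16, 64, 256)}
for b, err in errors.items():
    print(b, err)
```

With block size 1 the correlations are ignored and the error is underestimated; the estimate grows with the block size and levels off once the blocks are longer than the correlation time, as described above.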

1.3 Variation of parameters

As discussed in the introduction, we need to vary the parameters of our wavefunction in order to find the set of parameters which yields the lowest energy $E[\vec{\alpha}]$. I have used a combination of two strategies to do this: «sample and plot», and the conjugate gradient method. Typically, I have used sample and plot first in order to approximately bracket the minimum, and then used the conjugate gradient method to locate the minimum more precisely.

1.3.1 Sample and plot

In this quite straightforward method, one defines a set of points in parameter space that is hoped to surround and include the minimum in $E[\vec{\alpha}]$. One then calculates the energy at these points, and plots the energy as a function of the parameters. This plot is then used to eyeball the position of the minimum.

An analytical example is a hydrogen 1s test wavefunction with a single parameter α,

$$\psi_T = e^{-\alpha r}$$

which (with $\hat{H} = -\frac{1}{2}\nabla^2 - \frac{1}{r}$) yields the normalization and local energy

$$\int \psi_T^* \psi_T\, d^3\vec{r} = \frac{\pi}{\alpha^3}$$

$$E_L[\alpha] = \frac{\alpha - 1}{r} - 0.5\alpha^2$$

We might from this calculate E[α] using eq. (21), which yields

$$\langle E[\alpha] \rangle = \frac{1}{2}\alpha^2 - \alpha$$

which has its analytical minimum at α = 1, ⟨E[α = 1]⟩ = −0.5, which is the exact ground-state energy of hydrogen. This yields a plot as shown in figure 2. We can easily see where the minimum is (at least approximately), as well as how deep it is.

¹⁵ See for example H. Flyvbjerg and H. G. Petersen, 'Error estimates on averages of correlated data', Journal of Chemical Physics, 1989.

Figure 2: Analytic solution of E[α] for hydrogen, $\psi_T = e^{-\alpha r}$. (Plot titled «Hydrogen, analytical solution»: E[α] from −0.5 to 0 on the vertical axis against α from 0 to 2 on the horizontal axis.)

If we were to do this in a case where calculating $E[\vec{\alpha}]$ is quite expensive (for neon, it takes something like 3 minutes with 60 quite modern CPUs), we would calculate and plot a few points, look at the plot, choose some new points, and so on, all the time trying to spend our computational resources as close as possible to the minimum. I did, however, use hydrogen as a test case when developing my code.

1.3.2

Outline of the Conjugate Gradient Method (CGM)

If we are able to get not only the value of the function we are trying to

minimize (in our case E[~

α]), but also the partial derivatives, this could lead

to a quicker convergence than sampling and plotting. We might then approximate the function as a quadratic form

1

f (~x) ≈ c − ~b · ~x + ~xT A~x

2

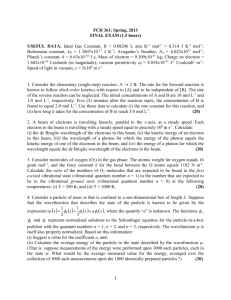

, as shown in figure 3.

17

(24)

Figure 3: The steepest descent method often has to make a lot of extra evaluations. (Figure from 'Numerical Recipes' by William H. Press, Saul A. Teukolsky, William T. Vetterling, Brian P. Flannery, 3rd edition, Cambridge University Press 2007, figure 10.8.1)

A naive way of exploiting the partial derivative (aka the gradient) information is to always move in the direction of steepest descent. However, this turns out not to always be effective, as we might end up jumping a lot «back and forth», as shown in figure 3. What the CGM does is to try to construct the next search direction so that it is conjugate to the previous one (with respect to the quadratic form).¹⁶ A reference for CGM is 'Numerical Recipes', 3rd ed. (detailed citation in the caption of figure 3), chapter 10.8 'Conjugate Gradient Methods in Multidimensions'.

This is exploitable in our MC method, as we may sample the gradients the same way we are sampling the local energy etc. It may be shown that¹⁷

$$\frac{\partial E}{\partial \alpha_i} = 2\left( \left\langle \frac{\psi_i}{\psi} E_L \right\rangle - \left\langle \frac{\psi_i}{\psi} \right\rangle \langle E_L \rangle \right) \tag{25}$$

, where $\psi_i$ is defined as:

$$\psi_i \equiv \frac{\partial \psi}{\partial \alpha_i} \tag{26}$$

I use this to sample the partial derivatives at each point. This is quite cheap when we have analytic expressions for $\psi_i$ (helium, hydrogen), but becomes more expensive when we have to evaluate the partial derivatives of the Slater matrices and the Jastrow factor numerically.

¹⁶ Ideally it should be conjugate to all previous jumps, at least until the assumption of a quadratic form breaks down. At this point the Fletcher-Reeves version of CGM effectively resets, going down the steepest gradient again. I do not know what version of CGM is implemented in the library we have used.

¹⁷ See for example M. Hjorth-Jensen, Computational Physics (lecture notes), University of Oslo, 2009, unpublished: http://www.uio.no/studier/emner/matnat/fys/FYS4410/v09/undervisningsmateriale/Slides%20from%20Lectures/slides2009.pdf

2 Program

This is a short description of how the program is built, and how to use it. It is not a detailed description of the algorithms used; for that, see section 1 and the slides from the lectures, which may be found at http://www.uio.no/studier/emner/matnat/fys/FYS4410/v09/undervisningsmateriale/Slides%20from%20Lectures/slides2009.pdf. I will also describe the structure of the program and how to use it in a talk which will be put on my webpage, http://folk.uio.no/kyrrens.

2.1 Basic structure

The main parts of this program are the classes Wavefunction (described in section 2.2, header file found in listing 2) and MontecarloAlgo (described in section 2.3, header file found in listing 3).

2.2

Wavefunctions

The class Wavefunction is an interface – it does not provide a complete

implementation. In order to have a complete implementation, a class you

can actually have an object of, you need to define another class that inherits

from Wavefunction.

These objects are the actual physical description of the system, and defines the test wavefunction, the Hamiltonian (expressed as the local energy)

and more.

The point of doing this, is so that you may pass around pointers to

general wavefunctions, use them in MonteCarlo integrations (see section 2.3)

etc. without needing to rewrite this code every time you want to treat a new

system – which is represented as a new implementation of the Wavefunction

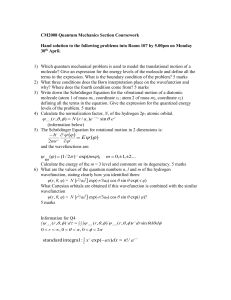

interface/baseclass. The tree of classes belonging to the Wavefunction family

may be seen in figure 4.

Note that there are actually two interfaces – Wavefunction and

Wavefunction_Slater. The first is the most general, and is used directly by

the «stateless» wavefunctions (described in section 2.2.1), while the second

is used for larger many-body systems using slater determinants (described

in section 2.2.2).

2.2.1

Stateless

The simpler systems are implemented using the Wavefunction interface directly, and includes hydrogen_1s, helium1_nocor, and helium1. These

19

Figure 4: Class diagram for the wavefunctions

20

classes then have to provide implementations of the methods

• double getWf(double** r)

• double local_energy(double** r)

• void print_params()

• double* get_partPsi_over_psi(double** r)

. These wavefunctions are called stateless as they do not keep a state on

position – you specify the position with each call to getWf, local_energy

etc.

In addition helper functions that simplifies definition of the Hamiltonian

is provided. Also, all the implementing wavefunctions I have written provides

functions double getAlpha() etc. in order to make it possible to retrieve

the variational parameters, which was set in the constructor.

2.2.2 Stateful

The stateful wavefunctions are implemented using the interface Wavefunction_Slater, and include the atomic systems beryllium_nocor, beryllium, and neon. These classes only have to provide a constructor to configure Wavefunction_Slater.

The reason these wavefunctions are called stateful is that they keep a set of particle positions stored internally, and the local energy etc. is then only defined for this set of positions. If you want to get these values for another set of positions, you have to move your particles, one at a time, as is done in the Metropolis algorithm. This is done in order to save many calculations within the Slater determinant and the Jastrow factor, as not everything needs to be recalculated when moving one particle.

There are two modes of movement: one adapted to the brute-force MC algorithm (described in section 2.3.1), and one for the importance sampling MC algorithm (described in section 2.3.2).

When brute-force sampling, all you need in order to decide whether to accept a move or not is the ratio $R$, which is defined in equation (15). In order to get this, call the function double getRatio(double* r_new, int particle). This will return the ratio, which you may then compare to a random number in the Metropolis algorithm. If you decide to accept, you then call void acceptMove(), which will update the internal state of the wavefunction. You may now call double local_energy() etc. to get the local energy at the current position.

However, when importance sampling, we need more information in order to compute the ratio $R'$ defined in equation (19). In particular, we need the quantum force defined in equation (22), which involves the gradient of the wavefunction. In order to calculate this, we need to update the Slater determinant and the Jastrow factor. This is done by calling void tryMove(double* r_new, int particle). When this has been called, the internal state is updated, and it is possible to get the new quantum force etc. at the new position. In order to make it easy and fast to roll back if the move was rejected, the method void rollback() moves back to the position before the move was made.

As the test wavefunction may be written as

$$\psi_T = \phi_1\left(\vec{r}_1, \cdots, \vec{r}_{N/2}\right) \cdot \phi_2\left(\vec{r}_{N/2+1}, \cdots, \vec{r}_N\right) \cdot J(r_{ij}) \tag{27}$$

, this wavefunction holds pointers to two Slater objects and one Jastrow object.

Slater determinant The Slater class¹⁸ implements a single Slater determinant in a stateful way. It keeps an updated version of the Slater matrix, the inverse Slater matrix, etc. By use of these, it makes it possible to get the ratio $R$, the gradient and Laplacian of the matrix etc.

As a Slater matrix is defined by the single-particle orbitals it contains (as described in section 1.1 and particularly 1.1.1), an $N$-dimensional Slater matrix class holds pointers to $N$ objects implementing the Orbital interface. These are in turn stateless¹⁹, and provide the value of the single-particle wavefunction at a given position in 3-space. In addition they also provide the gradient and the Laplacian of the orbital they are representing at a given position. This may be implemented analytically or numerically, but in the current implementations of Orbital (orb1s, orb2s, and orb2p) it is all analytical, which provides both better accuracy and higher execution speed.

The algorithms used for updating the matrix are described in M.H. Jensen, 'Slides from FYS4410 Lectures', unpublished, http://www.uio.no/studier/emner/matnat/fys/FYS4410/v09/undervisningsmateriale/Slides%20from%20Lectures/slides2009.pdf

Jastrow factor The Jastrow class²⁰ implements a Jastrow factor as discussed in section 1.1. The pure Jastrow class is actually an interface, for which two implementations are provided: the JastrowDummy and JastrowExp classes.

The JastrowDummy class is used to provide a Jastrow pointer to Wavefunction_Slater in cases where we want to deactivate the Jastrow factor. This is equivalent to setting $J = 1$, see equation (27).

The JastrowExp class is used to provide a Jastrow factor of the form

$$J = \prod_{i<j} \exp(g(r_{ij}))$$

, which is what is demanded by the cusp condition (see section 1.1.1). It therefore holds a pointer to another object, which implements $g(r_{ij})$. This object should be an implementation of the jasFuncExp interface. One such implementation exists today, jasFuncExp1, which implements a $g(r_{ij})$-function of the form

$$g(r_{ij}) = \frac{a r_{ij}}{1 + \beta r_{ij}}$$

Here $a$ is 1/2 if the spins of particles $i$ and $j$ are opposite, and 1/4 if the spins of the particles are equal, and $\beta$ is a variational parameter.

¹⁸ Header file of Slater class and related classes shown in listing 4.

¹⁹ They do contain a fixed variational parameter set in their constructor.

²⁰ Header file of Jastrow class and related classes shown in listing 5.

Setting up a stateful wavefunction When setting up a stateful wavefunction, one must set up two Slater objects and one Jastrow object, in addition to configuring parameters such as the central charge $Z$ etc. You may also make a setup for the conjugate gradient method, see section 2.2.3. This is done in the class constructor, and I refer you to the constructors of the implemented wavefunctions for examples.

2.2.3 Support for Conjugate Gradient Method

In order to be able to use the Conjugate Gradient Method, as discussed in section 1.3.2, we need to be able to sample $\psi_i$, as defined in equation (26). This is implemented through the method double* get_partPsi_over_psi(double**), which is defined in the Wavefunction interface.

Different implementations provide this differently: hydrogen_1s and helium1 provide analytical expressions, while classes implementing Wavefunction_Slater can use helper functions in this interface to set up a numerical derivative. This method returns an array of length equal to the number of variational parameters, which contains the different $\psi_i$ as $[\psi_1, \psi_2, \cdots]$.

2.2.4 Other features

Density calculation is supported through a stateless implementation of getWf(double** r), present both in the direct implementations of Wavefunction and in Wavefunction_Slater.

All the wavefunctions also implement a function void print_params(), which prints the variational parameters as well as some other stats. As this calls into the Slater and Jastrow objects, any implementation of the underlying classes jasFuncExp and Orbital also has to provide such a printing function.

2.3 Algorithms

There are basically two algorithms implemented: brute-force Metropolis, as described in section 1.2.2, and importance-sampling Metropolis, also known as Metropolis-Hastings, described in section 1.2.3. This results in four classes, as there are two different kinds of algorithms, which should be able to work with two different kinds of wavefunctions (stateless and stateful). The class diagram for these can be seen in figure 5, and the header file in listing 3.

Figure 5: Class diagram for the algorithms

Generally, an algorithm needs a wavefunction to work with, and a number of MC cycles and thermalization steps. Once set up, you may run the algorithm by calling void run_algo(). This will take some time, and when it has returned, you may get the results by calling double getEnergy() etc.

2.3.1 Brute-force Metropolis

The classes metropolis_brute and metropolis_brute_slater implement a brute-force Metropolis method, as discussed in section 1.2.2. The difference between them is that the first is adapted to a stateless wavefunction, while the second is adapted to a stateful one. This results in the adaptations discussed in sections 2.2.1 and 2.2.2. The brute-force Metropolis algorithm needs, in addition to what is mentioned in section 2.3, the step length as a parameter, which is supplied to the constructor.

2.3.2 Importance sampling Metropolis

In parallel to the brute-force Metropolis algorithms, the classes metropolis_importance_sampler and metropolis_importance_sampler_slater implement support for the Metropolis-Hastings algorithm, as discussed in section 1.2.3, for stateless and stateful wavefunctions.

This algorithm needs, in addition to what is mentioned in section 2.3, the timestep $\Delta t$ as a parameter. As is discussed in section 3.2, we should ideally let $\Delta t \to 0$, which is obviously not practical. What may be done is to calculate the energy (with error bars) for several $\Delta t$, and make a fit to what happens when $\Delta t \to 0$. We may also run it with a quite small step size and many steps, as this will also give us good accuracy.

2.3.3 Blocking

Error estimation through blocking, as discussed in section 1.2.5, is supported by a set of methods in the MontecarloAlgo interface:

• void activateBlocking()

• double block(long blocksize)

• double getIdealBlockSize()

• double getError(int blocksize)

As it is necessary to store all sampled values of the blocked observable²¹, we do not want to turn this function on by default. Storing this data is not a big problem on today's computers for runs of moderate length: the size of the blocking array will be equal to sizeof(double)*mc_cycles, where sizeof(double) = 8 bytes.²² This means that a blocking array of $10^6$ elements would take 8 MB of memory. However, it also means that for a really long run of $10^9$ cycles, it would take 8 GB, which is a problem. Thus blocking has to be activated before you make a run in order for the array to be created.

The method double block(long blocksize) does blocking on the array with the given blocksize, and returns the estimator $\overline{O^2}$ of the array. $\bar{O}^2$ does not need to be calculated for the blocking array, as it equals $\bar{O}^2$ for the entire set of measurements (in our case, use the square of the return value from double getEnergy()) as long as the blocksize is a factor in the length of the blocking array, i.e. len(block_array) MOD blocksize = 0.

²¹ Only support for the local energy $E_L$ is implemented.

²² Tested using GCC as well as mpicxx (Intel icc 10.1) on 64-bit RHEL5 installations.

As the error in the estimate of the observable is found by applying eq. (23) with a blocksize just above the «edge» in $\mathrm{err}_{\bar{O}}(\text{blocksize})$, as discussed in section 1.2.5, it would be nice to have an automatic way of finding this edge. It turned out (see section 3.2.1) that the derivative of $\mathrm{err}_{\bar{O}}(\text{blocksize})$ was not only relatively noise-free, but also quite closely followed the expression

$$\frac{d\,\mathrm{err}_{\bar{O}}(\text{b.s.})}{d(\text{b.s.})} \approx a x^b$$

, where $a$ and $b$ are constants and $x$ is the block size. Thus I could get a quite good estimate of the position of the edge by doing blocking for some reasonable set of block sizes²³, calculating the derivative, and fitting to the above expression. I chose²⁴ to demand that the derivative of the error as a function of the block size should, at the ideal block size, fall to $\frac{1}{1000}$ of the derivative at block size 1, in other words

$$\frac{1}{C} = \frac{1}{1000} = \frac{\mathrm{err}'(\text{b.s.} = \text{ideal})}{\mathrm{err}'(\text{b.s.} = 1)} \tag{28}$$

This demand is implemented as a constant in the said function.

In order to make the fit, I implemented a linear least squares method double* leastsquares(double* x, double* y, double* variance, int N), which is based on the normal equations²⁵. This is found in the general utilities file util.cpp, for which the header file is found in listing 6.

Using this estimate for the ideal block size, you can then call double getError(int blocksize), which searches for the next matching blocksize, does blocking using this blocksize, and returns the error.

2.3.4 CGM

As sampling for CGM might be expensive (especially when using Wavefunction_Slater, as discussed in section 2.2.3), this is turned off by default. If you want to use it, call void activateDerivatives() before calling void run_algo(). The results for $\partial E / \partial \alpha_i$ may then be fetched using the method double* get_partial_energy(). You may also call the methods double* get_psiP_over_psi() and double* get_el_times_psiP_over_psi() to get $\frac{\psi_i}{\psi}$ and $\frac{\psi_i}{\psi} E_L$, which might be useful if you are running several MC sampling algorithms in parallel on the same parameter set.

²³ The implementation is to use the first 30 matching blocksizes.

²⁴ In double getIdealBlockSize().

²⁵ See David C. Lay, 'Linear Algebra', 3rd edition, Pearson 2006, and Are Strandlie, slides from lectures in FYS4550 (autumn 2008), part I Probability and statistics, http://folk.uio.no/ares/FYS4550/Lectures_H08_1.pdf

2.4 Runner scripts

As I said, the main parts of this program are kept in the classes described in sections 2.2 and 2.3. However, some code is needed to set up and run these algorithms, and this is the job of the runner scripts. They are written in C++, but I choose to call them scripts: they are simple, replaceable, and ugly, and you frequently need to edit them when you want to test another set of parameters.

2.4.1 Grid sampling programs (vmc_*.cpp)

The simplest minimization method, described in section 1.3.1, is to calculate the values at a set of points, plot them, and eyeball the plot for the minimum. It is brutal, but it is also robust and easy to use, and the plots give a good feeling for how the wavefunction depends on the parameters. I have used this method first, before bringing in the CGM to find the precise minimum, using the eyeballed minimum from this method as a starting point.

vmc_serial, vmc_MPI_brute, and vmc_MPI_importance are all grid samplers. They take input on grid type, number of cycles etc. from the command line, but the wavefunction and/or sampler must be selected by editing a few lines in the script. Their output is (in addition to what is written directly to the screen for progress monitoring) tab-separated files where each line represents a point, in the format

$\alpha$    $\beta$    $E$    $\sigma_E^2$    $r_{12}$    $\sigma_{r_{12}}^2$

When sampling many-particle wavefunctions, I have often chosen to disable the last two output columns, as they are zero anyway.

vmc_slatertest is a simple program for test-running the algorithms with a wavefunction. It only outputs to screen, and any setup is done within the program. I choose to print this in listing 1, as it is a good starting point if you want to make your own runner script.

Listing 1: vmc_slatertest.cpp

    #include <iostream>
    #include "wavefunc.hpp"
    #include "algos.hpp"
    #include <stdlib.h>
    using namespace std;

    int main(int argc, char* argv[])
    {
        // long mc_steps(1e7), therm_steps(1e5);
        long mc_steps(1e6), therm_steps(2e4);

        // Beryllium
        double alpha = 3.85;
        // double beta = 0.15;
        // Neon
        // double alpha = 9.8;
        // double beta = 0.12;

        Wavefunction_Slater* wf = new beryllium_nocor(alpha);
        // Wavefunction_Slater* wf = new beryllium(alpha, beta);
        // Wavefunction_Slater* wf = new neon(alpha, beta);

        // MontecarloAlgo* algo = new metropolis_brute_slater(wf, mc_steps, therm_steps, 0.5, -1);
        MontecarloAlgo* algo = new metropolis_importance_sampler_slater(wf, mc_steps, therm_steps, 0.05, -1);

        wf->print_params();
        cout << endl;
        algo->print_params();
        cout << endl;

        algo->run_algo();
        algo->print_results();
    }

2.4.2 CGM (paramsearch_conjgrad.cpp)

The CGM, described in section 1.3.2, finds the minimum more or less automatically. However, it does need a fairly good starting point, else it will easily go «astray».

This is implemented in paramsearch_conjgrad.cpp, which calls void dfpmin(...) as provided by conjgrad.h, shown in listing 7. The code is from Numerical Recipes, provided by M.H. Jensen.

dfpmin then calls the functions double E_function(double p[]) and void dE_function(double p[], double ret[]) in order to get the value and the gradient at point p[]. Note that dfpmin uses the Fortran convention for array numbering: it only looks at elements $1 \ldots N$ of an array of length $N + 1$.

As the CGM depends on approximating the function as a quadratic form, it should be started reasonably close to the minimum. If this is not done, it will lead to long convergence times, poor convergence, or crashes when it tries to sample negative $\beta$.²⁶

The program also writes a file as it goes, with the format

$\alpha$    $\beta$    $E$    $\sigma_E^2$    $\frac{\partial E}{\partial \alpha}$    $\frac{\partial E}{\partial \beta}$

where the last column is set to zero if num_params = 1.

²⁶ «Crashes» here means that the algorithm's behaviour is undefined, not that the program exits abruptly. Some have implemented methods for leading it back to the area where the energy is defined, like returning $\partial E/\partial\beta = -1$, $E = 0$ if $\beta < 0$, but this will probably make the estimate of the Hessian matrix $A$ in equation (24) horribly wrong, and thus lead to poor results.

2.4.3 Misc.

In addition to the minimum finders described above, there are a couple of other runner scripts. The most significant ones are blocking_test and deltat_test, which have been used to produce the plots in section 3.2.

blocking_test runs an algorithm, does blocking on the output, and produces data files in the format

blocksize    err(blocksize)    err′(blocksize)

It also outputs an estimate of the ideal blocksize and the error at the next legal blocksize after this.

deltat_test runs an algorithm for several $\Delta t$, and tries to estimate the error using the same method as blocking_test. It outputs files in the format

$\Delta t$    $\bar{E}[\Delta t]$    err(estimated ideal blocksize, $\Delta t$)

There are also the charge density calculators chargeDensity and chargeDensity_importance, which perform the integral defined in eq. (6) with the «first» particle placed at increasing distances from the origin. They use brute-force and exponential importance sampling respectively, as discussed in sections 1.2 and 1.2.1. However, they do not utilize parallelization, which would have been a big help when calculating the charge density of beryllium. Using Metropolis or Metropolis-Hastings to simulate the PDF might also have provided a good speedup; however, I am not sure how to define the factorization of eq. (12) in this case.

The output is files in the format

$r$    $R(r) = f(r) r^2$    $f(r)$

, which are by default named chargeDensity.out.

2.5 Parallelization

There are two obvious ways of parallelizing the grid sampling programs: either run all nodes in sync on one set of parameters at a time, or make a stack of sets of parameters on a master node, and hand them out to calculation «slave» nodes as they finish the previous set.

I did implement the second option in 2007 during the VMC/MPI project in Computational Physics 1, which made a very big difference as it was able to fully utilize a quite heterogeneous cluster. The biggest advantage was that you avoided blocking the entire calculation while waiting for one or two slow nodes. This scheme is somewhat more complicated to set up, as it needs explicit message passing, as well as writing (and debugging!) of semi-advanced «handler» code.

However, this time I had access to two quite homogeneous clusters: Titan, and the local cluster, which has been upgraded since last time to stable machines sporting quad-core 2.66 GHz CPUs. Thus I went with the first approach this time, which made it possible to produce statistics faster (about 60 times faster, with 15 quad-core machines). This meant I could get higher precision on a single point in parameter space in shorter calculation time. It is also possible to implement a CGM utilizing the cluster to get higher accuracy faster, but as this requires a bit of message passing etc. I did not implement it at this time, instead utilizing a single CPU for these calculations.

2.6 Ideas for improvement

There are several things that could be improved in my program, related both to efficiency and to making the implementation as smart and flexible as possible, that I did not have time to implement. I will here briefly describe my ideas for improvement.

2.6.1 Performance improvements

Implementation of real rollback support As discussed in section 2.2.2, it is necessary to update quite a lot in the Slater and Jastrow objects contained within the Wavefunction_Slater in order to get the quantum force. However, a large proportion of moves will often be rejected, which means that one has to redo this calculation in order to get back the old state.

There is a method void rollback() in Wavefunction_Slater that is called when a move is rejected by the Metropolis-Hastings algorithm. Today this performs a complete calculation for making a step back to the previous position. This is quite wasteful, and should be replaced with an implementation (with corresponding support in Slater and Jastrow) that simply fetches the previous data instead of recalculating.

Smarter calculation of determinants of Slater matrices Today the determinants of matrices larger than 2 × 2 are calculated using a recursive cofactor expansion (2 × 2 is evaluated using the explicit formula). This is wasteful, especially for large matrices, as it scales as $n!$. However, methods based on triangularizing the matrix, such as LU decomposition, are of order $n^3$ (about $2n^3/3$ floating-point operations).²⁷ Thus implementation of a better method of calculating this determinant would boost the calculation speed of CGM and charge density for large systems.

²⁷ David C. Lay, 'Linear Algebra and its applications'

2.6.2 Better program structure

Sampler classes Today, which observables to sample is hard-coded into the algorithms. This could be replaced by an interface for sampling classes (and a collection of implementing classes). The sampling algorithm would then call a function in the sampling class each time it wanted to take a sample, which would then do the sampling of the desired observables. Which sampler class to use would then be an input to the algorithm, the same way the choice of wavefunction is today.

There should be several implementations: simple implementations for sampling the local energy and its variance, implementations providing support for CGM, blocking etc.

This would clean up the MontecarloAlgo code, and make the code easier to use and more adaptable.

Generalization of double getAlpha(), double getBeta() Today all the wavefunctions implement functions double getAlpha(), double getBeta() that return their defining parameters. These are used at least from the CGM runner script.

However, this causes a problem, as they are defined within each implementing wavefunction instead of in the Wavefunction interface. They have to be, as the names of the parameters change from wavefunction to wavefunction. This means that casting to the correct type is necessary for getting them. Cast to the wrong type, and something undefined happens.

This could be solved by defining a function double* getParams() in the Wavefunction interface, which would then return an array (of length num_params, which is gettable from every Wavefunction).

Generalization of Wavefunction_Slater to include other Hamiltonians Today, Wavefunction_Slater explicitly implements the Hamiltonian

$$\hat{H} = \sum_{i=1}^{N} \left( -\frac{1}{2}\nabla_i^2 - \frac{Z}{r_i} \right) + \sum_{i<j} \frac{1}{r_{ij}}$$

This should be moved²⁸ into each implementing class, making it possible to treat systems such as quantum dots etc.

It is semi-possible to do this today as well by overriding the interface-defined double local_energy(), but this is ugly.

²⁸ Probably, the implementation in Wavefunction_Slater should be kept, but renamed and made a protected function that implementations may utilize.

3 Results

3.1 Minimum of $\bar{E}[\vec{\alpha}]$

In this section I will present results of minimum-finding using sample-and-plot (section 1.3.1) together with CGM (section 1.3.2). The value of $\vec{\alpha} = (\alpha, \beta)$ at the minimum will be quoted, as well as the approximate energy. I have used the Metropolis-Hastings algorithm to calculate the energy in all cases.

3.1.1 Helium

For helium, I found the minimum at $\alpha = 1.8436$, $\beta = 0.347083$, with $\bar{E} = -2.89095$ and $\sigma_E^2 = 0.140302$, all in atomic units. The inter-electron separation was $r_{12} = 1.3550753$ at a quite close point $\alpha = 1.85$, $\beta = 3.85$. Graphs of $E[\vec{\alpha}]$ around the minimum can be seen in figures 6, 7, and 8.

Figure 6: Helium, 3D-plot around minimum ($\langle E \rangle$ as a function of $\alpha$ and $\beta$; data series run01 to run04 and conjgrad, the latter with vector length on a log10 scale)

All simulations were run with $\Delta t = 0.015$²⁹, with $10^7$ cycles + $2 \cdot 10^4$ thermalization steps per CPU. Sample-and-plot calculations were run with 60 CPUs in parallel, CGM with a single CPU.

I have no good explanation of why the CGM data points are systematically a bit lower (about 0.0005 atomic energy units) than what was found from the sample-and-plot points. This only happens for helium. However, we can probably trust the quality of the minimum, as the two methods are in good agreement. Furthermore, the small $\sigma_E^2$³⁰ is compatible with having found a point in Hilbert space close to a true eigenstate of $\hat{H}$, which is important as these parameters will be used as input to calculations of the charge density profile.

Figure 7: Helium, $\alpha$ around the minimum ($\langle E \rangle$ against $\alpha$; data series run01 to run04 and conjgrad)

Figure 8: Helium, $\beta$ around the minimum ($\langle E \rangle$ against $\beta$; data series run01 to run04 and conjgrad)

²⁹ This results in a very high acceptance ratio, but is OK as long as we are doing enough steps to actually sample the entire space.

3.1.2 Beryllium

For beryllium, I found the minimum at $\alpha = 3.96$, $\beta = 0.105$, with $\bar{E} = -14.5003$ and $\sigma_E^2 = 1.70707$, all in atomic (Hartree) units. Graphs of $E[\vec{\alpha}]$ around the minimum can be seen in figures 9, 10, and 11.

Figure 9: Beryllium, 3D-plot around minimum ($\langle E \rangle$ as a function of $\alpha$ and $\beta$; data series run01 to run04 and conjgrad, the latter with vector length on a log10 scale)

All simulations were again run with ∆t = 0.015, with $10^7$ cycles + $2 \cdot 10^4$ thermalization steps per CPU. Sample-and-plot calculations were run with 60 CPUs in parallel, CGM with a single CPU. Again we find a quite small $\sigma_E^2$ (see footnote 31), which is good.

Footnote 30: Helium: $\sqrt{\sigma_E^2}/\bar{E} \approx 12\%$

Footnote 31: Beryllium: $\sqrt{\sigma_E^2}/\bar{E} \approx 12\%$

Figure 10: Beryllium, α around the minimum (<E> versus α; series: run01 to run04, conjgrad)

Figure 11: Beryllium, β around the minimum (<E> versus β; series: run01 to run04, conjgrad)

3.1.3 Neon

For neon, I found the minimum at α = 10.2068, β = 0.0911634, with Ē = −127.765 and $\sigma_E^2$ = 35.1335, all in atomic (Hartree) units.

Figure 12: Neon, 3D-plot around minimum (<E> as a function of α and β; series: run01 to run09, conjgrad)

These simulations were again done with ∆t = 0.015, $60 \cdot 10^6$ cycles + $2 \cdot 10^4$ thermalization steps per CPU. Sample-and-plot calculations were done with 60 CPUs in parallel, CGM on a single CPU.

This time the $\sigma_E^2$ (see footnote 32) was greater, but so was the energy. We notice especially that α > Z, which is opposite to the screening effect seen with Helium and Beryllium. We also notice that β is even smaller than it was for Beryllium. If I were to give a physical interpretation of this, I would have to take into account that this is the first atom under study with an l = 1 orbital (2p).

Footnote 32: Neon: $\sqrt{\sigma_E^2}/\bar{E} \approx 4\%$

Figure 13: Neon, α around the minimum (<E> versus α; series: run01 to run09, conjgrad)

Figure 14: Neon, β around the minimum (<E> versus β; series: run01 to run09, conjgrad)

3.2 Value of the energy at the minimum

In order to calculate the value of the energy at the minimum, I will take the parameter set found in section 3.1, and use it as input to several Metropolis-Hastings calculations of the energy. I will thus assume that the position of the minimum is independent of the choice of ∆t (see footnote 33).

3.2.1 Blocking

As discussed in sections 1.2.5 and 2.3.3, the error may be estimated by blocking. This gives plots such as those shown in figures 15, 18, and 21, which we may then use to estimate the error of the calculation.

As also discussed, this estimate can be automated by doing a curve fit of the derivative, as shown in figures 16, 19, and 22.

From these fits, with the parameter C = 1000 as defined in equation (28), I get the estimates shown as marked points in the figures. From this it seems that C might be set a bit low, but it is adequate for making error bars.
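As a rough illustration of the blocking idea referred to above, the following Python sketch computes the error estimate as a function of block size. It is not the program described in this document. The function names `blocking_error` and `correlated_series`, the AR(1) toy data and its parameter `rho` are all assumptions made for the example. The automated plateau detection based on equation (28) and the parameter C is not reproduced here.

```python
import numpy as np


def blocking_error(samples, block_size):
    """Standard error of the mean estimated from block averages."""
    samples = np.asarray(samples, dtype=float)
    n_blocks = samples.size // block_size
    if n_blocks < 2:
        raise ValueError("need at least two blocks")
    # Drop the tail that does not fill a whole block, then average each block.
    blocks = samples[: n_blocks * block_size].reshape(n_blocks, block_size)
    block_means = blocks.mean(axis=1)
    # Unbiased variance of the block means, divided by the number of blocks.
    return np.sqrt(block_means.var(ddof=1) / n_blocks)


def correlated_series(n, rho, seed=0):
    """AR(1) toy data standing in for correlated local energies (assumption)."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(size=n)
    x = np.empty(n)
    x[0] = noise[0]
    for i in range(1, n):
        x[i] = rho * x[i - 1] + noise[i]
    return x


energies = correlated_series(100_000, rho=0.9)
errors = {b: blocking_error(energies, b) for b in (1, 10, 100, 1000)}
for block_size, err in errors.items():
    print(f"block size {block_size:5d}: error estimate {err:.5f}")
```

For correlated data the estimate grows with the block size until the blocks are longer than the correlation length, after which it levels off. That plateau is the value to use for error bars.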

3.2.2 Helium

Blocking plots for helium can be seen in figure 15, and the fit to the derivative in figure 16. We see that the machinery works very well.

Figure 15: Blocking on helium. Automatically found errors are indicated. (Error estimate versus block size on a logarithmic axis, for dt = 0.1 and dt = 0.015; marked points at block size 200, error 0.0003861, and at block size 50, error 0.0002322.)

The final energy can be seen in figure 17. It is evident there that at

very large ∆t the algorithm gives large systematic errors (too high an energy),

while for very small ∆t, we get fluctuations. The problem at small ∆t can

probably be remedied by increasing the number of steps, in order to have