Analysis of News-On-Demand Characteristics and Client Access

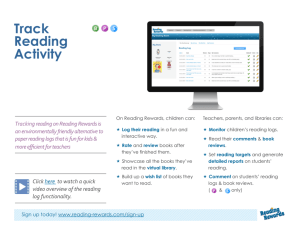
