11 INTERFACE ROBUSTNESS TESTING: EXPERIENCES AND LESSONS LEARNED FROM

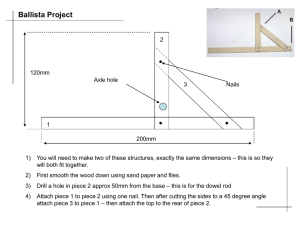
