DEPARTMENT OF INFORMATICS

March 2003

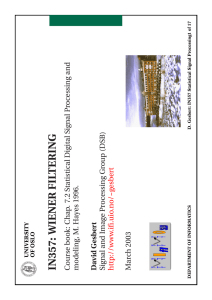

David Gesbert

Signal and Image Processing Group (DSB)

http://www.ifi.uio.no/~gesbert

D. Gesbert: IN357 Statistical Signal Processing1 of 21

Course book: Chap. 9 Statistical Digital Signal Processing and modeling,

M. Hayes 1996 (also builds on Chap 7.2).

IN357: ADAPTIVE FILTERS

UNIVERSITY

OF OSLO

Outline

• Motivations for adaptive filtering
• The adaptive FIR filter
• Steepest descent and optimization theory
• Steepest descent in adaptive filtering
• The LMS algorithm
• Performance of LMS
• The RLS algorithm
• Performance of RLS
• Example: Adaptive beamforming in mobile networks

Motivations for adaptive filtering

Goal: “Extending optimum (e.g. Wiener) filters to the case where the data is not stationary or the underlying system is time varying”

[Figure: block diagram. The p observations x0(n), x2(n), .., xp−1(n) enter the filter Wn, which produces the estimated signal d̂(n); the error signal e(n) is formed from the desired signal d(n) and d̂(n).]

• {x0(n)}, {x2(n)}, .., {xp−1(n)}: observed random processes, may be non stationary
• {d(n)}: desired random process (unobserved), may be non stationary
• The filter W must be adjusted over time n

Cases of non stationarity

The filter W must be adjusted over time and is denoted W(n) in order to track non stationarity:

• Example 1: To find the Wiener solution to the linear prediction of a speech signal. The speech signal is non stationary beyond approx. 20 ms of observations. d(n), {xi(n)} are non stationary.

• Example 2: To find the adaptive beamformer that tracks the location of a mobile user in a wireless network. d(n) is stationary (sequence of modulation symbols), but {xi(n)} are not because the channel is changing.

Approaches to the problem

Two solutions to track filter W(n):

• (Adaptive filtering) One has a long training signal for d(n) and one adjusts W(n) to minimize the power of e(n) continuously.

• (Block filtering) One splits time into short time intervals where the data is approximately stationary, and recomputes the Wiener solution for every block.

Vector Formulation (time-varying filter)

$$X(n) = [x_0(n), x_1(n), \ldots, x_{p-1}(n)]^T$$

$$W(n) = [w_0(n), w_1(n), \ldots, w_{p-1}(n)]^T$$

$$\hat{d}(n) = W(n)^T X(n)$$

where T is the transpose operator.
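As a minimal sketch of the vector formulation, the filter output is the inner product of the weight vector and the observation vector, taken with a plain transpose (no conjugation). The function name `filter_output` and the numeric values are assumptions made for illustration; they are not course data.

```python
# Filter output d_hat(n) = W(n)^T X(n) for one time instant n.
# The weights and observations below are illustrative values only.

def filter_output(W, X):
    """Return W^T X (plain transpose, no complex conjugation)."""
    if len(W) != len(X):
        raise ValueError("W and X must both have length p")
    return sum(w * x for w, x in zip(W, X))

W = [0.5, -0.25, 0.125]      # w_0(n), w_1(n), w_2(n), i.e. p = 3
X = [2.0, 4.0, 8.0]          # x_0(n), x_1(n), x_2(n)
d_hat = filter_output(W, X)  # 0.5*2 - 0.25*4 + 0.125*8
print(d_hat)
```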

Time varying optimum linear filtering

$$e(n) = d(n) - \hat{d}(n)$$

$$J(n) = E|e(n)|^2$$

J(n) varies with n due to non-stationarity, where E() is the expectation.

Find W(n) such that J(n) is minimum at time n. W(n) is the optimum linear filter in the Wiener sense at time n.

Finding the solution

The solution W(n) is given by the time varying Wiener-Hopf equations.

$$R_x(n) W(n) = r_{dx}(n) \qquad (1)$$

where

$$r_{dx}(n) = E(d(n) X(n)^*) \qquad (2)$$

$$R_x(n) = E(X(n)^* X(n)^T) \qquad (3)$$
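To make the Wiener-Hopf equations concrete, here is a small sketch for p = 2 at a single time instant. It assumes that Rx and rdx are already known (in practice they must be estimated), uses made-up real-valued statistics, and solves the system with Cramer's rule. The helper name `solve_wiener_hopf_2x2` is hypothetical.

```python
# Solve the 2x2 Wiener-Hopf system Rx W = rdx by Cramer's rule.
# Rx and rdx are assumed known; the numbers are illustrative only.

def solve_wiener_hopf_2x2(Rx, rdx):
    (a, b), (c, d) = Rx
    det = a * d - b * c
    if det == 0:
        raise ValueError("Rx is singular, no unique Wiener solution")
    w0 = (rdx[0] * d - b * rdx[1]) / det
    w1 = (a * rdx[1] - c * rdx[0]) / det
    return [w0, w1]

Rx = [[2.0, 1.0],
      [1.0, 2.0]]   # assumed correlation matrix of the observations
rdx = [3.0, 3.0]    # assumed cross-correlation with the desired signal
W = solve_wiener_hopf_2x2(Rx, rdx)
print(W)
```

In a non-stationary setting this solve would have to be repeated whenever the statistics change, which is what motivates the recursive algorithms that follow.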

Adaptive Algorithms

The time-varying statistics used in (1) are unknown but can be estimated. Adaptive algorithms aim at estimating and tracking the solution W(n) given the observations {xi(n)}, i = 0..p − 1 and a training sequence for d(n).

Two key approaches:

• Steepest descent (also called gradient search) algorithms.
• Recursive Least Squares (RLS) algorithm.

Tracking is formulated by:

$$W(n+1) = W(n) + \Delta W(n) \qquad (4)$$

where ∆W(n) is the correction applied to the filter at time n.

Steepest descent in optimization theory

Assumptions: Stationary case.

Idea: “Local extrema of cost function J(W) can be found by following the path with the largest gradient (derivative) on the surface of J(W).”

• W(0) is an arbitrary initial point
• $W(n+1) = W(n) - \mu \left. \frac{\delta J}{\delta W^*} \right|_{W=W(n)}$

where µ is a small step-size (µ << 1).

Because J() is quadratic here, there is only one local minimum toward which W(n) will converge.

The steepest descent Wiener algorithm

Derivation of the gradient expression:

$$J(W) = E(e(n) e(n)^*) \quad \text{where} \quad e(n) = d(n) - \hat{d}(n) = d(n) - W^T X(n)$$

$$\frac{\delta J}{\delta W^*} = E\left( \frac{\delta e(n)}{\delta W^*} e(n)^* + e(n) \frac{\delta e(n)^*}{\delta W^*} \right)$$

$$\frac{\delta J}{\delta W^*} = E\left( 0 + e(n) \frac{\delta e(n)^*}{\delta W^*} \right)$$

$$\frac{\delta J}{\delta W^*} = -E(e(n) X(n)^*)$$

The steepest descent Wiener algorithm

Algorithm:

• W(0) is an arbitrary initial point
• W(n + 1) = W(n) + µE(e(n)X(n)∗)

W(n) will converge to $W_o = R_x^{-1} r_{dx}$ (Wiener solution) if 0 < µ < 2/λmax, where λmax is the max eigenvalue of Rx (see p. 501 for proof).

Problem: E(e(n)X(n)∗) is unknown!
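The iteration can be sketched for the idealized case where the statistics are known. Since e(n) = d(n) − W^T X(n), the expectation expands to E(e(n)X(n)∗) = rdx − Rx W, which is what the loop below uses. The matrix Rx, the vector rdx, the step size and the iteration count are assumptions chosen for illustration (real-valued, p = 2); the function name is hypothetical.

```python
# Steepest descent toward the Wiener solution with KNOWN statistics.
# Uses E(e X*) = rdx - Rx W, which follows from e = d - W^T X.

def steepest_descent(Rx, rdx, mu, n_iter):
    p = len(rdx)
    W = [0.0] * p  # W(0): arbitrary initial point
    for _ in range(n_iter):
        # expected_eX[i] = rdx[i] - (Rx W)[i]
        expected_eX = [rdx[i] - sum(Rx[i][j] * W[j] for j in range(p))
                       for i in range(p)]
        W = [W[i] + mu * expected_eX[i] for i in range(p)]
    return W

Rx = [[2.0, 1.0],
      [1.0, 2.0]]   # eigenvalues 1 and 3, so convergence needs mu < 2/3
rdx = [3.0, 3.0]
W = steepest_descent(Rx, rdx, mu=0.1, n_iter=500)
print(W)            # approaches the Wiener solution [1, 1]
```

With these numbers the Wiener solution is Wo = [1, 1], and µ = 0.1 lies well inside the stability range 0 < µ < 2/λmax.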

The Least Mean Square (LMS) Algorithm

Idea: E(e(n)X(n)∗) is replaced by its instantaneous value.

• W(0) is an arbitrary initial point
• W(n + 1) = W(n) + µe(n)X(n)∗
• Repeat with n + 2..
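A minimal sketch of the LMS recursion, applied to a toy identification problem. Everything about the test signal is an assumption made for illustration: a hypothetical 2-tap system `h`, a uniformly distributed input, noise-free desired samples, and the step size µ = 0.1. The function name `lms` is also hypothetical.

```python
import random

def lms(x_vectors, d, mu):
    """Run LMS over observation vectors X(n) and desired samples d(n)."""
    p = len(x_vectors[0])
    W = [0.0] * p  # W(0): arbitrary initial point
    for X, dn in zip(x_vectors, d):
        e = dn - sum(w * x for w, x in zip(W, X))  # e(n) = d(n) - W(n)^T X(n)
        # W(n+1) = W(n) + mu * e(n) * X(n)^*
        W = [w + mu * e * x.conjugate() for w, x in zip(W, X)]
    return W

# Hypothetical training data: d(n) is the output of an unknown 2-tap filter h.
rng = random.Random(0)
h = [0.5, -0.3]
x = [rng.uniform(-1.0, 1.0) for _ in range(5000)]
x_vectors = [[x[n], x[n - 1]] for n in range(1, len(x))]
d = [h[0] * X[0] + h[1] * X[1] for X in x_vectors]

W = lms(x_vectors, d, mu=0.1)
print(W)  # close to h because the data here is noise-free and stationary
```

Each update costs on the order of p multiplications, since no correlation matrix is formed or inverted.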

The Least Mean Square (LMS) Algorithm

Lemma: W(n) will converge in the mean toward $W_o = R_x^{-1} r_{dx}$, if 0 < µ < 2/λmax (see p. 507), i.e.:

$$E(W(n)) \to R_x^{-1} r_{dx} \quad \text{when } n \to \infty \qquad (5)$$

Important Remarks:

• The variance of W(n) around its mean is a function of µ.
• µ allows a trade-off between speed of convergence and accuracy of the estimate.
• A small µ results in larger accuracy but slower convergence.
• The algorithm is derived under the assumption of stationarity, but can be used in a non-stationary environment as a tracking method.

A faster-converging algorithm

Idea: build a running estimate of the statistics Rx(n), rdx(n), and solve the Wiener-Hopf equation at each time:

$$R_x(n) W(n) = r_{dx}(n) \qquad (6)$$

where

$$R_x(n) = \sum_{k=0}^{n} \lambda^{n-k} X(k)^* X(k)^T \qquad (7)$$

$$r_{dx}(n) = \sum_{k=0}^{n} \lambda^{n-k} d(k) X(k)^* \qquad (8)$$

where λ is the forgetting factor (λ < 1, close to 1).

Recursive least-squares (RLS)

To avoid inverting a matrix at each step, one finds a recursive solution for W(n).

$$W(n) = W(n-1) + \Delta W(n-1) \qquad (9)$$

$$r_{dx}(n) = \lambda r_{dx}(n-1) + d(n) X(n)^* \qquad (10)$$

$$R_x(n) = \lambda R_x(n-1) + X(n)^* X(n)^T \qquad (11)$$

Question: How to determine the right correction ∆W(n − 1)?

Answer: Using the matrix inversion lemma (Woodbury’s identity).
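The recursions for Rx(n) and rdx(n) follow from the exponentially weighted sums that define the running estimates: the newest term is split off and λ is factored out of the rest. A short worked version of that step, written under the same notation:

```latex
\begin{align*}
R_x(n) &= \sum_{k=0}^{n} \lambda^{n-k} X(k)^* X(k)^T \\
       &= \lambda \sum_{k=0}^{n-1} \lambda^{(n-1)-k} X(k)^* X(k)^T + X(n)^* X(n)^T \\
       &= \lambda R_x(n-1) + X(n)^* X(n)^T \\[4pt]
r_{dx}(n) &= \sum_{k=0}^{n} \lambda^{n-k} d(k) X(k)^* \\
          &= \lambda \sum_{k=0}^{n-1} \lambda^{(n-1)-k} d(k) X(k)^* + d(n) X(n)^* \\
          &= \lambda r_{dx}(n-1) + d(n) X(n)^*
\end{align*}
```

Each new observation therefore contributes a rank-one term to Rx(n), which is the situation the matrix inversion lemma is designed for.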

Matrix inversion lemma

We define P(n) = Rx(n)−1. The M.I.L. is used to update P(n − 1) to P(n) directly:

$$(A + u v^H)^{-1} = A^{-1} - \frac{A^{-1} u v^H A^{-1}}{1 + v^H A^{-1} u} \qquad (12)$$

We apply it to $R_x(n)^{-1} = (\lambda R_x(n-1) + X(n)^* X(n)^T)^{-1}$.

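Matrix inversion lemma

With $A = \lambda R_x(n-1)$, $u = X(n)^*$ and $v^H = X(n)^T$, so that $A^{-1} = \lambda^{-1} R_x(n-1)^{-1}$ appears twice in the numerator:

$$R_x(n)^{-1} = \lambda^{-1} R_x(n-1)^{-1} \qquad (13)$$

$$\qquad\qquad - \frac{\lambda^{-2} R_x(n-1)^{-1} X(n)^* X(n)^T R_x(n-1)^{-1}}{1 + \lambda^{-1} X(n)^T R_x(n-1)^{-1} X(n)^*} \qquad (14)$$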
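The lemma can be checked numerically on a small case. The sketch below compares a direct 2x2 inversion of λRx(n − 1) + X(n)∗X(n)^T with the rank-one update obtained by substituting A = λRx(n − 1), u = X(n)∗, v^H = X(n)^T into the lemma; the numerator carries λ^{-2} because A^{-1} = λ^{-1}Rx(n − 1)^{-1} appears twice. The matrix, the vector, λ and the helper names are illustrative assumptions.

```python
def inv2(M):
    """Direct inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def update_inverse(P_prev, X, lam):
    """Rx(n)^-1 from P_prev = Rx(n-1)^-1 via the matrix inversion lemma."""
    Xc = [x.conjugate() for x in X]
    PX = [sum(P_prev[i][j] * Xc[j] for j in range(2)) for i in range(2)]  # P X*
    XP = [sum(X[i] * P_prev[i][j] for i in range(2)) for j in range(2)]   # X^T P
    denom = 1.0 + sum(X[i] * PX[i] for i in range(2)) / lam
    return [[P_prev[i][j] / lam - PX[i] * XP[j] / (lam ** 2 * denom)
             for j in range(2)] for i in range(2)]

R_prev = [[2.0, 0.5],
          [0.5, 1.0]]   # assumed Rx(n-1)
X = [1.0, -2.0]         # assumed new observation X(n)
lam = 0.9               # assumed forgetting factor

R_new = [[lam * R_prev[i][j] + X[i].conjugate() * X[j] for j in range(2)]
         for i in range(2)]
direct = inv2(R_new)
via_lemma = update_inverse(inv2(R_prev), X, lam)
max_diff = max(abs(direct[i][j] - via_lemma[i][j])
               for i in range(2) for j in range(2))
print(max_diff)  # agreement up to rounding error
```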

The RLS algorithm

$$W(0) = 0 \qquad (15)$$

$$P(0) = \delta^{-1} I \qquad (16)$$

$$Z(n) = P(n-1) X(n)^* \qquad (17)$$

$$G(n) = \frac{Z(n)}{\lambda + X(n)^T Z(n)} \qquad (18)$$

$$\alpha(n) = d(n) - W(n-1)^T X(n) \qquad (19)$$

$$W(n) = W(n-1) + \alpha(n) G(n) \qquad (20)$$

$$P(n) = \frac{1}{\lambda} \left( P(n-1) - G(n) Z(n)^H \right) \qquad (21)$$

where δ << 1 is a small arbitrary initialization parameter.
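The RLS steps translate almost line by line into code. The sketch below is written in plain Python and run on a toy identification problem; the test signal (a hypothetical 2-tap system `h`, uniform input, noise-free desired samples) and the values λ = 0.99 and δ = 0.01 are assumptions made for illustration, and the function name `rls` is hypothetical.

```python
import random

def rls(x_vectors, d, lam=0.99, delta=0.01):
    """Recursive least squares with forgetting factor lam."""
    p = len(x_vectors[0])
    W = [0.0] * p                                        # W(0) = 0
    P = [[(1.0 / delta if i == j else 0.0) for j in range(p)]
         for i in range(p)]                              # P(0) = delta^-1 I
    for X, dn in zip(x_vectors, d):
        Xc = [x.conjugate() for x in X]
        Z = [sum(P[i][j] * Xc[j] for j in range(p)) for i in range(p)]  # P(n-1) X*
        denom = lam + sum(X[i] * Z[i] for i in range(p))                # lam + X^T Z
        G = [z / denom for z in Z]                                      # gain G(n)
        alpha = dn - sum(W[i] * X[i] for i in range(p))                 # a priori error
        W = [W[i] + alpha * G[i] for i in range(p)]
        P = [[(P[i][j] - G[i] * Z[j].conjugate()) / lam for j in range(p)]
             for i in range(p)]                                         # (P - G Z^H)/lam
    return W

# Hypothetical training data: d(n) is the output of an unknown 2-tap filter h.
rng = random.Random(0)
h = [0.5, -0.3]
x = [rng.uniform(-1.0, 1.0) for _ in range(2000)]
x_vectors = [[x[n], x[n - 1]] for n in range(1, len(x))]
d = [h[0] * X[0] + h[1] * X[1] for X in x_vectors]

W = rls(x_vectors, d)
print(W)  # close to h
```

Each iteration needs on the order of p² operations because of the P(n) update, compared with order p for an LMS step.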

RLS vs. LMS

Complexity: RLS is more complex because of the matrix multiplications. LMS is simpler to implement.

Convergence speed: LMS is slower because its convergence depends on the amplitude of the gradient and on the eigenvalue spread of the correlation matrix. RLS is faster because it always points at the right solution (it solves the problem exactly at each step).

Accuracy: In LMS the accuracy is controlled via the step size µ, in RLS via the forgetting factor λ. In both cases very high accuracy in the stationary regime can be obtained at the cost of convergence speed.

Application

The LMS applied to the problem of adaptive beamforming...

To be developed in class.
