ARHVS Matrix

advertisement

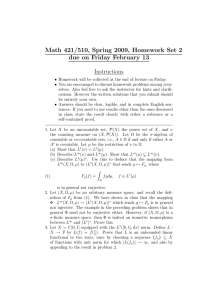

Nuclear Norm Penalized LAD Estimator for Low Rank Matrix Recovery

by

Wenzhe Wei

B.S., Peking University (2010)

Submitted to the Department of Mathematics

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Mathematics

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2015

© Wenzhe Wei, MMXV. All rights reserved.

The author hereby grants to MIT permission to reproduce and to distribute

publicly paper and electronic copies of this thesis document in whole or in

part in any medium now known or hereafter created.

Author: [signature redacted]
Department of Mathematics
May 18, 2015

Certified by: [signature redacted]
Lie Wang
Associate Professor
Thesis Supervisor

Accepted by: [signature redacted]
Alexei Borodin
Chairman, Department Committee on Graduate Theses

Nuclear Norm Penalized LAD Estimator for Low Rank Matrix Recovery

by

Wenzhe Wei

Submitted to the Department of Mathematics

on May 8, 2015, in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy in Mathematics

Abstract

In this thesis we propose a novel method for low rank matrix recovery. We study a framework that combines the absolute deviation loss function with a nuclear norm penalty. While the nuclear norm penalty is a widely used heuristic for shrinkage toward low rank solutions, the absolute deviation loss function has rarely been studied in this setting. We establish a near oracle optimal recovery bound and give a proof using an ε-net covering argument under certain restricted isometry and restricted eigenvalue assumptions. The estimator is able to recover the underlying matrix with high probability from a limited number of observations, namely when the number of observations is larger than the degrees of freedom but smaller than a power of the dimension. Our estimator has two advantages. First, the theoretical tuning parameter does not depend on knowledge of the noise level, and the bound can be derived even when the noise has fatter tails than the normal distribution. Second, the absolute deviation loss function is robust compared with the popular square loss function.

Thesis Supervisor: Lie Wang

Title: Associate Professor

Acknowledgments

I would like to express my sincere gratitude toward my advisor Prof. Lie Wang, for sparking my interest in this exciting area. He provided me with such a fascinating problem and led me through many obstacles and details. His hands-on experience helped me a lot during my research. I also appreciate that he taught me quite a few math tricks and skills in the area.

Aside from my advisor, I would like to thank Prof. Han Liu for his continuing support. I benefited much from discussions and casual chats with him online as well as during my short visit at Princeton University. I greatly appreciate Prof. Laurent Demanet and Prof. Peter Kempthorne for agreeing to be on my thesis defense committee. They carefully checked my work and provided many useful suggestions.

During my stay at MIT, I received endless support from graduate co-chairs Prof. Alexei Borodin, Prof. William Minicozzi, Prof. Gigliola Staffilani, Prof. Bjorn Poonen and many other professors on both academic and nonacademic issues.

I also enjoyed my five years with my peers Lu Wang, Guozhen Wang, Xuwen Zhu, Yi Zeng, Qiang Guang, Ruixun Zhang, Teng Fei, Chenjie Fan, Ben Yang, Hong Wang and Haihao Lu in the math department. I would also like to thank Ziyang Gao and Wenda Gu for our friendship since college; our phone calls remind me of all those happy days.

Finally, I thank my parents and my girlfriend Rong Jiang for always being there for me.

Contents

1 Introduction
  1.1 Background and literature review
  1.2 Mathematics preliminary
    1.2.1 An overview of matrix norm
    1.2.2 Useful tools

2 LAD Estimator for Low Rank Matrix Recovery
  2.1 Introduction
    2.1.1 Problem setup
    2.1.2 Choice of penalty
  2.2 Necessary assumptions
    2.2.1 Assumptions on the error distribution
    2.2.2 Assumptions on the design matrices
  2.3 Main theorem
    2.3.1 Properties of the estimator
  2.4 Proof of main theorem 2.3.1
  2.5 Important lemmas
    2.5.1 Cone condition
    2.5.2 ε-net covering lemma
    2.5.3 Expectation lemma
    2.5.4 Expectation difference lemma
    2.5.5 Finer version of cone condition
    2.5.6 Concentration lemma

A Tables

B Figures

List of Figures

List of Tables

Chapter 1

Introduction

1.1 Background and literature review

We live in an era of "big data" and face a massive amount of data every day. Problems arise when we want to estimate a group of parameters represented by a vector or a matrix, because these structures usually have huge dimension. For many special problems, however, the underlying latent parameter structure has properties that can significantly reduce the real dimension of the problem.

To be specific, we consider the family of linear models. Traditional linear regression estimates the coefficient vector $\beta$ from n observations and p features. It assumes n > p (and no multicollinearity) to ensure a unique solution. Recently researchers have done extensive work in the high dimensional setting, which is characterized by p > n. Tibshirani [1996] proposed the Lasso (least absolute shrinkage and selection operator) for linear regression. While the Lasso does not emphasize p > n, it has since been widely used in variable selection to pick out significant variables. In particular, for a linear regression model $y = X\beta + \epsilon$ with an orthogonal design matrix X, the Lasso solution amounts to thresholding, so it is expected to pick the significant variables. It was later found to be closely connected with compressed sensing (see Candes [2008] and Bickel et al. [2009]). The innovative idea in the Lasso is to penalize the loss function with the $\ell_1$ norm of the parameter vector. This proves to be quite useful, especially in high dimensional settings where the number of features p is much larger than the number of observations n. Variations of the Lasso have appeared to address different situations. For example, Belloni et al. [2011] proposed the square-root Lasso to address unknown variance. They consider a linear model $y_i = x_i'\beta_0 + \sigma\epsilon_i$ where the noise $\epsilon_i$ has mean 0 and variance 1. Let

$$\hat\beta = \arg\min_{\beta}\ \hat Q(\beta)^{1/2} + \lambda\|\beta\|_1$$

be the solution to the minimization problem (square-root Lasso), where $\hat Q(\beta)$ is the average squared residual. Then under suitable conditions on the design and the noise, one has, with large probability,

$$\|\hat\beta - \beta_0\|_2 \lesssim \sigma\sqrt{\frac{s\log(2p/\alpha)}{n}}$$

where s is the number of nonzero coefficients of $\beta_0$, which is of the same magnitude as the oracle bound of the traditional Lasso.
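The thresholding behaviour of the Lasso under an orthogonal design, mentioned above, can be sketched in a few lines. This is a minimal illustration only, assuming an orthonormal design ($X^TX = I$) and the objective $\frac{1}{2}\|y - X\beta\|_2^2 + \lambda\|\beta\|_1$; the function names and the numbers are hypothetical and do not come from the thesis.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam; values with |z| <= lam become exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_orthonormal(ols_coefs, lam):
    """Lasso solution when X^T X = I: soft-threshold each least-squares coefficient.

    ols_coefs plays the role of X^T y (a hypothetical input for this sketch).
    """
    return [soft_threshold(z, lam) for z in ols_coefs]


# Illustrative numbers: two strong signals and two weak ones.
ols = [3.0, -0.2, 0.4, -2.5]
beta_hat = lasso_orthonormal(ols, lam=0.5)
print(beta_hat)  # the two weak coefficients are set exactly to zero
```

Under these assumptions the weak coefficients are removed and the strong ones are shrunk by the threshold, which is the variable selection effect described in the text.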

While the Lasso and the related methods above recover sparse vectors from limited observations, it is natural to ask how they generalize to matrices. The special structure we seek is low rank. Low rank is the matrix analogue of sparsity for vectors, and the regularizer is taken to be the nuclear norm in the matrix case. Conditions such as the restricted isometry and restricted eigenvalue properties can also be generalized to the matrix case. The problem becomes more complicated, however, because the singular values are usually not entries of the matrix, so the structure of the singular vectors is also involved in the analysis. Plenty of work has been done in this fruitful area of low-rank matrix recovery.

[Fazel et al., 2001] showed that in practice it is possible to generate low-rank solutions using the nuclear norm penalty as a heuristic. Since then several researchers have explored the recovery performance and contributed theoretical results, and the literature on the topic has grown rapidly. [Recht et al., 2010] studied the theory of nuclear norm penalization based on the RIP property, which is analogous to a similar setting in compressed sensing. [Keshavan et al., 2009] focused on matrix completion with noisy entries. [Candes and Recht, 2009] studied the exact matrix completion problem with the minimum number of samples required, and later [Candes and Tao, 2010] quantified the minimum number of entries needed to recover a matrix by establishing an information theoretic limit. While those works are under noiseless settings, [Candes, 2010] further explored matrix completion with noise. [Gross, 2011] as well as [Recht, 2011] proposed a novel yet simpler approach in 2011. Following their work and inspired by those approaches, [Negahban and Wainwright, 2011] studied the general observation model in both low-rank and near low-rank cases under high dimensional settings where $r \ll \max\{m_1, m_2\}$ and $n \ll m_1m_2$. They also analyzed the nonasymptotic error under restricted strong convexity. Later [Negahban et al., 2009] proposed a unified framework for both sparse regression and low-rank matrix recovery and emphasized the importance of restricted strong convexity and decomposability of regularizers. [Rohde et al., 2011] considered a similar observation model with noise; their recovery scheme is not limited to the nuclear norm but covers the general Schatten-p norm. It is worth noting that the Schatten-p norm is not convex if p < 1. Later the bound was improved in [Koltchinskii et al., 2011]. Gross [2009] provides a novel approach to matrix recovery with a Fourier-type basis. [Rennie and Srebro, 2005], from a machine learning perspective, studied the collaborative filtering problem in low-rank settings. In addition to the standard metric between matrices, [Davenport et al., 2014] found that 1-bit matrix completion achieves better results than the standard procedure when applied to the movie rating problem if the ratings are discrete numbers between 1 and 5. In the meantime a lot of work has been done to develop effective algorithms. [Liu and Vandenberghe, 2009] developed an interior-point method. [Cai et al., 2010] proposed a singular value thresholding algorithm. [Mazumder et al., 2010] and [Nesterov, 1983] also provided first order algorithms for $\ell_1$/nuclear norm type optimization.

Among the works mentioned, most existing methods of matrix recovery require knowledge of the variance of the error, or at least a fair estimate of it. However, this is not always known in advance. Several papers have appeared to address this issue. While working on my own solution to these questions, I noticed that [Klopp, 2014] has been doing similar research. The author's approach, which considers the square root of the sum of squares loss function, is much like the square-root Lasso in [Belloni et al., 2011]. We will compare the results later in the thesis.

The thesis is organized as follows.

Chapter 1 (this chapter) mainly discusses the background of matrix recovery. It starts with some important conclusions from the Lasso and compressed sensing. It also briefly mentions the critical ideas in the literature that establish upper bounds and oracle inequalities for those estimators. These ideas inspire the generalization to the family of matrix recovery problems. Chapter 1 also compares the results of different recovery schemes.

Chapter 2 starts with the setting of the low-rank matrix recovery problem. It defines the necessary notation and makes certain assumptions. Then some useful lemmas and mathematical tools are introduced. The main results on the $\ell_1$ penalized LAD estimator are stated and proved. Chapter 2 also discusses the novelty and advantages of our framework and compares the approach to the results in Chapter 1.

1.2 Mathematics preliminary

In this section, we will make definitions and prove some useful results.

1.2.1 An overview of matrix norm

Throughout the thesis we will mainly focus on three matrix norms: the spectral norm, the nuclear norm and the Frobenius norm. Let the matrix A have a singular value decomposition $A = U\Sigma V^T$, where $\Sigma = \mathrm{diag}(\sigma_1, \dots, \sigma_r)$ is the diagonal matrix of singular values.

Definition 1.2.1. The Schatten-q norm (q > 0) of a matrix A is defined by its singular values as follows:

$$\|A\|_q = \Big(\sum_{j=1}^{r}\sigma_j^q\Big)^{1/q} \qquad (1.2.1)$$

Remark: The Schatten-q norm is invariant under orthogonal transformation.

Definition 1.2.2. Particularly,

* $\|A\|_\infty = \sigma_1(A)$ equals the largest singular value. Sometimes we also use $\|A\|_2$ for the Schatten-$\infty$ norm.

* $\|A\|_* = \sum_{j=1}^{r}\sigma_j$ is called the nuclear norm of the matrix A.

* $\|A\|_F = \sqrt{\sum_{j=1}^{r}\sigma_j^2}$ is called the Frobenius norm.
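The three norms above can be computed directly from the singular values. The following is a minimal sketch, assuming NumPy is available; the helper name `schatten_norm` and the random test matrix are illustrative choices, not part of the thesis.

```python
import numpy as np


def schatten_norm(A, q):
    """Schatten-q norm computed from the singular values of A."""
    sigma = np.linalg.svd(A, compute_uv=False)
    if np.isinf(q):
        return float(sigma.max())
    return float((sigma ** q).sum() ** (1.0 / q))


rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3))

spectral = schatten_norm(A, np.inf)   # largest singular value
nuclear = schatten_norm(A, 1)         # sum of the singular values
frobenius = schatten_norm(A, 2)       # root of the sum of squared singular values

# Cross-check against NumPy's built-in matrix norms.
print(np.isclose(spectral, np.linalg.norm(A, 2)))
print(np.isclose(nuclear, np.linalg.norm(A, "nuc")))
print(np.isclose(frobenius, np.linalg.norm(A, "fro")))
```

The last check also illustrates that the Frobenius norm defined through singular values agrees with the entrywise root-sum-of-squares.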

Definition 1.2.3. The subdifferential $\partial f(A)$ of a matrix function f at A is the set of all matrices M of the same size as A such that, for all B,

$$f(B) - f(A) \ge \mathrm{tr}(M^T(B - A)) \qquad (1.2.2)$$

1.2.2 Useful tools

Note that if A is an $m_1 \times m_2$ matrix, it is naturally a bounded linear operator from the Hilbert space $\mathbb{R}^{m_2}$ to $\mathbb{R}^{m_1}$. Theorems on bounded operators can therefore be applied to the Schatten-p norms of A.

Lemma 1.2.1. The nuclear norm $\|A\|_*$ is a convex function on the vector space of all $m_1 \times m_2$ matrices.

Proof. Our proof is adapted from Lax [2002]. Denote by F the space of all $m_1 \times m_2$ matrices (i.e. all linear operators from $\mathbb{R}^{m_2}$ to $\mathbb{R}^{m_1}$), which has an induced family of norms. By the characterization in Lax [2002],

$$\|A\|_* = \sup\sum_i\langle Ae_i, f_i\rangle \qquad (1.2.3)$$

where the supremum is taken over all pairs of orthonormal bases $\{e_i\}$ and $\{f_i\}$. Let $\mu_1, \mu_2$ be two nonnegative real numbers with $\mu_1 + \mu_2 = 1$. Then

$$\|\mu_1A_1 + \mu_2A_2\|_* = \sup\sum_i\langle(\mu_1A_1 + \mu_2A_2)e_i, f_i\rangle \qquad (1.2.4)$$

$$\le \sup\sum_i\langle\mu_1A_1e_i, f_i\rangle + \sup\sum_i\langle\mu_2A_2e_i, f_i\rangle = \|\mu_1A_1\|_* + \|\mu_2A_2\|_* = \mu_1\|A_1\|_* + \mu_2\|A_2\|_* \qquad (1.2.5)$$

Lemma 1.2.2. (Watson [1992]) Let $f(A) = \|A\|_*$ be the nuclear norm function of a matrix A. Suppose A has a singular value decomposition $A = U\Sigma V^T$. Then the subdifferential of f(A) at A equals

$$\partial f(A) = \{U\,\mathrm{diag}(t_1, t_2, \dots, t_r)\,V^T :\ t_i = 1 \text{ if } \sigma_i \ne 0,\ -1 \le t_i \le 1 \text{ if } \sigma_i = 0\} \qquad (1.2.6)$$
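The subgradient inequality of Definition 1.2.3 can be checked numerically for the nuclear norm. The sketch below is an illustration only, assuming NumPy and a random full-rank matrix (so that all singular values are nonzero and the subgradient from the lemma reduces to $UV^T$); the sizes, seed and tolerance are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 3))

# Thin SVD: A = U diag(sigma) Vt. A is full rank with probability one,
# so every t_i equals 1 and the subgradient is G = U @ Vt.
U, sigma, Vt = np.linalg.svd(A, full_matrices=False)
G = U @ Vt


def nuclear(M):
    """Nuclear norm, i.e. the sum of the singular values of M."""
    return np.linalg.norm(M, "nuc")


# Subgradient inequality: f(B) - f(A) >= tr(G^T (B - A)) for every B.
gaps = []
for _ in range(200):
    B = rng.standard_normal((5, 3))
    gaps.append(nuclear(B) - nuclear(A) - np.trace(G.T @ (B - A)))

print(min(gaps) >= -1e-9)
```

A random search of this kind cannot prove the lemma, but a negative gap beyond rounding error would indicate a mistake in the formula for the subgradient.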

Lemma 1.2.3. Suppose $A_1$ and $A_2$ are matrices of size $m_1 \times m_2$. Then we have the trace duality inequality

$$\mathrm{tr}(A_1^TA_2) \le \|A_1^TA_2\|_* \le \|A_1\|_\infty\|A_2\|_* \qquad (1.2.7)$$

Proof. Let $\{e_i\}$ be an orthonormal basis of $\mathbb{R}^{m_2}$. Then

$$\mathrm{tr}(A_1^TA_2) = \sum_i\langle A_1^TA_2e_i, e_i\rangle \le \sup\sum_i\langle A_1^TA_2e_i, f_i\rangle = \|A_1^TA_2\|_* \qquad (1.2.8)$$

For the second inequality see [Lax, 2002, p. 331, Theorem 2].
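A quick numerical sanity check of the trace duality inequality is sketched below. It assumes NumPy, uses random Gaussian matrices as test cases, and reads $\|\cdot\|_\infty$ as the largest singular value as in Definition 1.2.2; the function name and the tolerance are illustrative.

```python
import numpy as np

rng = np.random.default_rng(2)


def check_trace_duality(m1, m2, trials=100):
    """Return True if tr(A1^T A2) <= ||A1^T A2||_* <= ||A1||_inf ||A2||_* in every trial."""
    for _ in range(trials):
        A1 = rng.standard_normal((m1, m2))
        A2 = rng.standard_normal((m1, m2))
        lhs = np.trace(A1.T @ A2)
        mid = np.linalg.norm(A1.T @ A2, "nuc")
        rhs = np.linalg.norm(A1, 2) * np.linalg.norm(A2, "nuc")
        if not (lhs <= mid + 1e-9 and mid <= rhs + 1e-9):
            return False
    return True


print(check_trace_duality(4, 3))
```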

Lemma 1.2.4. The Frobenius norm $\|A\|_F$ of a matrix $A = (a_{ij})$ has another form:

$$\|A\|_F = \sqrt{\sum_{i=1}^{m_1}\sum_{j=1}^{m_2}a_{ij}^2} \qquad (1.2.9)$$

Proof. Suppose $A = U\Sigma V^T$ is a singular value decomposition with singular values $\sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_r > 0$. Then the matrix $A^TA$ is a positive semidefinite matrix with nonzero eigenvalues $\sigma_1^2, \dots, \sigma_r^2$. Note that the trace of $A^TA$ equals the sum of all the eigenvalues. So

$$\|A\|_F^2 = \sum_{j=1}^{r}\sigma_j^2 = \mathrm{tr}(A^TA) = \sum_{i=1}^{m_1}\sum_{j=1}^{m_2}a_{ij}^2 \qquad (1.2.10)$$

Chapter 2

LAD Estimator for Low Rank Matrix Recovery

2.1 Introduction

We start with the linear matrix observation setting. Suppose we have observations of pairs $(y_i, X_i)$, in which the $y_i$ are scalars and the $X_i$ are matrices of dimension $m_1 \times m_2$. The parameter of interest is the unknown matrix A, which also has dimension $m_1 \times m_2$. Let $\epsilon_i$ be the noise, i.e. the observation error. Assume for now that the errors are i.i.d. with mean 0 and variance $\sigma^2$; $\sigma^2$ is also unknown to us. The following model holds:

$$y_i = \mathrm{tr}(X_i^TA) + \epsilon_i \qquad (2.1.1)$$

Remark. It is worth noting that we can rewrite both $X_i$ and A in vector form, $x_i$ and $a$. We then define a vector $y = (y_1, y_2, \dots, y_n)$ and a larger matrix $X = (x_1, \dots, x_n)^T$ (a matrix container of the vectorized $X_i$). On the other hand, the trace function satisfies $\mathrm{tr}(X_i^TA) = \langle x_i, a\rangle$. The matrix equation model becomes

$$y = Xa + \epsilon \qquad (2.1.2)$$

So the matrix equation model is equivalent to the linear vector model when there is no additional structure. Assuming that the unknown matrix A is sparse implies that the vector $a$ is sparse, and vice versa. However, a low rank constraint makes a critical difference, because there is no easy way to derive an explicit expression of the rank structure of A in terms of its entries.
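As a small worked check that the trace form agrees with the inner product of the vectorized matrices, take the following 2 × 2 example (the numbers are illustrative only and do not come from the thesis):

```latex
X = \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix}, \quad
A = \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix}, \quad
X^T A = \begin{pmatrix} 26 & 30 \\ 38 & 44 \end{pmatrix}, \quad
\operatorname{tr}(X^T A) = 26 + 44 = 70,
\\
\langle \operatorname{vec}(X), \operatorname{vec}(A) \rangle
  = 1\cdot 5 + 2\cdot 6 + 3\cdot 7 + 4\cdot 8 = 5 + 12 + 21 + 32 = 70 .
```

Both computations give 70, so each matrix observation is an ordinary linear measurement of the entries of A.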

Many matrix estimation and recovery problems can be rewritten in this form. For instance,

(A) Netflix problem [Bennett and Lanning, 2007]

In 2006, Netflix launched a competition called the "million dollar prize" for the best recommendation system.

Netflix provided a training dataset which contains about 100 million records. Each record is a triplet of user, movie and rating. There are thousands of movies and hundreds of thousands of users. Every user grades a movie from 1 to 5 stars and the grades are integers. Hence this induces a natural matrix structure where rows are users and columns are movies. Grades appear as matrix entries.

Of course it is impossible that everyone watches every movie. That means the whole matrix is unknown to us and only a subset of the ratings is revealed. Given partial information (a subset of ratings) from the database, we hope to recover the whole matrix. As long as we can approximate the true rating matrix, we can subsequently make recommendations.

We formulate this as a low rank matrix recovery problem. A very intuitive connection between the Netflix recommender and low rank matrix recovery is that users' ratings are mainly decided by far fewer factors, such as genre, director and cast. So we expect the number of decisive factors of the matrix to be much smaller than its dimensions. It is natural to assume that the matrix is low-rank.

The Netflix problem can be viewed as a special example of matrix completion. Consider the matrix completion problem, where A is the matrix of interest. We have observed several entries of A with noise. Suppose the observed entries are in positions $(i_1, j_1), (i_2, j_2), \dots, (i_k, j_k), \dots$, denoted by $y_{i_1j_1}, \dots, y_{i_kj_k}$ respectively. We take $X_k$ to be the zero-one matrix $E_{i_kj_k}$ in which the only nonzero entry is in position $(i_k, j_k)$. Then

$$y_k = \mathrm{tr}(X_k^TA) + \epsilon_k \qquad (2.1.3)$$

where $\epsilon_k$ are observation errors.
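The reduction of matrix completion to the trace observation model can be sketched as follows. This is a minimal illustration in plain Python; the rating matrix, the observed positions (0-based) and the helper names are hypothetical, and the observations are taken to be noiseless for clarity.

```python
def indicator_matrix(i, j, m1, m2):
    """Zero-one matrix E_ij of size m1 x m2 with a single 1 at position (i, j)."""
    E = [[0.0] * m2 for _ in range(m1)]
    E[i][j] = 1.0
    return E


def trace_inner(X, A):
    """tr(X^T A), i.e. the sum of the entrywise products of X and A."""
    return sum(x * a for row_x, row_a in zip(X, A) for x, a in zip(row_x, row_a))


# Hypothetical 3 x 4 rating matrix and observed positions (0-based indices).
A = [[5, 3, 1, 4],
     [4, 2, 1, 5],
     [1, 5, 4, 2]]
observed = [(0, 1), (2, 3), (1, 0)]

# Noiseless observations y_k = tr(X_k^T A) simply read off the entry A[i_k][j_k].
y = [trace_inner(indicator_matrix(i, j, 3, 4), A) for i, j in observed]
print(y)
```

Each design matrix picks out exactly one entry of A, which is why matrix completion is a special case of the general observation model.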

(B) Multi-task Learning [Mei et al.]

As shown in [Mei et al.], in the multi-task learning setting we are given T related learning tasks. The training data are $\{(x_i^{(t)}, y_i^{(t)})\}_{i=1}^{n_t}$, where $n_t$ is the number of training samples of task t, $x_i^{(t)}$ is the feature vector and $y_i^{(t)}$ the response. Suppose the predictors are linear through $E(y_i^{(t)} \mid x_i^{(t)}) = \langle x_i^{(t)}, w_t\rangle$. Define $W = [w_1, w_2, \dots, w_T] \in \mathbb{R}^{p \times T}$ and $X_t = [x_1^{(t)}, x_2^{(t)}, \dots, x_{n_t}^{(t)}]$.

Now assume that the coefficient matrix is low rank, which is reasonable if these learning tasks are indeed related and the implicit parameters are controlled by far fewer factors. A general formulation for multi-task learning can be expressed as

$$\hat W = \arg\min_W\sum_{t=1}^{T}L(y_t, X_t^Tw_t) + \lambda\|W\|_* \qquad (2.1.4)$$

We can see that it is not hard to rewrite $X_t^Tw_t$ in the trace form of the matrices W and $X_t$ and to break down the vector $y_t$ into scalars.

2.1.1 Problem setup

We start by defining

$$\psi(A) = \frac{1}{n}\sum_{i=1}^{n}|y_i - \mathrm{tr}(X_i^TA)| + \lambda\|A\|_* \qquad (2.1.5)$$

and

$$\phi(A) = \frac{1}{n}\sum_{i=1}^{n}|y_i - \mathrm{tr}(X_i^TA)| \qquad (2.1.6)$$

and let

$$\hat A = \arg\min_{A \in \mathcal{A}}\psi(A) \qquad (2.1.7)$$

be our estimate for the unknown matrix A. The optimization setup has two features. The loss function is taken to be the absolute deviation (i.e. the $\ell_1$ loss function) and the penalty function is the nuclear norm as defined in Chapter 1.
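To make the objective concrete, the following sketch evaluates the penalized absolute deviation objective (the average of $|y_i - \mathrm{tr}(X_i^TA)|$ plus $\lambda$ times the nuclear norm of A) for given data. It assumes NumPy; the function name, the simulated Gaussian designs, the rank-one truth and the value of `lam` are illustrative assumptions. It only evaluates the objective; computing the minimizer would require a convex optimization routine, which is not shown here.

```python
import numpy as np


def lad_nuclear_objective(A, X, y, lam):
    """psi(A) = (1/n) * sum_i |y_i - tr(X_i^T A)| + lam * ||A||_*.

    X has shape (n, m1, m2), y has shape (n,), A has shape (m1, m2).
    """
    fitted = np.einsum("nij,ij->n", X, A)        # tr(X_i^T A) for each i
    loss = np.mean(np.abs(y - fitted))           # phi(A), the absolute deviation loss
    penalty = lam * np.linalg.norm(A, "nuc")     # nuclear norm penalty
    return loss + penalty


# Illustrative data: a rank-one truth observed through Gaussian designs.
rng = np.random.default_rng(0)
n, m1, m2 = 50, 4, 3
A0 = np.outer(rng.standard_normal(m1), rng.standard_normal(m2))
X = rng.standard_normal((n, m1, m2))
y = np.einsum("nij,ij->n", X, A0) + 0.1 * rng.standard_normal(n)

print(lad_nuclear_objective(A0, X, y, lam=0.1))
print(lad_nuclear_objective(np.zeros((m1, m2)), X, y, lam=0.1))
```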

Remark 2.1.1. Why does the nuclear norm penalty lead to a possibly low rank solution? It was first introduced in [Fazel et al., 2001], in which the authors showed that the nuclear norm is the convex envelope of the rank function on the unit ball. On the other hand, recall that in vector linear regression the $\ell_1$ penalty leads to a sparse solution. We have a natural analogy here, as the nuclear norm is exactly the $\ell_1$ norm of the vector of singular values while the rank function is its $\ell_0$ norm.

We want to point out that the first advantage of our approach is the robustness of the absolute deviation loss. It is well known that the absolute deviation loss is more resistant to outliers in the observations $y_i$. In the next section we will discuss how to choose the penalization factor $\lambda$ to achieve the best theoretical properties.
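The robustness claim can be illustrated in the simplest possible setting. The sketch below is not the matrix estimator itself: it uses the one-parameter location model, where the square loss is minimized by the sample mean and the absolute deviation loss by the sample median. The data values are made up for illustration.

```python
from statistics import mean, median

# In the one-parameter location model y_i = c + noise, the square loss is
# minimized by the sample mean and the absolute deviation loss by the sample median.
clean = [1.9, 2.0, 2.1, 2.0, 1.8, 2.2, 2.0]
contaminated = clean[:-1] + [200.0]   # replace one observation by a gross outlier

mean_shift = abs(mean(contaminated) - mean(clean))
median_shift = abs(median(contaminated) - median(clean))

print(mean_shift, median_shift)
```

A single corrupted observation moves the least squares fit by a large amount while leaving the least absolute deviation fit unchanged in this example.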

Next, as mentioned in [Negahban et al., 2009], two properties, decomposability of the regularizer and restricted strong convexity, are critical to our analysis. We will revisit them in detail in the section on lemmas.

2.1.2 Choice of penalty

The first question is how to choose the penalty $\lambda$. In practice it is plausible to search over an interval and choose the desired $\lambda$ by cross validation. However, this is not the main focus here. We are interested in theoretical analysis instead. Hence we introduce

$$\lambda = C_0C_M\sqrt{\frac{(m_1 + m_2)\log(m_1 + m_2)}{n}} \qquad (2.1.8)$$

where $C_0 > 1$ and $C_M > 1$ are constants that we will discuss later. The motivation for this choice of $\lambda$ is that the level of penalty should dominate the noise with large probability. We will prove the following proposition. More discussion on the tuning parameter $\lambda$ will appear in ??.

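Proposition 2.1.1. Assume that

$$\sum_{i=1}^{n}X_iX_i^T \preceq nMI_{m_1} \quad\text{and}\quad \sum_{i=1}^{n}X_i^TX_i \preceq nMI_{m_2} \qquad (2.1.9)$$

where $M = C_M\max(m_1, m_2)$. Without loss of generality we will always assume $m_1 \ge m_2$. Here $C_M$ is some constant larger than 1. In practice it will be determined based on the tolerance level. Then we have

$$P\Big(\lambda \ge C_0\Big\|\frac{1}{n}\sum_{i=1}^{n}\mathrm{sgn}(z_i)X_i\Big\|_2\Big) \ge 1 - (m_1 + m_2)^{-O(1)} \qquad (2.1.10)$$

Proof. According to [Nemirovski, 2007, Proposition 3 on page 13], we define $\Omega = \sqrt{\ln(m_1 + m_2)}$ and $\epsilon = \sqrt{nM}$; then

$$P\Big(\Big\|\sum_{i=1}^{n}\mathrm{sgn}(z_i)X_i\Big\|_2 \ge \Omega\epsilon = \sqrt{nM\ln(m_1 + m_2)}\Big) \le O(1)\exp(-O(1)\Omega^2) = O(1)(m_1 + m_2)^{-O(1)} \qquad (2.1.11)$$

Therefore

$$P\Big(\lambda \ge C_0\Big\|\frac{1}{n}\sum_{i=1}^{n}\mathrm{sgn}(z_i)X_i\Big\|_2\Big) = P\Big(C_0C_M\sqrt{\frac{(m_1 + m_2)\log(m_1 + m_2)}{n}} \ge C_0\Big\|\frac{1}{n}\sum_{i=1}^{n}\mathrm{sgn}(z_i)X_i\Big\|_2\Big)$$

$$\ge 1 - P\Big(\Big\|\sum_{i=1}^{n}\mathrm{sgn}(z_i)X_i\Big\|_2 \ge \sqrt{C_Mm_1n\ln(m_1 + m_2)} = \Omega\epsilon\Big) \qquad (2.1.12)$$

$$\ge 1 - O(1)\exp(-O(1)\Omega^2) = 1 - O(1)(m_1 + m_2)^{-O(1)}$$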
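The idea that a penalty of order $\sqrt{(m_1 + m_2)\log(m_1 + m_2)/n}$ dominates the spectral norm of the averaged signed design matrices can be illustrated by simulation. The sketch below is an illustration under stated assumptions only: it uses Gaussian design matrices, random symmetric signs in place of $\mathrm{sgn}(z_i)$, and sets both constants in the penalty level to 1. It does not verify the proposition in general.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m1, m2 = 100, 30, 30

# Gaussian designs X_i and symmetric signs standing in for sgn(z_i).
X = rng.standard_normal((n, m1, m2))
signs = rng.choice([-1.0, 1.0], size=n)

# W = (1/n) * sum_i sgn(z_i) X_i and its spectral norm.
W = np.einsum("n,nij->ij", signs, X) / n
spectral_norm = np.linalg.norm(W, 2)

# Scale of the penalty level with both constants set to 1 (illustrative choice).
scale = np.sqrt((m1 + m2) * np.log(m1 + m2) / n)

print(spectral_norm, scale, spectral_norm <= scale)
```

Note that the comparison does not involve the noise variance: only the signs of the errors enter, which is the reason the tuning parameter needs no knowledge of the noise level.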

Remark 2.1.2. Here we have made the first assumption on the design matrices. We will

revisit it in the subsequent section on all assumptions.

2.2 Necessary assumptions

In this section, we will introduce a set of assumptions based on which our main result is

derived.

2.2.1 Assumptions on the error distribution

We assume that the errors $\epsilon_i$ are i.i.d. random variables with a continuous density function. Further, the density function is symmetric around 0. These assumptions ensure that the strong convexity inequality holds.

We also require that the error distribution satisfies

$$P(\epsilon_i \ge x) \le \frac{1}{2 + a_0x} \ \text{ for all } x \ge 0, \qquad P(\epsilon_i \le x) \le \frac{1}{2 + a_0|x|} \ \text{ for all } x < 0 \qquad (2.2.1)$$

for some positive real number $a_0$.

Remark 2.2.1. Note that this condition on the tail distribution is quite mild. For example, the normal distribution and the Cauchy distribution satisfy the condition. However, it does not hold in the noiseless setting, because $\epsilon_i$ cannot be constant.
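The claim that the normal and Cauchy distributions satisfy the tail condition can be checked on a grid. The sketch below reads the condition as $P(\epsilon \ge x) \le 1/(2 + a_0x)$ for $x \ge 0$ (the lower tail follows by symmetry), and the values of `a0`, the grid range and the tolerance are assumptions made for illustration; a grid check is evidence, not a proof.

```python
import math


def normal_upper_tail(x):
    """P(Z >= x) for a standard normal Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def cauchy_upper_tail(x):
    """P(Z >= x) for a standard Cauchy Z."""
    return 0.5 - math.atan(x) / math.pi


def satisfies_tail_condition(upper_tail, a0, x_max=50.0, steps=5000):
    """Check P(Z >= x) <= 1 / (2 + a0 * x) on a grid of x in [0, x_max]."""
    for k in range(steps + 1):
        x = x_max * k / steps
        if upper_tail(x) > 1.0 / (2.0 + a0 * x) + 1e-12:
            return False
    return True


print(satisfies_tail_condition(normal_upper_tail, a0=1.0))
print(satisfies_tail_condition(cauchy_upper_tail, a0=1.0))
print(satisfies_tail_condition(normal_upper_tail, a0=2.0))
```

With these choices the standard normal and standard Cauchy pass for `a0 = 1`, while `a0 = 2` is already too large for the standard normal near zero, which shows that the admissible constant depends on the density at the origin.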

2.2.2 Assumptions on the design matrices

Define the sampling operator $\mathcal{X}: \mathbb{R}^{m_1 \times m_2} \to \mathbb{R}^n$ as $\mathcal{X}(D) = (\mathrm{tr}(X_1^TD), \mathrm{tr}(X_2^TD), \dots, \mathrm{tr}(X_n^TD))$. We make the following assumptions:

(A) Bounded above

Recall that we assume the design matrices satisfy

$$\sum_{i=1}^{n}X_iX_i^T \preceq nC_Mm_1I_{m_1} \qquad (2.2.2)$$

We will prove a statement here to study the asymptotic behavior of these sums.

Proposition 2.2.1. Suppose all the entries in all the design matrices are independent standard Gaussian variables with mean 0 and variance 1. Then

$$\lim_{n\to\infty}\frac{1}{n}\sum_{i=1}^{n}X_iX_i^T = m_2I_{m_1}, \qquad \lim_{n\to\infty}\frac{1}{n}\sum_{i=1}^{n}X_i^TX_i = m_1I_{m_2} \qquad (2.2.3)$$

Proof. We only need to prove the first statement. Let $X_1 = [x_1, x_2, \dots, x_{m_1}]^T$, where $x_j$ denotes the j-th row of $X_1$; therefore

$$X_1X_1^T = \begin{pmatrix}\langle x_1, x_1\rangle & \cdots & \langle x_1, x_{m_1}\rangle \\ \vdots & \ddots & \vdots \\ \langle x_{m_1}, x_1\rangle & \cdots & \langle x_{m_1}, x_{m_1}\rangle\end{pmatrix} \qquad (2.2.4)$$

Now $E(\langle x_j, x_k\rangle) = m_2\delta_{jk}$, since each $x_j$ is a random vector of length $m_2$ with standard normal entries. Hence by the law of large numbers

$$\lim_{n\to\infty}\frac{1}{n}\sum_{i=1}^{n}X_iX_i^T = m_2I_{m_1} \qquad (2.2.5)$$

Remark 2.2.2. Going back to the assumptions, as long as the bound M is larger than $m_1$ and $m_2$, the assumption will hold with large probability under Gaussian random design. So the condition makes sense here.
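The limits for Gaussian designs can be illustrated with a short simulation. This is a sketch only, assuming NumPy; the sizes, the seed and the printed error measures are arbitrary illustrative choices, and a finite sample only approximates the limit.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m1, m2 = 2000, 4, 6

# Design matrices with independent standard Gaussian entries.
X = rng.standard_normal((n, m1, m2))

# Empirical averages (1/n) sum X_i X_i^T and (1/n) sum X_i^T X_i.
avg_left = np.einsum("nij,nkj->ik", X, X) / n    # shape (m1, m1)
avg_right = np.einsum("nji,njk->ik", X, X) / n   # shape (m2, m2)

# Largest entrywise deviation from the limits m2 * I and m1 * I.
err_left = np.abs(avg_left - m2 * np.eye(m1)).max()
err_right = np.abs(avg_right - m1 * np.eye(m2)).max()
print(err_left, err_right)
```

Both deviations shrink as n grows, in line with the law of large numbers argument in the proof.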

(B) Restricted Isometry Property

(Second order eigenvalue condition.) First, there exist positive constants $\kappa_u$ and $\kappa_l$ such that

$$\frac{\|\mathcal{X}(D)\|_2^2}{n\|D\|_F^2} \in [\kappa_l^2, \kappa_u^2] \qquad (2.2.6)$$

holds for all nonzero $m_1 \times m_2$ matrices D.

Remark 2.2.3. In fact, this assumption is another way of expressing the "Restricted Isometry Property", which was introduced in [Candes and Tao, 2007].

The following proposition helps us better understand the assumption under Gaussian random design:

Proposition 2.2.2. Suppose all the entries in all the design matrices are independent standard Gaussian variables with mean 0 and variance 1. Then

$$\lim_{n\to\infty}\frac{\|\mathcal{X}(D)\|_2^2}{n\|D\|_F^2} = 1 \qquad (2.2.7)$$

Proof. Without loss of generality we can assume $\|D\|_F = 1$. We vectorize every matrix here just as we did in the previous section. Then

$$\frac{\|\mathcal{X}(D)\|_2^2}{n\|D\|_F^2} = \frac{\sum_{i=1}^{n}|\mathrm{tr}(X_i^TD)|^2}{n} = \frac{\sum_{i=1}^{n}\langle X_i, D\rangle^2}{n} \qquad (2.2.8)$$

According to the law of large numbers

$$\frac{\sum_{i=1}^{n}\langle X_i, D\rangle^2}{n} \to E(\langle X, D\rangle^2) \qquad (2.2.9)$$

where X is a random vector with i.i.d. normal (0,1) entries. Hence $Y = \langle X, D\rangle$ is also a normal random variable. Now note that $\|D\|_2 = 1$, therefore $\mathrm{Var}(Y) = 1$ and obviously $E(Y) = 0$. Hence Y is a standard normal random variable, so we have $E(Y^2) = 1$ as desired.

Remark 2.2.4. The intuition behind the RIP condition is that we want to probe the unknown matrix A with dispersed design matrices, and the best ones are the orthogonal ones. In other words, the design matrices should spread out evenly on a sphere so as to obtain a better picture of the unknown matrix.

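(C) Restricted Eigenvalue Condition

There exists a positive number $\kappa$ such that

$$\frac{\|\mathcal{X}(D)\|_1}{n\|D\|_F} = \frac{\sum_{i=1}^{n}|\mathrm{tr}(X_i^TD)|}{n\|D\|_F} \ge \kappa \qquad (2.2.10)$$

holds for every nonzero $m_1 \times m_2$ matrix D.

We have a similar conclusion about the REC condition:

Proposition 2.2.3. Under the same Gaussian random design, as $n \to \infty$,

$$\frac{\|\mathcal{X}(D)\|_1}{n\|D\|_F} = \frac{\sum_{i=1}^{n}|\mathrm{tr}(X_i^TD)|}{n\|D\|_F} \to \sqrt{\frac{2}{\pi}} \qquad (2.2.11)$$

Proof. Without loss of generality we can assume $\|D\|_F = 1$. We vectorize every matrix here just as we did in the previous section. Then

$$\frac{\|\mathcal{X}(D)\|_1}{n\|D\|_F} = \frac{\sum_{i=1}^{n}|\mathrm{tr}(X_i^TD)|}{n} = \frac{\sum_{i=1}^{n}|\langle X_i, D\rangle|}{n} \qquad (2.2.12)$$

According to the law of large numbers

$$\frac{\sum_{i=1}^{n}|\langle X_i, D\rangle|}{n} \to E(|\langle X, D\rangle|) \qquad (2.2.13)$$

$Y = \langle X, D\rangle$ is a standard normal random variable, so we have $E(|Y|) = \sqrt{2/\pi}$ as desired.

2.3 Main theorem

2.3.1 Properties of the estimator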

In this section, we state the main result for the estimator. We show that the bound for the estimator can be established in terms of r, $m_1$, $m_2$, n.

Theorem 2.3.1. Suppose we have a matrix recovery problem under the setting above that meets all the assumptions. Then there exist constants C, $C_7$, $C_8$ that do not depend on r, n, $m_1$, $m_2$ such that the estimation error has near oracle performance

IA - AoIIF

-\MiM2

with probability 1 - ( 1

1

r(ml

)C7 r(m1+m 2 )-C8s

in

2)

n

~

logIm1 i 2)

(2.3.1)

2

Remark 2.3.1. We use the constant C instead of big O notation. The constant C does depend on the parameters $\kappa_u$, $\kappa_l$, $a_0$ in the assumptions on the design matrices as well as on the noise distribution. So the tail distribution of the noise will affect the constant C.

=

1nii, we achieve an recovery rate

wt - Ab

with probabilit y 1 -

lF

1

Ar(mi + M 2 ) log(MiM 2

( 1 )Cyr(m1+M2)-C8M1

)

Corollary 2.3.2. Let s

-

n)(2.C3.2)

2.4 Proof of main theorem 2.3.1

Proof. Step 1: Matrix Partition

Based on the cone condition 2.5.6, we are able to decompose $\Delta$ into three parts $\Delta = \Delta' + \Delta'' + \Delta'''$ and partition each part.

In particular, we partition $\Delta'''$ into $m_1m_2/s^2$ blocks by row and column so that each block is of size $s \times s$. They are labeled $E_1, E_2, \dots, E_i$, $i = m_1m_2/s^2$:

$$\begin{pmatrix}E_1 & \cdots & \\ E_2 & \cdots & \\ \vdots & & \vdots \\ E_{m_1/s} & \cdots & E_i\end{pmatrix} \qquad (2.4.1)$$

But we still treat each $E_k$ as an $m_1 \times m_2$ matrix and fill 0 into the remaining entries. For convenience we define $a_k = \|E_k\|_F$.

Then one has

(2.4.2)

and

$$\|\Delta'''\|_F^2 = \|E_1\|_F^2 + \|E_2\|_F^2 + \dots + \|E_i\|_F^2 = a_1^2 + a_2^2 + \dots + a_i^2 \qquad (2.4.3)$$

Similarly we partition the matrices $\Delta'$ and $\Delta''$ in the same way. Since $\Delta'$ plays a role similar to that of $\Delta''$, they are dealt with together in the following inequalities.

$$\Delta' = D_1 + D_2 + \dots + D_i; \qquad \Delta'' = D_{i+1} + \dots + D_{2i} \qquad (2.4.4)$$

and

$$\|\Delta'\|_F^2 = \|D_1\|_F^2 + \|D_2\|_F^2 + \dots + \|D_i\|_F^2 = b_1^2 + b_2^2 + \dots + b_i^2 \qquad (2.4.5)$$

$$\|\Delta''\|_F^2 = \|D_{i+1}\|_F^2 + \dots + \|D_{2i}\|_F^2 = b_{i+1}^2 + \dots + b_{2i}^2 \qquad (2.4.6)$$

And finally we label all the matrices $\{D_j, E_k : 1 \le j \le 2i,\ 1 \le k \le i\}$ as $A_1, A_2, \dots, A_u$, where $u = 3m_1m_2/s^2$.

Remark 2.4.1. The motivation for the partition is that each $A_j$ above now satisfies the s-sparse condition for rows and columns. That means each has a singular value decomposition with singular vectors in the set Ball(s, 0), so the important Lemma 2.5.7 can be applied to each $A_j$ here.

Step 2: Establish constraints on partitioned matrices

Define $S_1 = A_1$ and $S_j = \sum_{k=1}^{j}A_k$, $j = 1, 2, \dots, u$.

According to lemma 2.5.7, with probability at least 1 - pi, one has

Z(Itr(XTA1) + zil - Izil)

ni=1

E(- Z(Itr(X'A1) + z

zn

i- ) - tIIA1IF (2.4.7)

=

Applying Lemma 2.5.7 again, this time to $A_j$ with error $z_i' = \mathrm{tr}(X_i^TS_{j-1}) + z_i$, with probability at least 1 - pi, one has

E(-

t(Itr(X('SX)+zi-tr+zi)

(tr XTSJ + zeI-Itr(X'Sj-_)+zi1)-tA JIF

(2.4.8)

Take the sum of above inequalities, with probability at least 1 - kpi, we have

n

nI

1Z(Itr(X'A) + zil - Izil) (-

U

Z (Itr(X"A) + ziI

IziI) - tEIIAiIF

(2.4.9)

E(-Z (Itr(XTA)+zil - IzI))

(2.4.10)

-

i=

n=

n=

Therefore

tZIAiIlF +Z(tr(XI'A) + z

-

Iz~I)

i=1i=

We first bound the left-hand side of inequality (2.4.10). Note that

(tr(XA) + z l - lzil) 5A(lAol l - llAiI

)

I

n.

(2.4.11)

<5A(IIA'1 lI - IA"||,)

-5A -v/-||IA'I IF

Hence

U

tE IIAiIIF + \V'~lIllF

Z(tr(XTA)

E(

+ z~i

-

Iz~I))

(2.4.12)

i=1

Next we proceed to consider the RHS of (2.4.10)

E(

3n,

by lemma 2.5.4

(ltr(XTA) + zil

-

jz,))

E

Itr(X i

)l(ltr(XTA)ISaA

)

Casel: if IIX(A)1 1 >

(2.4.13)

r_16

> 3||X(A)||1

C3KI'|IIlF

Here we have used the RSC condition relating the $\ell_1$ norm and the Frobenius norm.

Now that

U

=1

12+

+

tZ IIAiIIF + AV2-IlA'IlF <t\/U

V -ll

'I

(2.4.14)

=tvIlA"

+ IA',ll|, + IIZYA'2 -+AV'lA'llF

<2tvI|AllF + A v'IIAllF

We will show that $\|\Delta\|_F = 0$. Assume it is not zero; it then follows from the above inequality and (2.4.12) that

2tVr + A v5 2-

u C3

(2.4.15)

Hence

n < u(C5 /ni + 2) 2 (r(mi + M 2 ))

(2.4.16)

which contradicts our assumption that $n > C_6r(m_1 + m_2)^2/s$. Note that this assumption roughly reflects the degrees of freedom of the problem: we need on the order of $r(m_1 + m_2)^2/s$ observations. Therefore $\|\Delta\|_F = 0$.

by lemma 2.5.4

6

)

Case2: if IIX(A)I I < i,

(Itr(XTA) + Zil

E(

-

n=

lZiD)

tr(XTA) (Itr(XT A)

16

1!

> --

ao

IX(A)11

(2.4.17)

320

+11"2

|

+A

I

= L C4(1

Hence

F

||AiJF

A ,I12+ IIA ll1

C4

+

(2.4.18)

IIA"II 1

+

t

Based on the descending order of ak, one must have

IIA'IIIF + IAI IF

Z

IIAIIF. + IIAFIII

IAF

(2.4.19)

1<k<2r

kE

Hence

t

II~i ~

aAiF+

II F IF)

+

41 IIFa4CAIF

F+

I 1+1V~(l~lF+IAIF

32

-1

I

(2.4.20)

Step 3: Obtain the bound

We would like to maximize f(a, b) =

a

Z b? subject to two constraints. The first

E

constraint comes from Lemma 2.5.6 inequality (B)

Mia, b) = al +

a2

... + a - C,12(i+b2

. + bli) <; 0

(2.4.21)

and the second constraint is a translation of inequality (2.4.20)

U

tZ IJiIF + AV2'r(IIA' IIF + IIAIIIF) > aoC(1AI112 + IIA11112 + 11a"1I12)

(2.4.22)

Using the fact that $b_1 + b_2 + \dots + b_i \ge \|\Delta'\|_F$, one has

f2 (a, b) = C (Z a + Z b2) - [t(E a +

Zbi) +

AV

E b] 5 0

(2.4.23)

The Lagrangian is $L(a, b, u_1, u_2) = f(a, b) + u_1 f_1(a, b) + u_2 f_2(a, b)$. Let $(\bar a, \bar b)$ be a local maximum. The KKT conditions imply that

$$\nabla f = u_1 \nabla f_1 + u_2 \nabla f_2$$

An immediate consequence is that at this local maximum all the $a_i$ are equal and all the $b_i$ are equal. Therefore we can reduce the problem to a two-variable optimization problem.

Now consider

2

gi(a, b) = ia2 _ C2 ib < 0

(2.4.24)

and the second constraint reduces to

g2 (a, b) = C4(ia2 + 2ib2 )

[t(ia + 2ib) + Axv/2ib] < 0

-

(2.4.25)

Solving this optimization problem, we find that the optimal choices for $a$ and $b$ are $a^* = O(t)$ and $b^* = O(t + \lambda\sqrt{r})$. Under this setting, the optimal bound for $\|\Delta\|_F$ is

0(( mim 2 /s(t + Av/r))

This completes the proof of Theorem 1.

2.5 Important lemmas

2.5.1 Cone condition

Proposition 2.5.1 (Decomposability). We adapt the proof in [Negahban and Wainwright, 2011] with slight modifications. Define $\Delta = \hat A - A_0$ to be the difference between the estimate and the true value. Let $\Delta'' = P_{U^\perp}\Delta P_{V^\perp}$ be the projection of $\Delta$ onto the orthogonal complements of $U$ and $V$ respectively, and let $\Delta' = \Delta - \Delta''$.
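As a small worked illustration of this decomposition (a sketch under assumed values, not taken from the thesis), take $r = 1$ and $A_0 = e_1 e_1^T \in \mathbb{R}^{2\times 2}$, and assume that $U$ and $V$ denote the column and row spaces of $A_0$, both spanned by $e_1$. The entries $a, b, c, d$ of $\Delta$ are arbitrary placeholders.

```latex
A_0 = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix}, \qquad
P_{U^\perp} = P_{V^\perp} = \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix}, \qquad
\Delta = \begin{pmatrix} a & b \\ c & d \end{pmatrix}
\;\Longrightarrow\;
\Delta'' = P_{U^\perp}\Delta P_{V^\perp} = \begin{pmatrix} 0 & 0 \\ 0 & d \end{pmatrix}, \qquad
\Delta' = \Delta - \Delta'' = \begin{pmatrix} a & b \\ c & 0 \end{pmatrix}.
```

In this example $\mathrm{rank}(\Delta') \le 2 = 2r$, and $\|A_0 + \Delta''\|_* = 1 + |d| = \|A_0\|_* + \|\Delta''\|_*$. This additivity of the nuclear norm on the orthogonal part is the decomposability property that the proof relies on.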

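Proof. Since $\hat A$ minimizes the function $\psi(A) = \phi(A) + \lambda\|A\|_*$, we have

$$\phi(\hat A) + \lambda\|\hat A\|_* \le \phi(A_0) + \lambda\|A_0\|_* \tag{2.5.1}$$

That is,

$$\lambda(\|A_0\|_* - \|\hat A\|_*) \ge \phi(\hat A) - \phi(A_0) \ge \frac{1}{n}\sum_{i=1}^{n}\mathrm{tr}\big(X_i^T(\hat A - A_0)\big)\,\mathrm{sgn}(z_i) \tag{2.5.2}$$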

Note that if some $z_i$ (the sample value of the error) is 0, the subdifferential at this point can be any value in $[-1, 1]$; hence we interpret $\mathrm{sgn}(z_i)$ as any number in $[-1, 1]$.

Recall that

n

W

(2.5.3)

X'sgn(zi)

=

i=1

tr(XT(A - Ao))sgn(z ) = tr(W T A) >

-

IIWI1 2IIAII*

(2.5.4)

i=

-

||IAII,

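Hence

$$C(\|A_0\|_* - \|A_0 + \Delta\|_*) \ge -\|\Delta\|_* \tag{2.5.5}$$

On the other hand,

$$\|A_0 + \Delta\|_* = \|A_0 + \Delta' + \Delta''\|_* \ge \|A_0 + \Delta''\|_* - \|\Delta'\|_* = \|A_0\|_* + \|\Delta''\|_* - \|\Delta'\|_* \tag{2.5.6}$$

That is,

$$\|\Delta'\|_* - \|\Delta''\|_* \ge \|A_0\|_* - \|\hat A\|_* \tag{2.5.7}$$

So we have

$$C(\|\Delta'\|_* - \|\Delta''\|_*) \ge -\|\Delta\|_* \ge -\|\Delta'\|_* - \|\Delta''\|_* \tag{2.5.8}$$

If we define $C_1 = \frac{C+1}{C-1}$ (for $C > 1$), this becomes

$$\|\Delta''\|_* \le C_1\|\Delta'\|_* \tag{2.5.9}$$

2.5.2 ε-net covering lemma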

Before we state the covering lemma, we introduce some notation.

Definition 2.5.1. Define the s-ball under the q-norm by

$$B(s, q, m_1) = \{x \in \mathbb{R}^{m_1} : \|x\|_q \le s\} \tag{2.5.10}$$

and define

$$\mathrm{Ball}(s, q, m_1, m_2) = \{U\Sigma V^T : \text{columns of } U, V \text{ are in } B(s, q, m_1)\} \tag{2.5.11}$$

For $q = 0$ this set consists of all the $m_1 \times m_2$ matrices that have a singular value decomposition with s-sparse singular vectors. We write $\mathrm{Ball}(s, q)$ for $\mathrm{Ball}(s, q, m_1, m_2)$ for convenience.

Lemma 2.5.2. Consider the set $\mathcal{X} = \{X = U\Sigma V^T : U, V \in \mathrm{Ball}(s, 0),\ \mathrm{rank}(X) \le s,\ \|X\|_F = 1\}$. There is an $\epsilon$-net $\bar{\mathcal{X}}$ of $\mathcal{X}$ whose size is at most

$$\Big(\frac{9 m_1 s}{\epsilon}\Big)^{3s^2} \tag{2.5.12}$$

Proof. This is an adaptation of the proof in [Plan, 2011], with some modifications for our problem. We construct the $\epsilon$-net in the following steps.

Step 1. According to [Bourgain and Milman, 1987], for the set $B(s, 0, m_1, 1)$, which consists of all s-sparse vectors of length $m_1$, there is an $\epsilon/3s$-net of size $(9 m_1 s/\epsilon)^s$. Note that the space of all $m_1 \times s$ column-wise orthogonal matrices with s-sparse columns can thus be covered by the product of $s$ independent nets. The product net, denoted by $M_U$, has size at most $(9 m_1 s/\epsilon)^{s^2}$. The same argument applies to generating $M_V$.

In addition there is an $\epsilon/3$-net $M_\Sigma$ with size at most

(9)32

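Now for each $\bar U \in M_U$, $\bar V \in M_V$ and $\bar\Sigma \in M_\Sigma$,

$$\begin{aligned}
\|U\Sigma V^T - \bar U\bar\Sigma\bar V^T\|_F &= \|(U\Sigma V^T - \bar U\Sigma V^T) + (\bar U\Sigma V^T - \bar U\bar\Sigma V^T) + (\bar U\bar\Sigma V^T - \bar U\bar\Sigma\bar V^T)\|_F \\
&\le \|(U - \bar U)\Sigma V^T\|_F + \|\bar U(\Sigma - \bar\Sigma)V^T\|_F + \|\bar U\bar\Sigma(V - \bar V)^T\|_F
\end{aligned} \tag{2.5.13}$$

Hence we can take the product of $M_U$, $M_V$ and $M_\Sigma$ to form an $\epsilon$-net of the set, which has size at most

$$\Big(\frac{9 m_1 s}{\epsilon}\Big)^{3s^2}$$

2.5.3 Expectation lemma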

Lemma 2.5.3. The function $g(c) = E(|z_i + c| - |z_i|)$ is twice differentiable provided that the density function $f(z)$ is continuously differentiable.

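Proof. First, we show that this function is differentiable under a regularity condition on the distribution of the random variable $z_i$. Assume that $z_i$ has a continuously differentiable density function $f(z)$, with distribution function $F$. The difference of expectations can be expressed as

$$g(c) = E(|z_i + c| - |z_i|) = \int_{-\infty}^{\infty}|z + c|\, f(z)\,dz - \int_{-\infty}^{\infty}|z|\, f(z)\,dz \tag{2.5.14}$$

Hence when $c > 0$, splitting the integral at $z = -c$ and $z = 0$ and differentiating (the boundary terms at $z = -c$ cancel),

$$\begin{aligned}
g(c) &= \int_{-\infty}^{-c}(-c)\, f(z)\,dz + \int_{-c}^{0}(2z + c)\, f(z)\,dz + \int_{0}^{\infty} c\, f(z)\,dz, \\
g'(c) &= -F(-c) + \big(F(0) - F(-c)\big) + \big(1 - F(0)\big) = 1 - 2F(-c)
\end{aligned} \tag{2.5.15}$$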

One can easily check that the same expression holds for $c < 0$. This expression shows that $g(c)$ is twice differentiable provided that the density function $f(z)$ is continuously differentiable:

$$g'(c) = 1 - 2F(-c) \tag{2.5.16}$$

Since we have assumed that the noise $z_i$ is symmetric with respect to 0, we have $F(0) = 1/2$ and hence $g'(0) = 0$.

The second order derivative

$$g''(c) = 2f(-c) \tag{2.5.17}$$

is always nonnegative. This implies that $g(c)$ is a globally convex function.
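The two derivative formulas can be sanity-checked numerically. The sketch below is an illustration only: it assumes standard normal noise (our choice, not the thesis's), approximates $g(c)$ with a trapezoid rule whose grid is aligned with the kinks of the integrand, and compares finite differences of $g$ with $1 - 2F(-c)$ and $2f(-c)$. The function names, grid width and step sizes are hypothetical choices.

```python
import math

def phi(z):
    """Standard normal density f(z) (assumed noise distribution)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def Phi(z):
    """Standard normal distribution function F(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def g(c, half_width=10.0, h=1e-3):
    """Trapezoid approximation of E(|z + c| - |z|) for z ~ N(0, 1).

    The grid step h divides the values of c used below, so the kinks of
    the integrand at z = -c and z = 0 fall on grid nodes.
    """
    n = int(round(2.0 * half_width / h))
    total = 0.0
    for k in range(n + 1):
        z = -half_width + k * h
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * (abs(z + c) - abs(z)) * phi(z)
    return total * h

def check(c, dc=0.01):
    """Return (numeric g', formula g', numeric g'', formula g'') at c."""
    g_plus, g_mid, g_minus = g(c + dc), g(c), g(c - dc)
    d1 = (g_plus - g_minus) / (2.0 * dc)            # central difference for g'
    d2 = (g_plus - 2.0 * g_mid + g_minus) / dc**2   # second difference for g''
    return d1, 1.0 - 2.0 * Phi(-c), d2, 2.0 * phi(-c)

if __name__ == "__main__":
    for c in (0.0, 0.5, -1.0):
        print(c, check(c))
```

For each tested $c$ the numeric and closed-form derivatives should agree up to the finite-difference error, and the first derivative vanishes at $c = 0$, as symmetry of the noise requires.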

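2.5.4 Expectation difference lemma

Lemma 2.5.4 (See Wang [2013]). Suppose we impose a further assumption on the distribution of the error $z_i$, namely

$$P(z_i \ge x) \le \frac{1}{2 + a_0 x} \ \text{ for all } x \ge 0, \qquad P(z_i \le x) \le \frac{1}{2 + a_0|x|} \ \text{ for all } x < 0. \tag{2.5.18}$$

Then

$$E(|z_i + c| - |z_i|) \ge \frac{a_0}{16}\,|c|\Big(|c| \wedge \frac{6}{a_0}\Big) \tag{2.5.19}$$

Lemma 2.5.5 (See Wang [2013]). For a vector $x = (x_1, x_2, \dots, x_n) \in \mathbb{R}^n$, we have

$$G(x) = \sum_{i=1}^{n}|x_i|\big(|x_i| \wedge U\big) \ge \begin{cases} \dfrac{U\|x\|_1}{2} & \text{if } \|x\|_1 \ge nU/2 \\[2mm] \dfrac{\|x\|_1^2}{n} & \text{if } \|x\|_1 < nU/2 \end{cases} \tag{2.5.20}$$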
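A quick randomized check can make the two regimes of Lemma 2.5.5 concrete. The sketch below assumes the lemma reads $G(x) = \sum_i |x_i|(|x_i| \wedge U) \ge U\|x\|_1/2$ when $\|x\|_1 \ge nU/2$ and $G(x) \ge \|x\|_1^2/n$ otherwise; the value of `U`, the sample sizes and the helper names are hypothetical choices made for illustration.

```python
import random

def G(x, U):
    """G(x) = sum_i |x_i| * min(|x_i|, U)."""
    return sum(abs(v) * min(abs(v), U) for v in x)

def lower_bound(x, U):
    """Piecewise lower bound on G(x), as read from Lemma 2.5.5."""
    n = len(x)
    l1 = sum(abs(v) for v in x)
    if l1 >= n * U / 2.0:
        return U * l1 / 2.0   # large-norm regime: linear in ||x||_1
    return l1 * l1 / n        # small-norm regime: quadratic in ||x||_1

rng = random.Random(0)        # fixed seed so the check is reproducible
U = 1.5                       # assumed truncation level
violations = 0
for _ in range(2000):
    n = rng.randint(1, 8)
    scale = rng.choice([0.1, 1.0, 10.0])   # exercise both regimes
    x = [rng.gauss(0.0, scale) for _ in range(n)]
    if G(x, U) < lower_bound(x, U) - 1e-9:
        violations += 1

if __name__ == "__main__":
    print("violations:", violations)
```

The small scales exercise the quadratic regime and the large scale the linear regime. This is a numerical spot check, not a proof.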

Remark: The function $g(c)$ is in fact a globally convex function of $c$. The three lemmas on the expectation difference ensure that $g(c)$ behaves quadratically near 0 and like a linear function of $|c|$ asymptotically (i.e. when $|c|$ is sufficiently large).
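The quadratic-then-linear behaviour can be seen explicitly for heavy-tailed noise. The sketch below assumes standard Cauchy noise and $a_0 = 1$ (both are our choices for illustration; the tail condition is checked on a grid, not proved). For this distribution $F(x) = 1/2 + \arctan(x)/\pi$, so integrating $g'(t) = 1 - 2F(-t)$ from $g(0) = 0$ gives a closed form for $g$, which is compared with the lower bound $\frac{a_0}{16}|c|(|c| \wedge 6/a_0)$ as we read it from Lemma 2.5.4.

```python
import math

A0 = 1.0  # assumed constant a_0 for the standard Cauchy distribution

def upper_tail(x):
    """P(z >= x) for standard Cauchy noise."""
    return 0.5 - math.atan(x) / math.pi

def g(c):
    """E(|z + c| - |z|) for standard Cauchy z.

    Obtained by integrating g'(t) = 1 - 2F(-t) = (2/pi) * atan(t) from 0 to c.
    """
    return (2.0 / math.pi) * (c * math.atan(c) - 0.5 * math.log1p(c * c))

def lemma_bound(c, a0=A0):
    """Lower bound (a0/16) * |c| * min(|c|, 6/a0), as read from Lemma 2.5.4."""
    return a0 / 16.0 * abs(c) * min(abs(c), 6.0 / a0)

grid = [0.01 * k for k in range(1, 100001)]   # 0.01, 0.02, ..., 1000

# Tail condition P(z >= x) <= 1 / (2 + a0 * x), checked on the grid for x > 0;
# the condition for x < 0 follows by symmetry of the Cauchy distribution.
tail_ok = all(upper_tail(x) <= 1.0 / (2.0 + A0 * x) for x in grid)

# Lower bound on g(c), checked for both signs of c.
bound_ok = all(g(s * c) >= lemma_bound(s * c) for c in grid for s in (1.0, -1.0))

if __name__ == "__main__":
    print("tail condition holds on grid:", tail_ok)
    print("lower bound holds on grid:", bound_ok)
    for c in (0.1, 1.0, 10.0, 100.0):
        print(c, g(c), lemma_bound(c))
```

Although the Cauchy distribution has no finite mean, the difference $E(|z + c| - |z|)$ is finite, which is one reason the absolute deviation loss remains usable under heavy-tailed errors.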

2.5.5 Finer version of cone condition

In this part we introduce a lemma that can be viewed as a stronger version of the cone condition above. More precisely, we establish a series of inequalities regarding the partitioned submatrices above. This finer cone condition is also critical to our main result.

Lemma 2.5.6 (Strong version). Nuclear norm cone implies Frobenius norm cone. Assume that $\Delta = \Delta' + \Delta''$ is a decomposition satisfying

(B) the (nuclear norm) cone condition $\|\Delta''\|_* \le C\|\Delta'\|_*$ holds;

(C) $\mathrm{rank}(\Delta') \le 2r$.

Then there is a decomposition of A = A' + A" + a" satisfying

(A)

IIA 11y2

}.(11A ' 112 + |I| II12 + ||1a "|11);

(B) The Frobenius norm cone condition holds ||A"iiF

C max (IIA' F, IIA IF);

(C) rank(A') + rank(A") < 2r.

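Proof. Suppose the singular value decomposition of $\Delta'$ is

$$\Delta' = \sigma_1 u(\sigma_1)v(\sigma_1)^T + \sigma_2 u(\sigma_2)v(\sigma_2)^T + \dots + \sigma_{2r} u(\sigma_{2r})v(\sigma_{2r})^T$$

and the SVD of $\Delta''$ is

$$\Delta'' = \sigma_{2r+1} u(\sigma_{2r+1})v(\sigma_{2r+1})^T + \sigma_{2r+2} u(\sigma_{2r+2})v(\sigma_{2r+2})^T + \dots + \sigma_m u(\sigma_m)v(\sigma_m)^T.$$

In the following proof we write $\sigma_m uv^T$ as shorthand for $\sigma_m u(\sigma_m)v(\sigma_m)^T$, since the singular vectors $u$ and $v$ are the ones corresponding to the singular value $\sigma_m$.

The nuclear norm cone condition implies that

$$C(\sigma_1 + \sigma_2 + \dots + \sigma_{2r}) \ge \sigma_{2r+1} + \dots + \sigma_m$$

We rearrange the singular values $\sigma_1, \sigma_2, \dots, \sigma_m$ in descending order, $\sigma_{i_1} \ge \sigma_{i_2} \ge \dots \ge \sigma_{i_m}$. Define $I = \{i_1, i_2, \dots, i_{2r}\}$ to be the indices of the $2r$ largest singular values. The cone condition still holds:

$$C\sum_{k \in I}\sigma_k \ge \sum_{k \notin I}\sigma_k$$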

Let

A'=

AI = A - AI

kUV,

kEI,1<k<2r

k' I

A

kUV>2,AI=

I

kEI,k>2r

Define

\" = A'c + A'c and we will verify the condition actually holds.

(C) is straightforward since rank(A') + rank(A') = #1 = 2r.

(B)

1

"|111 IIA'c||2 + ||A'/c||2

Ok +

5

Ok

kEIc,k>2r

kEIc,1 k<2r

i 2,(E

Ok)

kEIc

(2.5.21)

kEI

< 2C max (IIA'11 2, 11 A'll

)

C(IAflI2 + IIAII1)

IIAII2 = IIA'II1

+

(A)

1|1"11

+IIA

2 I2IF2>! 2+1A1

IIA F+1A/112

IF2IF +IZ1IF

II"I'

+

|A'2

I

1 +

lA

|A"||1

+ 1a"|11)

(2.5.22)

0

This completes the proof.

2.5.6 Concentration lemma

Lemma 2.5.7. Suppose S, = {D E RmXM2 : D E Ball(s, 0), rank(D) 5 s,

lID IF

=

1}, then

there exists constants C8 and C9 then

r(mi + M 2 ) log(mi)

sup IB(D)I 5 t == C

(2.5.23)

DESr

holds with probability at least 1 - ( 1

Proof. Note that IB(D)I

1

En

)(Cs(m1+m2)-C9(2s+r))

1 (Itr(XTD)

+ zil - Iz i - E(Itr(XTD) + zil - Izil)), and

Itr(XTD) + zil - Izil - E(Itr(XTD) + zil - Izil) E [-2tr(XTD),2tr(XTD)]. According to

Hoeffding's inequality,

If IIDIIF

=

1, we have (by

IIX(D)11 2

t)

n2"IIDIIF, let

(2.5.24)

t 2 n2 11

2 exp (

)

P(IB(D)I

K 2 =2

nt2

(2.5.25)

Lemma 2.5.2 that the set S, = {D e Rm1"2 : D E Ball(s, 0), IIDIIF

5

r

=

1} has an E-net

with respect to the Frobenius norm obeying

s32

I5rI < (

Therefore we obtain the union bound for the covering subset. That is

P((sup IB(D)I

DES,

t)

2 (9mls) 3 s2

6

(2.5.26)

exp (--2

Also note that

_2

tr(XTD1) - tr(XT D 2 )1

Sn= -IIX(Di - D 2 1, 5 2K 211D 1 - D 2IIF

)

IB(D1) - B(D 2 )|

(2.5.27)


Therefore

DESr

t) <P(sup iB(D)I > t - 2

2

E)

DEs

!;2(9mis

)

1 n(t -

32

p

22E)

2

(2.5.28)

(

P(sup IB(D)I

8K 2

Now let

t

t = C6 Vr(mi + m 2) log(mi)

V/n-

4rI2

(2.5.29)

Plug into the above inequality, one has

P( sup JB(D)j ': t) <5P( sup JB(D)j > t - 2KiE)

DESr

Dr

(

)

(2.5.30)

n

3s2 exp

<2(

- 9mis)

E

(32K2

Assume that $m_1 < n < m_1^{\alpha}$, where $\alpha$ is a constant greater than 1. This is what we mean by "limited observations".

Let

pi = 2 (9mls )

6

()(Cs(mI+m 2)r-Cgs 2)

(2.5.32)

Pi

m

3 2

exp

m-C(M+M2)r

nt2

(32K2

(2.5.31)

1

Remark 2.5.1. Let's analyze the probability pi here. If both r and s are comparatively

m

smaller than m1 . For example if r = s = yfii, the probability is approximately mi l and

the probability is sufficiently small in high dimensional setting.


Appendix A

Tables


Appendix B

Figures


Bibliography

Alexandre Belloni, Victor Chernozhukov, and Lie Wang. Square-root lasso: pivotal recovery

of sparse signals via conic programming. Biometrika, 98(4):791-806, 2011.

James Bennett and Stan Lanning. The netflix prize. In Proceedings of KDD cup and workshop, volume 2007, page 35, 2007.

Peter J Bickel, Ya'acov Ritov, and Alexandre B Tsybakov. Simultaneous analysis of lasso

and dantzig selector. The Annals of Statistics, pages 1705-1732, 2009.

Jean Bourgain and Vitaly D Milman. New volume ratio properties for convex symmetric bodies in $\mathbb{R}^n$. Inventiones Mathematicae, 88(2):319-340, 1987.

Jian-Feng Cai, Emmanuel J Candes, and Zuowei Shen. A singular value thresholding algorithm for matrix completion. SIAM Journal on Optimization, 20(4):1956-1982, 2010.

Emmanuel Candes and Terence Tao. The dantzig selector: statistical estimation when p is

much larger than n. The Annals of Statistics, pages 2313-2351, 2007.

Emmanuel J Candes. The restricted isometry property and its implications for compressed

sensing. Comptes Rendus Mathematique, 346(9):589-592, 2008.

Emmanuel J Candes and Benjamin Recht. Exact matrix completion via convex optimization.

Foundations of Computational mathematics, 9(6):717-772, 2009.

Emmanuel J Candes and Terence Tao. The power of convex relaxation: Near-optimal matrix

completion. Information Theory, IEEE Transactions on, 56(5):2053-2080, 2010.

Emmanuel J Candes and Yaniv Plan. Matrix completion with noise. Proceedings of the IEEE,

98(6):925-936, 2010.

Mark A Davenport, Yaniv Plan, Ewout van den Berg, and Mary Wootters. 1-bit matrix

completion. Information and Inference, 3(3):189-223, 2014.

Maryam Fazel, Haitham Hindi, and Stephen P Boyd. A rank minimization heuristic with

application to minimum order system approximation. In American Control Conference,

2001. Proceedings of the 2001, volume 6, pages 4734-4739. IEEE, 2001.

David Gross. Recovering low-rank matrices from few coefficients in any basis. CoRR, abs/0910.1879, 2009. URL http://arxiv.org/abs/0910.1879.

David Gross. Recovering low-rank matrices from few coefficients in any basis. Information

Theory, IEEE Transactions on, 57(3):1548-1566, 2011.

Raghunandan Keshavan, Andrea Montanari, and Sewoong Oh. Matrix completion from noisy

entries. In Advances in Neural Information Processing Systems, pages 952-960, 2009.

Olga Klopp. Noisy low-rank matrix completion with general sampling distribution. Bernoulli, 20(1):282-303, 02 2014. doi: 10.3150/12-BEJ486. URL http://dx.doi.org/10.3150/12-BEJ486.

Vladimir Koltchinskii, Karim Lounici, and Alexandre B Tsybakov. Nuclear-norm penalization and optimal rates for noisy low-rank matrix completion. The Annals of Statistics, 39(5):2302-2329, 2011.

Peter D Lax. Functional analysis. Pure and Applied Mathematics. Wiley, New York, NY, 2002. URL https://cds.cern.ch/record/1001867.

Zhang Liu and Lieven Vandenberghe. Interior-point method for nuclear norm approximation with application to system identification. SIAM Journal on Matrix Analysis and

Applications, 31(3):1235-1256, 2009.

Rahul Mazumder, Trevor Hastie, and Robert Tibshirani. Spectral regularization algorithms for learning large incomplete matrices. The Journal of Machine Learning Research, 99:2287-2322, 2010.

Shike Mei, Bin Cao, and Jiantao Sun. Encoding low-rank and sparse structures simultaneously in multi-task learning.

Sahand Negahban and Martin J Wainwright. Estimation of (near) low-rank matrices with

noise and high-dimensional scaling. The Annals of Statistics, 39(2):1069-1097, 2011.

Sahand Negahban, Bin Yu, Martin J Wainwright, and Pradeep K Ravikumar. A unified

framework for high-dimensional analysis of m-estimators with decomposable regularizers.

In Advances in Neural Information Processing Systems, pages 1348-1356, 2009.

Arkadi Nemirovski. Sums of random symmetric matrices and quadratic optimization under

orthogonality constraints. Mathematical programming, 109(2-3):283-317, 2007.

Yurii Nesterov. A method of solving a convex programming problem with convergence rate O(1/k^2). In Soviet Mathematics Doklady, volume 27, pages 372-376, 1983.

Yaniv Plan. Compressed sensing, sparse approximation, and low-rank matrix estimation.

PhD thesis, California Institute of Technology, 2011.

Benjamin Recht. A simpler approach to matrix completion. The Journal of Machine Learning Research, 12:3413-3430, 2011.

Benjamin Recht, Maryam Fazel, and Pablo A Parrilo. Guaranteed minimum-rank solutions

of linear matrix equations via nuclear norm minimization. SIAM review, 52(3):471-501,

2010.


Jasson D. M. Rennie and Nathan Srebro. Fast maximum margin matrix factorization for collaborative prediction. In Proceedings of the 22nd International Conference on Machine Learning, ICML '05, pages 713-719, New York, NY, USA, 2005. ACM. ISBN 1-59593-180-5. doi: 10.1145/1102351.1102441. URL http://doi.acm.org/10.1145/1102351.1102441.

Angelika Rohde, Alexandre B Tsybakov, et al. Estimation of high-dimensional low-rank

matrices. The Annals of Statistics, 39(2):887-930, 2011.

Robert Tibshirani. Regression shrinkage and selection via the lasso. Journal of the Royal

Statistical Society. Series B (Methodological), pages 267-288, 1996.

Lie Wang. The L1 penalized LAD estimator for high dimensional linear regression. Journal of Multivariate Analysis, 120:135-151, 2013.

G Alistair Watson. Characterization of the subdifferential of some matrix norms. Linear

Algebra and its Applications, 170:33-45, 1992.
