Solid-State Drive Caching in the IBM XIV Storage System

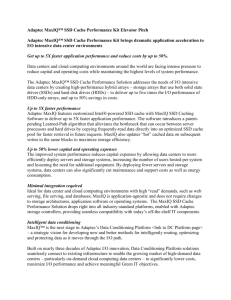
