Sharing statistics for SPARQL Federation optimization, with emphasis on benchmark quality

advertisement

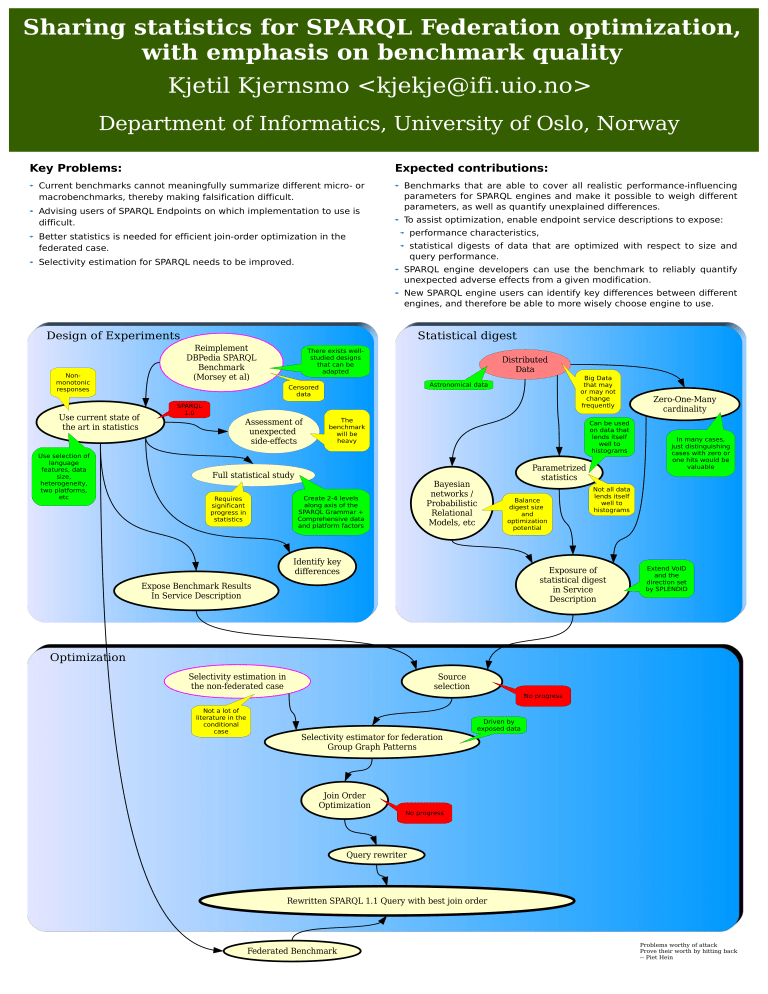

Sharing statistics for SPARQL Federation optimization, with emphasis on benchmark quality Kjetil Kjernsmo <kjekje@ifi.uio.no> Department of Informatics, University of Oslo, Norway Key Problems: Expected contributions: Current benchmarks cannot meaningfully summarize different micro- or macrobenchmarks, thereby making falsification difficult. Advising users of SPARQL Endpoints on which implementation to use is difficult. Benchmarks that are able to cover all realistic performance-influencing parameters for SPARQL engines and make it possible to weigh different parameters, as well as quantify unexplained differences. To assist optimization, enable endpoint service descriptions to expose: performance characteristics, Better statistics is needed for efficient join-order optimization in the federated case. statistical digests of data that are optimized with respect to size and query performance. Selectivity estimation for SPARQL needs to be improved. SPARQL engine developers can use the benchmark to reliably quantify unexpected adverse effects from a given modification. New SPARQL engine users can identify key differences between different engines, and therefore be able to more wisely choose engine to use. Design of Experiments Nonmonotonic responses Use current state of the art in statistics Use selection of language features, data size, heterogeneity, two platforms, etc Statistical digest Reimplement DBPedia SPARQL Benchmark (Morsey et al) There exists wellstudied designs that can be adapted Distributed Data Big Data that may or may not change frequently Astronomical data Censored data SPARQL 1.0 Assessment of unexpected side-effects The benchmark will be heavy Can be used on data that lends itself well to histograms Full statistical study Bayesian networks / Probabilistic Relational Models, etc Create 2-4 levels along axis of the SPARQL Grammar + Comprehensive data and platform factors Requires significant progress in statistics Parametrized statistics Balance digest size and optimization potential Identify key differences In many cases, just distinguishing cases with zero or one hits would be valuable Not all data lends itself well to histograms Exposure of statistical digest in Service Description Expose Benchmark Results In Service Description Zero-One-Many cardinality Extend VoID and the direction set by SPLENDID Optimization Selectivity estimation in the non-federated case Source selection No progress Not a lot of literature in the conditional case Driven by exposed data Selectivity estimator for federation Group Graph Patterns Join Order Optimization No progress Query rewriter Rewritten SPARQL 1.1 Query with best join order Federated Benchmark Problems worthy of attack Prove their worth by hitting back -- Piet Hein