Raytheon Electronic Systems Experience in Software Process Improvement, Technical Report CMU/SEI-95-TR-017

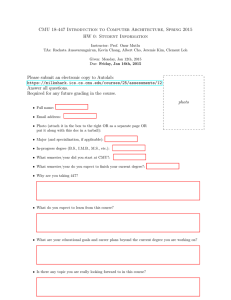

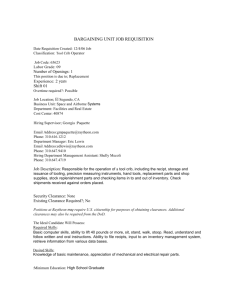

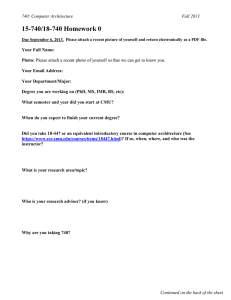

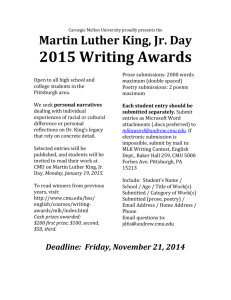
