APPLICATION AWARE FOR BYZANTINE FAULT TOLERANCE

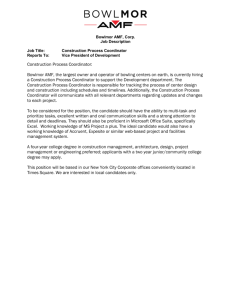
