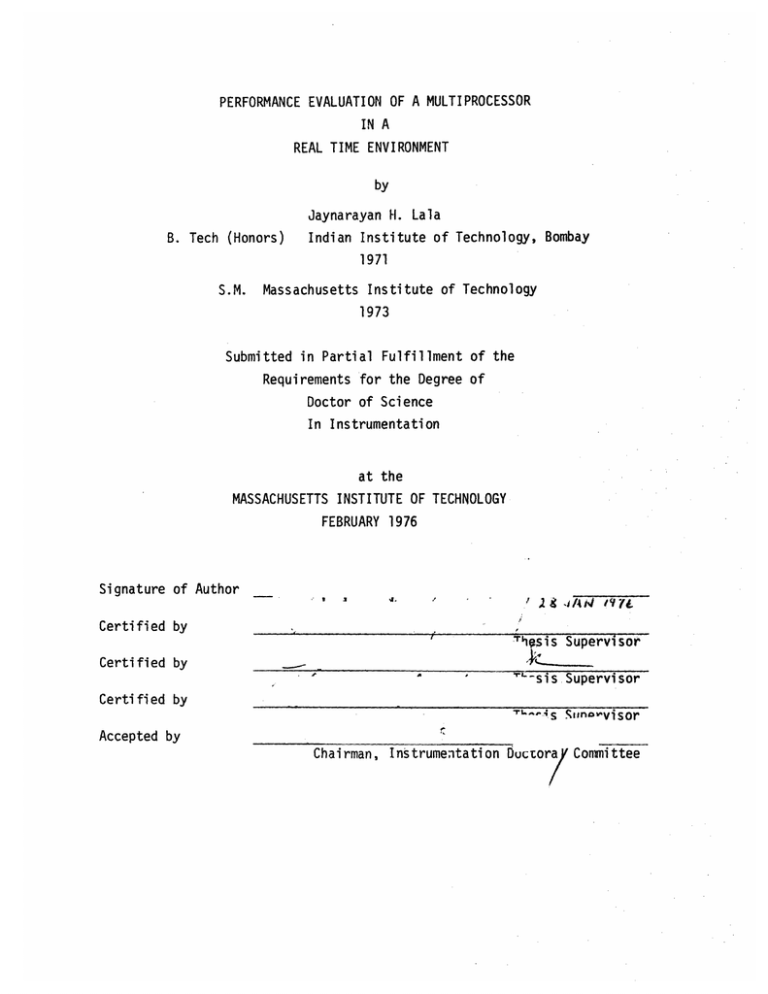

A IN REAL TIME ENVIRONMENT B.
