GAO DHS SCIENCE AND TECHNOLOGY Additional Steps

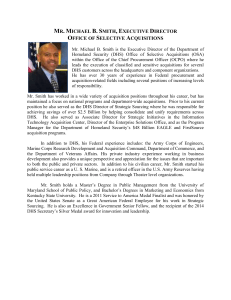
