
OUTCOME ASSESSMENT

FALL 2005

Department of Political Science
Political Science Majors and International Relations Majors

Introduction: The Political Science Department conducts outcome assessment for two majors: Political Science and International Relations. The Department conducts its assessment through a careful examination of consensually determined criteria and in a methodologically appropriate manner. The Department frequently discusses the results of its assessments during regularly scheduled faculty meetings. The Department has made modifications to both its methodology and its two majors, particularly the International Relations major. Finally, the Department intends to hold a mini-retreat to further consider adjustments to both its methodology and its curriculum to address some of the shortcomings indicated by its assessment process.

Goals and Outcomes: The goals for the Political Science Department's assessment of the Political Science Major and the International Relations Major are to have students demonstrate critical and analytic thinking, proper research, and effective communication. The outcomes of these goals are as follows:

Dimension | Expectations

Critical and Analytic Criteria
Thesis Component | Clearly articulated thesis.
Hypothesis Component | Research question or hypothesis is clearly formulated.
Evidence Component | Evidence is generally appropriate.
Conclusions Component | Draws appropriate conclusions.

Research Criteria
Sources Component | Five to ten scholarly sources cited, or a combination of scholarly sources, government documents, interviews, foreign news sources, and articles from newspapers of record.
Citations Component | Appropriate citations (footnotes, endnotes, embedded).
Bibliography Component | Properly organized bibliography.

Articulate and Communicate Criteria
Organization Component | Good organization.
Paragraphs Component | Consistently developed paragraphs.
Sentence Structure Component | Concise sentence structure.
Grammar Component | [...] grammatical errors.

The goals and outcomes were developed in a subcommittee consisting of Dr. Schulz, Dr.

Charlick, and Dr. Elkins. The goals and outcomes were presented to the entire Department for

approval. The goals and outcomes were refined by the

department and approved by the

department. The goals and outcomes have not been modified since the approval (See Appendix

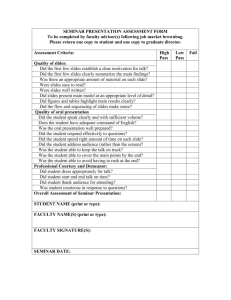

Research Methods: The method of assessment is based on students demonstrating outcomes as indicated by their final papers in the Department's senior seminars. The faculty members teaching a senior seminar submit unmarked and anonymous final papers from each senior seminar to the chair. The chair randomly selects from these papers a representative sample to distribute to paired teams of reviewers. The reviewers are faculty members in the Political Science Department who did not teach senior seminars (faculty members who did teach senior seminars are excluded from the pool of reviewers). The reviewers assess each paper using an instrument measuring the outcomes of the discrete components of the goals (Appendix B).

The measurement instrument was modified between the spring 2003 and fall 2003 assessments to increase the level of inter-coder reliability by increasing the number of measurement categories:

Spring 2003 instrument: 3 = Exceeds Expectations; 2 = Meets Expectations; 1 = Does Not Meet Expectations
Fall 2003 instrument: 5 = Exceeds Expectations; 4; 3 = Meets Expectations; 2; 1 = Does Not Meet Expectations

The Office of Assessment's review of the Department's assessment instrument indicated that the rating instrument should have only three categories (Research 8: "Consider having three rates for each paper."). The Department has only three rates for each paper, as indicated above. However, it allows faculty member reviewers to select scoring categories indicating that a feature of an assessment component may not completely meet its target expectation, while acknowledging that the paper either excelled or was deficient to some degree on this feature.

The Department altered its measurement instrument in the fall of 2005 to correspond to suggestions made by the Office of Assessment. The Office of Assessment noted, "We question how you are measuring 'diction' using a written paper." The Department eliminated this feature from the rating form.

Findings: The Political Science Department has produced four reports based on its assessment process. In general, the findings indicate that the majority of Political Science Majors and International Relations Majors are meeting or exceeding departmentally established expectations. (For more detailed information, see Appendix C: Student Assessment Results, Spring 2003; Student Assessment Results, Fall 2003; Student Assessment Results, Spring 2004; Student Assessment Results, Fall 2004; and Influence of the Data Analysis Course on Senior Seminar Grades.) The Department is currently conducting its review of senior seminar final papers for the spring 2005 semester. The report will be distributed in the fall of 2005 and discussed at the first departmental faculty meeting.

Review: Students are involved in the review process through their submission of senior seminar papers to the instructor of record. All faculty members are involved in the review process, either as instructors in senior seminars or as reviewers for the purposes of student assessment. In addition, reports were distributed to faculty members and discussed in subsequent Departmental faculty meetings.

Action: The Department has determined that the guidelines for the International Relations major do not indicate clearly enough the proper sequencing of the senior seminar. According to current guidelines, International Relations majors may take the seminar as if it were a regular course. The intent, however, is that the senior seminar is a capstone course. The Department has directed the chair to take steps to articulate clearly that the senior seminar is a capstone course and should be taken only near the completion of the degree, specifically after the student has completed the core and track requirements. The chair informs International Relations majors during the advising process that senior seminars are capstone courses. In addition, the Department asks students to seek authorization to enroll in seminars.

At the most recent faculty meeting (April 28, 2004), the Department decided that it must study ways to improve its student assessment for its two majors (Political Science Major and International Relations Major).

Two issues stood out:

Assessment Agreement: Concern was expressed about the low level of inter-coder reliability in the fall 2004 semester. Specifically, colleagues expressed concerns about the level of agreement regarding the Critical and Analytical Component of the assessment. Proposed response: Determine and define effective measurements for this criterion.

Quality of Student Response: A separate but entwined issue is the low level of satisfaction reviewers expressed with students' seminar papers in the Critical and Analytical Component. Proposed responses: (1) eliminate the criterion, (2) revise the criterion's measurement, (3) increase the research methods component in 300-level courses, (4) assign a research paper writing text in seminars, (5) require a methods course for International Relations Majors.

Action plan:

As a first step, the Department's faculty indicated that they would like to convene a brief meeting, perhaps a retreat, to examine further the nature of assessment agreement in the Critical and Analytical Component. The faculty indicated that a selection of seminar papers from multiple semesters should be provided. In this meeting, the faculty will review and assess the papers using the Department's assessment rubric. Then, the faculty members will discuss the elements of their individual decisions regarding their rankings.

Appendix B

Assessment Measurement Instrument


Appendix A

Assessment Outcome Expectations


Appendix C

Student Assessment Results, Spring 2003
Student Assessment Results, Fall 2003
Student Assessment Results, Spring 2004
Student Assessment Results, Fall 2004
Influence of the Data Analysis Course on Senior Seminar Grades

MEMORANDUM

To: PSC Faculty
Fr: D. Elkins, Interim Chair
Date: September 25, 2003
Re: Student Assessment Results, Spring 2003

Overview

The results of the first student assessment have been calculated. In brief, the reviewing faculty found that over three-quarters (76.3%) of the papers assessed either met or exceeded the established expectations. However, the faculty, paired as reviewers, had a low degree of inter-coder reliability (r = [...]). The paired faculty reviewers agreed on only two-fifths (40.0%) of the paired reviews.

Specific Findings

On the attached pages, you will find tables depicting the results of the assessment. I have divided this section into two parts: the first relates to the student assessment, and the second relates to the inter-coder reliability issue.

Student Assessment. The findings of the assessment indicate that the reviewers had their most serious reservations about the papers in the Critical and Analytic Criteria. As illustrated in Table 1, well over a third of the assessed papers did not meet established expectations (37.5%). The components that the reviewers found most troublesome were the Hypothesis Component and the Conclusions Component.

Despite the relatively low scores on the Critical and Analytic Criteria, the reviewers found that the vast majority of the assessed papers either met or exceeded established expectations for the Research Criteria and the Articulate and Communicate Criteria. The Research Criteria result was particularly noteworthy: in over two-fifths of the reviews, the papers were scored as exceeding established expectations in this area, led by the Sources Component and the Bibliography Component.

Inter-Coder Reliability. This was a non-trivial problem with the assessment. As Table 2 illustrates, the reviewing faculty agreed on only 40.0% of the assessed papers' dimensions. The area of most agreement was the Research Criteria, where the reviewers agreed over half the time. By contrast, the Critical and Analytical Criteria and the Articulate and Communicate Criteria had low levels of agreement. The reviewers were more likely to disagree than agree in these two criteria. However, the nature of the disagreement differed between them.

The Critical and Analytic Criteria had the more difficult and troubling forms of disagreement. There were more Major Disagreements (one reviewer scores a paper's dimension as Exceeds Expectations and the other scores the paper on this dimension as Does Not Meet Expectations) in this section than in any other section of the assessment. The reviewers were as likely to disagree in the Articulate and Communicate Criteria as they were in the Critical and Analytic Criteria. However, there the disagreements were almost as likely to be over whether a paper exceeded or merely met expectations as over whether it met them at all.

Proposed Suggestions

My suggestions are divided into Substantive Outcome of Assessment and Inter-coder Reliability sections. In the first, I observe that most students write case studies and that the instruction I use in the Data Analysis class has a quantitative assumption bias. In the second, I suggest that we modify the instrument, based on the suggestion of a colleague, to minimize the inter-coder reliability problem.

Substantive Outcome of Assessment: This is the Department's first attempt at conducting student assessment with this instrument and process, and these results are obviously preliminary. My chief observation is that most of the students write case studies rather than quantitative papers. Given that the reviewers of the papers indicate the greatest reservations in the Critical and Analytic Criteria, I think that this is a weakness that should be discussed.

I can only speak for the section of PSC 251 Data Analysis that I teach, but I spend very little time instructing students on proper techniques of case study analysis. Indeed, I instruct students with a quantitative assumption bias; that is, I instruct students presuming that they are going to use quantitative methods. Still, there are at least two components to this usage: consumption and production. On the one hand, instruction in quantitative methods is important to aid students as critical consumers of quantitative research. On the other hand, as is evident in this round of assessment, the students produce papers that are qualitative in orientation. There are obvious overlaps between the two forms of methods (quantitative and qualitative) in the development of research questions, hypothesis formation, and the use and import of theory. It is my sense that my emphasis on the quantitative in class may implicitly lead students to believe that these critical areas of overlap are exclusive to quantitative research and perhaps not relevant to qualitative research.

To the extent that my colleagues who teach Data Analysis have this problem (to varying degrees), I think it is important that we insist on and demonstrate the importance of developing research questions, forming hypotheses, and using theory in qualitative research as well as in quantitative research.

Inter-coder Reliability: The inter-coder reliability must be increased. There are two potential remedies. The first is a minor modification to the existing instrument's coding structure. One faculty member suggested that we create middle categories between Exceeds Expectations, Meets Expectations, and Does Not Meet Expectations. For instance:

5 = Exceeds Expectations; 4; 3 = Meets Expectations; 2; 1 = Does Not Meet Expectations

Expanding the instrument in this way may reduce the number of Minor Disagreements and increase the number of Agreements. Paired reviewers may not feel compelled to make an "either-or" judgment and may settle for a "sort-of" category when scoring a dimension on a paper. The dilemma is that this may increase the number and magnitude of Major Disagreements.

The potential remedy for Major Disagreements is neither easy nor simple. One choice is to rework and further clarify the descriptions and instructions of each dimension in the Student Assessment. The transaction cost of getting agreement among the faculty on this issue is formidable. The other option is to provide training to the faculty reviewers regarding the use of the Student Assessment. This would be as time-consuming and difficult as the previous option.

My suggestion is twofold. First, adopt the coding structure modification for the Fall 2003 assessment. Second, analyze the Fall 2003 results and determine whether a significant problem remains with inter-coder reliability. If inter-coder reliability remains a problem, identify the substantive areas that are creating the greatest problem (most likely the Critical and Analytical Criteria) and address those problems at that time.

Attachments:
Table 1: Frequency Distribution of Scores and Average of Scores for Assessment Papers, Spring 2003
Table 2: Frequency Distribution of Agreements and Disagreements of Paired Assessment Reviewers, Spring 2003
Table 3: Frequency Distribution of Reviewer Agreements
Table 4: Student Assessment Spring 2003 Evaluator's Comments

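Table 1: Frequency Distribution of Scores and Average of Scores for Assessment Papers, Spring 2003

Dimension | Exceeds Expectations (3) | Meets Expectations (2) | Does Not Meet Expectations (1) | Average
Critical and Analytic Criteria
Thesis Component | [...] | [...] | [...] | 1.93
Hypothesis Component | [...] | [...] | [...] | 1.67
Evidence Component | [...] | [...] | [...] | 1.96
Conclusions Component | [...] | [...] | [...] | 1.53
Criteria Subtotal | 19 (15.8%) | 56 (46.7%) | 45 (37.5%) | 1.78
Research Criteria
Sources Component | 16 | 13 | 1 | 2.5
Citations Component | 10 | 15 | 5 | 2.17
Bibliography Component | 14 | 11 | 5 | 2.3
Criteria Subtotal | 40 (44.4%) | 39 (43.3%) | 11 (12.2%) | 2.32
Articulate and Communicate Criteria
Organization Component | [...] | [...] | [...] | [...]
Paragraphs Component | [...] | [...] | [...] | [...]
Sentence Structure Component | [...] | [...] | [...] | [...]
Diction Component | [...] | [...] | [...] | [...]
Grammar Component | [...] | [...] | [...] | [...]
Criteria Subtotal | 34 (22.7%) | 87 (58.0%) | 29 (19.3%) | 2.03
TOTALS | 93 (25.8%) | 182 (50.6%) | 85 (23.6%) | 2.02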

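Table 2: Frequency Distribution of Agreements and Disagreements of Paired Assessment Reviewers, Spring 2003

Dimension | Agreements | Minor High Disagreements | Minor Low Disagreements | Major Disagreements
Critical and Analytic Criteria
Thesis Component | 6 | 4 | 4 | 1
Hypothesis Component | 8 | 1 | 3 | 3
Evidence Component | 3 | 6 | 3 | 3
Conclusions Component | 5 | 0 | 7 | 3
Criteria Subtotal | 22 (36.7%) | 11 (18.3%) | 17 (28.3%) | 10 (16.7%)
Research Criteria
Sources Component | 8 | 6 | 1 | 0
Citations Component | 7 | 5 | 2 | 1
Bibliography Component | 9 | 5 | 0 | 1
Criteria Subtotal | 24 (53.3%) | 16 (35.6%) | 3 (6.7%) | 2 (4.4%)
Articulate and Communicate Criteria
Organization Component | 5 | 5 | 5 | 0
Paragraphs Component | 4 | 6 | 5 | 0
Sentence Structure Component | 6 | 3 | 6 | 0
Diction Component | 5 | 6 | 4 | 0
Grammar Component | 6 | 4 | 5 | 0
Criteria Subtotal | 26 (34.6%) | 24 (32.0%) | 25 (33.3%) | 0
TOTAL | 72 (40.0%) | 51 (28.3%) | 45 (25.0%) | 12 (6.7%)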

Table 3: Frequency Distribution of Reviewer Agreements

Dimension | Exceeds Expectations | Meets Expectations | Does Not Meet Expectations
Thesis Component | [...] | [...] | [...]
Hypothesis Component | [...] | [...] | [...]
Evidence Component | [...] | [...] | [...]
Conclusions Component | [...] | [...] | [...]
Sources Component | 5 | 3 | 0
Citations Component | 2 | 4 | 1
Bibliography Component | 4 | 3 | 2
Organization Component | 0 | 4 | 1
Paragraphs Component | 0 | 4 | 0
Sentence Structure Component | [...] | [...] | [...]
Diction Component | [...] | [...] | [...]
Grammar Component | [...] | [...] | [...]
TOTAL | 16 (22.2%) | 42 (58.3%) | 14 (19.4%)


Memorandum

To: PSC Faculty
From: David R. Elkins, Associate Professor and Chair, Political Science Department
Date: February 11, 2004
Re: Student Assessment Results, Fall 2003

Overview

This report summarizes the Department's second student assessment. In brief, the four faculty members who reviewed the twelve randomly selected senior seminar papers found that two-thirds (66.6%) either met or exceeded departmentally established expectations. As anticipated, altering the assessment instrument appears to have improved inter-coder reliability. The paired faculty reviewers had a moderate level of agreement among their assessments (r = [...]), which represents an improvement over the Spring 2003 assessment results (r = [...]).

Student Assessment Process

The student assessment process required the two faculty members teaching senior seminars in the Fall 2003 semester to submit copies of all senior seminar term papers to the student assessment coordinator. The senior seminar papers were ungraded, unmarked, and anonymous versions of the papers submitted to the instructor of record for a grade. A total of twenty-nine papers from the two Fall 2003 senior seminars (PSC 420 American Politics and PSC 422 International Relations) were submitted to the student assessment coordinator for review.

Twelve of the twenty-nine papers were randomly selected for review (41.3%). Four faculty members reviewed the papers. The faculty member reviewers were paired, and the pairings were identical to those used in the Spring 2003 student assessment. Each paired reviewer received six randomly assigned papers, three from PSC 420 and three from PSC 422. On December 17, 2003, each reviewer received a student assessment packet that included six seminar papers, six Senior Seminar Student Assessment forms, and one Senior Seminar Student Assessment Criteria Explanation form. The last set of reviews was submitted on February 11, 2004.

Findings

This report addresses two issues. It describes the changes adopted since the Spring 2003 assessment and the outcome of those changes, and it describes the results from this iteration of student assessment.

Assessment Modifications: The last round of student assessment identified a problem with inter-coder reliability. The Spring 2003 inter-coder reliability was very low (r = [...]), with only about 40% of the paired reviewers agreeing on a dimensional score. The Department decided to modify the measurement instrument itself. Figure 1 illustrates the changes in the measurement instrument. The strategy was to expand the number of possible categories from three to five, on the presumption that doing so would minimize the number of minor disagreements and increase inter-coder reliability.


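Figure 1: Illustration of Spring 2003 and Fall 2003 Student Assessment Instruments

Spring 2003: 3 = Exceeds Expectations; 2 = Meets Expectations; 1 = Does Not Meet Expectations
Fall 2003: 5 = Exceeds Expectations; 4; 3 = Meets Expectations; 2; 1 = Does Not Meet Expectations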

A number of variables differed between the spring and fall student assessments (six reviewers in the spring and four reviewers in the fall, [...] papers in the spring and twelve papers in the fall, and different seminars with different instructors), but the pairings of the four reviewers remained the same from spring to fall. Though whether this holds remains to be seen in future iterations of the student assessment process, inter-coder reliability increased substantially (r = [...]) from Spring 2003 to Fall 2003. However, the improvement is a result of creating more categories rather than of changes in the responses within those categories. Table 2 illustrates the percent of agreements and disagreements in the Spring 2003 and Fall 2003 student assessments.¹ There were proportionately fewer agreements in the fall assessment than in the spring assessment, and the percent of minor disagreements declined marginally. However, the percent of major disagreements doubled. A major disagreement is defined as a difference of two or more points between paired reviewers' scores. Because the number of categories in the measurement instrument increased by two-thirds from spring to fall, it is perhaps no surprise that the proportion of major disagreements increased. Still, the change in the instrument was positive, increasing inter-coder reliability as intended.

¹ A complete display of the agreement and disagreement diagnostics for the Fall 2003 assessment is attached to this document (Tables 6 and 7).

Table 2: Percent of Agreements and Disagreements between Paired Student Assessment Reviewers, Spring 2003 and Fall 2003

Category | Spring 2003 | Fall 2003
Agreements | 40.0% | 38.2%
Minor High Disagreements | 28.3% | 24.3%
Minor Low Disagreements | 25.0% | 23.6%
Major Disagreements | 6.7% | 13.9%

Student Assessment: Table 3 (see attached) illustrates the results of the Fall 2003 student assessment. The reviewers found that two-thirds (66.6%) of the senior seminar papers met or exceeded departmental expectations. Indeed, with an overall mean score of 2.94 and a median of 3, the Department can be reasonably comfortable that students who submitted senior seminar papers are meeting its expectations. However, there remains variation across the dimensional components.

The reviewers were most satisfied with the Research Criteria and the Articulate and Communicate Criteria. In general, the reviewers found that four out of five (79.1%) seminar papers met or exceeded Research Criteria expectations and that three-quarters (75%) of the seminar papers met or exceeded Articulate and Communicate Criteria expectations. Individual dimensions within these two criteria varied, but even there the results were likely to meet or exceed expectations. However, this result does not hold for the Critical and Analytical Criteria. The majority (51.3%) of the Fall 2003 senior seminar papers did not meet the Critical and Analytical Criteria expectations. This represents a substantial increase from the previous student assessment and is likely explained by the changed metric of the instrument. For instance, if two of the spring papers had been found not to meet expectations, the results would have been similar to this fall's results. These results are disappointing, and consistently so across all dimensions of this criterion.

In light of the recent report on data analysis and its association with seminar grades, I examined the rosters and transcripts of the Fall 2003 Semester's senior seminars. Table 4 depicts the frequency of students who completed the seminars and shows that just under half had not taken data analysis; moreover, three of those counted as having taken data analysis took it during the Fall 2003 Semester. Clearly, the probability of a reviewer reading a paper written by a student who had not taken data analysis was very high. Still, this does not necessarily mean that the missing data analysis class was the contributing factor. However, given that there is a clear difference by seminar type in the number of students having taken (or taking) the data analysis class, one way to examine whether completing the data analysis course has an impact is to look at the student assessment results across the three criteria by senior seminar type.

Table 4: Data Analysis Completion by Senior Seminar, Fall 2003

Data Analysis | PSC 420 | PSC 422 | Total
Yes | 12 | 3 | 15
No | 3 | 11 | 14

Each paired reviewer assessed six papers, three from PSC 420 and three from PSC 422. Though the anonymous papers were randomly selected and assigned for assessment, I kept a record of the paper assignments by reviewer and by senior seminar. If data analysis has a role to play here, I would expect two outcomes. First, I would expect reviewers to be much more likely to rate papers from PSC 420 than papers from PSC 422 as either meeting or exceeding expectations on the Critical and Analytical Criteria. Second, if the difference were truly meaningful, I would expect very little difference between the seminars' ratings on the other two criteria (Research Criteria and Articulate and Communicate Criteria). I would expect these two outcomes because more students in PSC 420 than in PSC 422 took data analysis, and I would expect the review outcomes for the other two criteria to be reasonably close because there is no systematic evidence to suggest that the quality of the students, the seminars' substance, or the instructors' demands and instructions differ in important ways.

Table 5: Meets or Exceeds Expectations (3-5 Rating)

Criteria | PSC 420 | PSC 422
Critical and Analytical Criteria | 58.3% | 35.4%
Research Criteria and Articulate and Communicate Criteria | 76.0% | 76.0%

Note: There were a total of 48 possible observations for the Critical and Analytical Criteria and a total of 96 possible observations for the Research Criteria and the Articulate and Communicate Criteria.

Table 5 illustrates the proportion of reviews indicating that senior seminar papers either met or exceeded expectations, by criteria and seminar type. Nearly three-fifths (58.3%) of the reviews of seminar papers from PSC 420, where virtually all (80%) of the students had taken or were taking data analysis, indicated that the papers met or exceeded expectations on the Critical and Analytical Criteria. By contrast, just over a third (35.4%) of the reviews of papers from PSC 422, where only a small minority (21%) of the students had taken data analysis, met or exceeded expectations. On its own, the first finding is interesting, but coupled with the findings for the other two criteria, the evidence becomes striking: on the Research Criteria and the Articulate and Communicate Criteria, there was no difference at all between the two seminars in the share of reviews that met or exceeded expectations (76.0% each). What appears to separate the quality of the papers from these two seminars is the demonstration of critical and analytical thinking. And, though it is far from conclusive, the available evidence suggests that the data analysis course may be an important variable in improving this quality.
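The percentages in Table 5 are shares of individual reviewer ratings at 3 or above on the five-point instrument. A minimal sketch of that calculation follows. The function name is hypothetical, and the rating lists are constructed only to reproduce the Critical and Analytical figures (28 and 17 qualifying ratings out of 48 observations, the counts implied by 58.3% and 35.4%); they are not the actual review data.

```python
def meets_or_exceeds_share(ratings: list[int], threshold: int = 3) -> float:
    """Percentage of ratings at or above `threshold` (3 = Meets on the 5-point scale)."""
    if not ratings:
        raise ValueError("no ratings supplied")
    qualifying = sum(1 for r in ratings if r >= threshold)
    return 100.0 * qualifying / len(ratings)


# Illustrative rating lists: 48 observations per seminar for the Critical and
# Analytical Criteria (6 papers x 2 reviewers x 4 components)
psc420 = [3] * 28 + [2] * 20
psc422 = [3] * 17 + [2] * 31

print(f"PSC 420: {meets_or_exceeds_share(psc420):.1f}%")  # PSC 420: 58.3%
print(f"PSC 422: {meets_or_exceeds_share(psc422):.1f}%")  # PSC 422: 35.4%
```

Because each paper contributes several ratings, these are shares of reviews rather than of papers, which is how the memo phrases its Table 5 findings.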

Conclusion


The results of the Fall 2003 student assessment demonstrate that the alteration made to the assessment instrument had a positive effect on the problem of inter-coder reliability. The Department may wish to review this further in order to improve reliability more, but for now the modification seems to have been successful. In addition, the results indicate that, overall, the reviewers are satisfied that the majority of the papers meet or exceed departmentally established expectations.

The strength of the papers remains in the Research Criteria and the Articulate and Communicate Criteria. The evidence suggests that many of our students are meeting and exceeding our expectations. One question, however, should be asked: Are these standards too low? Should we raise the Department's expectations for these two criteria? Currently, I believe the answer should be no, but it is something to consider. The reason not to raise expectations now is the weaknesses revealed in this assessment.

The Critical and Analytical Criteria remain a problem. The outcome was disappointing in the Spring 2003 Semester student assessment, and it is even more apparent in this assessment (largely due to the change in the measurement instrument). The analysis presented here, coupled with an earlier report on seminar grades, suggests that some students who have not taken the data analysis course may be ill-prepared for the rigors of the seminar. Though not depicted in Table 4, the students in PSC 422 who had not taken the data analysis course were International Relations majors, and the three who had taken it were Political Science majors (the three students in PSC 420 who had not taken data analysis were Political Science majors). Though it will pose some distinct challenges for the International Relations major, the Department may wish to consider requiring majors to take some type of analysis course and requiring that it be taken prior to the seminar.

Table 3: Frequency Distribution of Scores and Average of Scores for Assessment Papers, Fall 2003

Dimension | Exceeds Expectations | Meets Expectations | Does Not Meet Expectations
Critical and Analytical Criteria
Thesis Component | [...] | [...] | [...]
Hypothesis Component | [...] | [...] | [...]
Evidence Component | [...] | [...] | [...]
Conclusions Component | [...] | [...] | [...]
Criteria Subtotal | 21.9% | 25.0% | 53.2%
Research Criteria
Sources Component | [...] | [...] | [...]
Citations Component | 6 | 13 | 5
Bibliography Component | [...] | [...] | [...]
Criteria Subtotal | 31.9% | 47.2% | 20.8%
Articulate and Communicate Criteria
Organization Component | 8 | 8 | 8
Paragraphs Component | 8 | 14 | 2
Sentence Structure Component | 7 | 13 | 4
Diction Component | 8 | 9 | 7
Grammar Component | 6 | 9 | 9
Criteria Subtotal | 37 (30.8%) | 53 (44.2%) | 30 (25.0%)
TOTALS | 28.1% | 38.5% | 33.3%

Overall average score: 2.94

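Table 6: Agreements and Disagreements of Paired Reviewers, Fall 2003

Dimension | Agreements (1) | Minor High Disagreements (2, 3) | Minor Low Disagreements (2, 4) | Major Disagreements (2)
Critical and Analytical Criteria
Thesis Component | 5 | [...] | [...] | [...]
Hypothesis Component | 1 | [...] | [...] | [...]
Evidence Component | 7 | [...] | [...] | [...]
Conclusions Component | 5 | [...] | [...] | [...]
Criteria Subtotal | 18 (37.5%) | 11 (22.9%) | 14 (29.2%) | 5 (10.4%)
Research Criteria
Sources Component | 5 | 3 | 1 | 3
Citations Component | 6 | 2 | 3 | 1
Bibliography Component | 5 | 1 | 4 | 2
Criteria Subtotal | 16 (44.4%) | 6 (16.7%) | 8 (22.2%) | 6 (16.7%)
Articulate and Communicate Criteria
Organization Component | 3 | 3 | 4 | 2
Paragraphs Component | 6 | 4 | 1 | 1
Sentence Structure Component | 6 | 2 | 2 | 2
Diction Component | 2 | 6 | 2 | 2
Grammar Component | 4 | 3 | 3 | 2
Criteria Subtotal | 21 (35.0%) | 18 (30.0%) | 12 (20.0%) | 9 (15.0%)
TOTAL | 55 (38.2%) | 35 (24.3%) | 34 (23.6%) | 20 (13.9%)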

1 = Agreement means that the paired reviewers agree on the paper's score for a discrete dimension.

2 = There are two types of disagreements: Minor and Major. A minor disagreement means that the paired reviewers differed by one point for a discrete dimension. A major disagreement means that the paired reviewers differed by two or more points.

3 = A "Minor High" Disagreement indicates that one reviewer scored the paper as at least Meeting Expectations and the other reviewer scored it as Exceeding Expectations.

4 = A "Minor Low" Disagreement indicates that one reviewer scored the paper as at most Meeting Expectations and the other reviewer scored it as Not Meeting Expectations.
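The agreement categories defined in these notes can be expressed as a small classification rule. The sketch below is an illustration, not the Department's scoring procedure: the function names are hypothetical, and extending "Minor High" and "Minor Low" to the five-point instrument is an assumption. Here a one-point split counts as Minor High when the lower score is still at least Meets Expectations (3), and as Minor Low otherwise, since the notes define those terms only by the Meets and Exceeds labels. Pass `meets=2` for the three-point Spring 2003 instrument.

```python
from collections import Counter


def classify(score_a: int, score_b: int, meets: int = 3) -> str:
    """Classify a pair of reviewer scores for one paper dimension.

    `meets` is the scale point labeled Meets Expectations: 3 on the five-point
    instrument, 2 on the three-point instrument.
    """
    gap = abs(score_a - score_b)
    if gap == 0:
        return "Agreement"
    if gap >= 2:
        return "Major Disagreement"
    # One-point split: "high" if both scores sit at or above Meets Expectations
    return "Minor High" if min(score_a, score_b) >= meets else "Minor Low"


def tally(pairs: list[tuple[int, int]], meets: int = 3) -> dict[str, float]:
    """Percentage of paired scores falling in each category."""
    if not pairs:
        return {}
    counts = Counter(classify(a, b, meets) for a, b in pairs)
    return {label: round(100.0 * n / len(pairs), 1) for label, n in counts.items()}


# Hypothetical paired scores on the five-point instrument
sample = [(3, 3), (4, 5), (2, 3), (5, 3), (1, 1)]
print(tally(sample))
# {'Agreement': 40.0, 'Minor High': 20.0, 'Minor Low': 20.0, 'Major Disagreement': 20.0}
```

On the three-point scale, a split between 3 and 1 is the only possible Major Disagreement, whereas on the five-point scale any two-point gap qualifies. This is one reason the share of major disagreements could rise after the instrument change.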

Table 7 : Frequency Distribution of Reviewer Agreements, Fall 2003

Dimension

Exceeds

Expectations

Meets

Does Not Meet

Expectations

Expectations

Criteria

3

2

I

5

4

Thesis Component

0

0

1

3

1

Hypothesis Component

0

0

1

0

0

Evidence Component

0

2

0

5

0

Conclusions Component

0

0

1

3

1

Sources Component

0

1

4

0

0

Citations Component

1

0

1

4

Bibliography Component

2

0

1

2

1

0

0

Organization Component

0

2

1

0

Paragraphs Component

0

1

5

0

0

Sentence Structure Component

0

2

4

0

0

Diction Component

0

0

1

1

0

Grammar Component

0

1

2

1

0

5.5%

16.4%

l

l

29.1

-

3.6%

Memorandum

To: Faculty Members, Political Science Department

From: David R. Elkins, Associate Professor and Interim Chair, Political Science Department

Date: August 23, 2004

Re: Student Assessment Results, Spring 2004

Overview

This report summarizes the Department’s spring 2004 student assessment. In brief, the four faculty members who reviewed the twelve randomly selected senior seminar papers found that nearly two-thirds (64.6%) either met or exceeded departmentally established expectations. The mean and median scores for this semester’s student assessment are 2.75 and 3, respectively. The paired faculty reviewers had a moderate level of agreement among their assessments (r = …), which represents a slight decline from fall 2003 (r = …) but remains a substantial improvement over the spring 2003 assessment results (r = …).

Student Assessment Process

The student assessment process required the two faculty members teaching senior seminars in the spring 2004 semester (PSC 420 American Politics and PSC 421 Comparative Politics) to submit copies of all senior seminar term papers to the student assessment coordinator. The senior seminar papers were ungraded, unmarked, and anonymous versions of the papers submitted to the instructors of record for a grade. A total of seventeen papers from the two spring 2004 senior seminars were submitted for student assessment review.

Twelve of the seventeen papers were randomly selected for review (70.6%). Four faculty members reviewed the papers, and the reviewers were paired. Each paired reviewer received six randomly assigned papers, two from PSC 420 and four from PSC 421, representing a proportional sample. On May 19, 2004, each reviewer received a student assessment packet that included six seminar papers, six Senior Seminar Student Assessment forms, and one Senior Seminar Student Assessment Criteria Explanation form. The last set of reviews was submitted on August 5, 2004.

Findings

This report addresses two issues: the diagnostics of the spring 2004 assessment and the results from this iteration of student assessment.

Diagnostics. Assessment diagnostics describe the levels of agreement and disagreement recorded in the spring 2004 assessment process. The inter-coder reliability was satisfactory (r = …), an improvement over the spring 2003 inter-coder reliability (r = …) and nearly identical to the fall 2003 result.

Table 1 illustrates the percentage of agreements and disagreements for the spring 2004 student assessment. The paired reviewers agreed on their individual assessments over two-fifths of the time (43.8%), had minor disagreements, again, a little over two-fifths (43.1%) of the time, and had major disagreements relatively infrequently (13.2%). A minor disagreement is defined as a one-point difference between the paired reviewers’ scores, and a major disagreement as a difference of two or more points.
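The three-way classification used above can be expressed as a short tally. The following is a minimal Python sketch, assuming each paper-dimension cell is recorded as two integer scores on the 1 to 5 scale; the function names and sample data are hypothetical and do not represent the Department’s actual worksheet.

```python
from collections import Counter

def agreement_type(score_a: int, score_b: int) -> str:
    """Classify one paired review of a single dimension (scores on a 1-5 scale)."""
    gap = abs(score_a - score_b)
    if gap == 0:
        return "Agreement"
    if gap == 1:
        return "Minor disagreement"
    return "Major disagreement"  # two or more points apart

def percent_by_type(pairs):
    """Return the percentage of paired reviews falling into each category."""
    counts = Counter(agreement_type(a, b) for a, b in pairs)
    total = len(pairs)
    return {label: round(100 * n / total, 1) for label, n in counts.items()}

# Hypothetical paired scores for four paper-dimension cells.
sample = [(3, 3), (4, 3), (2, 4), (3, 3)]
print(percent_by_type(sample))
# {'Agreement': 50.0, 'Minor disagreement': 25.0, 'Major disagreement': 25.0}
```

Applied to a full semester (for spring 2004, 12 papers by 12 dimensions, or 144 paired reviews), the three percentages should sum to roughly 100, subject to rounding.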

Table 2 compares the fall 2003 and spring 2004 levels of agreements and disagreements.

The fall 2003 assessment is important because it uses the updated instrument


I


the spring


I have included Table 1a (see attached) in this report; it shows the areas of agreement.

Dimension      Agreements      Minor High Disagreements      Minor Low Disagreements      Major Disagreements

Thesis Component

5

3

3

1

Hypothesis Component

6

2

3

2

Evidence Component

9

6

1

4

0

Conclusions Component

2

0

43.3%

11.7%

18.3%

2

8.3%

5

1

Criteria Subtotal

Research Criteria

4

2

Sources Component


Citations Component

3

0

6

3

Bibliography Component

4

4

30.6%

1

8.3%

41.7%

3

19.4%

Organization Component

4

2

2

3

Paragraphs Component

6

5

0

1

Sentence Structure Component

6

3

3

0

Diction Component

6

2

2

2

Grammar Component

4

6

22.0%

1

44.1%

1

22.0%

11.9%

43.8%

16.0%

27.1%

13.2%

Criteria Subtotal


Criteria Subtotal

TOTAL

1 = Agreement means that the paired reviewers agree on the paper’s score for a discrete dimension.

2 = There are two types of disagreements: Minor and Major. A minor disagreement means that the paired reviewers differed by one point for a discrete dimension. A major disagreement means that the paired reviewers differed by two or more points.

3 = A “Minor High” disagreement indicates that one reviewer scored a paper as at least Meeting Expectations and the other reviewer scored it as Exceeding Expectations.

4 = A “Minor Low” disagreement indicates that one reviewer scored a paper as at most Meeting Expectations and the other reviewer scored it as Not Meeting Expectations.

2003 student assessment, and it also had a sample of 12 senior seminar papers (only one pair of reviewers remained matched from the fall to the spring student assessment). The spring 2004 iteration of student assessment shows a marginal improvement in the proportion of agreements over fall 2003 (from 38.2% to 43.8%) and a decline in the proportion of minor high disagreements (from 24.3% to 16.0%). However, the results also show a slight rise in the proportion of minor low disagreements (from 23.6% to 27.1%). There is virtually no change in the proportion of major disagreements (13.9% versus 13.2%).

Table 2: Percent of Agreements and Disagreements between Paired Student Assessment Reviewers, Fall 2003 and Spring 2004

                                Fall 2003    Spring 2004
Agreements                      38.2%        43.8%
Minor High Disagreements        24.3%        16.0%
Minor Low Disagreements         23.6%        27.1%
Major Disagreements             13.9%        13.2%

Student Assessment: Table 3 illustrates the results of the spring 2004 student assessment. The reviewers, in an unpaired frequency analysis, found that nearly two-thirds (64.6%) of the senior seminar papers either met or exceeded departmental expectations. In a paired analysis using means, the papers received an overall mean score of 2.75, with a median of 3. Though this is lower than the fall 2003 student assessment’s overall mean of 2.95, the Department can be reasonably comfortable that students who submitted senior seminar papers in

Frequency of Scores

Dimension      Exceeds Expectations (5 or 4)      Meets Expectations (3)      Does Not Meet Expectations (2 or 1)

Thesis Component

4

14

6

2.83

Hypothesis Component

5

13

6

2.88

Evidence Component

2

3

19

2.04

19

52.1%

1.91

Conclusions Component

12.5%

35.4%

Sources Component

6

11

8

2.96

Citations Component

2

11

2.33

4

16.2%

10

43.2%

12

10

39.2%

Criteria Subtotal

Bibliography Component

Criteria Subtotal

Organization Component

Average

I

I

6

2.42

2.79

2.69

2.79

Paragraphs Component

6

17

3

3.21

Sentence Structure Component

6

15

1

2.92

6

6

25.2%

12

12

3

3.04

55.5%

6

6

19.3%

45.8%

(132)

35.4%

(102)

Diction Component

Grammar Component

Criteria Subtotal

TOTALS

3.06

2.75

1 = The frequency of scores columns represent the rankings that each faculty member gave to a paper.

In this portion of the analysis the scores are treated as discrete and not paired. That is to say, though each paper had two reviewers (paired reviewers), I recorded in these columns the score that each of the paired reviewers gave on the various dimensions. For example, two colleagues reviewed Assessment Paper #2. If the first colleague scored the Thesis Component as “Meets Expectations” and the second colleague scored it as “Does Not Meet Expectations,” those scores would be represented in two separate columns in the Frequency of Scores.

N = 288 {(12 Dimensions × 12 Papers) × 2 Reviewers}

2 = The arithmetic average was derived by establishing a mean for each dimension for each paper. I then created an average of these averages.

3 = One reviewer did not provide a score.

spring 2004 are meeting its minimum expectations. However, results still vary across the dimensional components.
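Note 2 to Table 3 describes the paired mean as an “average of averages”: a mean is taken over the two reviewers’ scores for each dimension of each paper, and those cell means are then averaged. A minimal Python sketch follows, assuming scores are stored in a dictionary keyed by paper and dimension; the data layout, names, and sample values are hypothetical. The median here is taken over the cell means, which is only an assumption about how the reported median may have been computed.

```python
from statistics import mean, median

# Hypothetical layout: paper -> dimension -> (reviewer A score, reviewer B score).
# None marks a missing score (note 3 to Table 3 reports that one reviewer omitted a score).
scores = {
    "paper_1": {"Thesis": (3, 4), "Evidence": (2, 2)},
    "paper_2": {"Thesis": (3, 3), "Evidence": (1, None)},
}

def cell_mean(pair):
    """Average whichever of the two reviewer scores are present."""
    present = [s for s in pair if s is not None]
    return mean(present)

def cell_means(data):
    """One mean per paper-dimension cell."""
    return [cell_mean(pair) for dims in data.values() for pair in dims.values()]

def average_of_averages(data):
    """Overall paired mean: the mean of all cell means."""
    return mean(cell_means(data))

def dimension_average(data, dimension):
    """Average of the per-paper cell means for one dimension (one table row)."""
    return mean(cell_mean(dims[dimension]) for dims in data.values())

print(average_of_averages(scores), median(cell_means(scores)))
```

When every cell has both scores, this equals the plain mean of all raw scores; the two differ only when a score is missing, because the single remaining score then carries the whole cell’s weight.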

The reviewers were most satisfied with the Articulate and Communicate Criteria. In general, the reviewers found that four out of five (80.7%) seminar papers met or exceeded this dimensional expectation. This finding is also consistent with the results of the fall 2003 student assessment. Individual dimensions within this criterion varied, but even there the papers were likely to meet or exceed expectations. However, this result does not hold for the other two criteria.

The reviewers found that nearly two-fifths of the seminar papers did not meet the minimum expectations for the Research Criteria. The chief weakness in this set of seminar papers concerns the quality of the citations: half of the assessed papers did not meet expectations for citations, and only two exceeded them. Still, this weakness was minor compared with the ongoing challenges student assessment has found regarding the Critical and Analytical Criteria.

The majority (52.1%) of the spring 2004 senior seminar papers did not meet the Critical and Analytical Criteria expectations. These results are disappointing; however, they are not consistent across all dimensions of this criterion. The reviewers were reasonably satisfied with the Thesis and Hypothesis components, with three-quarters of the assessments indicating that the papers either met or exceeded expectations. The major weakness the reviewers detected concerned the quality of the evidence students brought to bear on their thesis or hypothesis, and the conclusions they derived from that evidence. The departmental expectation for the Evidence component is that the “evidence is generally appropriate.” To meet the Conclusions component, the student “draws appropriate conclusions.” Nearly four-fifths of the time, individual reviewers found that the evidence or conclusions did not meet departmental expectations.

Table 4: Percentage of Exceeds, Meets, or Does Not Meet Expectations of Student Assessment Dimensions, Fall 2003 and Spring 2004

Exceeds

Meets

Does Not Meet

Research Criteria

The results of the spring 2004 student assessment resemble those of the fall 2003 student assessment in several respects. Table 4 compares this academic year’s two student assessments, fall 2003 and spring 2004. What stands out most clearly is that in the spring semester there were far fewer instances in which reviewers scored an individual dimension of a paper as exceeding expectations. This decline was consistent across all criteria but perhaps most notable in the Research Criteria, which also showed a substantial increase in the proportion of scores not meeting expectations.

The reviewers found that the majority of the seminar papers did not meet expectations on the Critical and Analytical Criteria, though there was a one-percentage-point improvement over fall 2003. What is not depicted here is that there was substantial improvement in two components of this criterion. In the fall 2003 assessment, reviewers found that half of the papers did not meet expectations for the Thesis component or the Hypothesis component; the spring 2004 assessment found that only a quarter did not meet expectations for these two components. This improvement is undermined by the fact that reviewers found the Evidence and Conclusions components lacking. I draw two conclusions from this. First, we have become more focused on teaching students how to construct a thesis and a hypothesis, but students’ ability to marshal evidence to test these hypotheses, or to draw appropriate conclusions about them, has not kept pace. Second, because students now have clearer theses and hypotheses, it is easier for reviewers to judge whether students are conducting appropriate empirical analyses and drawing appropriate conclusions.

Conclusion

The results of the spring 2004 student assessment demonstrate that the alterations made to the assessment instrument continue to have a positive effect on its inter-coder reliability. The Department may wish to review the instrument further to raise reliability more, but for now the modification appears to have been successful. The results also indicate that the reviewers are satisfied that the majority of the papers meet or exceed departmentally established expectations.

The strength of the spring 2004 papers lies in the Articulate and Communicate Criteria. The evidence suggests that many of our students are meeting or exceeding our expectations. One question, however, should be asked: Are these standards too low? Should we raise the Department’s expectations for these criteria? At present, I believe the answer is no, but it is something to consider.

The Critical and Analytical Criteria remain a problem. The outcome was disappointing in both of the previous student assessments, and it remains disappointing in this assessment. Though there were marked and important improvements in two components of this criterion (Thesis and Hypothesis), there were declines in the other two components (Evidence and Conclusions). Ironically, it may be that the improvements in the first two led to the apparent difficulties with the other two.

Memorandum

To: Faculty Members, Political Science Department

From: David R. Elkins, Associate Professor and Chair, Political Science Department

Date: February 18, 2005

Re: IR Major and Political Science Major Student Assessment Results, Fall 2004

Overview

This report summarizes the Department’s fall 2004 student assessment. In brief, the six faculty members who reviewed the nine randomly selected senior seminar papers found that half (50.0%) either met or exceeded departmentally established expectations. The mean and median scores for this semester’s student assessment are 2.58 and 2.5, respectively; a score of 3 would indicate meeting Departmental expectations. The paired faculty reviewers had a modest level of agreement among their assessments (r = …), which represents a decline from the two previous semesters (spring 2004, r = …; fall 2003, r = …) but remains an improvement over the spring 2003 assessment results (r = …).

Student Assessment Process

The Department’s student assessment process required the faculty member teaching the fall 2004 senior seminar (PSC 421 Comparative Politics) to submit copies of all senior seminar term papers to the student assessment coordinator. The senior seminar papers were ungraded, unmarked, and anonymous versions of the papers submitted to the instructor of record for a grade. A total of fifteen papers from the fall 2004 senior seminar were submitted for student assessment review.

Nine of the fifteen papers were randomly selected for review (60%). Six faculty members reviewed the papers, and the reviewers were paired. Each paired reviewer received three randomly assigned papers. On December 21, 2004, each reviewer received a student assessment packet that included three seminar papers, three Senior Seminar Student Assessment forms, and one Senior Seminar Student Assessment Criteria Explanation form. One written reminder was distributed on January 12, 2005, and an oral reminder was provided during the January 19, 2005 department meeting. The last set of reviews was submitted on January 26, 2005.
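The selection and assignment steps described above can be sketched as follows. This is a minimal Python illustration, assuming papers are numbered 1 to 15 and the three reviewer pairs are labeled “Pair A” through “Pair C”; these labels, the seed, and the function name are hypothetical, and the memorandum does not say how the random draw was actually performed.

```python
import random

def select_and_assign(n_submitted, n_selected, pairs, seed=None):
    """Draw n_selected of n_submitted papers at random and split them evenly across reviewer pairs."""
    if n_selected % len(pairs) != 0:
        raise ValueError("selected papers must divide evenly among the pairs")
    rng = random.Random(seed)
    drawn = rng.sample(range(1, n_submitted + 1), n_selected)  # without replacement
    per_pair = n_selected // len(pairs)
    return {
        pair: sorted(drawn[i * per_pair:(i + 1) * per_pair])
        for i, pair in enumerate(pairs)
    }

assignment = select_and_assign(15, 9, ["Pair A", "Pair B", "Pair C"], seed=2004)
for pair, papers in assignment.items():
    print(pair, papers)
```

Because the draw is without replacement, no paper can be assigned to two pairs, and nine of fifteen papers corresponds to the 60% sample reported above.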

The only change to the instrument between fall 2003 and fall 2004 was made at the suggestion of the Office of Assessment: one dimensional element, Diction, was eliminated from the Senior Seminar Student Assessment. This change is unlikely to have affected the results of the fall 2004 student assessment, either positively or negatively.

Findings

This report addresses two issues: the diagnostics of the fall 2004 assessment methods and the results from this iteration of student assessment.

Diagnostics. Assessment diagnostics describe the levels of agreement and disagreement recorded in the fall 2004 assessment process. The inter-coder reliability was disappointing (r = 1). This represents a decline from the two previous iterations of student assessment, fall 2003 (r = …) and spring 2004 (r = …). Table 1 illustrates the percentage of agreements and disagreements for the fall 2004 student assessment. The paired reviewers agreed on their individual assessments a little over a third of the time (38.4%).

1 I have included a table (Appendix Table 1) in the Appendix to this memorandum that depicts the areas of agreement.

Disagreements occur in this student assessment process, and I define assessment disagreement in several ways. First, there are major and minor disagreements. A minor disagreement is one in which a reviewer’s score on a dimensional component differs by one point from that of his or her reviewing pair. For example, if one reviewer scores a dimension a 3 while his or her pair scores it a 2, this is a minor disagreement. A major disagreement is one in which the split between the paired reviewers is greater than one point. For instance, one reviewer scores a dimensional component a 3 while his or her pair scores the same component a 5. In addition, I divide disagreements into high and low categories. A high category disagreement is one in which at least one reviewer indicated that a dimensional component exceeded expectations. By contrast, a low category disagreement indicates that at least one paired reviewer found that a dimensional component did not meet expectations. Consequently, the first example above, in which one reviewer scored a dimensional component a 3 while his or her counterpart gave it a 2, would be defined as a Minor Low Disagreement. The second example, in which one reviewer found that a dimensional component met expectations (a score of 3) and his or her pair scored it a 5, would be defined as a Major High Disagreement.

Finally, there are two additional classes of disagreement that pose particularly difficult problems for inter-coder reliability, and I treated them separately even though they also meet the criteria above. The first is the 2-4 Split. A 2-4 Split disagreement is, by definition, a major disagreement. However, in a 2-4 Split the reviewers disagree on whether a dimensional component exceeded expectations (a score of 4) or did not meet expectations (a score of 2). The other category is even more problematic. In this category, which I call Fundamental Split Disagreements, the scores differ by three or more points, indicating that a fundamental disagreement exists between the paired reviewers about a dimensional component. The prime example of a fundamental split is one reviewer scoring a component a 5 while his or her pair scores it a 1.
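The classification rules above can be written as a single function. The sketch below is a minimal Python illustration, assuming integer scores from 1 to 5 where 4 and 5 exceed expectations, 3 meets them, and 1 and 2 do not; the function and label names are hypothetical. It tests the two split categories before the minor/major and high/low rules, since a 2-4 Split or Fundamental Split also satisfies the major-disagreement criterion.

```python
from collections import Counter

def classify(score_a: int, score_b: int) -> str:
    """Classify one paired review using the disagreement categories defined above."""
    for s in (score_a, score_b):
        if not 1 <= s <= 5:
            raise ValueError(f"score out of range: {s}")
    low, high = sorted((score_a, score_b))
    gap = high - low
    if gap == 0:
        return "Agreement"
    if gap >= 3:
        return "Fundamental Split"  # e.g. 5 versus 1
    if (low, high) == (2, 4):
        return "2-4 Split"          # exceeds versus does not meet
    size = "Minor" if gap == 1 else "Major"
    # High: at least one reviewer scored 4 or 5; otherwise at least one scored 1 or 2.
    side = "High" if high >= 4 else "Low"
    return f"{size} {side}"

def distribution(pairs):
    """Percentage of paired reviews in each category."""
    counts = Counter(classify(a, b) for a, b in pairs)
    return {label: round(100 * n / len(pairs), 1) for label, n in counts.items()}

print(classify(3, 2), classify(3, 5), classify(2, 4), classify(5, 1))
# Minor Low Major High 2-4 Split Fundamental Split
```

The two worked examples in the text, a 3 paired with a 2 and a 3 paired with a 5, come out as Minor Low and Major High respectively.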

For the fall 2004 student assessment, over a third of the paired reviews (35.3%) were minor disagreements, both high and low. This is marginally good news: together with the agreements, it means that nearly three-quarters (73.7%) of the time the paired reviewers either agreed or disagreed by only one point on discrete dimensional components. In addition, High Major and Low Major disagreements were relatively infrequent (12.1%). Though similarly infrequent, the 2-4 Split and Fundamental Split disagreements pose problems. The 2-4 Split Disagreement poses more of a substantive problem than a statistical one for the assessment, while the Fundamental Split Disagreement poses both. Looking more closely, I found that ten of the fourteen 2-4 Split and Fundamental Split Disagreements were associated with one pair of reviewers, and that this pair differed substantially on a single paper, recording three 2-4 Split Disagreements and three Fundamental Disagreements on it.*

After re-checking my coding of the data, I looked more closely at the comments provided by the reviewers. One reviewer wrote in the Critical and Analytical Criteria section, “There is no thesis,” and on the Evidence component line wrote, “of what? This is a description of the Japanese economy and a literature review.” This reviewer’s scores in the component area are … and …. By contrast, this reviewer’s pair wrote, “This seems a little too good. I’m skeptical that this is original work,” and scored the paper with four … and one 4. Clearly the reviewers assessed the paper differently, and the paper itself was problematic for both, though for different reasons.

* Both faculty members in this pair have been involved in every assessment since spring 2003.

Table 2 shows the levels of agreement and disagreement over the past three iterations of student assessment (spring 2003 is excluded because of a change in the measurement instrument). The comparative data reveal that the proportion of agreements has remained relatively stable at around 40%. However, the major and split forms of disagreement have grown over time. In this last round of assessment, these forms of disagreement accounted for over a quarter (25.2%) of the reviews.

Table 2: Percent of Agreements and Disagreements between Paired Student Assessment Reviewers, Fall 2003, Spring 2004, and Fall 2004

                                  Fall 2003    Spring 2004    Fall 2004
Agreements                        38.2%        43.8%          38.4%
Minor High Disagreements          24.3%        16.0%          13.1%
Minor Low Disagreements           23.6%        27.1%          22.2%
Major Disagreements                9.0%        10.4%          12.1%
2-4 Split Disagreement             4.2%         2.7%           9.1%
Fundamental Split Disagreement     …            0%             4.0%

report. The data had not been presented with the 2-4 Split category and the Fundamental Split

Because the Department’s assessment depends on a satisfactory level of inter-coder reliability, this issue is of concern. I conducted a test to determine how badly the Fundamental Split Disagreements affected inter-coder reliability. I removed all dimensional scores defined as fundamental splits (I left in the 2-4 Split assessments), and the correlation was r = …. This is an obvious improvement over the r = 1 of the total sample, and it corresponds well with the fall 2003 and spring 2004 levels of inter-coder reliability. The Department will need to develop a strategy for improving its inter-coder reliability.
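The sensitivity test described above (dropping Fundamental Split pairs while keeping 2-4 Splits, then recomputing the correlation) can be sketched in Python. This assumes the reported r is a Pearson correlation between the two reviewers’ scores across all paper-dimension cells, which the memorandum does not state explicitly; the function names are hypothetical and the sample pairs are invented for illustration, not the fall 2004 data.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length score lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    # Undefined (division by zero) if either reviewer gave the same score throughout.
    return cov / sqrt(var_x * var_y)

def reliability(pairs):
    """Correlate the first reviewer's scores with the second reviewer's scores."""
    first, second = zip(*pairs)
    return pearson_r(first, second)

def without_fundamental_splits(pairs):
    """Drop pairs three or more points apart; 2-4 Splits (two points apart) are kept."""
    return [(a, b) for a, b in pairs if abs(a - b) < 3]

# Hypothetical paired scores, not the Department's data.
pairs = [(3, 3), (4, 4), (2, 2), (3, 2), (5, 1), (2, 3)]
print(round(reliability(pairs), 2), round(reliability(without_fundamental_splits(pairs)), 2))
```

In this toy sample a single 5-versus-1 pair is enough to turn a clearly positive correlation negative, which illustrates why a few fundamental splits can depress r for a whole semester.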

Student Assessment: Table 3 illustrates the results of the fall 2004 student assessment. The reviewers, in an unpaired frequency analysis, found that half (50.0%) of the senior seminar papers either met or exceeded departmental expectations. In a paired analysis using means, the papers’ mean score is 2.58 (s = …), with a median of 2.5. Though there is variation (some of it positive) across dimensional components, the results are disappointing.
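The unpaired frequency analysis behind Table 3 treats each reviewer’s score as a separate observation, so the fall 2004 base is N = 11 dimensions × 9 papers × 2 reviewers = 198 scores. A minimal Python sketch of that bucketing follows, assuming the score bands printed in the table header (5 or 4 exceeds, 3 meets, 2 or 1 does not meet); the function names and sample pairs are hypothetical.

```python
from collections import Counter

def band(score: int) -> str:
    """Map a 1-5 score onto the three expectation bands used in Table 3."""
    if score >= 4:
        return "Exceeds"
    if score == 3:
        return "Meets"
    return "Does Not Meet"

def unpaired_distribution(pairs):
    """Split each reviewer pair into two independent scores and report band percentages."""
    scores = [s for pair in pairs for s in pair]
    counts = Counter(band(s) for s in scores)
    return {b: round(100 * counts[b] / len(scores), 1) for b in ("Exceeds", "Meets", "Does Not Meet")}

n_scores = 11 * 9 * 2  # dimensions x papers x reviewers
print(n_scores, unpaired_distribution([(3, 4), (2, 2), (3, 1)]))
```

The “met or exceeded” share reported in the text is the sum of the first two bands.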

As with previous student assessments of the Political Science and International Relations majors, the reviewers were most satisfied with the Research Criteria and the Articulate and Communicate Criteria. In general, the reviewers found that two out of three (66.6%) of the seminar papers met or exceeded the Research Criteria dimensional expectations, though problems remained with the quality of the citations students used. The reviewers also found that over half (55.5%) of the seminar papers either met or exceeded minimum expectations for the Articulate and Communicate Criteria. There was no single identifiable weakness in this set of seminar papers, although half of the assessed papers were found not to meet expectations in the Organization component. Still, this weakness was minor compared with the ongoing challenges student assessment has found regarding the Critical and Analytical Criteria.

A significant majority (68.1%) of the fall 2004 senior seminar papers did not meet the Critical and Analytical Criteria expectations, and no single dimension within this criterion had a majority of scores meeting or exceeding expectations. The reviewers were most dissatisfied with the Hypothesis component: over four out of five (83.3%) of the assessments indicated that the papers failed to meet this expectation. In addition, the reviewers were dissatisfied with the conclusions students drew in their papers, with nearly three-quarters (72.2%) of the papers

Frequency of Scores

Dimension      Exceeds Expectations (5 or 4)      Meets Expectations (3)      Does Not Meet Expectations (2 or 1)      Average

Critical and Analytical Criteria

Thesis Component

2

6

10

2.28

Hypothesis Component

1

2

15

1.78

Evidence Component

2

11

2.78

13

68.1%

1.89

I

Conclusions Component

Criteria Subtotal

1

8.3%

I

I

5

4

23.6%

I

2.06

Research Criteria

Sources Component

7

Citations Component

3

6

29.6%

Bibliography Component

Criteria Subtotal

8

5

7

37.0%

3

3.39

10

2.39

5

33.3%

3

2.93

Articulate and Communicate Criteria

Organization Component

Paragraphs Component

Sentence Structure Component


Grammar Component

Criteria Subtotal

TOTALS

2

7

9

2.61

6

6

5

26.3%

4

8

7

8

3

20.7%

5

5

29.2%

44.4%

50.0%

2.89

2.89

2.85

2.58

N = 198 {(11 Dimensions × 9 Papers) × 2 Reviewers}

1 = The frequency of scores columns represent the rankings that each faculty member gave to a paper.

In this portion of the analysis the scores are treated as discrete and not paired. That is to say, though each paper had two reviewers (paired reviewers), I recorded the score that each reviewer gave on the various dimensions. For example, two colleagues reviewed Assessment Paper #2. If the first colleague scored the Thesis Component as a 3 and the second colleague scored it a 4, those scores would be represented in two separate columns in the Frequency of Scores.

2 = The arithmetic average was derived by establishing a mean for each dimension for each paper. I

then created an average of these averages.

failing to meet expectations. Still, these are low spots in a struggling criterion. The results of this student assessment need to be explored further. To do so, I first examine how these results compare with the two previous assessments, and then how they relate to key findings of an earlier Departmental report, “Influence of the Data Analysis Course on Senior Seminar Grades.”

Figure 1 depicts the percentage of papers that either met or exceeded expectations on each of the three assessment criteria in the fall 2003, spring 2004, and fall 2004 semesters. As is to be expected, there

Figure 1: Percent of Criteria Exceeding and Meeting Expectations, Fall 2003, Spring 2004, Fall 2004 [chart not reproduced; vertical axis: percent, 0 to 80; horizontal axis: Critical and Analytical, Research, and Articulate and Communicate criteria, one series per semester]

3 David R. Elkins, “Influence of the Data Analysis Course on Senior Seminar Grades,” Report to the Department of Political Science, Cleveland State University, February 2, 2004.

4 Appendix Table 2 provides a more complete breakdown of the results.

are marked variations by semester. Each semester has different faculty members, a different set of students, different seminars, perhaps different topics within same-numbered seminars, and a different number of papers assessed through this process. However, there is also a pattern, one made more obvious by this round of student assessment. While the Department is somewhat, though not completely, satisfied with the Research and the Articulate and Communicate elements of assessment, it is not satisfied with performance on the Critical and Analytical criteria. In none of the three sessions have the faculty reviewers assessed a majority of the papers as either meeting or exceeding the Critical and Analytical criteria. The fall 2004 assessment is the low point in a series of disappointing results. One possible explanation for the fall 2004 semester’s disappointing results is found in the Departmental study “Influence of the Data Analysis Course on Senior Seminar Grades,” conducted last year.

This Departmental study examined a random selection of students (n = 169) who had completed a senior seminar between fall 1998 and spring 2003. It statistically linked success in senior seminars to two variables: the student’s GPA in the semester preceding the seminar and whether the student had taken PSC 251 Data Analysis. A further explanation, though neither supported nor refuted by the study’s central theme, suggested that one problem area was that International Relations (IR) majors are not required to take PSC 251 as part of their curriculum.

Table 4 compares the Departmental study data collected for all senior seminars, and for the subset of the two seminars required of IR majors (PSC 421 and PSC 422), with the students in the fall 2004 semester’s PSC 421 Comparative Politics senior seminar. First, the cumulative GPA of the students in the fall 2004 senior seminar (3.14) is greater than that of either the total or the subset of senior seminars, though it is within one standard deviation. To the extent that cumulative GPA is a measure of the quality of academic performance, the students in this seminar appear to have been above the norms of previous seminars. By contrast, the proportion of students in this seminar who had taken PSC 251 was 15.1 percentage points below the average for previous PSC 421 and 422 seminars and 26.6 percentage points below that of all previous seminars. In addition, the proportion of IR majors was 17.5 percentage points higher than the average for previous PSC 421 and 422 senior seminars, and 30.6 percentage points higher than that of all previous senior seminars. Another way of stating this is that if the fall 2004 PSC 421 Comparative Politics senior seminar had matched previous comparative or IR senior seminars, there would have been six students instead of only four in the class who had taken PSC 251. But could this make a difference in the outcomes of the fall 2004 student assessment?
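The “six instead of four” figure follows from applying each PSC 251 completion rate in Table 4 to the fifteen students in the fall 2004 seminar. The short Python check below reproduces that arithmetic and the percentage-point gaps quoted above; it only restates the memorandum’s figures, rounding to whole students, and the helper and variable names are hypothetical.

```python
def students_with_course(class_size: int, completion_rate: float) -> int:
    """Expected number of students who completed PSC 251, rounded to a whole student."""
    return round(class_size * completion_rate)

class_size = 15
actual = students_with_course(class_size, 0.267)      # fall 2004 PSC 421
if_typical = students_with_course(class_size, 0.418)  # rate in previous PSC 421 and 422 seminars

# Percentage-point gaps reported in the text, rounded to one decimal place.
gap_psc251_ir_seminars = round(41.8 - 26.7, 1)
gap_psc251_all_seminars = round(53.3 - 26.7, 1)
gap_ir_majors_ir_seminars = round(66.7 - 49.2, 1)
gap_ir_majors_all_seminars = round(66.7 - 36.1, 1)

print(actual, if_typical)
print(gap_psc251_ir_seminars, gap_psc251_all_seminars,
      gap_ir_majors_ir_seminars, gap_ir_majors_all_seminars)
```

The rounding inside round(…, 1) guards against floating-point residue (41.8 - 26.7 is not exactly 15.1 in binary arithmetic).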

Category                                             Percent Completing PSC 251    Percent IR Majors    Cumulative GPA
PSC 421 Comparative Politics, Fall 2004 (n = 15)     26.7%                         66.7%                3.14
PSC 421 and 422 (n = 122)                            41.8%                         49.2%                2.91
Total Senior Seminars (n = 169)                      53.3%                         36.1%                2.88

In the fall 2003 and spring 2004 student assessments, 46.9% and 47.9% of the papers, respectively, were found to either exceed or meet Departmental standards for the Critical and Analytical criterion. By contrast, only 31.9% of the papers in the fall 2004 semester met or exceeded expectations for this criterion. If one accepts that there is a link between completing PSC 251 and student outcomes on student assessment, then the following adjustment may be appropriate. Adding to this figure (31.9) the percentage-point difference between the share of students who had completed PSC 251 in previous PSC 421 and 422 seminars and the share in the fall 2004 seminar (15.1) yields a figure (47) that resembles previous, though unsatisfactory, findings for this criterion.
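Written out, the adjustment in the preceding paragraph is a single addition. The block below restates the memorandum’s own figures, with \Delta_{251} used here only as a label for the PSC 251 completion gap; it illustrates the arithmetic and is not a statistical estimate of the effect of PSC 251.

```latex
\begin{aligned}
\Delta_{251} &= 41.8 - 26.7 = 15.1 \text{ percentage points} \\
\text{adjusted fall 2004 share} &= 31.9 + \Delta_{251} = 47.0\% \\
&\approx 46.9\% \ (\text{fall 2003}), \quad 47.9\% \ (\text{spring 2004})
\end{aligned}
```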

Conclusion

The results of the fall 2004 student assessment demonstrate that the process of reviewing papers, though improved since spring 2003, still needs attention. The alterations made to the assessment instrument improved its inter-coder reliability and validity; however, they need to be followed by process improvements. Two suggestions are worthwhile for the reviewers:

1. Review the assessment guidelines before reviewing each paper. Though we might think we understand the Department’s standards, reviewing them before reading the assigned paper will likely improve the quality of the review.

2. Do not rush the review of a paper. Complete the review in a timely manner, but do not rush through it in order to meet the deadline.

The strength of the fall 2004 papers lies in the Research criteria. The evidence suggests that many of our students are either meeting or exceeding our expectations there. The Critical and Analytical Criteria remain a problem. The outcome was disappointing in the previous student assessments, and it remains disappointing in this assessment. Indeed, this assessment suggests more worrisome problems. The results of this student assessment should focus the Department’s attention on the lack of methodological training for International Relations majors. The evidence is mounting that the Department may have to either require International Relations majors to take PSC 251 or some other methods course, or faculty members who teach the two senior seminars associated with this major will have to provide deliberate instruction in proper research methods as part of their classes. Because of the already … International Relations curriculum, the Department may wish to ask its Curriculum Committee to investigate other possible alternatives.

Appendix

Appendix Table 1: Frequency Distribution of Reviewer Agreements, Fall 2004

Agreements

Exceeds   Meets   Does Not Meet

5   4   3   2

Thesis Component   0   0   0   1   1

Hypothesis Component   0   0   0   2   2

Evidence Component   0   0   1   0   0

Conclusions Component   0   0   1   0   3

Sources Component   1   0   3   0   0

Citations Component   0   0   1   2   2

Bibliography Component   0   1   2   0   1

Organization Component   0   0   2   3   0

Paragraphs Component   0   0   0   1   0

Sentence Structure Component   0   1   0   2   0

Diction Component   0   0   0

Grammar Component   1   0   1   3   0

TOTALS   2   2   11   14   9   0
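The row values and the TOTALS row of Appendix Table 1 survived extraction, but the column headings did not. The totals can therefore serve as a check on the row data. The following is a minimal Python sketch. It assumes the cells appear in the same column order in every row. It pads any missing cells with zero, including the three missing Diction cells and the sixth column that appears only in the TOTALS row; that padding is an assumption. The helper `column_totals` is hypothetical.

```python
# Rows of Appendix Table 1 as extracted, in column order. The column
# headings could not be matched to columns, so columns are unnamed here.
rows = {
    "Thesis": [0, 0, 0, 1, 1],
    "Hypothesis": [0, 0, 0, 2, 2],
    "Evidence": [0, 0, 1, 0, 0],
    "Conclusions": [0, 0, 1, 0, 3],
    "Sources": [1, 0, 3, 0, 0],
    "Citations": [0, 0, 1, 2, 2],
    "Bibliography": [0, 1, 2, 0, 1],
    "Organization": [0, 0, 2, 3, 0],
    "Paragraphs": [0, 0, 0, 1, 0],
    "Sentence Structure": [0, 1, 0, 2, 0],
    "Diction": [0, 0, 0],  # only three cells survived; the rest are padded with 0 (assumption)
    "Grammar": [1, 0, 1, 3, 0],
}

# TOTALS row as printed in the table (six columns).
reported_totals = [2, 2, 11, 14, 9, 0]


def column_totals(table: dict[str, list[int]], width: int) -> list[int]:
    """Sum each column, padding short rows with zeros up to `width` cells."""
    padded = [row + [0] * (width - len(row)) for row in table.values()]
    return [sum(column) for column in zip(*padded)]


computed = column_totals(rows, len(reported_totals))
print(computed)  # [2, 2, 11, 14, 9, 0]
print(computed == reported_totals)  # True
```

Every column sum matches the reported totals. This suggests the row values were extracted in the correct order, even though the headings cannot be assigned to columns with confidence.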


Influence of the Data Analysis Course on Senior Seminar Grades

Summary

David R. Elkins
Associate Professor
Interim Chair, Political Science Department
February 2, 2004

Over the last few years, a number of colleagues have expressed reservations about how well students are prepared for the intellectual challenges of senior seminars. The spring 2003 student assessment pinpointed, to an even greater degree, students’ analytic capability as one chief problem area. This raised questions about the data analysis course. This paper attempts to address some of those questions. Specifically, it addresses the following:

Does taking a data analysis course affect a seminar grade?

Does the data analysis course have a different effect on seminar grades by seminar type, by major, and by high and low student educational skill?

Does the timing of taking, and performance in, a data analysis class predict seminar grades?

Drawing on a sample of 169 of the 256 students (66.0%) who took one (or more) of the twenty senior seminars taught between fall 1998 and spring 2003, the analysis demonstrates:

Data Analysis Course’s Effect on Seminar Grades - Students who had either completed a data analysis course prior to their seminar semester or enrolled in and completed it during their seminar semester did statistically significantly better than students who did not. On average, a student who took data analysis improved her grade by roughly one-half letter grade.

Student Enrollment in Data Analysis Course - Most Political Science majors have taken PSC 251 Introduction to Data Analysis prior to enrolling in a senior seminar. However, the majority of International Relations majors have not, because their degree program does not require it.

Timing of Taking Data Analysis Course - In general, students take the data analysis course between ten and twelve months prior to taking a seminar, but this timing has no impact on seminar grades. Still, a substantial proportion of students do not take the course early enough. For instance, nearly one in five of the students who completed a seminar took the data analysis course during their seminar semester, and another fifteen percent took it the semester before their seminar semester. This poses a problem to the extent that the material taught in data analysis should bolster and deepen students’ understanding of the social science material taught in the Department’s baccalaureate-level courses. For roughly a third of students, this is not happening.

Performance in Data Analysis and Its Effect on Seminar Grades - The results of this analysis indicate that how a student did in the data analysis course has no bearing on how the student will do in a seminar.


Grades in Senior Seminars - Overall, students do satisfactorily in senior seminars. The average grade earned is equivalent to a B. To the extent that assessment is about measuring students’ ability to meet educational targets, the faculty members of this Department are indicating, through their grades, that students in political science undergraduate seminars are meeting departmental expectations.

Quality of Seminar Students - Students that complete senior seminars have cumulative GPAs of 2.92 (s =