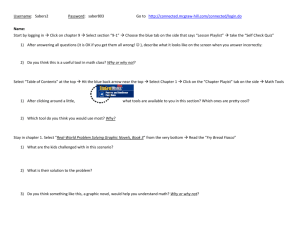

Document 11239530

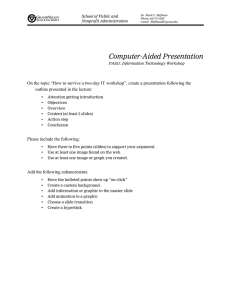
