IÌC /;( presented LINEAR

advertisement

AN ABSTRACT OF THE THESIS OF

JEROME JESS JACOBY

presented on

Mathematics

in

Title:

Master of Science

for the

/;(

IÌC

NUMERICAL INTEGRATION OF LINEAR INTEGRAL

EQUATIONS WITH WEAKLY DISCONTINUOUS KERNELS

Abstract approved:

(Joel Davis)

This thesis outlines a technique for accurately

approximating a linear Volterra integral operator

cs

(Kx)(s)

k(s,t)x(t)dt.

=

An approximation,

Kn,

to

J0

K,

the Volterra operator, is made by replacing the in-

tegral in

K

depend on

s.

by a quadrature rule on [0,1] whose weights

The special case of a modified Simpson's

rule is worked out in detail.

for the uniform convergence of

Error bounds are derived

Knx

to

Kx

when

x

is

a continuous function.

The technique is generalized to linear Fredholm in-

tegral operators that have kernels with finite jump dis-

continuities along a continuous curve.

ditions on the function

x

Under optimum con-

and the kernel the convergence

for Simpson's rule is improved from first to fourth order.

Numerical Integration of Linear

Integral Equations with Weakly

Discontinuous Kernels

by

Jerome Jess Jacoby

A THESIS

submitted to

Oregon State University

in partial fulfillment of

the requirements for the

degree of

Master of Science

June 1968

APPROVED:

Assistant Professor of Mathematics

In Charge of Major

Chairman of Department of Math matics

Dean of Graduate School

Date thesis is presented

Nky 2,

1968

Typed by Mrs. Charlene Williams for Jerome Jess Jacoby

ACKNOWLEDGEMENT

I

wish to express my thanks to Prof. Joel Davis for

his interest and encouragement during the preparation of

this thesis.

The initial idea was his, as were several

others that led to fruitfull results.

I

also express thanks to my wife, Sally, who has

earned her Ph. T. with honors.

This work was supported in part by the A. E. C.

under contract no. ÁT(45 -1) -1947.

TABLE OF CONTENTS

I.

Introduction

I.1

I.2

II.

Review of Numerical Integration of

Integral Equations

Theorems to be Used in the Thesis

The Special Integration Rule and the Volterra

Case

Generating a Smooth Kernel

The Quadrature Rule for Indefinite

Integrals

II.3 The Sum of the Absolute Values of the

Weights

II.4 The Remainder for the Special Integration

Rule

II.5 Applying the Formula

Interpolation Using the Special Rule

II.6

II.7 Convergence of the Approximation for

Smooth Solutions

II.8 Convergence of the Approximation for

Continuous Solutions

Convergence of the Solution

II.9

II.10 Examples

II.1

II.2

III.

IV.

1

1

3

9

9

14

16

19

24

30

32

35

37

39

Applications to Fredholm Equations

III.1 Fredholm Equations with Jump Discontinuities

III.2 An Example

Generalizations

III.3

45

Summary and Conclusions

50

Bibliography

52

Appendix A

53

Appendix B

55

45

47'

49

NUMERICAL INTEGRATION

OF LINEAR INTEGRAL EQUATIONS

WITH WEAKLY DISCONTINUOUS KERNELS

INTRODUCTION

I.

Review of Numerical Integration of Integral Equations

I.1

In this thesis we consider integral equations of the

Consider first a Fredholm equation of

second kind only.

the form

C1

x(s)

K(s,t)x(t)dt = f(s),

-

0

<

s

<

1,

(1-1)

0

where

and

K

are given real valued functions, the

f

is the

x

is the unit square, and

unknown

domain of

K

function.

One technique to find an approximation,

to

xn,

is to first approximate the integral with a finite

x

sum:

n

f(s)

-J¿1 wnjK(s,tnj )xn(tnj

= xn(s)

j

)

(1 -2)

,

=1

n

where

n3

j

the

wnj

and where

forms a partition of [0,1],

{tnj}

=1

are weights that depend on

tion formula used.

n

Note that for each

and the integras,

equation

(1 -2)

n

n

is an equation in the

unknowns

{xn(tnj)}

.

j

let

s

take on the

n

We

=1

values of the partition, genera-

2

.

Finally, to approximate

at the remaining points in

x(s)

(1 -2),

at the

xn(s)

ving the system of equations we obtain

partition points in [0,1]

By sol-

unknowns.

n

equations in

n

ting a system of

[0,1]

we use equation

,

which thus serves as an interpolation formula.

Consider next a Volterra integral equation of the

form

s

K(s,t)x(t)dt = f(s),

x(s) -

s

<

0

<

(1-3)

1,

S 0

and

f

are as in the Fredholm case, but now

the domain of

K

is the triangle

where

x

One technique to approximate

with

s

<

<

t

<

s

,

0

<

is to first replace

s

<

1.

K

Fredholm kernel which is identically zero for

a

t

x

0

<

1.

The resulting Fredholm equation would then be

solved as above.

The difficulty with treating Volterra equations as

though they are Fredholm equations is that the approximation corresponding to equation

good;

(1 -2)

is usually not very

that is, to guarantee a reasonably accurate answer

the number of partition points, and hence the size of the

algebraic system, usually must be very large.

The source

of the difficulty is the discontinuity of the new kernel

along the line

s

= t.

Disposal of this difficulty is

the motivation behind this thesis.

3

I.2

Theorems to be Used in the Thesis

In this section we develop the theorems that will be

used later, so as not to interrupt the continuity of the

The first three theorems parallel the

later discussion.

work of Milne (1949).

For any nonnegative integer, n, define

Definition.

a function

G

as follows:

n

t

tn,

Gn(t)

Note that for

G0(t)

=

n

>

0,

t

0,

>

0.

<

is continuous,

Gn

1,

is discontinuous at

and for

n = 0,

Two important pro-

t = O.

()

perties of

Theorem

vative of

are as follows:

Gn

G

For

1.

as defined above,

Gn(t)

is

n

(nGn_1(t), n

n(t)

>

1,

=

0,

n = 0,

except for the case when

n = 1

the anti -derivative of

Proof:

the deri-

Gn

is

with

n+

t = O.

n+1.

Differentiating formally, we have

d

d

ntn-1

=

n)

dt(tn)

,

t

n (t) =

át(0)

=

0

,

t

<

O.

>

0,

Further,

4

This formula obviously holds except when

and

n = 1

for then the difference quotient

t = 0,

h[Gn(0+h)

Gn(0)]

-

has two different limits according as

h -

0

or

h

0 +.

Hence, with this single exception

dn(t)

=

nGn-1(t)'

The second part of the theorem follows directly from

the proof of the first.

Theorem

If the

on

Let

2.

(n +l)th

be a function defined on

derivative of

then with

[a,b],

f

G

n

[a,b].

exists and is integrable

f

defined as above, and for

in

y

[a,b]

f(y)

= f(a) +

/

(yká)

kf (k) (a)

+

k=1

Proof:

n

bGn

!

Ja

(y-u) f

(n+l) (a) du.

By the fundamental theorem of integral cal-

culus,

f (y)

for

y

= f(a)

in

+

[a,b].

yf' (u) du = f(a)

a

+

y

Integration by parts

(y-u) Of' (u) du

f

a

n

times yields

5

f (y)

+

= f(a)

(yká) )

11

kf

)

(a)

( a )

(

k=1

J

J

From the definition of

V(17-ton

f

(n+1)

(yñu) n (n+l)

+

Gn(y -u)

a

(u)du.

we can write

b Gn(yu-)

(u)du =

n.

y

a

f

(n+l)

(u)du,

a

since the integrand is zero for

u

>

The desired re-

y.

sult follows immediately.

When approximating a definite integral by a finite

sum, one can define a remainder operator which acts on

functions:

n

pb

R(f)

=

af t) dt J

a

(

1wn7

f( tn

(1949), or Hildebrand

See, for example, Milne

(1956).

If

the integral is indefinite we do the analogous thing:

Let

Definition.

be in [a,b]

be defined on

f

If the integral from

.

[a,b]

to

a

s

and let

is

s

approx-

imated by a finite sum with weights that are functions of

then we define a remainder operator acting on

s,

s

by

n

rs

R(f,$)

-

`f (t)dt

a

w

-

j=1

(s) f

(tn

)

.

f

and

6

Note that if we construct the weights so that a poly-

if

such a polynomial, then

is

f

or less is integrated exactly, and

m

nomial of degree

R(f,$) =

0

.

Further,

since the integral and summation are linear operators,

R

will be a linear operator with respect to its first

argument.

Theorem

R(tk,$) =

for

0

M =

and let

Let

3.

R

be a remainder operator such that

0,

1,

k =

sup

f (m+l)

I

and

..., m,

If

.

.

I

f

s

(m+1)

in

(t)

[a,b],

is Riemann

a <t <b

integrable, then

R

(

f

,

s

=

)

mR

b

rI

Gm(t-u)f(m+l) (u)du,

J a

s

,

and further,

b

IR(f-s)

where

and

R

s

I

<

m!

R [ Gm

T

operates on

(

t-u

s]

du,

a

as a function of

Gm(t -u),

t,

only.

Proof:

By the previous theorem, if

s

is

in

[a,b],

then we have

f(t)

= f(a)

+

L

(tká)f (k)

k=1

Letting

R

operate on

f(t)

(a)

+

m!

we have

5:Gm(t_u)f(m±U

a

(u)du.

7

b

mR

R(f,$) =

Gm(t-u)f

(m+1)

(u)du,

s

,

a

since

R

linear in its first argument, and

is

m

polynomial of degree

nition of

=

of a

From the defi-

we have

R

R(f,$)

or less is zero.

R

m

Os rb

J Gm(t-u)f(m+l) (u)dudt

l

1

a

a

n

(s)

-

b

(m+1)

(u)du

aGm(tnj°u) f

.

7lwnj

From the theorem on iterated Riemann integrals (Fulks,

the order of integration may be interchanged, lead-

1961),

ing to

n.

R(f,$)

=

Sa(SaGm(t_l1t

a

a

m

b

=

S

m,

a

R[Gm(t-u), s]

wnj(s)Gm(tnj-u)

-

f(m+l) (u)du

j=1

f(m+l) (u)du,

and the final result follows directly.

Theorem

f

[Weierstrass Approximation Theorem]

4.

Let

be a real valued continuous function on a compact interThen, given any

val [a,b].

nomial

If (t)

p

-

E

(which may depend on

p (t)

I

<

s

for every

t

0,

>

s

in

),

there is a polysuch that

[a,b].

8

Proof:

See,

e.g.,

Goldberg (1964), page 261.

9

The Special Integration Rule and the Volterra

II.

II.1

Case

Generating a Smooth Kernel

Consider the Volterra integral equation of the sec-

ond kind:

s

x(s)

K(s,t)x(t)dt = f(s),

-

0

<

s

1,

<

0

with the kernel,

at least

r +

and the known function,

K,

f,

having

continuous derivatives on their respec-

1

tive (closed) domains.

A Volterra equation may be thought

of as a Fredholm equation with a kernel

K(s,t)

N(s,t) =

0, s

<

0

,

t

<

<

t

<

s,

1.

As mentioned in I.1, the discontinuity of

line

s

= t

along the

N

is the principal source of difficulty when

approximating the integral by a sum.

In order to eliminate the difficulty, we subtract the

discontinuity as follows:

's

f(s) = x(s)

-

{K(s,t) - K(s,$) + K(s,$)

J

}

x(t)dt

0

1

= x(s)

(s,t)x(t)dt -

N

S

0

0

K(s,$)

cs

J0

x(t)dt,

(2-1)

10

where

K(s,t) t)

NO (s

0,

t

<

s

is continuous across the line

ate a kernel,

N1(s,t)

{K(s,t)

-

0

t

-

s,

<

1

<

=

s

-<

t

We can also gener-

which has a continuous partial

,

derivative with respect to

f(s) = x(s)

K(s,$),

=

across the line

t

K(s,$) +

-

s =

t

:

x(t)dt

(s-t)K(1) (s,$)}

0

K(s,$) Ssx(t)dt + K(1) (s,$)

-

s(s-t)x(t)dt

0

0

= x(s)

1

-SN1(s,t)x(t)dt

-

K(Q) (s,$)

-

a

QK(s,t)

at

that

K(0) (s,$)

QK(R,)

(s,$)Js(s-t)x(t)dt,

Q=0

0

where

(-1n)

= K(s,$)

0

with the convention

,

t=s

and where

,

K(s,t) - K(s,$)

+

(s-t)K(1) (s,$)

,

t<

0 <

s,

N1 (s,t) =

0,

s

In general then,

<

t

1

<

,

subtracting

terms of Taylor's

a kernel with

series generates Nr(s,t),

partials with respect to

r

t

across the line

continuous

r

s

= t

:

11

1

f(s)

= x(s)

-

Nr(s,t)x(t)dt

S

0

-

(

Q=0

(

kQ

Q)

(s,$)

(2 -2)

Ss(s-t)Rx(t)dt,

0

where

(-1)IS-t) QK(Q)(s,$)

K(s,t)

0

,

<

t

s

= t.

<

s,

2=0

Nr (s,t) =

0, s

<

Clearly Nr(s,t)

If

x(t)

then we

also has

r

t

<

1.

is smooth across the line

continuous derivatives on [0,1]

can accurately approximate the first integral in

by a finite sum of the form

(2 -2)

(1 -2).

It is easy to

show that with the differentiability assumptions on

and

that

f

,

x

is forced to

have

r

K

continuous deriva-

tives.

We deal with the indefinite integrals in

section II.2.

(2 -2)

Presently, we consider the choice of

in

r --

the number of terms of Taylor's series subtracted from

K(s,t)

Integration rules under consideration here have

.

(p +1)th

error bounds which depend on a derivative,

of the integrand, assuming the

uous.

than

derivative is contin-

pth

Therefore, it makes sense to choose

p.

r

For example, with Simpson's rule

(Hildebrand, 1956).

Of course if

say,

K

and

no greater

p =

f

3

don't have

12

sufficiently many continuous derivatives, then we must

choose a smaller

r.

We elaborate on the above by applying a low "order"

rule repeatedly

n

For fixed

times.

continuity of the kernel

occurs in at most one of the

K

subintervals of application.

fixed

then

Let

If we apply Simpson's

s.

the jump dis-

s

cP(t)

rule

= K(s,t)x(t)

for

times on [0,1],

n

is discontinuous on at most one subinterval.

4

Consequently, the error will be bounded by

4

5

ñM0 + (n-1) (T)

where

M0

°C

=

M4

01I(t)

ñ

[MO +

and

1

(n-1)

M4

c[

(ñ)

T

0

M4]

I

,

qlv (t)

.

1

We

therefore have first order convergence for piecewise continuous functions

(Note:

which have only one discontinuity.

4

kth order convergence if the error

we would have

bound was divided by

same way, if

0

2k

when

n

was doubled.)

In the

is continuous, then we would have second

The extension is obvious,

order convergence.

for if

(I)"

is continuous then we will have fourth order convergence.

For Simpson's rule (which has fourth order convergence if the integrand is sufficiently differentiable),

a good choice for

this case,

r

in the general case is

N2(s,t)x(t)

r = 2.

In

will have a continuous second

derivative with respect to

t,

a piecewise continuous,

hence bounded, third derivative with respect to

t,

and

13

we will have fourth

order convergence.

Choosing

r =

as predicted in the earlier discussion, will not give

higher order convergence.

3,

14

The Quadrature Rule for Indefinite Integrals

II.2

We now develop

formula to approximate the integral

a

(s-t)x(t)dt.

0

We start with a quadrature rule to approximate the inte-

gral with

Nr

in

This rule will be called the

(2 -2).

"regular" rule as contrasted with the "special" rule to

Using the same abscissas as in the regular

be developed.

rule, we obtain a special rule with weights that are

functions of

s

and

It is necessary to use the

Z.

same abscissas in both rules so that the same

n

{xn(tn3)}

j

s

Thus, when

arise from both approximations.

,

unknowns,

n

=1

is allowed to take on the values of the partition,

in I.1, the system of equations will be

nonsingular, can be solved.

n

x

as

and, if

n

The theory for the special

Simpson's rule is developed below.

For fixed

k,

and for

0

<

-

s

<

-

2h,

where

h =

l

2n

we require

rs

2

(s-t)

J

0

where

R

quire that

x(t)dt =

w. (s,k)x(ih)

i=0 1

is defined by equation

(2 -3).

w0(2h,0) = w2(2h,O) = 3,

+ R(x,$)

,

(2 -3)

We naturally re-

and that

15

wl(2h,0) =

4h

that is, the special rule reduces to the

;

regular rule for

weights let

= 2h

s

and

1,

To find the three

= O.

and then let

R(x,$) = 0,

sively the functions

Q

t,

x(t)

be succes-

We arrive at a

and t2.

system of three equations whose solution is

w0

E

w0(s,Q) = CsQ+1[2s2-3sh(Q+3)+2h2(Q+2)(Q+3)],

wl

=

wl(s,Q) =

w2

=

w

where

2

(s

C =

tion of the

-

4CsQ+2[s

-

h(Q+3)].

= CsQ+2 [2s - h(Q+3)

[2h2(Q +1)(Q +2)(Q +3)] -1.

wi,

R(x,$)

]

,

(2-4a)

(2 -4b)

(2 -4c)

Note that by construc-

for a polynomial of degree two

or less will be identically zero.

16

The Sum of the Absolute Values of the Weights

II.3

V =

Let

+

Iw11

1w21

+

Iw31

This sum will be used

.

It is also important in that it provides a mea-

in 1I.8.

sure of the amplification of the errors in the ordinates

(Hildebrand, 1956).

From equations

for

s

in

(2 -4)

note that

and for all

[0,2h]

w0

R

>

0.

<

s

<

>

and

0

For

wl

0

>

we have

w2

three cases:

w2(s,Q)

>0,

R=

0,

<0,

Q=

0,

0

<

s

<

2h

(case 2),

>

1,

0

<

s

<

2h

(case 3).

<0,

2,

22

2h

(case 1),

le

Case

1:

Since

w2

>

V = w0 + wl + w2 =

0,

s

<

2h

by construction of the weights.

Case

2:

V =

Here

w2

s

-

(

<

0,

V = w0 + wl

so

-

w2

and

4s2 + 6sh + 12h2).

12h2

Taking the derivative of

V

with respect to

we have

s

2

V'

=

1 +

h

- s2,

h

which is a parabola that is concave downward.

V'(0) =

1

and

V'

zh)

(

increasing throughout

=

T'

[0, 2h]

we

conclude that

so that

Since

V

must be

17

V

Case

Again

3:

C =

-

w2

V = C[- 4sk +3+ 2h

where

V(2h= áh

<

(k +3)

so

sk +2+ 2h2 (k +2) (k +3) sk

V'

Since the product

C(k +3)s9'

where

2h2(k+l)(k+2).

is always non- negative, the

is determined by the sign of

V'

+1]

Taking the derivatives

= C(k +3)ska(s),

= - 4s2 + 2h(k+2)s +

a(s)

a(s).

And since

is a parabola that is concave downward with a(0)

a(s)

and

0,

2h.

[2h2(k +l)(k +2)(k +3)] -1.

as in case 2, we have

sign of

<

<

a(2h)

out [0,2h].

>

0,

we conclude that

V

<

V(2h) = g(k) (2h)

k+1

where

g(k)

for

k

>

1.

Result

_

k2 + 72, + 4

(k+1) (k+2) (k+3)

We have thus established

I.

0

is increasing through-

Thus,

V

>

The sum of the absolute values of the

weights of the special Simpson's rule is given by

18

V

<

g(9) (2h)

,Z+1

,

where

,

g(R)

k

-

0

_

2,2 + 7!Z + 4

(Q+l) (lZ+2) (Q+3)

Note that

for

2

>

0

Q

'

1.

>

is decreasing for

g(R)

the maximum of

g(R)

2

is one at

>

so that

0

R,

=

O.

Therefore, the sum of the absolute values of the weights

is bounded for all

Z

and for all

s

in

[0,2h].

19

II.4

The Remainder for the Special Integration Rule

From equation

(2 -3)

arbitrary function

x

we have the remainder of an

:

2

cs

R(x,$)

(s-t)

E

x(t)dt

50

where

<

0

s

<

2h

i=0

From theorem

.

(2-5)

wo (s,Q)x(ih),

-

1

2

of I.3,

+

2

x(t)

may be

(t-u)x"'

(u)du.

written

x(t)

=

x(0)

2h

2

tx'

+

2x" (0)

(0) +

G2

0

Applying the remainder function to this equation, we-have

2h

R(x,$)

= R

`

JO

2.

and applying theorem

G2(t-u)x"' (u)du,

s

we can interchange

3

,

and the

R

integral to get

M3

IR(x,$)I

<

2h IR[G2(t-u), s]Idu,

2

(2 -6)

0

M

where

=

sup

Os<llx

To determine

placed by

G2(t -u).

t1I

'I

R(G2,$)

With

apply

W = Ii

(2 -5)

wi

with

(s

R,)

we have

(s-t) kG2 (t-u)dt - W.

R(G2,$) =

'00

x(t)

re-

G2 (ih -u),

20

Integrating by parts

integrating

R(G

2

Gk

directly yields

+2

k1

_ -

,$)

0

u

<

2k(k-1)...(k-i) xk-iG.

2k1

Gn( -u)

Result II.

If

- G

(-u)

[G

k+3)T

2h,

<

i+3

(i+2)!

i =0

-

Since

(using theorem 1), and then

times

k

E

k+3

(s-u)

]

(-u)

-

W

.

so we have

0,

has a continuous second derivative

x

and a bounded third derivative, then

2h

M3

IR(x,$)I

--

<

2

where

M3 =

2

wi(s,k)G2(ih-u)Idu,

ILGk+3(s-u) -

sup

(2-7)

i=1

0

I

x "(

)

I

and

L = 2[(k +l) (k +2) (k +3)] -1.

0<s <2h

Analytic integration of

(2 -7)

for arbitrary

k

is

difficult due to the absolute value inside the integral.

The case for

eral

k,

k

=

0

will be worked out below.

For a gen-

note that the integrand is continuous and its

first derivative is piecewise continuous.

Therefore, we

are assured that the integral exists and can be accurately

approximated by the repeated midpoint rule, say.

A com-

puter program to estimate the remainder at selected points

in

[0,2h] was written and is presented in Appendix A.

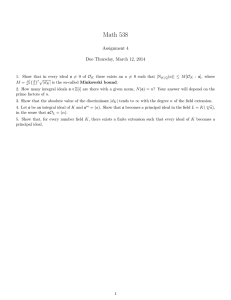

results for

in figure

1

k

= 0, 1,

2,

and

on the next page.

3

The

are presented graphically

21

in units of

IR1

M hQ+4

3

0.15

Q

max

0

0.04166... = 1/24

1

0.04444... = 2/45

2

0.08888... = 4/45

3

0.15238...

0.1

0.05

/

Q

= 1

R

= 0

2h

h

Figure

1

Sketch graph of the remainder for

Q

= 0, 1,

2,

3.

22

For

=

2,

we have from

0

(2 -7)

M3 ç2h

IR(x,$)I

<

2

13G3(s-u) - wl(s,0)G2(h-u)

J

0

This splits into two cases:

For

-w2(s,0) (2h-u)2,

-wl(s,0)(h -u)2

R(G2,$) =

-

u

-

w2(s,0)G2(2

0

<

s

<

)Idu.

we have

h

[h,2h]

e

w2(s,0)(2h -u)2,

u

e

[s,h]

ue[0,s].

3(s-u)3 -wl(s,0) (h-u)2-w2(s,0) (2h-u)2,

And for

h

<

s

<

we have

2h

-w2 (s,0) (2h-u) 2,

4(s_u)3

R(G2,$) =

3(s-u)

-

u

[s,2h]

e

w2 (s,0) (2h-u) 2,

3- wl

(s,0) (h-u)

2-- w2

u

[h,s]

E

(s,0) (2h-u)2, u e[O,h].

Thus in both cases we can split the integral into three

parts and deal with each part separately.

wl(s,0)

=

and noting the signs of

w2(s,0)

it can be shown

w2

Q

and

By writing out

(the algebra is tedious)

wl

and

that for

0

M3h4

I

for all

lated for

in

s

9,

=

[0,2h],

R(x)

<

I

24

which is what the program calcu-

O.

The fact that the integrand of

on

[0,2h]

'

(2 -7)

shows that there is a number

e2

is continuous

depending

23

on

k

and

suplxt(s)I

for

IR(x,$)I

s

c

<

e

(0,2h)

k

hQ+4

such that

;

that is, the bound on the remainder can be made independent

of

s.

This fact will be used in II.7 to prove a uniform

convergence theorem.

.

24

Applying the Formula

II.5

Let us reconsider the original problem:

cs

f(s)

= x(s)

-

J

K(s,t)x(t)dt,

0

(2-8)

1.

<

s

<

0

Recall that to approximate the function

we choose an

x

integration rule and a corresponding partition of [0,1],

convert

(2 -8)

to a Fredholm equation with a kernel

which is zero for

t >

and approximate the Fredholm

s,

integral with a finite sum.

an

n

x

This approximation leads to

system of equations whose solution determines

n

an approximation to

at the partition points.

x

Suppose we choose Simpson's rule repeated

so that the partition is

in

(2

x(0)

-8)

N(s,t)

that if

j

=

2n

}i =0'

=

0,

1,

then the integral is zero, so

0

2,

times

First note

h = 1/2n.

Consequently, we assume

= f(0).

for some

s

{ih

n

...,

n -1.

2jh

<

s

<

(2j +2)h,

The Fredholm problem can

then be written

2jh

f(s)

= x(s)

K(s,t)x(t)dt -

0

If

K

and

x

1

N(s,t)x(t)dt.(2-9)

S

2jh

have continuous third and bounded

fourth derivatives with respect to

t

on [0,2jh], then

we can approximate the first integral in

regular Simpson's rule

j

times:

(2 -9)

using the

25

2jh

hj-1

K(s,t)x(t)dt

1

3

0

{K(s,t2i)x2i + 4K(s,t2i+1)x2i+1

i=0

+ K(s,t2i+2)x2i+2},

xk = x(tk)

where

and

We use the regular rule

tk = kh.

because it has a higher order remainder than the special

rule and, hence, is likely to be more accurate.

Next, consider the second integral in

N(s,t)

=

for

0

c1

t

>

s,

N(s,t)x(t)dt

('

(2j+2)h

J

=

N(s,t)x(t)dt

.

2jh

takes on the partition points there will be two

s

cases:

N(s,t)

Since

we have

2jh

When

(2 -9).

s

=

=

(2j+l)h

on

K(s,t)

In the second case

(2j +2)h.

and

so the integral can

[2jh,(2j +2)h],

be approximated by the regular Simpson's rule applied once.

In the first case we use the results of II.1 as

follows:

('

(2j+2)h

(2j+2)h

K(s,t)x(t)dt =

J

2jh r

2jh

-

(-1)

!

i=0

where

s

=

Nr(s,t)x(t)dt

S

(2j+l)h.

!

k

K(Q)

2jh

To approximate this integral use the

regular rule on the integral with

rule on the integral with

for

t

>

s,

(s-t)Qx(t)dt,

s

(s,$)

and letting

(s

-t)

K2 +1

Nr

.

=

and the special

Recalling that

K()(s2j +l's2j +1)'

Nr

we

E

0

26

have

(2j+1)h

K(s,t)x(t)dt

5

-3Nr(s,t2j)x2j

°

2jh

2

L

Q=0

(

Q!

1

wi (h' Q) s2j+1

QK2j4 i=0

j.

Summarizing the above work, we have three cases:

(' S

s

= 0:

K(s,t)x(t)dt =

J

(2 -10a)

0,

0

s

=

(s

(2j+l)h:

K(s,t)x(t)dt

h

-

3

J 0

j-1

{K(s,t2i)x2i

i=0

+ 4K(s,t2i+1)x2i+1 + K(s,t2i+2)x21+2}

r

+

3Nr

(s't2

j

)

x2 j

(-1)

i=0

Q

2Q

+1

Q.

Q

2

X

L

wi

(h, Q)

(2

x2j+i'

-10b)

i=0

s

s

=

(2j+2)h:

SK(s,t)x(t)dt

3

0

i=0

{K(s,t2i)x2i

+ 4K(s,t2i+1)x2i+1 + K(s,t2i+2)x2i+2}.

Substituting approximations

generates a system of

2n +

1

(2 -10)

(2-10c)

into equation

equations in the

2n +

unknowns that will be of the form indicated in Figure

The *'s indicate generally nonzero entries.

(2 -8)

1

2.

The elements

27

inside the dashed lines are given in Figure

3.

The ele-

ments are shown with a general subscript, and as indicated,

the two -by -two system is repeated

r--I

*

0

0

0

0

0

0

0

xl

fl

0

0

0

0

x2

f2

*¡

0

0

x3

0

0

x4

f4

*

*

x5

f5

*

*

x6

f6

0

**

*

*

*

*

*

*

r*

*

*

*

*

*

*

*

*

*!

*!

*

*

*

*

*

;

Hence, the

f0

0

*

i

times.

n

0

0

;

f3

=

AX = F

Figure

A Schematic for the Array A for n =

2.

3.

system is reducible; that is, from the zeroth equation

x0 = f0.

Substitution of

into the first through

x0 = f0

(2n)th equation reduces the system to

equations one and two

xl

and

Cramer's rule; substitution for

three through

2n

From

can be found using

x2

xl

and

reduces the system to

Repeating this process on the pairs

(x2n- l,x2n)

(2n)x(2n).

x2

in equations

(2n- 2)x(2n -2).

(x3,x4),

(x5,x6)...,

will solve the system.

It is important to note that since a general formula

for the elements of the system can be written, it is unnec-

essary to store the whole array.

In fact, only the two -by-

two subsystems are stored as the elements are calculated.

A computer program using Simpson's rule is presented in

28

column 2j +2

column 2j +1

row

0)

0

0

2j)

0

0

2j+1)

1-

Q=0

(-1)

Q!

2j+3)

-TT K2j+3,2j+1

2j+4)

-

Key:

3

K2j+4,2j+1

3

_2h

4h

K2n,2j+1

3

= 1;

A1,0 = -3x1,0

Ku

= K(su,sv),

3.

K2j+3,

2j+2 + T2j+3

K2j+4,2j+2

K

K2n,2j+2

is a special case:

v

T

Figure

2h

_2h

4h

3

- 3

0

(h,Q)

K2j+2,2j+1

3

1

4h

2n)

(-1)Q K(Q) w

2j+2 2

Q!

h

-3 K2j+2,2j+1

A0,0

Q=0

4h

2j+2)

Column

_

K(Q) w (h,Q)

2j+1 1

u

=

rCC

L

Q,=O

=

Av,0

v,0

v = 2,3,...,n.

su = uh

(-1)

k

+ T1;

i

2

K(k) hQ+1

3

u

-

w0 (h, Q)

General Formulas for the Elements of the

Array A.

29

Appendix B.

When solving an actual problem, one needs the

following information:

and

1)

The known functions

2)

The partial derivatives of

to

f

and

K,

K

with respect

t.

These formulas are used as indicated-by-the-comment state-

ments in the program.

termines

h

The only- inputs are

and hence the error bound) and

ber of terms of Taylor's series to be used).

Some examples are given in II.10.

(which de-

n

r

(the num-

30

Interpolation Using the Special Rule

II.6

Further consideration of the approximations in the

previous section and the development-of-the special rule

in II.1 and II.2 indicates that an interpolation formula

may be easily obtained.

Suppose we have approximated the solution,

points of some partition of

an arbitrary

is desired.

x(s)

x(0)

= 0,

j

equation

If

s

Further, suppose

[0,1].

0

= 0,

<

s

<

2,

1,

...,

(n -1).

= f(s)

2jh

<

s

(2j +2)h

<

x(s):

(2j+2)h

K(s,t)x(t)dt +

+

for

As before, we arrive at

2jh

x(s)

that

and the number

1,

on page 24 but now we solve for

(2 -9)

at the

then we have immediately

Consequently, assume

= f(0).

some

given,

is

s

x,

0

S 2jh

N(s,t)x(t)dt.

(2-11)

The first of these integrals is approximated as before

using the regular Simpson's rule

integral we generate

tegral with

Nr

r

on the interval

(s -t)2'

a

j

times.

In the second

smooth kernel as in II.5.

The in-

is approximated with Simpson's rule once

[2jh,2jh +2h],

and the integral with

is approximated with the special rule.

approximation is as follows:

The final

31

JCl{K(s't2i)x2i

3

JK(s,t)x(t)dt

0

+ 4K(s,t2i+1)

o{K(s,t2i)x2i

i=0

+ K(s't2i+2)x2i+2}

+ 3Nr(s't2j)x2j +

3

2

+

G

(

-tl K(Q) (s,$)

i=0

i =0

wi(s- 2jh,Q)x2j +i

(2 -12)

2jh

Notice that for

<

s

<

(2j

+2)h

the approximation

involves the previous calculated values of

x(2jh +2h).

x2j +2 =

Hence,

the formula for

x

up through

x(s)

an extrapolation but an interpolation:

x(s)

-

f

(

s

)

+

[approximation (2-12)].

is not

32

II.7

Convergence of the Approximation for Smooth Functions

We now investigate convergence of the approximations

of the last two sections to their respective integrals as

n +

Our hope, to be established in I1.9, is that if

cc.

these approximations converge to their integrals then the

corresponding approximate solutions,

(in

n

some sense) to the correct solution,

Let us fix

for if

(0,1]

then

= 0,

s

x,

and choose an arbitrary

x

half -open interval

will converge

xn,

n

as

s

_.

-

in the

[There is no loss of generality,

.

x(0) = f(0)

by

In order

(2- 10a).]

to investigate convergence, we write

rs

E

E.

50

K(s,t)x(t)dt

Assume

= min {3,

[Approximation (2-12)].

is a non -negative integer and let

r

Assume

r +1 }.

with respect to

is well -known

-

and

t

has

K

x

(Hildebrand,

p + 1

has

p + 1

(2-13)

p

partial derivatives

derivatives.

It

1956) that for each application

of Simpson's rule, the error bound is

hp

+2MP +1

where

in this case

ap+l

Mp+l

If

p = 3,

=

p+1 OstPl

atp+1

for example,

three terms of

(2 -12)

to the integral with

of

Nr(s,t)x(t)

.

then

K(s,t)x(t)

C4 = 1/90.

In the first

we applied Simpson's rule

K(s,t)x(t)

j

times

and once to the integral

33

If

M

*

= C

p

p

a

N (s,

sup

r

0 <t<1 atp

t) x (t)

then the magnitude of the error from these three terms will

be bounded by

JMp+lhp+2 + Mp*hp+1

p+1

<

n

By the results of II.4, the error bound for approxi-

s

J

2jh

Apkhk +p +1,

to

will be less than or equal

(s- t)x(t)dt

mation of

Apk

where

=

Qp"k

0

sup (x(p)(t).

<t<1

for example are the numbers from equation

gested in figure

1.

_

=

Q Ap,kNkhk

[C +

2

r

P-1

¿

k=0

where

r

<

N

By the above work we have established

3.)

Result III.

If

0

<

s

<

1,

vatives are bounded on

are bounded for

t

and its first

x(t)

and if

0

<

p +

and its first

K(s,t)

derivatives with respect to

and

Q!Ap ,kNk hk] np +l

(Note that this requires

sup IK(k)(s,$)I.

<t<1

0<t

=

and sug-

(2 -7)

Therefore,

hp+1

np+l +

IEI

Q3,k

t

<

1,

where

r

partial

1

0

p + 1

<

t

<

s

deri-

is the high-

34

est order derivative subtracted from the kernel and

= min {3,

r +l },

then there is a constant

°,

p

depending

on the above bounds, such that the error in approximating

VoK(s,t)x(t)dt,

0

<

s

<

1,

o

with

n

tion on

applications of Simpson's rule and its modifica[0,1]

is bounded by

iEl

np+l

That is, the approxiamtion converges uniformly to the

integral as

n ±

00.

Note that by Result III there is no gain in taking

r

>

2

for the Simpson's rule case.

prediction in II.1 that

r = 2

This confirms the

is a good choice.

35

II.8

Convergence of the Approximation for Continuous

Functions

In the previous section strong assumptions were made

Uniform convergence of the approximation

on the solution.

operator to the integral operator can also be established

if the solution,

is only known to be continuous.

x,

The starting point is the Weierstrass approximation

theorem (see I.2 Theorem 4):

polynomial

in

s

such that

q(s)

lq(s)

e

there is a

0,

>

- x(s)1

<

e

for every

Knowing that such a polynomial exists, we

[0,1].

look to equation

in equation

given

(2 -12).

(2 -13)

-

Recalling the definition of

we subtract and add

q

wherever

E

x

Using the associative and distributive laws and

occurs.

the linearity of the integral and summand, we have,

for

example,

JsK(s,t)x(t)dt = sK(s,t)g(t)dt + JsK(s,t) [x(t) -q(t)]dt.

0

0

0

Next, we use the triangle inequality and the property of

integrals

tion

If

(t)dti

<

!J

(t)ldt

for any integrable func-

to write

4,

s

IEI

K(s,t)q(t)dt

<

-

[Approximation of K(s,t)q(t)]

0

+

+

[The remaining terms of

[(2 -12)

(2 -12)

with q(t)]

with lx(t) -q(t)1 wherever x occurs].

(2 -14)

36

Note that the first two terms of

K(s,t)q(t).

the error in approximating the integral of

the error of this approximation is less

So, by Result III,

than or equal to

for some

n-13-1,

%

q

q.

q

Finally, we use the assumption that

that

lx(t)-q(t)1

constitute

(2 -14)

<

depending on

1K(s,t)1

<

M0,

and that the sum of the weights

c,

(2h)2

of the special rule is less than or equal to

+1

to

assert that the sum of the absolute values of all the

weights will be bounded by

2

1 +

(2h)

2

+1

<

2, say,

2 =0

for

n

sufficiently large, and using this to conclude that

1E1

uniformly.

c

<-7Jc+i'jnp-l-0-/7c, as

q

Further, 97 is independent of

is arbitrary

1E1

-

0

as

n +

co.

00

c,

and since

37

Convergence to the Solution

II.9

In II.7 and II.8 we showed that the Simpson's rule

approximations converged to the integral as

lowing the lead of Anselone

K

and

K

n

n

-}

Fol-

co.

(1965), define the operators

as follows:

's

(Kx) (s)

E

\

JO

(Knx)(s) =

K(s,t)x(t)dt

[the approximation

(2 -12)].

Then our results have shown that

Knx

->

Kx uniformly as n +

00.

The integral equation and the approximate equation

have their corresponding operator equations:

Solving for

x

x - Kx = f,

(2-15a)

xn - Knxn = f.

(2 -15b)

in equation

(2 -15a)

and subtracting

(2 -15b)

from the result we have

x - xn - Knx + Knxn = Kx - Knx,

after subtracting

to

Knx

from both sides.

This equivalent

38

(I-Kn)(x-xn) = Kx

where

Knx,

-

If the operator

is the identity operator.

I

has an inverse, that is, if the determinant of the

(I -Kn)

matrix

A

represented in Figure

operate on the left by

(I -Kn)

x - xn =

then we can

is non -zero,

2

to get

-1

(I-Kn)-1 (Kx-Knx),

and taking norms we have that

(l

Now, if

H

x -xn

(

I

II

<

x-xn

(I -Kn)

II

Kx -Knx

uniformly as

<

I

-1

n

B

I

n +

<

II

II

-1II

-1

II

II

B

for some

0

as

-9-

II

(I-

I

Kx-Knx

and for all

B

n }

since

co,

.

I

Knx

--

n,

then

Kx

Thus we have

co.

IIx-xnll<B

;AT

n

for some

B

as defined in Result III.

Note that since

is a Volterra operator

K

(I -K)

exists and it can be shown (Anselone, 1965) that

a

II(I

-111

for all

This implies that

II(I

II

x

continuous on

Kn) -1II< B

n

as

[0,1]

for some

B.

II(I

-1

-Kn)

n +

00.

1II

39

Examples

II.10

section we present four examples that illus-

In this

trate the results predicted in section II.9.

presented in TABLES

through

1

The data are

The numbers are the max-

4.

imum of the errors listed on the computer print out, and it

the norm of the error,

is suggested that they "close to"

using the max norm.

The numbers in parentheses are the

factors- -the

convergence

error at one value of

n

is di-

vided by the convergence factor to obtain the error for

twice the value of

values of

and

0

r,

with

n.

*

indication that

The columns are for the various

indicating regular Simpson's rule

K(s,$)

only was subtracted.

Examples one and two are initial value problems:

1)

x' (s)

= x(s), x(0) = 1 => x(s)

2)

x' (s)

-

= es.

(3s2-4s+1)x(s) = 0, x(0) =

3

1

2

es -2s +s®

=>'x(s) =

The results speak largely for themselves --using the best

value for

predicted.

r

we obtain a convergence rate of

16

just as

Note also that the error with just two appli-

cations of the modified rule is many times better than the

error using 32 applications of the regular rule.

This sug-

gests a significant savings in computer time to obtain a

desired degree of accuracy by using the modification.

40

Worst Error at Grid Points

0

n

*

2

0.763

0.000492

(13.7)

(2.0)

4

0.374

8

0.188

0.0000360

(14.7)

(2.0)

0.00000244

(15.3)

(2.0)

0.000000159

0.0941

16

(15.9)

(2.0)

0.0000000101

0.0471

32

Worst error of grid points and 0.01, 0.02, ...,

0.99, 1.0

0

n

*

2

0.764

0.000497

(13.8)

(2.0)

4

0.375

8

0.188

0.0000361

(14.7)

(2.0)

0.00000244

(15.3)

(2.0)

16

0.0941

32

0.0471

0.000000159

(15.9)

(2.0)

0.0000000101

s

x' (s)

= x(s)

,

x(0)

= 0,

x(t)dt = 1.0

or x(s) 0

TABLE

1.

Example

1:

A listing of the worst error

at the grid points and at the points

j=

1,

2,

...,

100.

j(0.01), for

Worst Error at Grid Points

n

2

*

1

0

0.0442

0.0332

(<

4

0.0457

8

0.0336

32

0.00999

0.00148

(18.9)

0.00992

0.0000809

(16.0)

0.00258

0.00000506

(16.0)

0.00000472

(16.2)

(4.0)

0.000651

(1.9)

(19.5)

0.0000756

(3.8)

(1.8)

0.0190

0.00153

(3.3)

1)

(1.4)

16

2

0.000000312

(16.1)

0.000000292

(16.1)

(4.0)

0.000163

0.0000000194

(15.9)

0.0000000183

Worst of Grid and interpolation points

n

2

0

*

0.0735

1

0.0332

(1.3)

4

0.0557

8

0.0336

16

0.0190

32

0.00999

0.00403

0.00148

(13.7)

(3.3)

0.00992

(1.7

0.000394

(1.8)

0.00000472

(4.0)

(4.0)

Example

2:

0.000000292

(15.9)

(8.1)

0.000000658

x'(s)

(16.1)

(8.3)

0.00000536

0.000163

2.

(16.0)

(8.8)

0.0000447

0.000651

(1.9)

(19.5)

0.0000756

(3.8)

0.00258

TABLE

2

-

(3s2- 4s +1)x(s) = 0,

0.0000000183

x(0)

= 1 or

s

x(s)

(3t2-4t+1)x(t)dt = 1.

0

x(s)

= exp(s3-2s2+s).

Worst Error at Grid Points

n

*

2

0.211

0.113

8

0.0572

(2.0)

32

*

2

0.211

0.113

8

0.0572

16

0.0287

32

0.0144

TABLE

3.

(2.8)

0.000274

(13.6)

0.0000194

(15.1)

(9.5)

0.00000128

(15.7)

(8.9)

(3.7)

0.000000894

0.0000000816

(15.2)

(8.4)

(3.9)

0.0000743

2

0.00000795

0.000288

(2.0)

0.99, 1.00.

(5.3)

(3.4)

0.00107

(2.0)

...,

0.0000765

0.00369

Example

0.01, 0.02,

0.000404

0.0103

(2.0)

0.00000000536

1

0

4

(15.2)

(16.1)

0.00000000722

0.0000743

(1.9)

0.0000000816

0.000000116

(3.9)

Worst error of grid points and

n

(15.6)

(15.8)

(3.7)

0.0144

0.00000127

0.00000183

0.000288

(2.0)

(15.0)

(15.5)

(3.4)

0.0287

(13.7)

0.0000191

0.0000284

0.00107

(2.0)

0.000262

(14.2)

(2.8)

0.00369

4

2

0.000404

0.0103

(1.9)

16

1

0

0.00000000536

0.000000106

3:

s

x(s)

sin(s)cos(t)x(t)dt = sin(s) + (1-sin(s) )exp(sin(s)

S

0

)

.

x(s)

= exp [sin(s)

]

.

V

43

Example

cs

x(s)

-

3

is the problem

sin(s)cos(t)x(t)dt = sin(s) + (1-sin(s))e

sin(s)

,

50

and the solution is

The results are

= exp[sin(s)].

x(s)

very similiar to those of examples

1

and

An unexpected

2.

result is fourth order convergence on the grid points for

r = 1.

Notice that this occurred in example

2

originally expected the convergence factors to be

16

for

r =

*,

0,

1,

2,

4,

8,

No adequate expla-

respectively.

2

We

also.

nation has been presented as to why this phenomenon occurs.

Example

4

is the problem

s+ cos Tr(Trs2)

s

x(s)

sin

'

0

Trs 1ff5)

(Tr-1)

with solution

0

<

s

-<

Tr

s<

1

1

(l+cos (Trs2)

sin(s)-i-sin(Trs2)

ts,

0<-

Tr

sin(Trst)x(t)dt =

-

(Trs2)

2s2

s

-

<

1

s

<

7s

2

1

Tr

<

-

x(s) =

1-s

-1

Tr

1

Tr

-

- 1,

which was chosen to test the results of II.8.

Note that

the orderly convergence patterns are disrupted and we are

no longer quite so sure convergence is occurring.

But re-

call that no convergence rate was predicted for this case,

simply that convergence would occur.

44

Worst Error at Grid Points

n

2

1

0

*

0.0234

0.000720

0.00321

(4.5)

(1.4)

0.0166

8

0.00860

(<

(1.9)

0.00406

32

0.00203

64

0.00102

1)

1)

(z.

1)

0.000714

0.000771

(9.5)

(10.0)

(2.1)

16

(z

0.000670

0.000683

4

-

0.0000744

0.0000761

(3.3)

(3.3)

(2.0)

0.0000227

0.0000230

(7.4)

(5.2)

(2.0)

0.00000307

0.00000441

Worst error of Grid Points

0.01, 0.02,

and

n

2

...,

0.000870

0.00321

0.0234

0.000676

0.000683

0.0166

(<

0.000777

0.00860

0.00000307

0.00000441

Example

's

0

<

x(s) -

4:

sin(frst)x(t)dt

=

f(s)

5 0

<

s,

where

(7.4)

(5.2)

0.00102

4.

0.0000227

0.0000230

0.00203

TABLE

(3.8)

(3.3)

(2.0)

64

0.0000744

0.0000761

0.00406

1)

(9.6)

(10.0)

(2.0)

32

(<

1)

0.000716

(2.1)

16

(1.3)

(4.8)

(1.9)

8

1.00.

1

0

*

(1.4)

4

0.99,

s

<

-

is a continuous function.

x(s) =

1-s

Tr-

r,

1

<

s

<

1,

45

Applications to Fredholm Equations

III.

Fredholm Equations with Jump Discontinuities

III.1

An easy extension of II.1 applies to Fredholm integral

equations of the type

1

x(s)

N(s,t)x(t)dt = f(s)

0

where

K(s,t)

,

0

<

t

<

s,

R(s,t)

,

s

<

t

<

1,

N(s,t) _

and where both

K

and

are continuous on their closed

R

That is, along the line

triangles of definition.

there is a jump discontinuity in

K(s,$)

-

R(s,$)

N

s

= t

with magnitude

.

If in addition we assume that the

vatives with respect to

t

of

K

and

rth

R

partial deriare at least

piecewise continuous on their closed domains, then we can

define a jump function

R

J(Q) (s)

[K(s,t) - R(s,t)lt=s,

E

a

and proceed as in II.1:

tQ

46

f(s)

= x(s)

1

-

Nr(s,t)x(t)dt

J

0

Q-(k)

-

(s)

(3 -1)

(s-t)%c(t)dt,

Q=0

0

where

QiQ(s t)kJ(R,) (s)

K(s,t) - C(

Nr(s,t) =

R(s,t)

,

s

<

t

is a continuous kernel and has

across the line

<

,

t

<

s

k=0

s =

t.

<

1

r

continuous derivatives

Since both integrals in

(3 -1)

can

be well approximated by finite sums, it is reasonable to

expect that the corresponding approximations,

solution,

x,

will also be good.

If

r

is

xn,

to the

large enough

we would also expect fourth order convergence using repeated Simpson's rule and its modification.

47

An Example

III.2

The one -dimensional Green's function

t(1 -s),

0

<

t

<

s

s(1 -t),

s

<

t

<

1

G(s,t) =

arises in two -point boundary value problems.

G(s,t)

is

As seen,

continuous, but its first derivative has a jump

discontinuity along the line

x(s) -

1

10

S

s =

G(s,t)x(t)dt

The problem

t.

=

(1

0

- 12)sin(Trs)

r

was solved by first using the regular Simpson's rule ap-

proximation of the integral and then using the special rule.

Since

J(Q)(s)

to be 1.

=

0

for

Q =

0,

and

Q

>

r

2,

The data are presented in table

5.

was chosen

Note that as

predicted in II.7 there is fourth order convergence using

the special rule, which is a significant improvement over

the first order convergence of the regular Simpson's rule.

48

The worst error at the Grid Points

n

Regular

2

0.869

Special with r =

0.735

(24.2)

(1.5)

4

0.0303

0.582

(16.0)

(2.3)

8

0.00189

0.252

(16.0)

(3.4)

16

0.000119

0.0770

TABLE

5.

1

The worst error at the

partition points of the problem

x(s) - 1' G(s,t)x(t)dt

0

=

x(s) = sin(Trs).

(1

-

)sin(ffs),

n

49

Generalizations

III.3

A generalization of the problem in III.1 is one in

which the discontinuity in

g(s),

0

<

s

<

is along a continuous curve,

N

The procedure is nearly the same, except

1.

that

J(k) (s)

ak

-

[K(s,t)

R(s,t)

-

]

at

=g(s)

and

-1(

K(s,t)

Nr

r

(s, t)

Q)k[g(s)-t]kJ(k) (s),

0<

t < g(s),

k=0

E

R(s,t), g(s)

<

t

1.

<

The equation corresponding to

(2 -2)

is

1

f(s) = x(s)

C

k =0

(

Nr(s,t)x(t)dt

-

S

Q)

R!

0

J(k)

\g(s)

(s)

[g(s)- t]kx(t)dt.

0

Note that now the upper limit of integration is

of

s,

a

function

so that the special rule developed in II.2 will no

longer work (unless g(s)

E

of course).

s

It should be

relatively straight forward, however, to develop weights

that are functions of both

technique in II.2.

2,

and

g(s), using the same

50

Summary and Conclusions

IV.

We started with the Volterra integral equation

(1 -3)

and generated a Fredholm integral equation with a continuous kernel.

value of the discontinuity at

s

= t,

K(s,$),

namely

leaving a Fredholm kernel which is zero for

the

K(s,t)

This was done by subtracting from

t S 1.

5

s

The Fredholm kernel was made smoother across the line

s

= t

by subtracting terms of Taylor's series; the result-

ing kernel was

K(s,t)

-

_(-1) R (s-t)

L

Nr(s,t) =

0, s

Assuming that

K

<

and

t

<

x

K(R)

(s,$)

,

0

<

t

<

s

!'

k=0

1.

have

continuous partials with

r

1

respect to

t,

Nr(s,t)x(t)dt

we showed that

S

was well

0

approximated by existing integration rules.

In order to preserve equality,

(-1)

Q

K(Q)

R!

were added.

s,$)

rs

JJ

terms of the form

(s-t

£

x (t) dt

0

In II.2 we developed a generalization of Simp-

son's rule which approximated the indefinite integral.

In

II.5 we combined this new rule with the regular Simpson's

rule,

and showed in II.7 that the resulting approximation

has fourth order convergence assuming enough differentia-

bility of the integrand.

We also showed in II.8 that with-

out differentiability the approximation still converges

51

uniformly to the integral as

n }

00.

The work of II.5

developed an algorithm for solving linear Volterra integral

equations of the second kind.

In the last chapter we extended the results to Fred -

holm integral equations with jump discontinuities in the

kernel or one of its partial derivatives with respect to

t

along the line

s

= t.

52

BIBLIOGRAPHY

1.

Anselone, P. M. Convergence and error bounds for

approximate solutions of integral and operator

equations. In: Error in digital computation, ed.

by L. B. Rall. Vol. 2. New York, John Wiley, 1965.

p.

231 -252.

2.

Fulks, Watson. Advanced calculus; an introduction to

analysis. New York, John Wiley, 1961. 521 p.

3.

Goldberg, Richard R. Methods of real analysis. Waltham, Mass., Blaisdell, 1964. 359 p.

4.

Hildebrand, F. B. Introduction to numerical analysis.

New York, McGraw -Hill, 1956. 511 p.

5.

Milne, William E. Numerical calculus. Princeton,

Princeton University, 1949. 393 p.

6.

Tricorni,

F. G. Integral equations. New York, Inter

science, 1957. 238 p.

-

APPENDICES

53

Appendix A

begin

comment a program to estimate

IR[x(s)]

I

<(M3/2)hQ +4

s

(

-11)] Idu

JO

has been parameterized out of the

where the

h

integral.

The integral is approximated by the

repeated midpoint rule;

integer n,tn,i,j,l;

real s,t,rem,prod;

real procedure Gn(a,b);

value a,b;

integer

real b;

a;

Gn:= if b >0 then b +a else 0.0;

real procedure WB(b,c);

value b,c;

real b; integer c;

WB:= b +(c +2)

x

((2- b) /(c +1) +

(2xb)- 2) /(c +2)- b /(c +3));

real procedure WC(b,c);

value b,c;

integer

real b;

WC: =

-0.5 x

b+

c;

(c +2) x

(

(l-b)/(c+l) +

(2xb-1)/(c+2)-b/(c+3)

comment the program body appears below;

inreal

(60,n);

inreal

(60,k);

comment a statement to print

n

and

R,

and set up a

table for the output would occur here;

tn: =2xn;

prod =

:

(R,

+1)

x

(i +2)

x

(k+3);

for j: =0 step 1 until to do

begin

real temb, temc;

s: =j /n;

temb: =WB (s,

R,)

;

)

;

54

temc:= WC(s,k);

rem: =0.0;

for i: =1 step

1

until to do

begin

t:= (2xi- 1) /tn;

rem: =rem + abs(2xGn(Z +3,s -t) /prod

-temc

x

Gn(2,2 -t)

-

temb

x

Gn(2,1 -t));

end;

rem:= rem /n;

comment a statement to output

rem, at

end;

end of program;

s

s

would go here;

and the remainder,

55

Appendix B

begin

comment a program to solve a Volterra integral equation

of the second kind using a modified Simpson's rule.

integer n,r,tn,i,Q,fac,j,P;

real s,h,th,hsq,D,sn,hpw,tl,t,xs;

real array X,F[O:UL];

comment UL is the upper limit of the array, i.e., UL > 2xn;

comment the three weight functions are below. s =a, Q =b,

h =c and hsq = e;

real procedure WA(a,b,c,e);

value a,b,c,e;

real a,c,e;

integer b;

WA: =a +(b +l)

x

(b +3))

x

(a

(2.0xa- 3.0xcx(b +3))

x

/(2.Oxex(b +l)

x

(b +2)

x

+ 2.0xex(b +2)

(b +3));

real procedure WB(a,b,c,e);

value a,b,c,e;

real a,c,e;

integer b;

WB: =2.0

x

a +(b +2)

x

{ex(b +l)

(cx(b +3)- a) /(ex(b

(b +2)

x

x

(b +3));

real procedure WC(a,b,c,e);

value a,b,c,e;

real a,c,e;

integer b;

WC: =a+ (b +2)

x

(2.0xa -cx

(b +3)) /

(2.0xex (b +l)

x

(b +2) x (b +3))

real procedure KER(a,b);

value a,b;

real a,b;

comment evaluates the kernel K(s,t) at

KER: =[the kernel];

s =a

and t =b;

;

56

real procedure PK(a,b);

value a,b;

integer b;

real a;

comment evaluate the bth partial of K(s,t) with respect to

t at s =t =a;

begin

switch Q:= PO,Pl,P2;

real RL;

20 to Q[b +l];

PO:

RL:=[the zeroth partial];

to fin;

coo

Pl:

RL: =[the first partial];

go to fin;

P2:

fin:

RL: =[the second partial];

PK: =RL;

end;

real procedure NR(a,b,c);

value a,b,c;

real a,b;

integer

c;

comment evaluates the kernel Nr at

s =a,

t =b,

begin integer LL;

real temp, nn,ff,dd,pp;

if b >a then

begin temp: =0.0; go to BBB end;

temp: = KER(a,b);

nn: = -1.0;

dd: =a -b;

for LL: =0 step

1

until

c do

begin

ff:= if LL =0 then 1.0 else ffxLL;

nn: = -nn;

and r =c;

57

pp:= if LL =0 then 1.0 else dd

temp := temp - nn

x

pp

X

pp;

x

PK(a,LL) /ff;

end;

BBB: NR: =temp;

end of procedure Nr;

real procedure FUN(a);

value a;

real a;

comment evaluates the known function at

s =a;

FUN: =[the known function];

comment the program begins here.

the number of

is

n

times the rule is applied and

r

of derivatives to be subtracted;

eof (finished)

inreal

(60,n);

inreal

(60,r);

tn: =2

;

n;

X

for is =0 step 1 until to do

begin

s:

=i /tn;

F[i]

:

=FUN

(s)

;

end;

X[0]

:

=F

[0]

;

h:= 1.0 /tn;

the =2.0

X

h;

hsq:= 1.0 /(tnxtn);

D: = -h

X

KER(h,0.0) /3.0;

sn: = -1.0;

hpw: =1.0;

for Q: =0 step 1 until r do

begin

fac:= if

2

=0 then

sn: = -sn;

hpw: =h

X

hpw;

1

else fac

X

2;

is

the number

58

D: =D + sn

PK(h,k)

x

x

(hpw/3.0 - WA(h,R,,h,hsg) )/fac;

end;

f[1]:=f[1]

for

i:

- D

=2 step

X[0];

x

until to do

1

begin

s:

=i /tn;

F[i] :=F[i]

+ h

x

KER(s,0.0)

X[0]/3.0;

x

end;

for j: =0 step

1

until n -1 do

begin

real temp,D1,D2,D3,D4,DET;

integer p,q;

p: =2

x

j

+ 1;

=P + 1;

s: =p /tn;

q:

D1:= if r

<

4.0

x

h

else fac

x

k;

then 1.0

0

-

x

KER(s,$) /3.0

else 1.0;

sn: = -1.0;

for

2,:

=0 step 1 until r do

begin

fac:= if

then

k =0

1

sn: = -sn;

D1: =D1 - sn

x

PK(s,k)

x

WB(h,k,h,hsq) /fac;

end;

D2 =0.0;

:

sn: = -1.0;

for

k:

=0 step 1 until r do

begin

fac:= if

then

k =0

1

else fac

x

k;

sn:=-sn;

D2:=D2

end;

s:=q/tn;

t:=p/tn;

-

sn

x

PK(s,k)

x

WC(h,i,h,hsq) /fac;

59

D3: = -4.0

h

X

D4: =1.0 - h

DET: =D1

KER(s,$) /3.0;

x

D4

X

if abs(DET)

KER(s,t) /3.0;

X

D2

<

D3;

X

then

10 -7

begin

comment a statement here would print "The problem

generated a singular matrix. ";

go to finished;

end;

- F [q] XD2)

/DET;

X [p]

:

= (F [p] XD4

X [q]

:

= (F [q] XDl - F [p] XD3) /DET;

if

j

s:=

22 to otpt;

= n -1 then

(q +l)

/tn;

temp: =4.0

t:

=q /tn;

D:

=2.0

X

h

X

h

KER(s,t) /3.0;

X

KER(s,t) /3.0;

X

sn: = -1.0;

hpw: =1.0;

for k: =0 step 1 until r do

begin

fact= if

then

k =0

else fac

1

X

k;

sn: = -sn;

hpw: =h

X

D: =D

sn

-

hpw;

PK(s,k)

X

(hpw/3.0- WA(h,Q,,h,hsq)) /fac;

x

end;

-

F[q+l]:=F[q+1] + temp

X[p]

x

+ D

X

X[q];

tl: =p /tn;

for

step

i:=441.1-2

1

until to do

begin

s:

=i /tn;

F[i]: =F[i]

X

end;

end;

+

4.0'x h

KER(s,t)

X

X

KER(s,tl)

X[q] /3.0;

X

X[p] /3.0 + 2.0

x

h

60

comment a statement to print the problem, the

values of n and r, and column headings

otpt:

for i

:

would go here;

=0 step 1 until to do

begin

s

:

=i /tn;

comment a statement to output s and

X[i] = x(s) would go here;

end;

Values of

comment the interpolation begins here.

s

are

input until there are no more, whereupon the

eof (finished) command halts the program;

begin real xs,tl,t2,t3; integer Il;

inter: comment a statement goes here to input s;

if s =0.0 then begin j: =0;

for is =0 step

if

2

begin

until n -1 do

l

h<

x

i

x

to AAA end;

coo

s

j: =i;

A

coo

(2xi +2)

h>

x

s

then

to AAA end;

xs:= FUN(s);

AAA:

for

step

is =0

until

1

j

-1 do

begin

I1 =2

i;

x

tl: =ll

h;

x

t2:= (I1 +1)

x

h;

t3:= (I1 +2)

x

h;

xs: =xs +

(

KER( s, tl)xX[I1] +4.0xKER(s,t2)XX[I1 +1]

+KER(s,t3)xX[I1 +2])

x

h /3.0;

end;

11;=2

x

j;

tl:=Il

x

h;

t2:=(I1+1)

x

h;

xs:=xs + (NR(s,tl,r)xX[I1]+4.0xNR(s,t2,r)xX[11+1])

sn:=-1.0;

tl:=s

-

2.0

for i:=0

-

begin

x

h

x

step

1

until r do

j;

x

h/3.0;

61

fac:= if

then

i =0

1

else fac

x

i;

sn:=-sn;

xs:=xs + sn

x

PK(s,i)

x

(WA(tl,i,h,hsq)xX[Il]

+WB(tl,i,h,hsq)xX[I1+1]+WC(tl,i,h,hsq)

xX[I1+2] )/fac;

end;

comment a statement here would output

end;

finished: end of program;

s

and

xs = x(s);