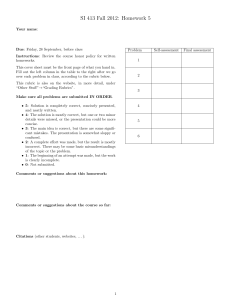

MASSACHUSETTS INSTITUTE OF TECHNOLOGY Working Paper
