Document 11198986

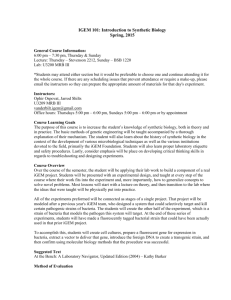

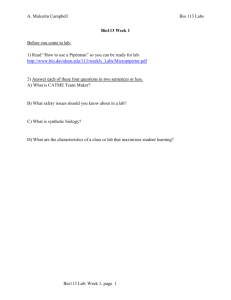

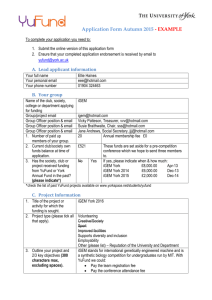
