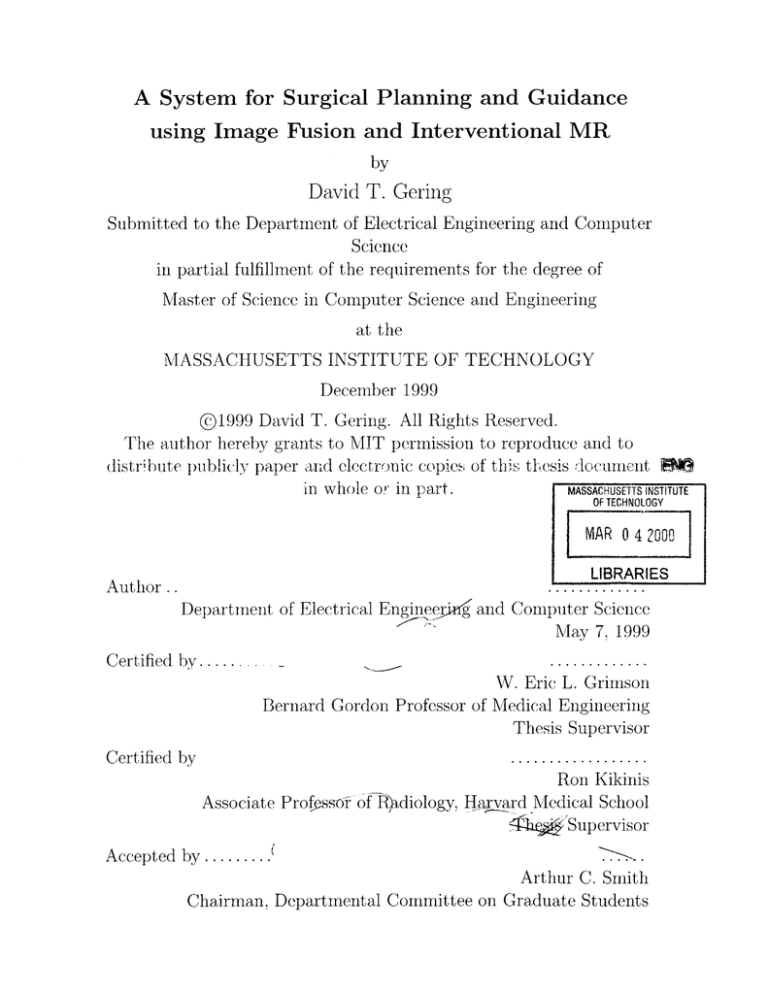

A System for Surgical Planning ... using Image Fusion and Interventional ... David T. Gering
