Foundations of Assessment II: Methods, Data Gathering and Sharing Results

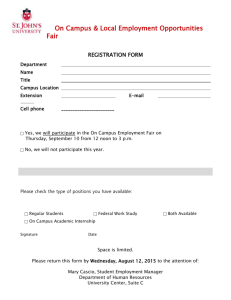


What do you still have questions about?
• How to effectively speak to students about problems
• How to include all stakeholders (develop partnerships)
• Developing effective questions
• What tools or mine-able data already exist that we can use in our assessment projects
• When are you asking for too much information at once (e.g., mid-year reviews)?
• How to narrow down the focus of the assessment
• How to stay on top of assessment while working on other programs
• How to draw conclusions from assessment data (especially data from long-term assessments)
• Quick assessments and what they look like
• How long an assessment process should take
• How to choose the right assessment method
• How to conduct an assessment interview
• How to incentivize participation in data collection
• Further understanding how to “measure” qualitative data
• How much is too much data collection? How do you predict the information you will need or want?

Goals of Today’s Presentation
1. Understand the various methods available for assessment
2. Learn how and why to share results
3. Gain confidence to plan and conduct assessments in your department

The Assessment Cycle

Website: Assessment Resources, Forms

A few questions
• What methods have you used for data collection?
• How did you determine that those methods were the best way to gather evidence?

Common Methods for Gathering Evidence
• Surveys
• Rubrics
• Interviews
• Pre/post evaluation
• Reflection
• Quick assessments
• Document analysis
• Reviewing existing data
• Focus groups
• Demonstrations
• Observations
• Written papers, projects
• Posters and presentations
• Portfolios
• Mobile data collection
• Tests, exams, quizzes
Contributing Source: Campus Labs

Things to consider when selecting a method
• How will you use the data?
• Available resources (e.g., time, materials, budget, expertise)
• Potential for collaboration
• Timing: religious holidays, large events, student schedules
• Direct vs. indirect
• Quantitative vs. qualitative
Contributing Source: Campus Labs

What Type of Data Do I Need?
Direct methods: Any process employed to gather data that requires students to display their knowledge, behavior, or thought processes.
Indirect methods: Any process employed to gather data that asks students to reflect upon their knowledge, behaviors, or thought processes.
Source: Campus Labs

What Type of Data Do I Need?
Quantitative
• Focus on numbers/numeric values
• Easier to report and analyze
• Can generalize to the larger population when samples are large
• Less influenced by social desirability
• Sometimes requires less time and money
Qualitative
• Focus on text/narrative from respondents
• More depth/robustness
• Ability to capture “elusive” evidence of student learning and development
• Specific sample

Questions for Choosing a Method
• Is your method going to provide you with the evidence you need to make a statement about the learning that occurred?
• If you are assessing satisfaction or service effectiveness, is the method going to give you the most detailed and accurate information?
• Do you have the time and resources to use the specific method?
Adapted from Campus Labs

Surveys
Advantages
• Useful when you have a large population
• Easily administered
• Easy to compare longitudinally
• You can ask a variety of questions
• Quick data turnaround
• Good for statistical reporting
Disadvantages
• Indirect measure
• Interpretation: staff may lack the skill to understand the results
• Low response rates
• May need a secondary, direct measure to learn more

Focus Groups: What is a focus group?
• Qualitative research
• Small group, open discussion
• Gauges opinions, perceptions and attitudes

Focus Groups
Advantages
• Able to collect a lot of rich data in a short time
• Check perceptions, beliefs and opinions
• Explore specific themes
• Participants build off each other’s ideas
• Can be a good follow-up to a survey
Disadvantages
• Facilitation requires skill
• Not generalizable to the population
• Time needed for preparation and analysis
• Lack of control over discussion
• Can be difficult to attract participants
• IRB approval may be required

Quick Assessment Techniques
• Quick, easy, and systematic
• Assessment does not HAVE to be time-consuming
• Minimal resources
• A “pulse” on how things are going
• Specific techniques available on the BC website

Rubrics
• Scoring tool for subjective assessment
• Assess student performance on learning objectives
• Clearly define acceptable and unacceptable performance
• Uses: training programs, interviews, projects

Rubric Example

Mobile Devices
How/When to Use Mobile Devices
Campus Pulse Survey
• Larger audience and more diverse sample
• Locations: dining halls, residence halls, computer lounge
• Topic suggestions: food, campus facilities, hot topics, world events
Point of Service Survey
• Distribute to students after they stop by an office on campus
• Focus on the service experience
• Locations: Career Center, SABSC, Residential Life
After an Event Survey
• Immediate response
• After a concert, meeting, or dance

Activity: Choosing a Method
• Use your assessment project/idea
• What are the outcomes you are assessing?
• What is the best way to gather this information? Why?
• Report out to the group

Analyzing and interpreting your data requires as much diligence and strategy as the design process.

Qualitative Data Analysis
• Read the data with an eye for themes, categories, patterns, and relationships
• Have multiple people read the data and discuss the key themes
• Identify contradictions and surprises

Interpretation
Draw inferences, add meaning, and reach conclusions, while keeping your own assumptions and beliefs visible.
Do not disregard outliers: data that is surprising, contradictory, or puzzling can be insightful.
Source: InSites 2007

Quantitative Data Analysis
• In Campus Labs you can sort your data, use crosstabs, and view it in graphs or pie charts.
• Campus Labs does not analyze or interpret your data for you!
• Your responsibility is to describe the data as clearly, completely, and concisely as possible.

Interpretation
For each learning outcome, compare the results with the intended level of the outcome. What does the data show?

Reporting Assessment Results
• In what ways have you seen assessment results reported?
Source: Campus Labs

Why Focus on Reporting Results?
• Role modeling
• Buy-in
• Historical documentation
• Evidence trail
What about sensitive data and/or campus politics?
Source: Campus Labs

Formats for Audiences
Students
• Email invitations
• PR campaign (flyers, newspaper, TVs)
• Student government meetings
Internal campus partners
• Cabinet meetings
• Elevator speech
• Executive summary
• Annual reports
Staff
• Roadshows
• Brief emails
• Newsletters
• Retreat
• Full reports
External constituents
• Presentations
• Website
• Press releases
Source: StudentVoice

Policymakers are more likely to read information if:
• It is presented in short bulleted paragraphs, not large blocks of type
• Charts or graphs are used to illustrate key points
• It is provided in print rather than electronically
• Recommendations and implications are presented
Source: Campus Labs

Questions to Consider when Planning a Report
• When do you need to report your results?
• Who is the audience of the report?
• Why is this information important to this audience?
• What are your options for reporting the results?
• What is the best method for reporting your results?
• What exactly should or needs to be included?

Basic Structure of Assessment Report
1. Executive summary
2. Purpose of assessment
3. Methods
4. Description of participants
5. Findings
6. Discussion/implications and conclusion

Questions/Discussion