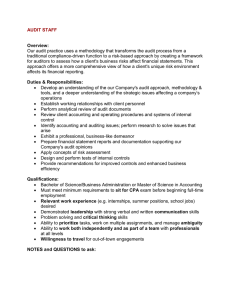

Audit Options to Certify Results for a “Cash on Delivery”
