
Massachusetts Institute of Technology

Department of Economics

Working Paper Series

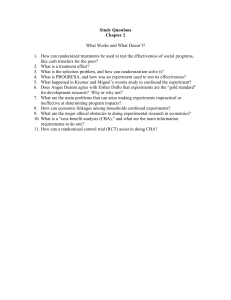

Using Randomization in Development Economics Research: A Toolkit

Esther Duflo

Rachel Glennerster

Michael Kremer

Working Paper 06-36

December 12, 2006

Room E52-251
50 Memorial Drive
Cambridge, MA 02142

This paper can be downloaded without charge from the Social Science Research Network Paper Collection at http://ssrn.com/abstract=95184


Using Randomization in Development Economics Research: A Toolkit*

Esther Duflo†, Rachel Glennerster‡ and Michael Kremer§

December 12, 2006

Abstract

This paper is a practical guide (a toolkit) for researchers, students and practitioners wishing to introduce randomization as part of a research design in the field. It first covers the rationale for the use of randomization, as a solution to selection bias and a partial solution to publication biases. Second, it discusses various ways in which randomization can be practically introduced in field settings. Third, it discusses design issues such as sample size requirements, stratification, level of randomization and data collection methods. Fourth, it discusses how to analyze data from randomized evaluations when there are departures from the basic framework. It reviews in particular how to handle imperfect compliance and externalities. Finally, it discusses some of the issues involved in drawing general conclusions from randomized evaluations, including the necessary use of theory as a guide when designing evaluations and interpreting results.

JEL Classification: I0; J0; O0; C93. Keywords: Randomized evaluations; Experiments; Development; Program evaluation.

*We thank the editor T. Paul Schultz, as well as Abhijit Banerjee, Guido Imbens and Jeffrey Kling for extensive discussions, David Clingingsmith, Greg Fischer, Trang Nguyen and Heidi Williams for outstanding research assistance, and Paul Glewwe and Emmanuel Saez, whose previous collaboration with us inspired parts of this chapter.

†Department of Economics, Massachusetts Institute of Technology and Abdul Latif Jameel Poverty Action Lab

‡Abdul Latif Jameel Poverty Action Lab

§Department of Economics, Harvard University and Abdul Latif Jameel Poverty Action Lab

Contents

1 Introduction
2 Why Randomize?
2.1 The Problem of Causal Inference
2.2 Randomization Solves the Selection Bias
2.3 Other Methods to Control for Selection Bias
2.3.1 Controlling for Selection Bias by Controlling for Observables
2.3.2 Regression Discontinuity Design Estimates
2.3.3 Difference-in-Differences and Fixed Effects
2.4 Comparing Experimental and Non-Experimental Estimates
2.5 Publication Bias
2.5.1 Publication bias in non-experimental studies
2.5.2 Randomization and publication bias
3 Incorporating Randomized Evaluation in a Research Design
3.1 Partners
3.2 Pilot projects: From program evaluations to field experiments
3.3 Alternative Methods of Randomization
3.3.1 Oversubscription Method
3.3.2 Randomized Order of Phase-In
3.3.3 Within-Group Randomization
3.3.4 Encouragement Designs
4 Sample size, design, and the power of experiments
4.1 Basic Principles
4.2 Grouped Errors
4.3 Imperfect Compliance
4.4 Control Variables
4.5 Stratification
4.6 Power calculations in practice
5 Practical Design and Implementation Issues
5.1 Level of Randomization
5.2 Cross-Cutting Designs
5.3 Data Collection
5.3.1 Conducting Baseline Surveys
5.3.2 Using Administrative Data
6 Analysis with Departures from Perfect Randomization
6.1 The Probability of Selection Depends on the Strata
6.2 Partial Compliance
6.2.1 From Intention To Treat to Average Treatment Effects
6.2.2 When is IV Not Appropriate
6.3 Externalities
6.4 Attrition
7 Inference Issues
7.1 Grouped Data
7.2 Multiple Outcomes
7.3 Subgroups
7.4 Covariates
8 External Validity and Generalizing Randomized Evaluations
8.1 Partial and General Equilibrium Effects
8.2 Hawthorne and John Henry Effects
8.3 Generalizing Beyond Specific Programs and Samples
8.4 Evidence on the Generalizability of Randomized Evaluation Results
8.5 Field Experiments and Theoretical Models

1 Introduction

Randomization is now an integral part of a development economist's toolbox. Over the last ten years, a growing number of randomized evaluations have been conducted by economists or with their input. These evaluations, on topics as diverse as the effect of school inputs on learning (Glewwe and Kremer 2005), the adoption of new technologies in agriculture (Duflo, Kremer, and Robinson 2006), corruption in driving licenses administration (Bertrand, Djankov, Hanna, and Mullainathan 2006), or moral hazard and adverse selection in consumer credit markets (Karlan and Zinman 2005b), have attempted to answer important policy questions and have also been used by economists as a testing ground for their theories.

Unlike the early "social experiments" conducted in the United States (with their large budgets, large teams, and complex implementations), many of the randomized evaluations that have been conducted in recent years in developing countries have had fairly small budgets, making them affordable for development economists. Working with local partners on a smaller scale has also given more flexibility to researchers, who can often influence program design. As a result, randomized evaluation has become a powerful research tool.

While research involving randomization still represents a small proportion of work in development economics, there is now a considerable body of theoretical knowledge and practical experience on how to run these projects. In this chapter, we attempt to draw together in one place the main lessons of this experience and provide a reference for researchers planning to conduct such projects. The chapter thus provides practical guidance on how to conduct, analyze, and interpret randomized evaluations in developing countries and on how to use such evaluations to answer questions about economic behavior.

This chapter is not a review of research using randomization in development economics.¹ Nor is its main purpose to justify the use of randomization as a complement or substitute to other research methods, although we touch upon these issues along the way.² Rather, it is a practical guide, a "toolkit," which we hope will be useful to those interested in including randomization as part of their research design.

¹ Kremer (2003) and Glewwe and Kremer (2005) provide a review of randomized evaluations in education; Banerjee and Duflo (2005) review the results from randomized evaluations on ways to improve teachers' and nurses' attendance in developing countries; Duflo (2006) reviews the lessons on incentives, social learning, and hyperbolic discounting.

² We have provided such arguments elsewhere, see Duflo (2004) and Duflo and Kremer (2005).

The outline of the chapter is as follows. In Section 2, we use the now standard "potential outcome" framework to discuss how randomized evaluations overcome a number of the problems endemic to retrospective evaluation. We focus on the issue of selection bias, which arises when individuals or groups are selected for treatment based on characteristics that may also affect their outcomes and makes it difficult to disentangle the impact of the treatment from the factors that drove selection. This problem is compounded by a natural publication bias towards retrospective studies that support prior beliefs and present statistically significant results. We discuss how carefully constructed randomized evaluations address these issues.

In Section 3, we discuss how randomization can be introduced in the field. Which partners to work with? How can pilot projects be used? What are the various ways in which randomization can be introduced in an ethically and politically acceptable manner?

In Section 4, we discuss how researchers can affect the power of the design, or the chance to arrive at statistically significant conclusions. How should sample sizes be chosen? How do the level of randomization, the availability of control variables, and the possibility to stratify affect power?

In Section 5, we discuss practical design choices researchers will face when conducting randomized evaluation: At what level to randomize? What are the pros and cons of factorial designs? When and what data to collect?

In Section 6, we discuss how to analyze data from randomized evaluations when there are departures from the simplest basic framework. We review how to handle different probability of selection in different groups, imperfect compliance and externalities.

In Section 7, we discuss how to accurately estimate the precision of estimated treatment effects when the data are grouped and when multiple outcomes or subgroups are being considered. Finally, in Section 8, we conclude by discussing some of the issues involved in drawing general conclusions from randomized evaluations, including the necessary use of theory as a guide when designing evaluations and interpreting results.

2 Why Randomize?

2.1 The Problem of Causal Inference

Any attempt to draw a causal inference on a question such as "What is the causal effect of education on fertility?" or "What is the causal effect of class size on learning?" requires answering essentially counterfactual questions: How would individuals who participated in a program have fared in the absence of the program? How would those who were not exposed to the program have fared in the presence of the program? The difficulty with these questions is immediate. At a given point in time, an individual is either exposed to the program or not. Comparing the same individual over time will not, in most cases, give a reliable estimate of the program's impact since other factors that affect outcomes may have changed since the program was introduced. We cannot, therefore, obtain an estimate of the impact of the program on a given individual. We can, however, obtain the average impact of a program, policy, or variable (we will refer to this as a treatment, below) on a group of individuals by comparing them to a similar group of individuals who were not exposed to the program.

To do this, we need a comparison group. This is a group of people who, in the absence of the treatment, would have had outcomes similar to those who received the treatment. In reality, however, those individuals who are exposed to a treatment generally differ from those who are not. Programs are placed in specific areas (for example, poorer or richer areas), individuals are screened for participation (for example, on the basis of poverty or motivation), and the decision to participate in a program is often voluntary, creating self-selection. Families choose whether to send girls to school. Different regions choose to have women teachers, and different countries choose to have the rule of law. For all of these reasons, those who were not exposed to a treatment are often a poor comparison group for those who were. Any difference between the groups can be attributed either to the impact of the program or to pre-existing differences (the "selection bias"). Without a reliable way to estimate the size of this selection bias, one cannot decompose the overall difference into a treatment effect and a bias term.

To fix ideas it is useful to introduce the notion of a potential outcome, introduced by Rubin (1974). Suppose we are interested in measuring the impact of textbooks on learning. Let us call $Y_i^T$ the average test score of children in a given school $i$ if the school has textbooks and $Y_i^C$ the test scores of children in the same school $i$ if the school has no textbooks. Further, define $Y_i$ as the outcome that is actually observed for school $i$. We are interested in the difference $Y_i^T - Y_i^C$, which is the effect of having textbooks for school $i$. As we explained above, we will not be able to observe a school $i$ both with and without books at the same time, and we will therefore not be able to estimate individual treatment effects. While every school has two potential outcomes, only one is observed for each school.

However, we may hope to learn the expected average effect that textbooks have on the schools in a population:

$$E[Y_i^T - Y_i^C]. \tag{1}$$

Imagine we have access to data on a large number of schools in one region. Some schools have textbooks and others do not. One approach is to take the average of both groups and examine the difference between average test scores in schools with textbooks and in those without. In a large sample, this will converge to

$$D = E[Y_i^T \mid \text{School has textbooks}] - E[Y_i^C \mid \text{School has no textbooks}] = E[Y_i^T \mid T] - E[Y_i^C \mid C].$$

Subtracting and adding $E[Y_i^C \mid T]$, i.e., the expected outcome for a subject in the treatment group had she not been treated (a quantity that cannot be observed but is logically well defined), we obtain

$$D = E[Y_i^T \mid T] - E[Y_i^C \mid T] - E[Y_i^C \mid C] + E[Y_i^C \mid T] = E[Y_i^T - Y_i^C \mid T] + E[Y_i^C \mid T] - E[Y_i^C \mid C].$$

The first term, $E[Y_i^T - Y_i^C \mid T]$, is the treatment effect that we are trying to isolate (i.e., the effect of treatment on the treated). In our textbook example, it is the answer to the question: on average, in the treatment schools, what difference did the books make?

The second term, $E[Y_i^C \mid T] - E[Y_i^C \mid C]$, is the selection bias. It captures the difference in potential untreated outcomes between the treatment and the comparison schools; treatment schools may have had different test scores on average even if they had not been treated. This would be true if schools that received textbooks were schools where parents consider education a particularly high priority and, for example, are more likely to encourage their children to do homework and prepare for tests. In this case, $E[Y_i^C \mid T]$ would be larger than $E[Y_i^C \mid C]$. The bias could also work in the other direction. If, for example, textbooks had been provided by a non-governmental organization to schools in particularly disadvantaged communities, $E[Y_i^C \mid T]$ would likely be smaller than $E[Y_i^C \mid C]$. It could also be the case that textbooks were part of a more general policy intervention (for example, all schools that receive textbooks also receive blackboards); the effect of the other interventions would be embedded in our measure $D$. The more general point is that in addition to any effect of the textbooks there may be systematic differences between schools with textbooks and those without.

Since $E[Y_i^C \mid T]$ is not observed, it is in general impossible to assess the magnitude (or even the sign) of the selection bias and, therefore, the extent to which selection bias explains the difference in outcomes between the treatment and the comparison groups. An essential objective of much empirical work is to identify situations where we can assume that the selection bias does not exist or find ways to correct for it.
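The decomposition of $D$ into a treatment effect and a selection bias can be checked numerically. The sketch below is only an illustration under assumptions that are not in the text: the data are simulated, the "parental priority" variable, the effect size of 5 points, and all other numbers are hypothetical, and both potential outcomes are kept for every school (which is possible only in a simulation).

```python
import random
from statistics import fmean

def simulate_schools(n=20000, effect=5.0, seed=0):
    """Simulate schools that self-select into having textbooks.

    Hypothetical setup: schools where parents give education a high
    priority are more likely to have textbooks AND score higher anyway.
    """
    rng = random.Random(seed)
    schools = []
    for _ in range(n):
        priority = rng.gauss(0.0, 1.0)                      # unobserved parental priority
        y_c = 50.0 + 4.0 * priority + rng.gauss(0.0, 3.0)   # potential outcome without textbooks
        y_t = y_c + effect                                  # potential outcome with textbooks
        treated = priority + rng.gauss(0.0, 1.0) > 0.0      # non-random selection into treatment
        schools.append((treated, y_t, y_c))
    return schools

def decompose(schools):
    """Return (D, effect of treatment on the treated, selection bias)."""
    t = [s for s in schools if s[0]]
    c = [s for s in schools if not s[0]]
    d = fmean(s[1] for s in t) - fmean(s[2] for s in c)      # E[Y^T|T] - E[Y^C|C]
    att = fmean(s[1] - s[2] for s in t)                      # E[Y^T - Y^C|T]
    bias = fmean(s[2] for s in t) - fmean(s[2] for s in c)   # E[Y^C|T] - E[Y^C|C]
    return d, att, bias

d, att, bias = decompose(simulate_schools())
print(f"D = {d:.2f}, effect on treated = {att:.2f}, selection bias = {bias:.2f}")
```

In this simulated example the naive comparison $D$ overstates the effect of textbooks because the selection bias term is positive; with real data only $D$ is observable, which is the point of the paragraph above.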

2.2 Randomization Solves the Selection Bias

One setting in which the selection bias can be entirely removed is when individuals or groups of individuals are randomly assigned to the treatment and comparison groups. In a randomized evaluation, a sample of $N$ individuals is selected from the population of interest. Note that the "population" may not be a random sample of the entire population and may be selected according to observables; therefore, we will learn the effect of the treatment on the particular sub-population from which the sample is drawn. We will return to this issue. This experimental sample is then divided randomly into two groups: the treatment group ($N_T$ individuals) and the comparison (or control) group ($N_C$ individuals).

The treatment group then is exposed to the "treatment" (their treatment status is $T$) while the comparison group (treatment status $C$) is not. Then the outcome $Y$ is observed and compared for both treatment and comparison groups. For example, out of 100 schools, 50 are randomly chosen to receive textbooks, and 50 do not receive textbooks. The average treatment effect can then be estimated as the difference in empirical means of $Y$ between the two groups,

$$\hat{D} = \hat{E}[Y_i \mid T] - \hat{E}[Y_i \mid C],$$

where $\hat{E}$ denotes the sample average. As the sample size increases, this difference converges to

$$D = E[Y_i^T \mid T] - E[Y_i^C \mid C].$$

Since the treatment has been randomly assigned, individuals assigned to the treatment and control groups differ in expectation only through their exposure to the treatment. Had neither received the treatment, their outcomes would have been in expectation the same. This implies that the selection bias, $E[Y_i^C \mid T] - E[Y_i^C \mid C]$, is equal to zero. If, in addition, the potential outcomes of an individual are unrelated to the treatment status of any other individual (this is the "Stable Unit Treatment Value Assumption" (SUTVA) described in Angrist, Imbens, and Rubin (1996)),³ we have

$$E[Y_i \mid T] - E[Y_i \mid C] = E[Y_i^T - Y_i^C \mid T] = E[Y_i^T - Y_i^C],$$

the causal parameter of interest for treatment $T$.

The regression counterpart to obtain $\hat{D}$ is

$$Y_i = \alpha + \beta T + \epsilon_i, \tag{2}$$

where $T$ is a dummy for assignment to the treatment group. Equation (2) can be estimated with ordinary least squares, and it can easily be shown that $\hat{\beta}_{OLS} = \hat{E}(Y_i \mid T) - \hat{E}(Y_i \mid C)$.⁴

This result tells us that when a randomized evaluation is correctly designed and implemented, it provides an unbiased estimate of the impact of the program in the sample under study: this estimate is internally valid. There are of course many ways in which the assumptions in this simple set up may fail when randomized evaluations are implemented in the field in developing countries. This chapter describes how to correctly implement randomized evaluations so as to minimize such failures and how to correctly analyze and interpret the results of such evaluations, including in cases that depart from this basic set up.
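As a rough check of the two claims above (random assignment recovers the average effect, and the OLS coefficient on the treatment dummy equals the difference in sample means), the following sketch simulates a randomized textbook allocation. The data-generating process, the effect size of 5, and the function names are assumptions made for illustration only; the OLS slope is computed from the usual covariance-over-variance formula rather than with a regression library.

```python
import random
from statistics import fmean

def randomized_trial(n=100, effect=5.0, seed=1):
    """Hypothetical trial: half of n schools are drawn at random to get textbooks."""
    rng = random.Random(seed)
    treated_ids = set(rng.sample(range(n), n // 2))   # random assignment
    data = []
    for i in range(n):
        priority = rng.gauss(0.0, 1.0)                # unobserved, but balanced in expectation
        y_c = 50.0 + 4.0 * priority + rng.gauss(0.0, 3.0)
        t = 1 if i in treated_ids else 0
        data.append((t, y_c + effect * t))            # observed outcome Y_i
    return data

def difference_in_means(data):
    """Sample mean of Y in the treatment group minus sample mean in the comparison group."""
    return fmean(y for t, y in data if t == 1) - fmean(y for t, y in data if t == 0)

def ols_slope(data):
    """OLS estimate of beta in Y_i = alpha + beta * T + eps_i."""
    t_bar = fmean(t for t, _ in data)
    y_bar = fmean(y for _, y in data)
    cov = sum((t - t_bar) * (y - y_bar) for t, y in data)
    var = sum((t - t_bar) ** 2 for t, _ in data)
    return cov / var

data = randomized_trial(n=20000)
dm = difference_in_means(data)
beta = ols_slope(data)
print(f"difference in means = {dm:.3f}, OLS beta = {beta:.3f}")
```

With a large simulated sample the two estimates coincide up to floating-point error and lie close to the assumed effect; with only 100 schools, as in the example in the text, the estimate would be noisier, which is the subject of the later discussion of power.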

Before proceeding further, it is important to keep in mind what expression (1) means. What is being estimated is the overall impact of a particular program on an outcome, such as test scores, allowing other inputs to change in response to the program. It may be different from the impact of textbooks on test scores keeping everything else constant.

To see this, assume that the production function for the outcome of interest $Y$ is of the form $Y = f(I)$, where $I$ is a vector of inputs, some of which can be directly varied using policy tools, others of which depend on household or firm responses. This relationship is structural; it holds regardless of the actions of individuals or institutions affected by the policy changes. The impact of any given input in the vector $I$ on academic achievement that is embedded in this relationship is a structural parameter.

Consider a change in one element of the vector $I$, call it $t$. One estimate of interest is how changes in $t$ affect $Y$ when all other explanatory variables are held constant, i.e., the partial derivative of $Y$ with respect to $t$. A second estimate of interest is the total derivative of $Y$ with respect to $t$, which includes changes in other inputs in response to the change in $t$. In general, if other inputs are complements to or substitutes for $t$, then exogenous changes in $t$ will lead to changes in other inputs $j$. For example, parents may respond to an educational program by increasing their provision of home-supplied educational inputs. Alternatively, parents may consider the program a substitute for home-supplied inputs and decrease their supply. For example, Das, Krishnan, Habyarimana, and Dercon (2004) and others suggest that household educational expenditures and governmental non-salary cash grants to schools are substitutes, and that households cut back on expenditures when the government provides grants to schools.

³ This rules out externalities, that is, the possibility that treatment of one individual affects the outcomes of another. We address this issue in Section 6.3.

⁴ Note that estimating equation (2) with OLS does not require us to assume a constant treatment effect. The estimated coefficient is simply the average treatment effect.

In general, the partial and total derivatives could be quite different, and both may be of interest to policymakers. The total derivative is of interest because it shows what will happen to outcome measures after an input is exogenously provided and agents re-optimize. In effect it tells us the "real" impact of the policy on the outcomes of interest. But the total derivative may not provide a measure of overall welfare effects. Again consider a policy of providing textbooks to students where parents may respond to the policy by reducing home purchases of textbooks in favor of some consumer good that is not in the educational production function. The total derivative of test scores or other educational outcome variables will not capture the benefits of this re-optimization. Under some assumptions, however, the partial derivative will provide an appropriate guide to the welfare impact of the input.
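The distinction between the two derivatives can be written out explicitly. The block below is a sketch under added assumptions: the chain-rule expression treats every other input $j$ as a differentiable function of $t$, and the linear example, with symbols $a$, $b$, $c$, $h$ and $h_0$, is hypothetical and not taken from the chapter.

```latex
% Total derivative of Y = f(I) with respect to the policy input t,
% allowing every other input j to respond to t:
\frac{dY}{dt} \;=\; \frac{\partial f}{\partial t}
  \;+\; \sum_{j \neq t} \frac{\partial f}{\partial j}\,\frac{dj}{dt}

% Hypothetical linear example: test scores depend on school-provided
% textbooks t and home-supplied inputs h, and households cut h by c
% units for every unit of t they receive:
Y = a\,t + b\,h, \qquad h = h_0 - c\,t
\quad\Longrightarrow\quad
\frac{\partial Y}{\partial t} = a, \qquad \frac{dY}{dt} = a - b\,c
```

In this example the randomized evaluation would recover $a - bc$, which is smaller than the structural parameter $a$ whenever home inputs are productive ($b > 0$) and are crowded out ($c > 0$).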

Results from randomized evaluations (and from other internally valid program evaluations) provide reduced form estimates of the impacts of the treatment, and these reduced form parameters are total derivatives. Partial derivatives can only be obtained if researchers specify the model that links various inputs to the outcomes of interest and collect data on these intermediate inputs. This underscores that to estimate the welfare impact of a policy, randomization needs to be combined with theory, a topic to which we return in Section 8.

2.3 Other Methods to Control for Selection Bias

Aside from randomization, other methods can be used to address the issue of selection bias. The objective of any of these methods is to create comparison groups that are valid under a set of identifying assumptions. The identifying assumptions are not directly testable, and the validity of any particular study depends instead on how convincing the assumptions appear. While it is not the objective of this chapter to review these methods in detail,⁵ in this section we discuss them briefly in relation to randomized evaluations.⁶

2.3.1 Controlling for Selection Bias by Controlling for Observables

The first possibility is that, conditional on a set of observable variables $X$, the treatment can be considered to be as good as randomly assigned. That is, there exists a vector $X$ such that

$$E[Y_i^C \mid X, T] - E[Y_i^C \mid X, C] = 0. \tag{3}$$

A case where this is obviously true is when the treatment status is randomly assigned conditional on a set of observable variables $X$. In other words, the allocation of observations to treatment or comparison is not unconditionally randomized, but within each stratum defined by the interactions of the variables in the set $X$, the allocation was done randomly. In this case, after conditioning on $X$, the selection bias disappears. We will discuss in Section 6.1 how to analyze the data arising from such a set-up. In most observational settings, however, there is no explicit randomization at any point, and one must assume that appropriately controlling for the observable variables is sufficient to eliminate selection bias.

There are different approaches to control for the set of variables $X$. A first approach, when the dimension of $X$ is not too large, is to compute the difference between the outcomes of the treatment and comparison groups within each cell formed by the various possible values of $X$. The treatment effect is then the weighted average of these within-cell effects (see Angrist (1998) for an application of this method to the impact of military service). This approach (fully non-parametric matching) is not practical if $X$ has many variables or includes continuous variables. In this case, methods have been designed to implement matching based on the "propensity score," or the probability of being assigned to the treatment conditional on the variables $X$.⁷ A third approach is to control for $X$, parametrically or non-parametrically, in a regression framework. As described in the references cited, matching and regression techniques make different assumptions and estimate somewhat different parameters. Both, however, are only valid on the underlying assumption that, conditional on the observable variables that are controlled for, there is no difference in potential outcomes between treated and untreated individuals. For this to be true, the set of variables $X$ must contain all the relevant differences between the treatment and control groups. This assumption is not testable and its plausibility must be evaluated on a case-by-case basis. In many situations, the variables that are controlled for are just those that happen to be available in the data set, and selection (or "omitted variable") bias remains an issue, regardless of how flexibly the control variables are introduced.

⁵ Much fuller treatments of these subjects can be found, notably in this and other handbooks (Angrist and Imbens 1994, Card 1999, Imbens 2004, Todd 2006, Ravallion 2006).

⁶ We do not discuss instrumental variables estimation in this section, since its uses in the context of randomized evaluation will be discussed in Section 6.2, and the general principle discussed there will apply to the use of instruments that are not randomly assigned.

⁷ The result that controlling for the propensity score leads to an unbiased estimate of the treatment effect under assumption (3) is due to Rosenbaum and Rubin (1983); see the chapters by Todd (2006) and Ravallion (2006) in this volume for a discussion of matching.

2.3.2 Regression Discontinuity Design Estimates

A very interesting special case of controlling for an observable variable occurs in circumstances where the probability of assignment to the treatment group is a discontinuous function of one or more observable variables. For example, a microcredit organization may limit eligibility for loans to women living in households with less than one acre of land; students may pass an exam if their grade is at least 50%; or class size may not be allowed to exceed 25 students. If the impact of any unobservable variable correlated with the variable used to assign treatment is smooth, the following assumption is reasonable for a small $\epsilon$:

$$E[Y_i^C \mid T, X < \bar{X} + \epsilon, X > \bar{X} - \epsilon] = E[Y_i^C \mid C, X < \bar{X} + \epsilon, X > \bar{X} - \epsilon], \tag{4}$$

where $X$ is the underlying variable and $\bar{X}$ is the threshold for assignment. This assumption implies that within some $\epsilon$-range of $\bar{X}$, the selection bias is zero, and it is the basis of "regression discontinuity design estimates" (Campbell 1969; see the Todd (2006) chapter in this volume for further details and references). The idea is to estimate the treatment effect using individuals just below the threshold as a control for those just above.
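The comparison of observations just above and just below a threshold can be sketched as follows. Everything in this example is assumed for illustration: the simulated exam data, the threshold of 50, the effect size of 3, the linear dependence of the untreated outcome on the grade, and the simple difference in window means used as the estimator (applied work commonly fits local regressions on each side of the cutoff instead).

```python
import random
from statistics import fmean

def simulate_exam(n=100000, threshold=50.0, effect=3.0, seed=2):
    """Hypothetical data: students with grade >= threshold pass and are 'treated'."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        grade = rng.uniform(0.0, 100.0)                     # underlying variable X
        treated = grade >= threshold                        # strict assignment rule
        y = 10.0 + 0.2 * grade + rng.gauss(0.0, 1.0)        # untreated outcome, smooth in X
        rows.append((grade, y + (effect if treated else 0.0)))
    return rows

def rd_estimate(rows, threshold, eps):
    """Mean outcome within eps above the threshold minus mean within eps below it."""
    above = [y for x, y in rows if threshold <= x < threshold + eps]
    below = [y for x, y in rows if threshold - eps < x < threshold]
    return fmean(above) - fmean(below)

rows = simulate_exam()
narrow = rd_estimate(rows, 50.0, 1.0)    # small epsilon: close to the assumed effect
wide = rd_estimate(rows, 50.0, 20.0)     # large epsilon: contaminated by the grade gradient
print(f"narrow window: {narrow:.2f}, wide window: {wide:.2f}")
```

The wide window mixes the effect of passing with the smooth effect of the grade itself, which is why assumption (4) is stated only for a small $\epsilon$.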

This design has become very popular with researchers working on program evaluation in developed countries, and many argue that it removes selection bias when assignment rules are indeed implemented. It has been less frequently applied by development economists, perhaps because it faces two obstacles that are prevalent in developing countries. First, assignment rules are not always implemented very strictly. For example, Morduch (1998) criticizes the approach of Pitt and Khandker (1998), who make implicit use of a regression discontinuity design argument for the evaluation of Grameen Bank clients. Morduch shows that despite the official rule of not lending to households owning more than one acre of land, credit officers exercise their discretion. There is no discontinuity in the probability of borrowing at the one acre threshold. The second problem is that the officials implementing a program may be able to manipulate the level of the underlying variable that determines eligibility, which makes an individual's position above or below the threshold endogenous. In this case, it cannot be argued that individuals on either side of the cutoff have similar potential outcomes and equation (4) fails to hold.

2.3.3 Difference-in-Differences and Fixed Effects

Difference-in-difference estimates use pre-period differences in outcomes between treatment and control group to control for pre-existing differences between the groups, when data exist both before and after the treatment. Denote by $Y_1^T$ ($Y_1^C$) the potential outcome "if treated" ("if untreated") in period 1, after the treatment occurs, and $Y_0^T$ ($Y_0^C$) the potential outcome "if treated" ("if untreated") in period 0, before the treatment occurs. Individuals belong to group $T$ or group $C$. Group $T$ is treated in period 1 and untreated in period 0. Group $C$ is never treated.

The difference-in-differences estimator is

$$DD = \left[\hat{E}[Y_1^T \mid T] - \hat{E}[Y_0^C \mid T]\right] - \left[\hat{E}[Y_1^C \mid C] - \hat{E}[Y_0^C \mid C]\right]$$

and provides an unbiased estimate of the treatment effect under the assumption that $\left[E[Y_1^C \mid T] - E[Y_0^C \mid T]\right] = \left[E[Y_1^C \mid C] - E[Y_0^C \mid C]\right]$, i.e., that absent the treatment the outcomes in the two groups would have followed parallel trends.
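A small simulation can make the role of the parallel-trends assumption concrete. The sketch below assumes a hypothetical two-period panel in which the two groups start at different levels but share a common trend; the group levels, the trend of 1.5, the effect of 2, and the function names are all illustrative choices rather than anything specified in the text.

```python
import random
from statistics import fmean

def simulate_panel(n=20000, effect=2.0, trend=1.5, seed=3):
    """Hypothetical two-period data: groups differ in level but share a common trend."""
    rng = random.Random(seed)
    rows = []                                             # (group, period, outcome)
    for _ in range(n):
        group = "T" if rng.random() < 0.5 else "C"
        level = 10.0 if group == "T" else 6.0             # pre-existing difference
        y0 = level + rng.gauss(0.0, 1.0)                  # period 0: nobody is treated
        y1 = level + trend + rng.gauss(0.0, 1.0)          # period 1 outcome if untreated
        if group == "T":
            y1 += effect                                  # group T is treated in period 1
        rows.append((group, 0, y0))
        rows.append((group, 1, y1))
    return rows

def cell_mean(rows, group, period):
    """Sample mean of the outcome for one group in one period."""
    return fmean(y for g, p, y in rows if g == group and p == period)

def diff_in_diff(rows):
    """Change over time in group T minus change over time in group C."""
    return (cell_mean(rows, "T", 1) - cell_mean(rows, "T", 0)) - (
        cell_mean(rows, "C", 1) - cell_mean(rows, "C", 0))

rows = simulate_panel()
dd = diff_in_diff(rows)
naive = cell_mean(rows, "T", 1) - cell_mean(rows, "C", 1)  # post-period comparison only
print(f"difference-in-differences = {dd:.2f}, naive post-period difference = {naive:.2f}")
```

Here the naive post-period comparison absorbs the pre-existing level difference, while the difference-in-differences estimate is close to the assumed effect; if the simulated trends differed across groups, the estimate would be biased by the difference in trends.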

Fixed effects generalizes difference-in-differences estimates when there is more than one time period or more than one treatment group. The fixed effects estimates are obtained by regressing the outcome on the control variable, after controlling for year and group dummies. Both difference-in-differences and fixed effect estimates are very common in applied work. Whether or not they are convincing depends on whether the assumption of parallel evolution of the outcomes in the absence of the treatment is convincing. Note in particular that if the two groups have very different outcomes before the treatment, the functional form chosen for how outcomes evolved over time will have an important influence on the results.

Comparing Experimental and Non-Experimental Estimates

2.4

A growing literature is taking advantage of randomized evaluation to estimate a program's impact using both experimental and non-experimental methods and then test whether the non-experimental estimates are biased in this particular case. LaLonde's seminal study found that many of the econometric procedures and comparison groups used in program evaluations did not yield accurate or precise estimates and that such econometric estimates often differ significantly from experimental results (LaLonde 1986). A number of subsequent studies have conducted such analysis focusing on the performance of propensity score matching (Heckman, Ichimura, and Todd 1997, Heckman, Ichimura, Smith, and Todd 1998, Heckman, Ichimura, and Todd 1998, Dehejia and Wahba 1999, Smith and Todd 2005). Results are mixed, with some studies finding that non-experimental methods can replicate experimental results quite well and others being more negative.

A more comprehensive review by Glazerman, Levy, and Myers (2003) compared experimental and non-experimental methods in studies of welfare, job training, and employment service programs in the United States. Synthesizing the results of twelve design replication studies, they found that retrospective estimators often produce results dramatically different from randomized evaluations and that the bias is often large. They were unable to identify any strategy that could consistently remove bias and still answer a well-defined question.

Cook, Shadish, and Wong (2006) compare randomized and non-randomized studies, most of which were implemented in educational settings, and arrive at a more nuanced conclusion. They find that experimental and non-experimental results are similar when the non-experimental technique is a regression discontinuity or "interrupted time series" design (difference-in-differences with long series of pre-data), but that matching or other ways to control for observables do not produce similar results. They conclude that well designed quasi-experiments (regression discontinuity designs in particular) may produce results that are as convincing as those of a well-implemented randomized evaluation but that "You cannot put right by statistics what you have done wrong by design." While these findings are extremely interesting, the level of control achieved by the quasi-experiments reviewed (in terms, for example, of strictly following threshold rules) is such that for developing countries these designs may actually be less practical than randomized evaluations.

We are not aware of any systematic review of similar studies in developing countries, but a number of comparative studies have been conducted. Some suggest omitted variables bias is a significant problem; others find that non-experimental estimators may perform well in certain contexts.

Buddelmeyer and Skoufias (2003) and Diaz and Handa (2006) both focus on PROGRESA, a poverty alleviation program implemented in Mexico in the late 1990s with a randomized design. Buddelmeyer and Skoufias (2003) use randomized evaluation results as the benchmark to examine the performance of regression discontinuity design. They find the performance of such a design to be good, suggesting that if policy discontinuities are rigorously enforced, regression discontinuity design frameworks can be useful. Diaz and Handa (2006) compare experimental estimates to propensity score matching estimates, again using the PROGRESA data. Their results suggest that propensity score matching does well when a large number of control variables is available.

In contrast, several studies in Kenya find that estimates from prospective randomized evaluations can often be quite different from those obtained using a retrospective evaluation in the same sample, suggesting that omitted variable bias is a serious concern. Glewwe, Kremer, Moulin, and Zitzewitz (2004) study an NGO program that randomly provided educational flip charts to primary schools in Western Kenya. Their analysis suggests that retrospective estimates seriously overestimate the charts' impact on student test scores. They found that a difference-in-differences approach reduced but did not eliminate this problem.

Miguel and Kremer (2003) and Duflo, Kremer, and Robinson (2006) compare experimental and non-experimental estimates of peer effects in the case of the take-up of deworming drugs and fertilizer adoption, respectively. Both studies found that the individual's decision is correlated with the decisions of their contacts. However, as Manski (1993) has argued, this could be due to many factors other than peer effects, in particular, to the fact that these individuals share the same environment. In both cases, randomization provided exogenous variation in the chance that some members of a particular network adopted the innovation (deworming or fertilizer, respectively). The presence of peer effects can then be tested by comparing whether others in the network were then more likely to adopt as well (we will come back to this specific method of evaluating peer effects in section 6.3). Both studies find experimental results markedly different from the non-experimental results: Duflo, Kremer, and Robinson (2006) find no learning effect, while Miguel and Kremer (2003) find negative peer effects. Furthermore, Miguel and Kremer (2003) run on the non-experimental data a number of specification checks that have been suggested in the peer effects literature, and all these checks support the conclusion that peer effects are in fact positive, suggesting that such checks may not be sufficient to erase the specification bias.

Future research along these lines would be valuable, since comparative studies can be used to assess the size and prevalence of biases in retrospective estimates and provide more guidance on which methodologies are the most robust. However, as discussed below, these types of studies have to be done with care in order to provide an accurate comparison between different methods. If the retrospective portions of these comparative studies are done with knowledge of the experimental results, there is a natural tendency to select from plausible comparison groups and methodologies in order to match experimental estimates. To address these concerns, future researchers should conduct retrospective evaluations before the results of randomized evaluations are released or conduct blind retrospective evaluations without knowledge of the results of randomized evaluations or other retrospective studies.

2.5 Publication Bias

2.5.1 Publication bias in non-experimental studies

Uncertainty over bias in the reported results from non-experimental studies is compounded by publication bias, which occurs when editors, reviewers, researchers, or policymakers have a preference for results that are statistically significant or support a certain view. In many cases, as we just reviewed, researchers will have many possible choices of how to specify empirical models, and many of these might still be subject to remaining omitted variable bias.

Consider an example in which the true treatment effect is zero, but each non-experimental technique yields an estimated treatment effect equal to the true effect plus an omitted variable bias term that is itself a normally distributed random variable with mean zero. Unfortunately, what appears in the published literature may not reflect typical results from plausible specifications, which would be centered on zero, but may instead be systematically biased.

In any study, a number of choices will need to be made about how the analysis would most appropriately be conducted, which method should be used, which control variable should be introduced, which instrumental variable to use. There will often be legitimate arguments for a variety of different alternatives. Researchers focus their time and effort on completing studies that seem to produce statistically significant results; those that do not end up in the "file drawer."
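The stylized example of a zero true effect can be simulated. The sketch below is an illustration only and rests on assumptions that are not in the text: every specification has a known standard error of 1, the omitted variable bias term has standard deviation 1, and only estimates significant at the two-sided 5% level are "published".

```python
import random
from statistics import mean

random.seed(0)  # fixed seed so the sketch is reproducible

TRUE_EFFECT = 0.0
STANDARD_ERROR = 1.0  # assumed known and identical across specifications
CRITICAL_Z = 1.96     # two-sided 5% threshold

# Each specification returns the true effect plus a mean-zero normal bias term.
estimates = [TRUE_EFFECT + random.gauss(0.0, 1.0) for _ in range(10_000)]

# Stylized file-drawer rule: only statistically significant estimates are published.
published = [b for b in estimates if abs(b / STANDARD_ERROR) > CRITICAL_Z]

share_published = len(published) / len(estimates)
mean_abs_all = mean(abs(b) for b in estimates)
mean_abs_published = mean(abs(b) for b in published)

print(f"share of specifications published: {share_published:.3f}")
print(f"mean |estimate|, all specifications: {mean_abs_all:.2f}")
print(f"mean |estimate|, published only: {mean_abs_published:.2f}")
```

Under these assumptions the full set of estimates is centered on zero, but every published estimate is large in absolute value, so a reader of the published record alone would see strongly positive and strongly negative effects of a program that does nothing.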

Some researchers may inappropriately mine various regression specifications for one that produces statistically significant results. Even researchers who do not deliberately search among specifications for those that yield significant results may do so inadvertently. Consider a researcher undertaking a retrospective study for which there are several potentially appropriate specifications, not all of which are known to the researcher before beginning the analysis. The researcher thinks of one specification and runs a series of regressions. If the results are statistically significant and confirm what the researcher expected to find, it is perhaps likely that he or she will assume the specification is appropriate and not spend much time considering possible alternatives. However, if the regression results are not statistically significant or go against what the researcher expected to find, he or she is perhaps more likely to spend substantial amounts of time considering other possible specifications. In addition to generating too many false positives in published papers, this type of specification searching probably leads to under-rejection of commonly held views.

Even if a researcher introduces no bias of this kind, the selection by journals of papers with significant results introduces another level of publication bias. Moreover, citation of papers with extreme results by advocates on one side or another of policy debates is likely to compound publication bias with citation bias. The cumulative result of this process is that even in cases when a program has no effect, strongly positive and/or strongly negative estimates are likely to be published and widely cited.

A growing body of available evidence suggests publication bias is a serious problem within the economics literature. DeLong and Lang (1992) devise a test to determine the fraction of un-rejected null hypotheses that are false. They note that under the null, the distribution of test statistics is known; their cumulative distributions are given by the marginal significance levels associated with them. For example, any test statistic has a 5% chance of falling below the value of the .05-significance level. They use this observation to examine whether the distribution of these test statistics conforms to what would be expected if a pre-specified fraction of the null hypotheses were in fact true. Using data from articles published in major economics journals, the authors find they can reject at the .05 level the null hypothesis that more than about one-third of un-rejected null hypotheses were true. Although the authors acknowledge several potential explanations for their findings, they argue publication bias provides the most important explanation.
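The observation underlying this test, that marginal significance levels are uniformly distributed when the null hypothesis is true, can be checked by simulation. The sketch below is not DeLong and Lang's procedure; it only illustrates the uniformity property, assuming standard normal test statistics and two-sided tests. The helper name `two_sided_p_value` is hypothetical.

```python
import math
import random


def two_sided_p_value(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


random.seed(1)  # fixed seed so the sketch is reproducible

# Test statistics drawn under a true null hypothesis.
z_stats = [random.gauss(0.0, 1.0) for _ in range(20_000)]
p_values = [two_sided_p_value(z) for z in z_stats]

# Under the null, the share of p-values at or below any level should be
# close to that level.
share_below = {
    level: sum(p <= level for p in p_values) / len(p_values)
    for level in (0.05, 0.25, 0.50)
}

for level, share in share_below.items():
    print(f"share of p-values <= {level:.2f}: {share:.3f}")
```

A published literature whose p-values depart sharply from this pattern, for instance with too few values just above conventional thresholds, is the kind of evidence such tests exploit.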

Statisticians have developed a meta-analysis framework to examine whether inferences are sensitive to publication bias. Hedges (1992) proposed a formal model of publication bias where tests that yield lower p-values are more likely to be observed. Ashenfelter, Harmon, and Oosterbeek (1999) apply Hedges's analytic framework to the literature on rates of return to education and find strong evidence that publication bias exists for instrumental variables (IV) estimates on the rate of return to education, which suggests that the often cited result that IV estimates of returns to education are larger than OLS estimates may just be an artifact of publication bias. Likewise, Card and Krueger (1995) find evidence of significant publication bias in the time-series minimum wage literature, leading to an over-reporting of significant results.

2.5.2 Randomization and publication bias

Some, though not all, of the problems of publication bias can be addressed by randomized evaluations. First, if a randomized evaluation is correctly implemented, there can be no question that the results, whatever they are, give us the impact of the particular intervention that was tested (subject to a known degree of sampling error). This implies that if results are unexpected, they have less chance of being considered the result of specification error and discarded. Miguel and Kremer's (2003) evaluation of the impact of network and peer effects on the take-up of deworming medicine, which we discussed above, provides an interesting example. Ex ante, the researchers probably expected children with more links to students in treatment schools to increase their uptake of deworming treatment in subsequent rounds of the program as they learned about the medicine's benefits. Instead, their experimental findings showed a significant effect in the opposite direction. Had they obtained these results in a retrospective study, most researchers would have assumed there was a problem with their data or specification and explored alternative specifications. The experimental design leaves little doubt that peer effects are in fact negative.

Second, in randomized evaluation the treatment and comparison groups are determined before a researcher knows how these choices will affect the results, limiting room for ex post discretion. There is usually still some flexibility ex post, including what variables to control for, how to handle subgroups, and how to deal with large numbers of possible outcome variables, which may lead to "cherry-picking" amongst the results. However, unlike omitted variable bias, which can become arbitrarily large, this ex post discretion is bounded by the ex ante design choices.

Nevertheless, "cherry-picking" particular outcomes, sites, or subgroups where the evaluation concluded that the program is effective provides a window through which publication biases can appear in randomized evaluations. This is why the FDA does not consider results from sub-group analysis in medical trials valid evidence for the effectiveness of a drug. Below, we discuss how to handle these issues.

Third, randomized evaluations can also partially overcome the file drawer and journal publication biases as their results are usually documented even if they suggest insignificant effects. Even when unpublished, they are typically circulated and often discussed in systematic reviews. This is because researchers discover whether the results are significant at a much later stage and are much less likely to simply abandon the results of an evaluation that has taken several years to conduct than they are to abandon the results of a quick dip into existing data to check out an idea. In addition, funding agencies typically require a report of how their money was spent regardless of outcomes.

Despite this, it would still be extremely useful to put institutions in place to ensure that negative as well as positive results of randomized evaluations are disseminated. Such a system is in place for medical trial results, and creating a similar system for documenting evaluations of social programs would help to alleviate the problem of publication bias. One way to help maintain such a system would be for grant-awarding institutions to require researchers to submit results from all evaluations to a centralized database. To avoid problems due to specification searching ex post, such a database should also include the salient features of the ex ante design (outcome variables to be examined, sub-groups to be considered, etc.) and researchers should have to report results arising from this main design. While researchers could still report results other than those that they initially had included in their proposal, these should be clearly sign-posted so that users are able to distinguish between them. This information would be useful from a decision-theoretic perspective (several positive, unbiased estimates that are individually statistically insignificant can produce significant meta-estimates) and would be available to policymakers and those seeking to understand the body of experimental knowledge regarding the effectiveness of certain policies.
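The point about meta-estimates can be made concrete with a small calculation. The sketch below pools hypothetical estimates by inverse-variance weighting, which is one standard way to combine results and is not a method prescribed by the text. It assumes the studies are independent, unbiased, and estimate the same underlying effect; the numbers are invented.

```python
import math


def pooled_estimate(estimates, std_errors):
    """Inverse-variance weighted average of estimates and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * b for w, b in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical results from four evaluations of similar programs.
estimates = [1.0, 1.2, 0.8, 1.0]
std_errors = [0.7, 0.8, 0.6, 0.7]

# z-statistic of each study taken on its own.
individual_z = [b / se for b, se in zip(estimates, std_errors)]

pooled, pooled_se = pooled_estimate(estimates, std_errors)
pooled_z = pooled / pooled_se

print("individual z-statistics:", [round(z, 2) for z in individual_z])
print(f"pooled estimate: {pooled:.2f} (s.e. {pooled_se:.2f}), z = {pooled_z:.2f}")
```

None of the four hypothetical studies clears the conventional 1.96 threshold alone, but the pooled estimate does. If the individually insignificant results had stayed in the file drawer, this combined evidence would never be available.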

3 Incorporating Randomized Evaluation in a Research Design

In the rest of this chapter, we discuss how randomized evaluations can be carried out in practice. In this section, we focus on how researchers can introduce randomization in field research in developing countries. Perhaps the most widely used model of randomized research is that of clinical trials conducted by researchers working in laboratory conditions or with close supervision. While there are examples of research following similar templates in developing countries,[8] most of the projects involving randomization differ from this model in several important ways. They are in general conducted with implementation partners (governments, NGOs, or private companies) who are implementing real-world programs and are interested in finding out whether they work or how to improve them. In some cases, randomization can then be included during pilot projects, in which one or several versions of a program are tried out. There are also cases where randomization can be included outside pilot projects, with minimal disruption to how the program is run, allowing the evaluation of on-going projects.

Section 3.1 discusses the possible partners for evaluation. Section 3.2 discusses how randomization can be introduced in the context of pilot projects and how these pilot projects have the potential to go beyond estimating only program effects to test specific economic hypotheses. Section 3.3 discusses how randomization can be introduced outside pilot projects.

[8] The best example is probably the "Work and Iron Supplementation Experiment" (see Thomas, Frankenberg, Friedman, Habicht, et al. (2003)), where households were randomly assigned to receive either iron supplementation or a placebo for a year, and where compliance with the treatment was strictly enforced.

3.1 Partners

Unlike conducting laboratory experiments, which economists can do on their own, introducing randomization in real-world programs almost always requires working with a partner who is in charge of actually implementing the program.

Governments are possible partners. While government programs are meant to serve the entire eligible population, pilot programs are sometimes run before the programs are scaled up. These programs are limited in scope and can sometimes be evaluated using a randomized design. Some of the most well-known early social experiments in the US (for example, the Job Training Partnership Act and the Negative Income Tax) were conducted following this model.

There are also a few examples from developing countries. PROGRESA (now called Oportunidades) (also discussed in the Todd (2006) and Parker, Rubalcava, and Teruel (2005) chapters in this volume) is probably the best known example of a randomized evaluation conducted by a government. The program offers grants, distributed to women, conditional on children's school attendance and preventative health measures (nutrition supplementation, health care visits, and participation in health education programs). In 1998, when the program was launched, officials in the Mexican government made a conscious decision to take advantage of the fact that budgetary constraints made it impossible to reach the 50,000 potential beneficiary communities of PROGRESA all at once, and instead started with a randomized pilot program in 506 communities. Half of those were randomly selected to receive the program, and baseline and subsequent data were collected in the remaining communities.

The task of evaluating the program was given to academic researchers through the International Food Policy Research Institute. The data was made accessible to many different people, and a number of papers have been written on its impact (most of them are accessible on the IFPRI Web site). The evaluations showed that it was effective in improving health and education (see in particular Gertler and Boyce (2001) and Schultz (2004)).

The PROGRESA pilot had an impressive demonstration effect. The program was continued and expanded in Mexico despite the subsequent change in government, and expanded in many other Latin American countries, often including a randomized pilot component. Some examples are the Family Allowance Program (PRAF) in Honduras (International Food Policy Research 2000), a conditional cash transfer program in Nicaragua (Maluccio and Flores 2005), a conditional cash transfer program in Ecuador (Schady and Araujo 2006), and the Bolsa Alimentacao program in Brazil.

These types of government-sponsored (or organized) pilots are becoming more frequent in developing countries than they once were. For example, such government pilot programs have been conducted in Cambodia (Bloom, Bhushan, Clingingsmith, Hung, King, Kremer, Loevinsohn, and Schwartz 2006), where the impact of public-private partnership on the quality of health care was evaluated in a randomized evaluation performed at the district level. In some cases, governments and researchers have worked closely on the design of such pilots. In Indonesia, Olken (2005) collaborated with the World Bank and the government to design an experiment where different ways to fight corruption in locally administered development projects were tried in different villages. In Rajasthan, India, the police department is working with researchers to pilot a number of reforms to improve police performance and limit corruption across randomly selected police stations in nine districts.

Randomized evaluations conducted in collaboration with governments are still relatively rare. They require cooperation at high political levels, and it is often difficult to generate the consensus required for successful implementation. The recent spread of randomized evaluations in development owes much to a move towards working with non-governmental organizations (NGOs). Unlike governments, NGOs are not expected to serve entire populations, and even small organizations can substantially affect budgets for households, schools, or health clinics in developing countries. Many development-focused NGOs frequently seek out new, innovative projects and are eager to work with researchers to test new programs or to assess the effectiveness of existing operations. In recent years, numerous randomized evaluations have been conducted with such NGOs, often with evaluation-specific sponsorship from research organizations or foundations. A number of examples are covered throughout this chapter.

Finally, for-profit firms have also started getting interested in randomized evaluations, often with the goal of understanding better how their businesses work and thus to serve their clients better and increase their profits. For example, Karlan and Zinman (2006b), Karlan and Zinman (2005a), Karlan and Zinman (2006a) and Bertrand, Karlan, Mullainathan, Shafir, and Zinman (2005) worked with a private consumer lender in South Africa. Many micro-finance organizations are now working with researchers to understand the impact of the salient features of their products or to design products that serve their clients better.[9]

What are the advantages of different partners? As mentioned, NGOs are often more willing to partner on an evaluation, so they are frequently the only available partner. When possible, working with governments offers several advantages. First, it can allow for much wider geographic scope. Second, the results may be more likely to feed into the policy process. Third, there will be less concern about whether the results are dependent on a particular (and impossible to replicate) organizational culture. However, NGOs and firms offer a much more flexible environment, where it can be easier for the researchers to monitor the implementation of the research design. One of the main benefits of the move to working with NGOs has been an increased scope for testing a wider range of questions, implementing innovative programs, and allowing for greater input from researchers into the design of programs, especially in the pilot stage.

3.2 Pilot projects: From program evaluations to field experiments

A natural window to introduce randomization is before the program is scaled up, during the pilot phase. This is an occasion for the implementation partner to rigorously assess the effectiveness of the program and can also be a chance to improve its design.

Many early field randomized studies (both in the US and in developing countries) simply sought to test the effectiveness of particular programs. For example, the PROGRESA pilot program implemented the program in the treatment villages and did not introduce it in the comparison villages. The evaluation is only able to say whether, taken together, all the components of PROGRESA are effective in increasing health and education outcomes. It cannot disentangle the various mechanisms at play without further assumptions.

Such evaluations are still very useful in measuring the impact of policies or programs before they are scaled up, but increasingly, researchers have been using such pilot programs to go beyond the simple question of whether a particular program works or not. They have helped their partners design programs or interventions with specific theories in mind. The programs are designed to help solve the partner's practical problems but they also serve as the test for the theories. A parallel movement has also happened in developed countries, fuelled by the concerns on the external validity of laboratory experiments (see Harrison and List (2004) for a review of this literature).

These pilot programs allow standard "program evaluation" to transform itself into "field experiments", in the sense that both the implementing agency and the researchers are experimenting together to find the best solution to a problem (Duflo 2006). There is an explosion of such work in development economics.[10] In practice, the distinction between "simple" program evaluation and field experiment is of course too stark: there is a wide spectrum of work ranging from the most straightforward comparison between one treatment and one comparison group for a program, to evaluation involving a large number of groups allowing researchers to test very subtle hypotheses in the field.

Here we mention only two examples which illustrate well the power of creative designs.

[9] See the research coordinated by the Centre for Micro Finance in India, for many examples.

Ashraf, Karlan, and Yin (2006) set out to test the importance of time-inconsistent ("hyperbolic") preferences. To this end they designed a commitment savings product for a small rural bank in the Philippines. The rural bank was interested in participating in a program that had the potential to increase savings. Individuals could restrict the access to the funds they deposited in the accounts until either a given maturity or a given amount of money was saved. Relative to standard accounts, the accounts carried no advantage other than this feature. The product was offered to a randomly selected half of 1,700 former clients of the bank. The other half of the individuals were assigned either to a pure comparison group or to a group that was visited and given a speech reminding them of the importance of savings. This group allowed the researchers to test whether the simple fact of discussing savings is what encourages clients to save, rather than the availability of a time-commitment device. Having these two separate groups was possible only in the context of a pilot with a relatively flexible organization.

Duflo, Kremer, and Robinson (2006) evaluated a series of different interventions to understand the determinants of the adoption of fertilizer in Western Kenya. The design of the interventions made it possible to test some of the standard hypotheses of the hindrance to the adoption of new technology. Field demonstrations with treatment and control plots were conducted to evaluate the profitability of fertilizer in the local conditions. Because the farmers were randomly selected, those field trials also allowed them to study the impact of information provision and the channels of information transmission; other ways to provide information (starter kits, school based demonstrations) were also examined; finally, financing constraints and difficulties in saving were also explored, with interventions helping farmers to buy fertilizer at the time when they have most money in the field.

[10] Many of these very recent or ongoing studies are reviewed in the review articles mentioned in the introduction.

3.3 Alternative Methods of Randomization

The examples we have looked at so far are in one respect similar to classic clinical trials: in all cases the randomized study was introduced concurrent with a new program and the sample was randomly allocated into one or more treatment groups and a comparison group that never received the treatment. However, one of the innovations of recent work is to realize that there are many different ways to introduce an element of randomization into programs. It is often possible to introduce randomization into existing programs with minimal disruption. This has spurred rapid growth in the use of randomized studies by development economists over the last ten years. In this section we run through the four key methods (oversubscription, phase-in, within-group randomization, and encouragement design) for introducing randomization into new and existing programs.

3.3.1 Oversubscription Method

A natural opportunity for introducing randomization occurs when there are limited resources or implementation capacity and demand for a program or service exceeds supply. In this case, a natural and fair way to ration resources is to select those who will receive the program by lottery among eligible candidates.
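A lottery among eligible candidates is simple to implement and to document. The sketch below is one possible way to do it, not a procedure taken from any of the studies cited; the applicant identifiers, the number of slots, and the seed are all hypothetical. Recording the seed lets the draw be reproduced and audited later.

```python
import random


def run_lottery(eligible_ids, slots, seed):
    """Randomly pick `slots` winners; the remaining applicants form the comparison group."""
    if slots > len(eligible_ids):
        raise ValueError("more slots than eligible candidates: no rationing is needed")
    rng = random.Random(seed)
    # Sort first so the draw depends only on the seed, not on input order.
    winners = set(rng.sample(sorted(eligible_ids), slots))
    treatment = sorted(winners)
    comparison = sorted(set(eligible_ids) - winners)
    return treatment, comparison


# Hypothetical oversubscribed program: 200 eligible applicants, 80 places.
applicants = [f"applicant_{i:03d}" for i in range(200)]
treatment, comparison = run_lottery(applicants, slots=80, seed=2006)

print(f"{len(treatment)} applicants offered the program")
print(f"{len(comparison)} applicants in the comparison group")
```

Because every eligible applicant has the same chance of selection, the two groups differ only by chance at baseline, and the comparison applies to the population of eligible applicants who entered the lottery.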

Such a method was used to ration the allocations of school vouchers in Colombia and the resulting randomization of treatment and comparison groups allowed for a study to accurately assess the impact of the voucher program (Angrist, Bettinger, Bloom, King, and Kremer). Karlan and Zinman evaluated the impact of expanded consumer credit in South Africa by working with a lender who randomly approved some marginal loan applications that would normally have been rejected. All applicants who would normally have been approved received loans, and those who were well below the cutoff were rejected. Such an evaluation was only possible because the experimental design caused minimal disruption to the bank's normal business activities. When interpreting these results, one must be careful to keep in mind that they only apply to those "marginal" borrowers or, more generally, to the population over which assignment to the treatment group was truly random.

3.3.2 Randomized Order of Phase-In

Financial and administrative constraints often lead NGOs to phase-in programs over time, and randomization will often be the fairest way of determining the order of phase-in. Randomizing the order of phase-in can allow evaluation of program effects in contexts where it is not acceptable for some groups or individuals to receive no support. In practical terms, it can facilitate continued cooperation by groups or individuals that have randomly been selected as the comparison group. As such, where logistics permit, randomization of phase-in may be