OVERVIEW

First set of lectures: Basics of Brownian motion, Stochastic Differential Equations, Ito calculus, connection with jump processes, Fokker-Planck equations and related PDE's

References:

Oksendal, Stochastic Differential Equations: An Introduction with Applications

Schuss, Introduction to Stochastic Differential Equations

Gardiner, Handbook of Stochastic Methods for Physics and Chemistry

Second set of lectures: Asymptotic approximations, including large number of events, different time scales, small noise, and stochastic averaging

References:

Stuart and Pavliotis, Multiscale methods: Homogenization and Averaging

Bender and Orszag, Advanced Mathematical Methods for Scientists and Engineers

(Also references with specific examples)

1 From Einstein to Ito

Motivating Examples

1.1 Basic stochastic models: continuous time and state space

Characteristics of the noise:

Example 1: Motion of a Brownian particle:

mxtt = −ηxt + X

ma = F

12

Random

fluctuations

(1)

0.5

10

0.4

8

X?

6

0.3

4

0.2

2

x,v

w

x

0

0.1

-2

0

v

-4

-0.1

-6

-8

0

50

100

t

150

200

-0.2

0

50

100

t

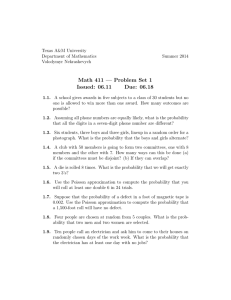

Figure 1: Realization for noise process, particle position, and velocity

2

150

200

Langevin gave an alternative (heuristic, physically motivated) derivation to that of Einstein.

m = mass
η = viscous drag
X = irregular fluctuations, with $\langle X \rangle = 0$
T = temperature

From statistical mechanics: $\left\langle \tfrac{1}{2} m v^2 \right\rangle = \tfrac{1}{2} m \langle x_t^2 \rangle = \tfrac{kT}{2}$ as t → ∞ (k is Boltzmann's constant).

Then averaging the equation for x gives $\langle x \rangle \to x_0$ as t → ∞.

Note: we are averaging over realizations, $\langle f(x) \rangle = \int f(x)\,p(x)\,dx$ for p(x) the (stationary) density of x. The variation about the initial condition has mean zero: $E[x - x_0] = \langle x - x_0 \rangle = 0$.

Now let's look at the variance E[x²] (take x0 = 0 for simplicity). What is E[x²]?

Multiply the equation of motion by x and integrate (assume X is "nice"; but what is X, the random part?):

$m x x_{tt} = -\eta x x_t + X \cdot x$   (2)

$\Rightarrow \frac{m}{2}\left[(x^2)_{tt} - 2 (x_t)^2\right] = -\frac{\eta}{2}(x^2)_t + X \cdot x$   (3)

Averaging over realizations and using the result for $\langle \tfrac12 m v^2 \rangle$ gives

$\frac{m}{2}\langle x^2\rangle_{tt} = -\frac{\eta}{2}\langle x^2\rangle_t + kT + \langle X\rangle\langle x\rangle$   (4)

where the last term is zero since $\langle X \rangle = 0$, assuming independence of X and x.

Then, we integrate the equation for $\langle x^2\rangle_t$ to get

$\langle x^2\rangle_t = \frac{2kT}{\eta} + C e^{-\frac{\eta}{m} t}$   (5)

⇒ as t → ∞, the variance of the particle position satisfies

$\langle x^2\rangle \to \frac{2kT}{\eta}\, t$   (6)

Then the variance $\langle x^2 \rangle$ behaves as a constant times t.

Einstein had derived a similar result, looking for the probability density corresponding to diffusion (later).

Note that Langevin's derivation assumes that the usual rules of calculus apply. This is a big assumption, and it is not necessarily true.

Highlights for the behaviour of X

We consider the equation for v = x_t to look more closely at the implications for the behavior of the noisy term X. What are these fluctuations?

Write the equations of motion of a Brownian particle:

$m v_t = -\eta v + X$   (7)

Formal solution for v (assuming X is a "nice" function):

$v = v_0 e^{-\frac{\eta}{m} t} + \frac{1}{m}\int_0^t e^{-\frac{\eta}{m}(t-s)} X(s)\,ds$   (8)

so we can consider the variance of v about its mean:

$\left\langle \left(v - v_0 e^{-\frac{\eta}{m}t}\right)^2\right\rangle = \frac{1}{m^2}\left\langle\left(\int_0^t e^{-\frac{\eta}{m}(t-s)}X(s)\,ds\right)^2\right\rangle$   (9)

Let's now compare the average of this term ($\int \ldots ds$) to the result $\langle \tfrac12 m v^2 \rangle = \tfrac{kT}{2}$.

Thinking of the integral as the limit of a sum, and taking X(s) ≈ Δb/Δs, we write

$\int_0^t e^{-\frac{\eta}{m}(t-s)} X(s)\,ds = \lim_{N\to\infty} \sum_{j=1}^{N} e^{-\frac{\eta}{m}(t-j\Delta s)}\,\Delta b_j$   (10)

(we use "b" for Brownian motion, which we see later)

That is, we view X as the derivative of random fluctuations (which does not exist as Δs → 0).

Assuming the Δb_j are independent (independent increments in the random process) and $\langle \Delta b_j^2 \rangle = q\,\Delta s$ yields

$\left\langle\left(\sum_{j=1}^{N} e^{-\frac{\eta}{m}(t-j\Delta s)}\,\Delta b_j\right)^2\right\rangle = \sum_{j=1}^{N} e^{-\frac{2\eta}{m}(t-j\Delta s)}\,q\,\Delta s + \sum_{j\neq k} (\ldots)\,\langle \Delta b_j\,\Delta b_k\rangle$   (11)

For independent increments, the last term vanishes, yielding, as Δs → 0, an integral

$\int_0^t e^{-\frac{2\eta}{m}(t-s)}\,q\,ds$

which can be easily evaluated as Const · $(1 - e^{-\frac{2\eta}{m} t})$. So this definition of X (the random part) is consistent with the physical behaviour of v.

Then $m^2 \langle v^2 \rangle$ = const as t → ∞. We can then choose q appropriately so that $m \langle v^2 \rangle = kT$.

So we have X(s) as a "derivative" of the random fluctuations, such that $\langle (X(s)\Delta s)^2 \rangle \propto \Delta s$, and, furthermore, we have assumed x is independent of X ($\langle Xx \rangle = \langle X \rangle \langle x \rangle$ previously). Essentially this follows from the assumption of independent increments of the random fluctuations.

These calculations illustrate several key mathematical properties of Brownian motion = standard Wiener process w(t):

• (Increments) The distribution of w(t + s) − w(t) is independent of t, and w(t + s) − w(t) is independent of w(t) − w(t − u) (u ≥ 0, s ≥ 0)

• Paths of w(t) are continuous (X(t) is undefined but, integrated, gives continuous paths for w)

• The joint probability distribution of (w(t1), w(t2), ..., w(tn)) is mean zero Gaussian, with E[w²(t)] = t (normalized). That is, w(t) is normally distributed with mean 0 and standard deviation √t, so Var(w(t)) = t.

Covariance (add and subtract the same quantity):

E[w(s)w(t)] = E([w(t) − w(s)]w(s)) + E[w²(s)] = 0 + s = min(t, s) (for s < t)
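The covariance property can be checked numerically. The following is a minimal sketch, assuming paths are built from independent Gaussian increments with standard deviation √Δt; the function names, step size, and sample count are illustrative choices, not part of the notes.

```python
import math
import random

def brownian_path(n_steps, dt, rng):
    """Return [w(0), w(dt), ..., w(n_steps*dt)] from independent N(0, sqrt(dt)) increments."""
    w = [0.0]
    for _ in range(n_steps):
        w.append(w[-1] + math.sqrt(dt) * rng.gauss(0.0, 1.0))
    return w

def estimate_covariance(s_index, t_index, dt, n_paths=20000, seed=0):
    """Monte Carlo estimate of E[w(s) w(t)] over n_paths independent realizations."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        w = brownian_path(t_index, dt, rng)
        total += w[s_index] * w[t_index]
    return total / n_paths

dt = 0.1
cov = estimate_covariance(s_index=5, t_index=10, dt=dt)  # s = 0.5, t = 1.0
print(cov)  # expected to be close to min(s, t) = 0.5, up to sampling error
```

The estimate fluctuates with the seed; the statistical error shrinks like one over the square root of the number of paths.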

Simulation of SDE's and Langevin-type stochastic models:

Langevin-type models (drift f, diffusion q):

$dx = f(x)\,dt + q\,dw$   (12)

We write this in differential form, rather than

$\frac{dx}{dt} = f(x) + q\,\frac{dw}{dt}$   (13)

since w is continuous but not differentiable.

General Stochastic Differential Equations (SDE):

$dx = A(x,t)\,dt + B(x,t)\,dw$   (14)

The behavior of the increment dw suggests a simple approach for numerical simulation:

Simulate at discrete time steps: t_j = jΔt

Discrete approximation for the derivative:

$\frac{dx}{dt} \approx \frac{x(t+\Delta t) - x(t)}{\Delta t}$

Behavior of dw (w is Gaussian with mean zero, variance t):

$dw \approx \Delta w \sim N(0, \sqrt{\Delta t})$   (mean 0, standard deviation √Δt)

Then,

$x(t+\Delta t) = x(t) + A(x(t),t)\,\Delta t + B(x(t),t)\,\sqrt{\Delta t}\,Z$

where Z ∼ N(0, 1).

In general, writing x_j = x(jΔt) we have an iterative procedure to get x_j for all j, starting with an initial condition x = x0 and computing to a time T = KΔt.

    x(1) = x0;
    for j = 1 : K − 1
        x(j+1) = x(j) + A(x(j), t(j)) ∆t + B(x(j), t(j)) sqrt(∆t) Z(j)     % Z(j) ∼ N(0,1)
    end

This is the Euler-Maruyama method. It is generally associated with the Ito interpretation

of SDE’s (later). This has implications for the dynamics via the interpretation of the noise

increments.
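The iteration above can be sketched in a few lines of Python. This is a hedged illustration rather than a reference implementation: the function name `euler_maruyama` and the example drift and diffusion (A = −x, B = 0.5) are assumptions chosen only for demonstration.

```python
import math
import random

def euler_maruyama(A, B, x0, T, K, rng):
    """Integrate dx = A(x,t) dt + B(x,t) dw on [0, T] with K steps (Ito interpretation)."""
    dt = T / K
    x, t = x0, 0.0
    path = [x]
    for _ in range(K):
        Z = rng.gauss(0.0, 1.0)  # Z ~ N(0, 1)
        x = x + A(x, t) * dt + B(x, t) * math.sqrt(dt) * Z
        t += dt
        path.append(x)
    return path

rng = random.Random(1)
# Illustrative choice (not from the notes): A = -x, B = 0.5
path = euler_maruyama(lambda x, t: -x, lambda x, t: 0.5, x0=1.0, T=5.0, K=5000, rng=rng)

# With the noise switched off the scheme reduces to forward Euler for dx/dt = -x
det = euler_maruyama(lambda x, t: -x, lambda x, t: 0.0, x0=1.0, T=1.0, K=1000, rng=rng)
print(det[-1], math.exp(-1.0))
```

The coefficients are evaluated at the start of each step, which is what ties the scheme to the Ito interpretation.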

Higher order methods: Reference: Kloeden and Platen, Numerical Solution of SDE's, 1992. Both weak (in distribution) and strong (pathwise) methods.

For higher dimensional SDE:

$dx = A(x,t)\,dt + B(x,t)\,dw$   (15)

x, A are d-dimensional, w is n-dimensional, B is d × n dimensional.

Example:

$m x_{tt} = -\eta x_t + q X$   (16)

For X as above, we write

$dx = v\,dt$

$dv = -\frac{\eta}{m}\,v\,dt + \frac{q}{m}\,dw$

The drift is linear in v, so v is an Ornstein-Uhlenbeck (O-U) process.

Figure 2: Realization for noise process, particle position, and velocity (axes: w and x, v against t)

    x(1) = x0; v(1) = v0;
    for j = 1 : K − 1
        x(j+1) = x(j) + v(j) ∆t
        v(j+1) = v(j) − (η/m) v(j) ∆t + (q/m) sqrt(∆t) Z(j)
    end

Note: v is an Ornstein-Uhlenbeck process (OU process). It has a linear drift term and a constant coefficient for the diffusion term. OU processes have a Gaussian stationary density with a constant mean and variance, i.e. normally distributed. That is, as t → ∞, $v \sim N(0, q/\sqrt{2\eta m})$, where the second argument is the standard deviation.
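As a check on the stationary density of v, the position-velocity system can be simulated and the sample standard deviation of v compared with q/√(2ηm). This is a sketch under assumed parameter values (m = η = 1, q = 0.5); the function name is hypothetical.

```python
import math
import random

def simulate_langevin(m, eta, q, x0, v0, dt, K, rng):
    """Euler-Maruyama for dx = v dt, dv = -(eta/m) v dt + (q/m) dw."""
    x, v = x0, v0
    xs, vs = [x], [v]
    for _ in range(K):
        Z = rng.gauss(0.0, 1.0)
        x_new = x + v * dt  # position update uses the old velocity
        v_new = v - (eta / m) * v * dt + (q / m) * math.sqrt(dt) * Z
        x, v = x_new, v_new
        xs.append(x)
        vs.append(v)
    return xs, vs

m, eta, q = 1.0, 1.0, 0.5  # illustrative values, not from the notes
rng = random.Random(2)
xs, vs = simulate_langevin(m, eta, q, x0=0.0, v0=0.0, dt=0.01, K=400000, rng=rng)

tail = vs[2000:]  # discard the initial transient
mean_v = sum(tail) / len(tail)
std_v = math.sqrt(sum((v - mean_v) ** 2 for v in tail) / len(tail))
print(std_v, q / math.sqrt(2 * eta * m))  # sample vs. predicted stationary standard deviation
```

The time average along one long path stands in for an average over realizations, which is reasonable here because the OU process is stationary and its correlations decay on the time scale m/η.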

Dynamics driven by Jump Processes - "Shot Noise"

$\frac{dI}{dt} = -\alpha I + q\,\mu(t)$   (17)

$\mu(t) = \sum_k \delta(t - t_k)$   (18)

I = current, t_k = arrival time of electron. Note that µ(t) is not mean zero.

Define: N(t) = sum of Poisson arrivals (a Poisson process: N has an average of λt arrivals in an interval of length t),

$\frac{dN}{dt} = \mu$   (19)

formally, but N is a step function. If we want to write the current equation in terms of a mean zero process (analogous to X in the previous example) then consider E[dN] = λdt, Var[dN] = λdt, where λ is the mean arrival rate. Then

dη = dN − λdt

is a centered Poisson increment, with mean zero and variance λdt. Formally

$\frac{dI}{dt} = -\alpha I + \lambda q + q\,\frac{d\eta}{dt}$   (20)

Note on Numerical simulation

Here η is not a continuous process; rather, events occur with waiting times between them that are exponentially distributed. Then some time increments of length Δt will have an event, and some will not.

Averaging yields

$\frac{d\langle I \rangle}{dt} = -\alpha \langle I \rangle + \lambda q, \qquad \langle I \rangle \to \frac{\lambda q}{\alpha}$ as t → ∞   (21)

What about $\langle I^2 \rangle$?

Suppose we consider d(I²) and compare with the equation above:

$d(I^2) = (I + dI)^2 - I^2 = 2I\,dI + (dI)^2$   (22)

and

$(dI)^2 = ((-\alpha I + \lambda q)\,dt)^2 + 2q(-\alpha I + \lambda q)\,dt\,d\eta + q^2\,d\eta^2$   (23)

Figure 3: Realization for I. Left panels: less frequent jumps, compared to the decay rate of I. Right panels: more frequent jumps compared to the decay rate of I (looks sort of Brownian?). The lower panels show shorter time windows (t from 60 to 100 and from 250 to 300).

Compare with the heuristic as in the previous example: multiply equation (20) by I, integrate, and average to get

$\frac{1}{2}\frac{d\langle I^2\rangle}{dt} = -\alpha\langle I^2\rangle + \lambda q\,\langle I\rangle + q\left\langle I\,\frac{d\eta}{dt}\right\rangle$   (24)

What about $\langle I\,\frac{d\eta}{dt} \rangle$? Is it = 0? If so, then we can solve for $\langle I^2 \rangle$ as t → ∞:

$\langle I^2\rangle = \left(\frac{\lambda q}{\alpha}\right)^2$   (this is just the mean of I, squared)

⇒ Var(I) = $\langle I^2 \rangle - \langle I \rangle^2$ = 0

No effect of noise - not correct!

This heuristic neglects the term (dI)²: it is not in this equation for $\langle I^2 \rangle$. So we need to examine that term:

$\langle (dI)^2\rangle = (\;)(dt)^2 + (\;)\,dt\,\langle d\eta\rangle + q^2\langle d\eta^2\rangle$   (25)

using the properties of the centered Poisson increment (recall: mean = variance for Poisson random variables):

$\langle d\eta \rangle = 0, \qquad \langle (d\eta)^2 \rangle = \lambda\,dt$

So now we can get the correct equation for $\langle I^2 \rangle$.

$d\langle I^2\rangle = 2\langle I\,dI\rangle + \langle (dI)^2\rangle$   (26)

$\quad = \langle 2I(-\alpha I + \lambda q)\rangle\,dt + \langle 2qI\,d\eta\rangle + q^2\lambda\,dt + o(dt)$, where $\langle 2qI\,d\eta \rangle = 0$   (27)

$\Rightarrow \mathrm{Var}\,I = \frac{q^2\lambda}{2\alpha}$ as t → ∞   (28)

(Calculation of the details is left as an exercise.)

Recall that for the Wiener process $\langle w(t) \rangle = 0$ and $\langle w^2 \rangle = t$. Later we see that if Poisson events are very frequent, then one can replace Poisson random variables with appropriate "centering" and Brownian motion (normal random variables).
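The stationary mean λq/α and variance q²λ/(2α) can be checked with a direct simulation in which each time step of length Δt either contains an event or does not. This is a minimal sketch with assumed parameter values; the function name and the Bernoulli approximation of dN (valid only for λΔt much smaller than 1) are illustrative choices.

```python
import random

def simulate_shot_noise(alpha, q, lam, dt, K, rng, I0=0.0):
    """dI = -alpha*I dt + q dN, with dN = 1 with probability lam*dt in each step (else 0)."""
    I = I0
    out = [I]
    for _ in range(K):
        dN = 1 if rng.random() < lam * dt else 0  # requires lam*dt << 1
        I = I - alpha * I * dt + q * dN
        out.append(I)
    return out

alpha, q, lam = 1.0, 0.5, 2.0  # illustrative values, not from the notes
rng = random.Random(3)
I = simulate_shot_noise(alpha, q, lam, dt=0.005, K=800000, rng=rng)

tail = I[4000:]  # discard the initial transient
mean_I = sum(tail) / len(tail)
var_I = sum((x - mean_I) ** 2 for x in tail) / len(tail)
print(mean_I, lam * q / alpha)           # compare with lambda*q/alpha
print(var_I, q * q * lam / (2 * alpha))  # compare with q^2*lambda/(2*alpha)
```

The nonzero sample variance is the point of the comparison: the heuristic that drops (dI)² would predict zero.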

Other types of increments: e.g. Levy Processes

Levy Processes can be characterized by a combination of Brownian motion and jumps. A simple class of Levy Processes are alpha-stable processes. Instead of having a density with exponentially decaying tails, like the Gaussian distribution, they have "fat" tails; that is, the tails of the density have the behavior

$p(x) \sim |x|^{-(\alpha+1)}$ as |x| → ∞ for α < 2

They don't have a (finite) variance, and perhaps no mean, depending on the parameter.

To simulate an SDE with alpha-stable noise increments, e.g.

$dx = H(x)\,dt + \kappa\,dL^{\alpha,\beta}$   (29)

the iterative steps of the Euler-type method take the form

$x_{n+1} = x_n + H(x_n)\,\Delta t + \kappa\,\Delta L_n^{\alpha,\beta}, \qquad \Delta L_n^{\alpha,\beta} \sim S_\alpha(\beta, (\Delta t)^{1/\alpha})$   (30)

The alpha-stable distribution is given in terms of its characteristic function, rather than the density, so $X \sim S_\alpha(\beta, \sigma)$ has a characteristic function of the form

$\psi(k) = F[p(x)] = \int e^{ikx} p(x)\,dx = \exp\left(-\sigma^\alpha |k|^\alpha \left(1 - i\beta\,\mathrm{sgn}(k)\,\phi(k)\right)\right)$

$\phi(k) = \begin{cases} \tan\left(\frac{\pi\alpha}{2}\right), & \alpha \neq 1 \\ -\frac{2}{\pi}\log|k|, & \alpha = 1 \end{cases}$   (31)

Note: for α = 2, we have a Gaussian distribution.

Additional details: Dybiec, Gudowska-Nowak, Hanggi, Phys. Rev E, 2006; Asmussen and Glynn, 2007.
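A hedged sketch of the Euler-type step for the symmetric case β = 0 follows. The increments are drawn with the Chambers-Mallows-Stuck formula, a standard sampler that is swapped in here as an assumption (the notes do not specify one), and the drift H(x) = −x and the values of κ and α are illustrative.

```python
import math
import random

def symmetric_stable(alpha, rng):
    """Chambers-Mallows-Stuck sample of a symmetric (beta = 0) alpha-stable variable,
    sigma = 1, alpha != 1."""
    U = (rng.random() - 0.5) * math.pi  # uniform on (-pi/2, pi/2)
    W = rng.expovariate(1.0)            # exponential with mean 1
    return (math.sin(alpha * U) / math.cos(U) ** (1.0 / alpha)
            * (math.cos((1.0 - alpha) * U) / W) ** ((1.0 - alpha) / alpha))

def euler_stable(H, kappa, alpha, x0, dt, K, rng):
    """x_{n+1} = x_n + H(x_n) dt + kappa * dL_n, with dL_n = dt^(1/alpha) * (unit stable sample)."""
    x = x0
    path = [x]
    scale = dt ** (1.0 / alpha)
    for _ in range(K):
        x = x + H(x) * dt + kappa * scale * symmetric_stable(alpha, rng)
        path.append(x)
    return path

rng = random.Random(4)
path = euler_stable(lambda x: -x, kappa=0.1, alpha=1.5, x0=0.0, dt=0.01, K=10000, rng=rng)

# Check of the sampler at alpha = 2, where exp(-|k|^2) is Gaussian with variance 2
samples = [symmetric_stable(2.0, rng) for _ in range(50000)]
var2 = sum(s * s for s in samples) / len(samples)
print(var2)  # expected to be close to 2, up to sampling error
```

For α < 2 a sample variance is not a meaningful check, since the variance is infinite; the α = 2 case is used only to confirm the normalization of the sampler.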

Example: Linear system forced by Levy noise (alpha-stable)

Figure 3.1: A comparison of the time series and simulated marginal distributions of the fast-slow …

Panels: time series x(t) against t, and probability density p(x) against x on a logarithmic scale, for α = 2 (Gaussian) and for α < 1 ("fat tails").

Saturday, August 1, 2015

2 Ito's Formula

Now we ask: What are the rules for functions of w(t): f(w(t))?

In particular, we are interested in how to treat df(w(t)), and how to write stochastic differential equations including w(t).

Ito's formula: find the equation for df(x) given dx = a dt + b dw

Simple example: y = w²

$\int_s^t dy = w^2(t) - w^2(s)$   (32)

Can we write dy = a dt + b dw? Or,

$\int_s^t dy = \int_s^t a\,dt + \int_s^t b\,dw$   (33)

where a, b may be functions of y, t?

We need to define $\int b\,dw$: how do we evaluate or interpret $\int b\,dw$?

Ito interpretation (the one used by the Euler-Maruyama numerical method):

$\int_s^t b(w)\,dw = \sum_j b(w(t_j))\,(w(t_{j+1}) - w(t_j))$   (34)

Stratonovich (evaluate b at the average of w(t_j) and w(t_{j+1})):

$\int_s^t b(w)\,dw = \sum_j b\!\left(\frac{w(t_j) + w(t_{j+1})}{2}\right)(w(t_{j+1}) - w(t_j))$   (35)

Back to the equation for y = w²: We compare $\int dy$ with $\int w\,dw$, which we might expect from the usual rules of calculus.

If we use the Ito interpretation, then we can rewrite the Ito definition in a convenient way:

$\int_s^t w\,dw = \frac{1}{2}\sum_j \left[w^2(t_{j+1}) - w^2(t_j) - (w(t_{j+1}) - w(t_j))^2\right]$   (36)

$\quad = \frac{1}{2}\left(w^2(t) - w^2(s)\right) - \frac{1}{2}\sum_j (w(t_{j+1}) - w(t_j))^2$   (37)

where each term in the last sum is $(\Delta w_j)^2$. This looks like some integrated quantity evaluated at the endpoints t and s, plus a sum.

Considering the expected value of the sum (use the covariance of w):

$E\left[\sum_{j=1}^{N} (w(t_{j+1}) - w(t_j))^2\right] = \sum_{j=1}^{N} \left(E[w^2(t_{j+1})] + E[w^2(t_j)] - 2\min(t_{j+1}, t_j)\right) = \sum_{j=1}^{N} (t_{j+1} + t_j - 2t_j) = \sum_{j=1}^{N} \Delta t = t - s$

Furthermore, one can show that

$\mathrm{Var}\left[\sum_{j=1}^{N} (w(t_{j+1}) - w(t_j))^2\right] \sim \frac{1}{N}$   (38)

(reference: Schuss, Introduction to SDE's)

So

$2\int_s^t w\,dw = w^2(t) - w^2(s) - (t - s) = y(t) - y(s) - (t - s)$   (39)

in probability, as N → ∞. Then for y = w²,

$dy = 2w\,dw + 1\,dt$   (40)

with b = 2w and a = 1.

Note: The expression is not simply dy = 2w dw, but there is also an additional term dt.
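The difference between the two interpretations can be seen on a single simulated path. This is a small sketch with an assumed step count and seed: the left-endpoint (Ito) sum should be close to (w²(T) − T)/2, while the midpoint (Stratonovich) sum telescopes to w²(T)/2.

```python
import math
import random

rng = random.Random(5)
T, N = 1.0, 100000
dt = T / N

# One Brownian path on [0, T]
w = [0.0]
for _ in range(N):
    w.append(w[-1] + math.sqrt(dt) * rng.gauss(0.0, 1.0))

# Ito sum: integrand evaluated at the left endpoint
ito = sum(w[j] * (w[j + 1] - w[j]) for j in range(N))
# Stratonovich sum: integrand evaluated at the average of the endpoints
strat = sum(0.5 * (w[j] + w[j + 1]) * (w[j + 1] - w[j]) for j in range(N))

print(ito, 0.5 * (w[-1] ** 2 - T))  # Ito: (w(T)^2 - T)/2, up to sampling error
print(strat, 0.5 * w[-1] ** 2)      # Stratonovich: w(T)^2/2
```

The Stratonovich identity holds exactly for any partition, whereas the Ito result relies on the sum of squared increments converging to T as N grows.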

Next, we compute the rule for products.

Consider the product of x and w, where dx = a dt + b dw. For a, b constant, x = at + bw, for x(0) = 0. Using the Ito interpretation,

$xw = atw + bw^2$   (41)

$d(xw) = at\,dw + aw\,dt + b(2w\,dw) + b\,dt$   (42)

$\quad = x\,dw + w\,dx + b\,dt$   (43)

And we can use this, together with the result for y = w², to find by induction (exercise)

$d\,w^m(t) = m\,(w(t))^{m-1}\,dw + \frac{m(m-1)}{2}\,w^{m-2}\,dt$ for m ≥ 2   (44)

Then, for any polynomial P(w),

$dP(w) = P'(w)\,dw + \frac{1}{2}P''(w)\,dt$   (45)

From there, we can write general "noise" functions as f = g(t)φ(w), so

$df = \phi\,g'(t)\,dt + g\,d\phi$   (46)

$\Rightarrow df = \left[\phi\,g'(t) + \frac{1}{2}\,g\,\phi''(w)\right]dt + g\,\phi'(w)\,dw$   (47)

$\quad = \left[f_t + \frac{1}{2}f_{ww}\right]dt + f_w\,dw$   (48)

Applying this term by term to a general expression,

$f(w,t) = \sum g(t)\,\phi(w),$

yields the same result for f(w, t).

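Finally, for f(x, t), x = at + bw, with a, b constant, the derivatives of f as a function of (w, t) are

$\left.\frac{\partial f}{\partial t}\right|_{w} = \frac{\partial f}{\partial t} + a\,\frac{\partial f}{\partial x}$   (49)

$\frac{\partial f}{\partial w} = b\,\frac{\partial f}{\partial x}, \qquad \frac{\partial^2 f}{\partial w^2} = b^2\,\frac{\partial^2 f}{\partial x^2}$   (50)

Then

$df = \left[\frac{\partial f}{\partial t} + a\,\frac{\partial f}{\partial x} + \frac{1}{2}\,b^2\,\frac{\partial^2 f}{\partial x^2}\right]dt + b\,\frac{\partial f}{\partial x}\,dw$   (51)

This is Ito's formula: the chain rule for f(x, t) when dx = a dt + b dw in the Ito sense, relating the equation for f to the equation for x. It can be generalized for a(x, t) and b(x, t).

Note: Throughout this we use the Ito interpretation for ( )dw.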
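As a short worked example of Ito's formula (an illustration added here, with f chosen for simplicity), take f(x) = x² with dx = a dt + b dw. Then f_t = 0, f_x = 2x and f_xx = 2, so

```latex
\begin{align*}
d(x^2) &= \left[ 0 + a\,(2x) + \tfrac{1}{2}\,b^2\,(2) \right] dt + b\,(2x)\,dw \\
       &= \left( 2ax + b^2 \right) dt + 2bx\,dw .
\end{align*}
```

Setting a = 0 and b = 1 gives x = w and recovers d(w²) = dt + 2w dw, in agreement with (40).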

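Is there a Stratonovich Formula?

The Stratonovich integral $(S)\int f(w)\,dw$ follows the usual rules of calculus. Writing the midpoint as $(w_i + w_{i+1})/2 = w_i + \Delta w_i/2$ and expanding,

$(S)\int f(w)\,dw = \sum_i f\!\left(\frac{w_i + w_{i+1}}{2}\right)\Delta w_i \sim \sum_i \left[f(w_i)\,\Delta w_i + f'(w_i)\,\frac{(\Delta w_i)^2}{2}\right]$

that is, the Ito interpretation plus a correction.

Simple example:

$(S)\;w\,dw = w\,dw + \frac{(\Delta w)^2}{2} \sim w\,dw + \frac{\Delta t}{2} = \frac{1}{2}\,d(w^2)$

In general,

$dx = a\,dt + b\,dw\;(S) = a\,dt + b \circ dw = \left(a + \frac{1}{2}\,b\,b_x\right)dt + b\,dw$

where the last form is in the Ito sense. For b = const, (S) = (I).

Note: in this simple example 2x = w², so b(x) = w = √(2x) and b b_x = 1.

Show as Exercise: repeat the same procedures as above, for powers of w, polynomials in w, products of functions of t and w, etc.

Other Processes: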

For Levy Processes, the analog of the Stratonovich interpretation is known as the Marcus interpretation. Here it is written in an SDE with increments of an alpha-stable process:

$dx = a(x)\,dt + b(x) \diamond dL^{\alpha,\beta}$   (52)

For α = 2 it is equivalent to the Stratonovich interpretation.

To implement this for evaluation/simulation, the Marcus integral takes the form

$\int_0^t b(x_{s^-}) \diamond dL_s^{\alpha,\beta} = \sum_{s \le t} \left[\theta(1; \Delta L_s, x_{s^-}) - x_{s^-}\right], \qquad \frac{d\theta}{dr} = \Delta L_s\,b(\theta), \quad \theta(0) = x_{s^-}$   (53)

The quantity θ here is known as the Marcus map, and r is a time-like variable that travels infinitely fast along a curve connecting across the jumps. Then, a numerical approximation of $dx = a(x)\,dt + b(x) \diamond dL^{\alpha,\beta}$ is given by

$x_{n+1} = x_n + a(x_n)\,\Delta t + \left[\theta(1; \Delta L^{\alpha,\beta}, x_n) - x_n\right]$   (54)

Additional references on implementation:

Grigoriu, Phys. Rev. E, 2009.

Asmussen and Glynn, Stochastic Simulation: Algorithms and Analysis, 2007.

Notes at: http://www.math.ubc.ca/~rachel/cv/lec1jul15_rw.pdf

Printed copies tomorrow, post on summer school website next week

3 Master, Forward, and Backward equations

Random walk as a Markov process, where X = position and X_j = position after j steps.

P(step up: X_{n+1} = X_n + 1) = p

P(step down: X_{n+1} = X_n − 1) = 1 − p

Each step is independent of previous steps (Markovian: the next position depends only on the present location). Using conditional probability:

P(X_n = k) = P(X_n = k | X_{n−1} = k − 1) P(X_{n−1} = k − 1)
           + P(X_n = k | X_{n−1} = k + 1) P(X_{n−1} = k + 1)
           = p P(X_{n−1} = k − 1) + (1 − p) P(X_{n−1} = k + 1)

In general,

P(X_n = k) = P(location after n steps, with m up-steps and l down-steps, where m − l = k, m + l = n)

Random walk: P(k, j) = P(X_j = k)

P(k, j) = P(X_j = k) = p P(X_{j−1} = k − 1) + (1 − p) P(X_{j−1} = k + 1)
        = p P(k − 1, j − 1) + (1 − p) P(k + 1, j − 1)

For p = 1/2 (symmetric) we rewrite this as:

$P(k,j) - P(k,j-1) = \frac{1}{2}\left[P(k-1,j-1) + P(k+1,j-1) - 2P(k,j-1)\right]$

It doesn't matter what the grid size is, so take time step size Δt and space step size ΔX:

$P(X_k,t_j) - P(X_k,t_j-\Delta t) = \frac{1}{2}\left[P(X_k-\Delta X,\,t_j-\Delta t) + P(X_k+\Delta X,\,t_j-\Delta t) - 2P(X_k,\,t_j-\Delta t)\right]$

Suppose (diffusive scaling)

$(\Delta X)^2 = C\,\Delta t$

Then the equation for P(X_k, t_j) becomes (for all k):

$\frac{P(X_k,t_j) - P(X_k,t_j-\Delta t)}{C\,\Delta t} = \frac{1}{2(\Delta X)^2}\left[P(X_k-\Delta X,\,t_j-\Delta t) + P(X_k+\Delta X,\,t_j-\Delta t) - 2P(X_k,\,t_j-\Delta t)\right]$

$\Longrightarrow P_t = \frac{C}{2}\,P_{XX}$ as Δt, (ΔX)² → 0

Diffusion equation: Heat equation

Exercise: Taylor expansion around Δt = 0 and (ΔX)² = 0
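The recursion for P(k, j) can be iterated directly. The sketch below (function name assumed) starts from a walker at the origin and confirms that the variance after n symmetric steps is n, which under the diffusive scaling (ΔX)² = CΔt corresponds to a variance Ct at time t = nΔt.

```python
def evolve_random_walk(n_steps, p=0.5):
    """Iterate P(k, j) = p P(k-1, j-1) + (1-p) P(k+1, j-1), starting from P(0, 0) = 1."""
    P = {0: 1.0}
    for _ in range(n_steps):
        new_P = {}
        for k, prob in P.items():
            new_P[k + 1] = new_P.get(k + 1, 0.0) + p * prob          # step up
            new_P[k - 1] = new_P.get(k - 1, 0.0) + (1.0 - p) * prob  # step down
        P = new_P
    return P

n = 200
P = evolve_random_walk(n)
total = sum(P.values())
mean = sum(k * prob for k, prob in P.items())
variance = sum(k * k * prob for k, prob in P.items()) - mean ** 2

# With (Delta X)^2 = C Delta t, n steps correspond to t = n Delta t and variance n (Delta X)^2 = C t
print(total, mean, variance)
```

Positions are stored in units of ΔX, so the linear growth of the variance in n is the discrete counterpart of the variance growing linearly in t for the diffusion equation.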

The solution is a mean zero Gaussian with variance proportional to t! This is the density of Brownian motion.

$P(X,t) = \frac{1}{\sqrt{2\pi C t}}\,e^{-X^2/(2Ct)}$ for P(X, 0) = δ(X)

Here C/2 is the diffusion coefficient, and the variance is Ct.

So we get Brownian motion under a diffusive scaling for the random walk.

This also illustrates the self-similarity of this diffusion. In particular, if w(t) is a standard Brownian motion, then so is

$\frac{1}{\sqrt{\alpha}}\,w(\alpha t), \qquad \left\langle \left(\frac{1}{\sqrt{\alpha}}\,w(\alpha t)\right)^2 \right\rangle = t$

4 Equation(s) for the probability density: General continuous time/space

We derived an equation for P(x, t) using conditional probability, that is, conditioning on starting at x0 at time t, then taking a step up or down.

In general, this is a useful approach to deriving an equation for P(x, t).

Chapman-Kolmogorov equation:

$P(X(t) = y \mid X(s) = x) = p(y,t,x,s) = \int p(y,t,z,\tau)\,p(z,\tau,x,s)\,dz, \qquad s < \tau < t$   (55)

This is the probability of going from x to y via z, "summed" over all possible intermediate z. While in general this doesn't look like a simplification of the problem, it may be, given the particular problem and the choice of z.

This general equation is used to derive the PDE for the probability density p(y, t|x, s) (often, s = 0) as follows:

Fokker-Planck equation (FPE) (Forward Kolmogorov equation)

We will show that $\frac{\partial p}{\partial t} = L^* p$, where

$L^* p = \frac{1}{2}\frac{\partial^2}{\partial y^2}\left(b^2(y,t)\,p(y,t,x,s)\right) - \frac{\partial}{\partial y}\left(a(y,t)\,p(y,t,x,s)\right)$   (FPE) (56)

where dξ = a dt + b dw and p(ξ = y, t | ξ = x, s) = p(y, t, x, s).

This is accomplished by showing:

$\int g(y)\,\frac{\partial p}{\partial t}\,dy = \int g(y)\,L^* p(y,t,x,s)\,dy$   (57)

for an appropriate "nice" test function g (weak sense). To do this, we show that

$\int \left\{\int p(z,t+h,y,t)\,[g(z) - g(y)]\,dz\right\} p(y,t,x,s)\,dy = \int [p(y,t+h,x,s) - p(y,t,x,s)]\,g(y)\,dy$   (FPI)

The RHS of FPI can be approximated, as h = Δt goes to zero, with

$h \int p_t(y,t,x,s)\,g(y)\,dy$   (60)

The LHS of FPI can be written, using a Taylor series expansion around z = y, as:

$\int \left\{\int p(z,t+h,y,t)\left[g'(y)(z-y) + \tfrac{1}{2}\,g''(y)(z-y)^2 + \ldots\right] dz\right\} p(y,t,x,s)\,dy$   (61)

Borrowing the 1/h from (60), the LHS can be written as

$\int \left(\frac{1}{h}E[\xi(t+h) - \xi(t)]\,g'(y) + \frac{1}{2}\,g''(y)\,\frac{1}{h}E\left[(\xi(t+h) - \xi(t))^2\right]\right) p(y,t,x,s)\,dy$   (62)

Defining (with the expectations conditioned on ξ(t) = y)

$\frac{1}{h}E[\xi(t+h) - \xi(t)] = a(y,t)$ as h → 0   (63)

$\frac{1}{h}E\left[(\xi(t+h) - \xi(t))^2\right] = b^2(y,t)$ as h → 0   (64)

which is essentially the statement that the particle displacement is a(y, t)h with variance b²h (thus a diffusion process). Together the RHS and LHS of FPI yield:

$\int p_t(y,t,x,s)\,g(y)\,dy = \int \left[a(y,t)\,g'(y) + \frac{g''(y)}{2}\,b^2(y,t)\right] p(y,t,x,s)\,dy$   (65)

Integrating by parts moves the derivatives from g onto ap and (b²/2)p, which yields the FPE for p(y, t, x, s) (weak version: integrated with the test function g(y)dy). Then we get p_t = L*p.

To show FPI: Write the RHS as

$\int p(z,t+h,x,s)\,g(z)\,dz - \int p(y,t,x,s)\,g(y)\,dy$

First term, using the Chapman-Kolmogorov equation:

$\int p(z,t+h,x,s)\,g(z)\,dz = \int \left[\int p(z,t+h,y,t)\,p(y,t,x,s)\,dy\right] g(z)\,dz = \int p(y,t,x,s)\left[\int p(z,t+h,y,t)\,g(z)\,dz\right] dy$   (66)

Second term:

$\int p(y,t,x,s) \cdot 1 \cdot g(y)\,dy = \int p(y,t,x,s)\left[\int p(z,t+h,y,t)\,dz\right] g(y)\,dy = \int p(y,t,x,s)\left[\int p(z,t+h,y,t)\,g(y)\,dz\right] dy$

Subtract the second from the first to get the LHS of FPI.

Computationally, we can find p(x, t) using methods for solving a PDE. That is, for dy = a dt + b dw (with the b dw term in the sense of Ito), we can find the density by solving the PDE

$\frac{\partial p}{\partial t} = L^* p.$

We can also obtain a numerical approximation to p(x, t) via a simulation of the SDE for x.

This is accomplished by simulating the SDE for x to time t using N (independent) realizations of the SDE. Recording the N values of x(t), we construct a histogram from these values, recording the number of realizations that fall into bins of width Δx. Then

$p(X,t)\,\Delta x \approx k_i/N$   (67)

where 0 ≤ k_i ≤ N is the number of realizations of x(t) that take values in the interval between X and X + Δx. We see examples of these approximations in later applications.
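The histogram estimate (67) can be sketched as follows. The SDE used here, dx = −x dt + dw, is an assumption chosen because its density is known (Gaussian, with variance approaching 1/2); the function names, bin layout, and sample size are likewise illustrative.

```python
import math
import random

def simulate_endpoint(a, b, x0, t_end, dt, rng):
    """One Euler-Maruyama realization of dx = a(x) dt + b(x) dw, returning x(t_end)."""
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += a(x) * dt + b(x) * math.sqrt(dt) * rng.gauss(0.0, 1.0)
    return x

def histogram_density(values, x_min, x_max, n_bins):
    """Return bin centres and the estimate p ~ k_i / (N * dx) in each bin."""
    dx = (x_max - x_min) / n_bins
    counts = [0] * n_bins
    for v in values:
        i = math.floor((v - x_min) / dx)
        if 0 <= i < n_bins:
            counts[i] += 1
    centres = [x_min + (i + 0.5) * dx for i in range(n_bins)]
    density = [k / (len(values) * dx) for k in counts]
    return centres, density

rng = random.Random(6)
N = 2000
# Illustrative SDE (an assumption): dx = -x dt + dw, started at x = 0
values = [simulate_endpoint(lambda x: -x, lambda x: 1.0, 0.0, 3.0, 0.01, rng) for _ in range(N)]
centres, density = histogram_density(values, -3.0, 3.0, 30)

mass = sum(d * 0.2 for d in density)      # fraction of realizations inside [-3, 3]
sample_var = sum(v * v for v in values) / N
print(mass, sample_var)                   # sample_var should be close to 0.5
```

The bin width trades resolution against noise: each bin count k_i has relative sampling error of roughly one over the square root of k_i.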

4.1 Exit Time

Another key quantity is the Expected Exit Time (Expected Transition Time).

Later, we will see that the equation for the mean exit time has the form $u_t + Lu = -1$ with u(ξ ∈ ∂Ω, t) = 0, where

$Lu = a\,u_\xi + \frac{1}{2}\,b^2\,u_{\xi\xi}$, where dξ = a dt + b dw

L is the adjoint of L*, where p_t = L*p.

See page 44 and following for exit time problems

Asymptotic approximations

In the next sections we cover different types of asymptotic behavior in SDE's.

Examples:

1. Approximating systems with a large number of discrete events with a continuous process. Related to the central limit theorem: normal approximation for a large number N of realizations

2. Small noise asymptotics (small random fluctuations) and behavior in the state space: boundary layers in state space for dx = a dt + b dw, where b is small

3. Systems with multiple time scales: quasi-steady approximations and stochastic averaging

Example: Quasi-steady approximations in deterministic dynamics

Michaelis-Menten Model (deterministic, nothing random; the k_j are the rates of the reactions):

$S + E \;\underset{k_3}{\overset{k_1}{\rightleftharpoons}}\; C \;\overset{k_2}{\longrightarrow}\; P + E$

substrate + enzyme ⟹ complex ⟹ further reaction to product + enzyme

$\dot{C} = k_1 S E - k_3 C - k_2 C$
$\dot{S} = -k_1 S E + k_3 C$
$\dot{E} = -k_1 S E + k_2 C + k_3 C$
$\dot{P} = k_2 C$   (68)-(71)

E + C = conserved quantity = E0. The system is nonlinear, so it is difficult to get an analytical solution.

Take C(0) = 0, so we can eliminate E:

$\dot{C} = k_1 S (E_0 - C) - k_3 C - k_2 C$
$\dot{S} = -k_1 S (E_0 - C) + k_3 C$
$\dot{P} = k_2 C$   (72)-(74)

Linear stability shows that C = 0, S = 0 is a stable fixed point.

Suppose E0 ≪ 1 (small amount of enzyme, where ≪ means "is much less than"); then there is also a small amount of C. Let C = E0 c:

$\Rightarrow E_0\,\dot{c} = k_1 S (1 - c) E_0 - k_3 E_0 c - k_2 E_0 c$
$\dot{S} = -k_1 S (1 - c) E_0 + k_3 E_0 c$   (75)-(76)

To see the leading order behavior (the largest terms for E0 small), let T = E0 t:

$\Rightarrow E_0\,c_T = k_1 S (1 - c) - k_3 c - k_2 c$
$S_T = -k_1 S (1 - c) + k_3 c$   (77)-(78)

C moves quickly (on a short time scale) to adjust to the value of S; since S moves more slowly, it looks like a constant relative to C.

Leading order: set $E_0\,c_T = 0$ (like a steady state, if S was a constant). This is the quasi-steady approximation:

$\Rightarrow c = \frac{k_1 S}{k_1 S + k_2 + k_3}, \qquad S_T = -k_1 S (1 - c) + k_3 c$   (79)-(80)

Figure 4: Realization of the M-M process, with E0 ≪ 1. Axes: C, S against t. Solid lines = full system (73); + symbols = the quasi-steady approximation.

Note: Here E0 = 0.2, which is not even that small, but it is small compared to the other parameters.
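The comparison between the full system and the quasi-steady approximation can be reproduced with a simple forward-Euler integration. This is a sketch only: the rate constants k1 = k2 = k3 = 1 and the initial substrate S(0) = 0.5 are assumptions, with E0 = 0.2 taken from the note on the figure.

```python
def full_system(k1, k2, k3, E0, S0, t_end, dt=1e-3):
    """Forward-Euler integration of the full equations for S and C (with E = E0 - C)."""
    S, C = S0, 0.0
    for _ in range(int(round(t_end / dt))):
        dC = k1 * S * (E0 - C) - k3 * C - k2 * C
        dS = -k1 * S * (E0 - C) + k3 * C
        S, C = S + dS * dt, C + dC * dt
    return S, C

def quasi_steady(k1, k2, k3, E0, S0, t_end, dt=1e-3):
    """Reduced model: c = k1 S / (k1 S + k2 + k3), dS/dt = E0 * (-k1 S (1 - c) + k3 c)."""
    S = S0
    for _ in range(int(round(t_end / dt))):
        c = k1 * S / (k1 * S + k2 + k3)
        S += E0 * (-k1 * S * (1.0 - c) + k3 * c) * dt
    c = k1 * S / (k1 * S + k2 + k3)
    return S, E0 * c  # return C = E0 * c for comparison

# Rates and S0 are illustrative assumptions; E0 = 0.2 as in the figure note
k1, k2, k3, E0, S0 = 1.0, 1.0, 1.0, 0.2, 0.5
S_full, C_full = full_system(k1, k2, k3, E0, S0, t_end=35.0)
S_qs, C_qs = quasi_steady(k1, k2, k3, E0, S0, t_end=35.0)
print(S_full, S_qs, C_full, C_qs)
```

The reduced model cannot capture the short initial transient in which C rises from zero, so the two solutions differ by an amount of order E0 at early times and agree more closely afterwards.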

5 Approximations of interacting individuals

The SIR model: Simple epidemiological model (model for spread of disease)

Reference: Kuske, Greenwood, Gordillo, 2007, Journal of Theoretical Biology; Chaffee and Kuske, Bull. Math Bio, 2011.

Asymptotic result for large N = population size.

Susceptible population = S (don't have the disease)
Infective population = I (has the disease)
Population of recovered individuals = R (had the disease, can't get it again)

For either probabilistic or deterministic models, we think in terms of rates:

    transition      rate
    S → S + 1       µN
    S → S − 1       βSI/N + µS
    I → I + 1       βSI/N
    I → I − 1       (γ + µ)I
    R → R + 1       γI
    R → R − 1       µR          (81)

N = total population size
µ = birth/death rate
γ = recovery rate
βI/N = average number of contacts with infectives per susceptible per unit time.

Stochastic model: the rates (per unit time) are the conditional transition rates of the stochastic (Poisson) process (S, I, R).

We can write this as a continuous time Markov process {(S_t, I_t, R_t) : t ∈ [0, ∞)}, with state space $\mathbb{Z}_+^3$. N = expected population size, N = E[S + I + R].

We need to break this down into Poisson events: births, deaths, infections, recoveries.

For example, a birth process is a Poisson event with probability µNΔt for a birth in a time interval of length Δt.

Events:

P(S_{t+Δt} = s + 1 | S_t = s) = µNΔt + o(Δt), increase in susceptibles due to birth
P(S_{t+Δt} = s − 1 | S_t = s) = µSΔt + o(Δt), decrease in susceptibles due to death
P(S_{t+Δt} = s − 1 | S_t = s) = (βSI/N)Δt + o(Δt), decrease in susceptibles due to infections
P(I_{t+Δt} = i + 1 | I_t = i) = (βSI/N)Δt + o(Δt), increase in infectives (only by infections)

Approximations

Figure 5: A realization of a stochastic SIR model and its deterministic counterpart for N = 2000000, µ = 1/55, R0 = 15 and γ = 20. Axes: number of infectives (infected population) against time (years).

Note: the stochastic simulation (black) does not go to a constant, and it also has regular oscillations.

Deterministic (mean field) model

$\frac{dS}{dt} = \mu(N - S) - \beta\,\frac{SI}{N},$

$\frac{dI}{dt} = \beta\,\frac{SI}{N} - (\gamma + \mu) I.$   (82)

Note: The R equation is redundant, since S + I + R = N.

Basic reproductive number: $R_0 = \frac{\beta}{\mu + \gamma}$

If R0 > 1 there is a stable endemic (I ≠ 0) equilibrium. (ODE exercise)

We express the stochastic equations of the process in a form easily compared with the equations (82) of the deterministic model.

Approximation of the stochastic process with a continuous time, continuous state space process:

Typically for larger populations, with short time increments: N ≫ 1.

Recall diffusion processes: approximating a random walk with a Brownian motion for (Δx)² = Δt.

Ultimately, we approximate Poisson process increments with Brownian motion increments. Recall the centered Poisson increments from earlier: with the mean of the original Poisson process subtracted off, they have mean zero and variance proportional to Δt.

Viewing our Poisson processes as increments: To each increment we add and subtract its conditional expectation, conditioned on the value of the process at the beginning of the time increment of length Δt. Each increment of the process is then the sum of the expected value of the increment of the process and the centered increment.

For a process U composed of two independent types of Poisson events, with rates P+, P− for increasing or decreasing by one, we have

P(U_{t+Δt} = u + 1 | U_t = u) = P+Δt + o(Δt)
P(U_{t+Δt} = u − 1 | U_t = u) = P−Δt + o(Δt)

Then the equation for an increment of U is (average change + centered Poisson increments):

$\Delta U = (P_+ - P_-)\,\Delta t + Z_+ - Z_-, \qquad Z_\pm = U_\pm - E[U_\pm]$

where U+ and U− are the numbers of up and down events in the time increment. Z+ and Z− are centered Poisson increments, that is, E[Z±] = 0, with variances P±Δt.

Note: For two independent processes this can be simplified to ΔU = (P+ − P−)Δt + Z, where Z is a centered increment, that is, E[Z] = 0, with variance that is the sum of the two variances, (P+ + P−)Δt.

Recall: σ dW has distribution N(0, σ√Δt), so Z has zero mean and standard deviation proportional to √Δt, as does the standard Brownian motion.

When is a Poisson random variable well-approximated by a Gaussian random variable? Typically when there are enough events so that the Poisson parameter is large (e.g. > 10).

So we can write:

$\Delta S = \left(\mu(N - S) - \beta\,\frac{SI}{N}\right)\Delta t + \Delta Z_1 - \Delta Z_2,$

$\Delta I = \left(\beta\,\frac{SI}{N} - (\gamma + \mu) I\right)\Delta t + \Delta Z_2 - \Delta Z_3.$   (83)

The increments ΔZ1, ΔZ2, ΔZ3 are independent, centered Poisson variables with variances µ(N + S)Δt, (βSI/N)Δt and (γ + µ)IΔt, respectively.

Note: ΔZ2 is the increment of the process that influences both S and I (infection), so it must appear in both equations. Thus the noise in the equations is correlated: S and I have fluctuations that are not independent from each other.

We replace ΔZ_i by increments of Brownian motion, dW_i, with the same standard deviations.

Diffusion approximation for the stochastic equations

$dS = \left(\mu(N - S) - \frac{\beta}{N}\,SI\right)dt + G_1\,dW_1 - G_2\,dW_2,$

$dI = \left(\frac{\beta}{N}\,SI - (\gamma + \mu) I\right)dt + G_2\,dW_2 - G_3\,dW_3,$

$G_1 = \sqrt{\mu(N + S)}, \quad G_2 = \sqrt{\frac{\beta}{N}\,SI}, \quad G_3 = \sqrt{(\gamma + \mu) I}.$   (84)

Note - these have the Ito interpretation.

Note - some noise coefficients may be large or small? If the noise is small, why not just use the deterministic equations (82)?
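The diffusion approximation (84) can be simulated with the Euler-Maruyama method. The sketch below uses the parameter values from the Figure 5 caption and starts at the deterministic equilibrium; the function name, step size, simulated time span, and the `max(..., 0)` guard on the square roots are assumptions added for illustration.

```python
import math
import random

def simulate_sir(N, mu, gamma, beta, S0, I0, t_end, dt, rng):
    """Euler-Maruyama for the diffusion approximation of the SIR model (Ito interpretation)."""
    S, I = S0, I0
    path = [(0.0, S, I)]
    sqdt = math.sqrt(dt)
    for n in range(int(round(t_end / dt))):
        # Noise amplitudes; max(..., 0) guards the square roots (a numerical safeguard)
        G1 = math.sqrt(max(mu * (N + S), 0.0))
        G2 = math.sqrt(max(beta * S * I / N, 0.0))
        G3 = math.sqrt(max((gamma + mu) * I, 0.0))
        dW1, dW2, dW3 = (sqdt * rng.gauss(0.0, 1.0) for _ in range(3))
        dS = (mu * (N - S) - beta * S * I / N) * dt + G1 * dW1 - G2 * dW2
        dI = (beta * S * I / N - (gamma + mu) * I) * dt + G2 * dW2 - G3 * dW3  # same dW2 as in dS
        S, I = S + dS, I + dI
        path.append(((n + 1) * dt, S, I))
    return path

N, mu, gamma, R0 = 2_000_000, 1.0 / 55.0, 20.0, 15.0  # values from the figure caption
beta = R0 * (gamma + mu)
S_eq, I_eq = N / R0, N * mu * (R0 - 1.0) / beta
rng = random.Random(7)
path = simulate_sir(N, mu, gamma, beta, S_eq, I_eq, t_end=2.0, dt=1e-3, rng=rng)
print(path[-1])
```

Reusing the same increment dW2 in both equations is what produces the correlated noise in S and I noted above.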

References:

Allen, Allen, Arciniega, Greenwood, Stochastic Analysis and Applications, 2008.

$d\mathbf{X} = f(t,\mathbf{X})\,dt + G(t,\mathbf{X})\,d\mathbf{W}$

$d\mathbf{Y} = f(t,\mathbf{Y})\,dt + B(t,\mathbf{Y})\,d\mathbf{V}$

X, Y are d-dimensional, W is m-dimensional, V is d-dimensional, m > d. Let $GG^T = H$ and $B = H^{1/2}$, with f, G, B satisfying certain continuity conditions that give pathwise unique solutions. Then X and Y have the same distribution (weak sense).

So we can express the noise terms in different forms, and can choose the form for our convenience. In the example above, the form used is easy to derive, and it is easy to see the influence of the different biological processes in the equations.

Approximating Poisson with Normal

We expect N to be relatively large (larger rates for the Poisson processes in a time interval).

Are the stochastic terms significant? That is, if N is large, are the fluctuations significant?

Consider the rescaled system S = Nu, I = Nv, where u is the proportion of N that is in S and v is the proportion of N in I (u, v are between zero and 1):

$du = \left(\mu(1 - u) - \beta uv\right)dt + g_1\,dW_1 - g_2\,dW_2,$

$dv = \left(\beta uv - (\gamma + \mu) v\right)dt + g_2\,dW_2 - g_3\,dW_3,$

$g_1 = \sqrt{\mu(1 + u)/N}, \quad g_2 = \sqrt{\frac{\beta}{N}\,uv}, \quad g_3 = \sqrt{(\gamma + \mu) v/N}.$   (85)

Note: the coefficients g_j of the noise terms scale as $N^{-1/2}$ and vanish as N → ∞. Then we recover the mean field (deterministic) model (82).

What if N is just large, rather than infinite?

We consider the system with the basic reproductive number $R_0 = \frac{\beta}{\mu + \gamma} > 1$. Then the deterministic system has a unique nontrivial stable equilibrium point (S_eq, I_eq) at

$S_{eq} = \frac{N}{R_0}, \qquad I_{eq} = \frac{N\mu}{\beta}\,(R_0 - 1).$   (86)

Note that S_eq and I_eq are both proportional to N.

Consider simulations for different parameter values: Why is the noise sometimes significant?

We introduce the dimensionless variables

$u = \frac{S - S_{eq}}{S_{eq}}, \qquad v = \frac{I - I_{eq}}{I_{eq}},$

so that u, v are fluctuations around the equilibrium, normalized by the size of that equilibrium. We also rescale time, t → Ωt, with $\Omega = \sqrt{\frac{\beta\mu}{R_0}\,(R_0 - 1)}$ (for convenience), to get the equations (exercise)

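$$du = \frac{1}{\Omega}\left[\left(-\mu - \frac{\beta I_{eq}}{N}\right)u - \frac{\beta I_{eq}}{N}v - \frac{\beta I_{eq}}{N}uv\right]dt + \sqrt{\frac{\mu\,(N + S_{eq}(u+1))}{\Omega S_{eq}^2}}\,dW_1(t) - \sqrt{\frac{\beta I_{eq}}{\Omega N S_{eq}}(v+1)(u+1)}\,dW_2(t),$$
$$dv = \frac{1}{\Omega}\left[\frac{\beta S_{eq}}{N}(u+v) + \frac{\beta S_{eq}}{N}uv - (\gamma+\mu)v\right]dt + \sqrt{\frac{\beta S_{eq}}{\Omega N I_{eq}}(v+1)(u+1)}\,dW_2(t) - \sqrt{\frac{\gamma+\mu}{\Omega I_{eq}}(v+1)}\,dW_3(t).$$ (87)

Noise coefficients: still $O(N^{-1/2})$.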

[Figure 6: six panels (a)-(f), each showing the power spectral density (vertical axis, 0 to 1) against frequency (horizontal axis).]

Power spectral density: the modulus of the Fourier transform of the process. A large peak appears where there is a strong frequency: the process is driven by noise, but it is dominated by one frequency.

Figure 6: Graphs of the PSD (vertical axis) vs. frequency of the oscillations. a) R0 = 15, γ = 20, µ = 1/55, N = 500000. b) R0 = 15, γ = 25, µ = 1/55, N = 500000. c) R0 = 15, γ = 30, µ = 1/55, N = 500000. d) R0 = 15, γ = 30, µ = 1/55, N = 2000000. e) R0 = 7, γ = 33, µ = 1/55, N = 2000000. f) R0 = 10, γ = 35, µ = 1/10, N = 500000.

Consider equations linearized about u = v = 0 (near equilibrium)

u, v are fluctuations around the equilibrium

[Figure 7: two panels showing the stationary density p(I) (vertical axis, scale ×10⁻³) against I (horizontal axis, 0 to 2500).]

Figure 7: Stationary density p(I) (2000 realizations for a value of t > 100). Left: (a) (∗’s and dash-dotted line) and (f) (diamonds and solid line). Right: (c) (∗’s and dash-dotted line) and (d) (dotted and solid line).

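Linear approximation around u = v = 0 (an Ornstein-Uhlenbeck process as an approximation):
$$d\begin{pmatrix} u \\ v \end{pmatrix} = M\begin{pmatrix} u \\ v \end{pmatrix}dt + G\begin{pmatrix} dW_1 \\ dW_2 \\ dW_3 \end{pmatrix}, \quad M = \begin{pmatrix} -\frac{\mu R_0}{\Omega} & -\frac{\mu(R_0-1)}{\Omega} \\ \frac{\beta}{\Omega R_0} & 0 \end{pmatrix},$$ (88)
$$G = \begin{pmatrix} \sqrt{\frac{\mu(N+S_{eq})}{\Omega S_{eq}^2}} & -\sqrt{\frac{\beta I_{eq}}{\Omega N S_{eq}}} & 0 \\ 0 & \sqrt{\frac{\beta S_{eq}}{\Omega N I_{eq}}} & -\sqrt{\frac{\gamma+\mu}{\Omega I_{eq}}} \end{pmatrix} = \begin{pmatrix} g_1 & -b^2 g_2 & 0 \\ 0 & g_2 & -g_2 \end{pmatrix}.$$
Here $g_1$ and $g_2$ denote the corresponding entries of the first matrix and $b^2 = I_{eq}/S_{eq}$; the last entry equals $g_2$ because $\gamma + \mu = \beta S_{eq}/N$.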

Solutions of the deterministic version of (88) are given in terms of the eigenvalues of M, which are
$$\lambda = -\epsilon^2 \pm \sqrt{\epsilon^4 - 1}, \quad \text{where} \quad \epsilon^2 = \frac{\mu R_0}{2\Omega}.$$
For certain values of the rate constants and N large, ε is small, so λ is complex with a small negative real part. Then
$$\begin{pmatrix} u \\ v \end{pmatrix} \sim C_1 e^{-\epsilon^2 t}\begin{pmatrix} b\cos t \\ \sin t \end{pmatrix} + C_2 e^{-\epsilon^2 t}\begin{pmatrix} b\sin t \\ -\cos t \end{pmatrix},$$ (89)
dropping $O(\epsilon^2)$ corrections. In the references, u and v are cos t and sin t with stochastic amplitude.

For N large and finite, the drift and diffusion coefficients are both small (that is, an O-U process).

Oscillations have weak (slow) decay, so the noise coefficients may play a role.
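To see how weak the decay is for concrete rates, one can compare the eigenvalues of M computed numerically with the formula $\lambda = -\epsilon^2 \pm \sqrt{\epsilon^4 - 1}$. This is a sketch assuming NumPy and the parameter values quoted for panel (a) of Figure 6; the variable names are illustrative.

```python
import numpy as np

# Parameters quoted for Figure 6(a); beta follows from R0 = beta / (mu + gamma).
R0, gamma, mu = 15.0, 20.0, 1.0 / 55.0
beta = R0 * (mu + gamma)

Omega = np.sqrt(beta * mu * (R0 - 1.0) / R0)
M = np.array([[-mu * R0 / Omega, -mu * (R0 - 1.0) / Omega],
              [beta / (Omega * R0), 0.0]])

eps2 = mu * R0 / (2.0 * Omega)                      # this is epsilon squared
lam_formula = -eps2 + 1j * np.sqrt(1.0 - eps2**2)   # branch with positive imaginary part
lam_numeric = np.linalg.eigvals(M)
lam_plus = lam_numeric[np.argmax(lam_numeric.imag)]

print(eps2, lam_plus, lam_formula)
```

For these values $\epsilon^2$ is well below 1, so the eigenvalues are a complex pair close to ±i: the rescaled system oscillates at frequency close to 1 and decays slowly.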

6

Mean exit time

Another key quantity is Expected Exit Time (Expected Transition Time).

If we define $\tau_x$ = time to reach a certain state, ∂Ω, given the initial state ξ(s) = x ∈ Ω, then

$E[\tau_x]$ = expected time to exit Ω and reach a new state (∂Ω) given ξ(s) = x.

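We can write this as
$$E[\tau_x] = \int_0^\infty\int_\Omega p(y,t,x,s)\,dy\,dt = \int_0^\infty P(\tau_x > t)\,dt$$ (90)
since $P(\tau_x > t)$ is the probability that y ∈ Ω at time t. We can also compute this by noting that for u(ξ, t) with dξ = a dt + b dw,
$$du(\xi(t),t) = \left(\frac{\partial u}{\partial t} + a\frac{\partial u}{\partial\xi} + \frac{1}{2}b^2\frac{\partial^2 u}{\partial\xi^2}\right)dt + b\frac{\partial u}{\partial\xi}\,dw$$ (91)
using Ito’s formula, so that (with the lower limit 0 corresponding to initial time s = 0)
$$u(\xi(t),t) = u(\xi(s),s) + \int_0^t [u_t + Lu]\,d\hat t + \int_0^t b\frac{\partial u}{\partial\xi}\,dw$$ (92)
Note that $Lu = au_\xi + \frac{1}{2}b^2u_{\xi\xi}$ is the adjoint operator for $L^*u = -(au)_\xi + \frac{1}{2}(b^2u)_{\xi\xi}$.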

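Suppose $u_t + Lu = -1$ and u(ξ ∈ ∂Ω, t) = 0. Then substitute t = $\tau_x$ above and take expected values,
$$E[u(\xi(\tau_x),\tau_x)] = u(x,s) + E\left[\int_0^{\tau_x}(-1)\,dt + 0\right]$$ (93)
$$\Rightarrow 0 = u(x,s) - E[\tau_x]$$ (94)
The left side vanishes by the boundary condition, and the 0 inside the expectation is the expected value of the Ito integral, so u(x, s) is the mean exit time.

Example: Exit time of a particle from potential U(x).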

Simple example: $U(x) = \frac{x^2}{2}$ on −1 < x < 1

Particle position: $d\xi = -\xi\,dt + \sqrt{2\epsilon}\,dw = -U'(\xi)\,dt + \sqrt{2\epsilon}\,dw$

[Figure 8: left, three panels of realizations of ξ vs. t (0 to 1000) for ε small, smaller, and even smaller; right, q and U(x) plotted on −1 ≤ x ≤ 1 (the blue line is q).]

Figure 8: Realizations for ε = .05, .04, .03. The uniform approximation for q, compared to the potential U(x)

Note: this is a time homogeneous case ⇒
$$\epsilon u'' - xu' = -1, \quad u(-1) = u(1) = 0,$$
for u = mean exit time from the interval [−1, 1].

This is an example which we could solve exactly, but we see how the asymptotic solution gives physical insight (a brief introduction to boundary layer theory). Consider ε ≪ 1.

For small ε (small noise), expect u → ∞ as ε → 0 (at least for x ≠ ±1), so take u = C(ε)q(x) for C(ε) → ∞ as ε → 0.

Then, substituting into the equation for u,
$$\epsilon q'' - xq' \sim 0, \quad q(-1) = q(1) = 0$$ (95)
For ε = 0, we have, to leading order: ⇒ $q_0$ = constant, with constant ≠ 0.

However, the boundary conditions are not satisfied, so we must find the boundary layer behaviour. The solution $q_0$ is the “outer” solution (away from the boundaries). We look near the boundary, and expand that region.

Then, taking εz = 1 − x as a local variable near x = 1 yields
$$\frac{\epsilon}{\epsilon^2}q_{zz} + \frac{1-\epsilon z}{\epsilon}q_z = 0, \quad q \sim q_0 + \epsilon q_1 + \dots$$
$$\Rightarrow q_{0zz} + q_{0z} = 0, \quad q_0(z=0) = 0,$$
$$q_0 = 1 - e^{-z} = 1 - e^{-(1-x)/\epsilon}.$$
There is different behavior for q in this local variable: $q_0$ is almost 1 when x is not near 1, approaching zero as x approaches 1. Similarly for x near −1.

Then we can construct the uniform solution:
$$q \sim 1 \quad \text{away from } x = 1 \text{ or } x = -1$$ (96)
$$q \sim 1 - e^{-(1-x)/\epsilon} \quad \text{near } x = 1$$ (97)
$$q \sim 1 - e^{-(x+1)/\epsilon} \quad \text{near } x = -1$$ (98)
Boundary layer approximations → constant, as matched to the “outer” approximation.

Uniform approximation: add the boundary layer expansions and the outer approximation, then subtract the parts matched:
$$u = C(\epsilon)\left(1 - e^{-(x+1)/\epsilon} - e^{-(1-x)/\epsilon}\right)$$ (99)

Still, we don’t know C(ε), but we can determine it using our knowledge of p(x) (the invariant density). That is, p satisfies the equation with the adjoint operator: $L^*p = 0$.

We have the equation for u
$$Lu = -1$$ (100)
and
$$L^*p = 0 = \epsilon p_{xx} + (xp)_x \Rightarrow p(x) = \frac{1}{\sqrt{2\pi\epsilon}}e^{-\frac{x^2}{2\epsilon}}$$ (101)
So,
$$\int_{-1}^{1}p\,Lu\,dx = \epsilon pu'\Big|_{-1}^{1} + \int_{-1}^{1}\underbrace{(L^*p)}_{=0}\,u\,dx = -\int_{-1}^{1}1\cdot p\,dx$$ (102)

If we use the uniform expansion for u we can evaluate the left side (exercise); then we get the equation for C(ε) from the calculation of p(x):
$$C(\epsilon) \sim K\sqrt{2\pi\epsilon}\,e^{U(1)/\epsilon} + \text{higher order corrections}$$ (103)
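The exponential growth of the mean exit time can be checked numerically. The sketch below, assuming NumPy, solves $\epsilon u'' - xu' = -1$ with u(±1) = 0 by central finite differences and compares u(0) with $K\sqrt{2\pi\epsilon}\,e^{U(1)/\epsilon}$. The value K = 1/2 is an assumption obtained by carrying out the exercise in (102) with the uniform expansion; it is not stated in the notes. The function name and grid size are illustrative.

```python
import numpy as np

def mean_exit_time(eps, n=1001):
    """Solve eps*u'' - x*u' = -1 on (-1, 1) with u(-1) = u(1) = 0 (central differences)."""
    x = np.linspace(-1.0, 1.0, n)
    h = x[1] - x[0]
    xi = x[1:-1]                      # interior grid points
    m = xi.size
    idx = np.arange(m)
    A = np.zeros((m, m))
    A[idx, idx] = -2.0 * eps / h**2
    A[idx[:-1], idx[:-1] + 1] = eps / h**2 - xi[:-1] / (2.0 * h)  # coefficient of u_{i+1}
    A[idx[1:], idx[1:] - 1] = eps / h**2 + xi[1:] / (2.0 * h)     # coefficient of u_{i-1}
    u = np.zeros(n)
    u[1:-1] = np.linalg.solve(A, -np.ones(m))
    return x, u

eps = 0.05
x, u = mean_exit_time(eps)
u_center = u[len(u) // 2]             # value at x = 0

K = 0.5                               # assumed constant, see the lead-in
C_asym = K * np.sqrt(2.0 * np.pi * eps) * np.exp(0.5 / eps)   # U(1) = 1/2
ratio = u_center / C_asym
print(u_center, C_asym, ratio)
```

The ratio should be close to 1 and approach 1 as ε decreases, with the difference attributable to the higher order corrections in (103).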

Additional exercise: Could there be a longer time to escape from the bottom of the potential? Show that there is no internal layer in the construction of the uniform solution. Use the local variable $v = \epsilon^\alpha x$, find the appropriate α and the equation for q(v). A contradiction in matching to the outer solution results, which shows there is no layer at x = 0.

Asymptotic methods for determining p(x, t) on whole domain

Another asymptotic method that is commonly used to determine p(x, t) in the small noise case is the WKB method, an asymptotic method for solving PDEs.

Let’s see how this works for the simple example above. The equation for p(x, t) is
$$p_t = (xp)_x + \epsilon p_{xx} = L^*p$$ (104)
The WKB method is based on the observation that if we take ε → 0 in the equation for p, we eliminate the highest order derivative. This doesn’t make sense, as it eliminates the diffusion from the equation.

So, instead we should look for a form of the solution that better balances the effect of the diffusion with the other contributions:
$$p(x,t) = K(x,t)\exp(-\psi/\epsilon)$$ (105)
This makes intuitive sense, particularly if we are expecting densities that decay exponentially for large values. Substituting this form produces terms with different orders of ε: coefficients of $\epsilon^{-1}$ and coefficients of $\epsilon^0$.

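Substituting into the equation for p(x, t), we get:
$$K_t - \frac{1}{\epsilon}K\psi_t = x\left[K_x - \frac{\psi_x}{\epsilon}K\right] + K + \epsilon\left[-\frac{\psi_{xx}}{\epsilon}K + \frac{(\psi_x)^2}{\epsilon^2}K - 2\frac{\psi_x}{\epsilon}K_x + K_{xx}\right]$$ (106)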

For simplicity, we consider the case of the invariant density (no t dependence).

Then, to leading order (terms with coefficient $\epsilon^{-1}$) we have a nonlinear first order PDE for ψ, the eikonal equation,
$$-x\psi_x + \psi_x^2 = 0$$ (107)
from which we obtain ψ = constant or ψ = x²/2. The constant solution is the trivial solution, and it does not give a normalizable density p (p must be a probability density, so it has to be normalizable).

Then, the equation for K (terms with coefficient $\epsilon^0$, keeping the $K_{xx}$ term) is
$$(1-\psi_{xx})K + (x-2\psi_x)K_x + \epsilon K_{xx} = -xK_x + \epsilon K_{xx} = 0$$ (108)
The solution for K that is normalizable, i.e. with $\int K(x)e^{-\psi(x)/\epsilon}\,dx$ finite, is K = constant. Then we obtain the result given above in (101).
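The WKB result can be verified by substituting it back into the stationary equation $(xp)_x + \epsilon p_{xx} = 0$. The sketch below, assuming NumPy, does this with central differences on a grid; the grid size and the value of ε are illustrative choices.

```python
import numpy as np

eps = 0.05
x = np.linspace(-1.0, 1.0, 2001)
h = x[1] - x[0]

psi = x**2 / 2.0                       # nontrivial solution of the eikonal equation (107)
K = 1.0 / np.sqrt(2.0 * np.pi * eps)   # constant prefactor, normalized as in (101)
p = K * np.exp(-psi / eps)

# Residual of (x p)_x + eps * p_xx at interior points, by central differences.
flux = x * p
residual = ((flux[2:] - flux[:-2]) / (2.0 * h)
            + eps * (p[2:] - 2.0 * p[1:-1] + p[:-2]) / h**2)
rel_residual = np.max(np.abs(residual)) / np.max(np.abs(p))
print(rel_residual)
```

The residual is not exactly zero because of discretization error, but it should shrink as the grid is refined.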


Stochastic systems with multiple time scales:

For ε small:
$$\frac{dx}{dt} = f(x,y), \quad \frac{dy}{dt} = \epsilon^{-1}g(x,y)$$
We can view the time scale of y as “fast”, i.e. for T = t/ε, y′(T) = g(x, y). This is sometimes called “separation of time scales” for small ε: the “slow” time scale t and the “fast” time scale T.

If fluctuations in y are fast, then they may appear as fast random fluctuations in the equation for x. Then it may be possible to approximate x over the slow time scale of t with
$$\frac{dX}{dt} = \hat f(X,\zeta)$$ (109)
where ζ is a quantity used to approximate the behavior of y; here the fluctuations in y are replaced by some kind of average. Then X may approximate the behavior of x in the weak sense, e.g. in terms of moments or distribution.

How do we determine a reasonable approximation? Under what conditions does this approximation hold?

References:

Informal, physical intuition: Monahan and Culina, 2011: Stochastic Averaging of Idealized Climate Models. J. Climate, 24, 3068-3088.

Mathematical (and applications): Stuart and Pavliotis, Multiscale methods

Long history of averaging: Khasminskii (1968), Papanicolaou and Kohler (1974), Freidlin and Wentzell (1998), MTV method: Majda, Timofeyev and Vanden-Eijnden, 2001, Comm. Pure Appl. Math.

We show here results for the following system of SDE’s:
$$dx = f(x,y)\,dt$$
$$dy = \epsilon^{-1}g(x,y)\,dt + \epsilon^{-1/2}\sigma\,dW$$
Specific example (for this specific example, (x, y) is an Ornstein-Uhlenbeck process):
$$f(x,y) = -x - ay$$ (110)
$$g(x,y) = cx - y$$ (111)

Deterministic approximation: Averaged approximation = (A) approximation

Assume y is “well-mixed”: that is, on the t time scale, the density of y is well-sampled, approaching the stationary density.

Then the approximation is based on using the stationary conditional density, p(y|x). That is, on the fast time scale on which y fluctuates, x appears as a constant. Then the proposed (A) approximation is
$$\frac{d\bar x}{dt} = \bar f(\bar x) = \int f(\bar x,y)\,p(y|\bar x)\,dy = E_{y|\bar x}[f(\bar x,y)]$$ (112)

For our example, what is $\bar f$? We need to determine what p(y|x) is.

If x is treated as a constant in the equation for y, then y is an Ornstein-Uhlenbeck (OU) process, with a Gaussian stationary density $N(cx, \sigma^2/2)$. So the (A) approximation is
$$E_{y|x}[f(x,y)] = -x - aE[y|x] = -(1+ac)x \quad\Rightarrow\quad \frac{d\bar x}{dt} = -(1+ac)\bar x$$ (113)

What are the circumstances for which (A), which neglects stochastic fluctuations and just averages, is a reasonable approximation? Can we do better? Consider our example:

Substitute $x = \bar x + \xi$, assuming ξ ≪ 1 is a small correction:
$$\frac{d\bar x}{dt} + \frac{d\xi}{dt} = f(x,y) - \bar f(x) + \bar f(x) \Rightarrow$$
$$\frac{d\xi}{dt} \sim \left(f(\bar x,y) - \bar f(\bar x)\right) + \bar f(\bar x) + \bar f'(\bar x)\xi - \bar f(\bar x)$$
$$\frac{d\xi}{dt} \sim \bar f'(\bar x)\xi + \hat f(\bar x,y)$$
where we have used a Taylor series about ξ = 0, neglecting terms of higher powers of ξ, and $\hat f(x,y) = f(x,y) - \bar f(x)$.

Then, to complete the approximation, we need to approximate $\hat f(x,y)$. This term represents the fluctuations of f about its average, due to fast fluctuations in y. As we look for a weak approximation to x on the time scale t (slow time scale), we look for an approximation to $\hat f$ which has the same properties in the weak sense.

Specifically, can $\hat f$ be approximated by Brownian motion, or some other random process? What is the motivation for this approximation?

We expect $\hat f$ to capture the fast fluctuations of f(x, y) about its average $\bar f$. If these vary on a fast time scale, they can appear as fluctuations of a random process with mean zero. So if we can approximate them with something known, then we have a lower dimensional approximation for ξ and thus for $x \sim X = \bar x + \xi$.

If $\hat f$ has the properties of a Brownian motion increment D dW, then we consider the integrated behavior of the correlation C (the correlation between $\hat f$ at two different times in the process y):
$$\int C_{\hat f\hat f}(\tau)\,d\tau = \int E[\hat f(x,y(t+\tau))\hat f(x,y(t))]\,d\tau = \int D^2\delta(\tau)\,d\tau$$ (114)
The δ-function appears because W has independent increments. Here τ is the lag variable. Usually C(τ) → 0 as τ → ∞. That is, correlation between different points in a realization decreases with increasing time intervals between the points.

Note: for simplicity we take the stationary result for $C_{\hat f\hat f}$; that is, as ε → 0, on the fast time scale t/ε → ∞. So C is independent of t.

This result can be expressed more generally in the case that the behavior is not identically a Brownian motion, but rather one that in the limit as ε → 0 (time scales are separated) has the behavior of a δ-function.

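$$\int C_{\hat f\hat f}(\tau)\,d\tau = \beta\int\frac{1}{\epsilon}h(u/\epsilon)\,du, \quad\text{where}\quad \lim_{\epsilon\to0}\int\frac{1}{\epsilon}h(u/\epsilon)\,du = \int\delta(u)\,du = 1$$ (115)
For example, if $\hat f$ has the properties of an Ornstein-Uhlenbeck process on the fast scale, e.g.
$$dZ = -\frac{\mu}{\epsilon}Z\,dt + \frac{\Sigma}{\sqrt\epsilon}\,dW(t)$$ (116)
then $C_{ZZ}(\tau) = \epsilon\frac{\Sigma^2}{2\mu}\,\frac{1}{\epsilon}e^{-\mu|\tau|/\epsilon}$, and
$$\int C_{ZZ}(\tau)\,d\tau = \epsilon\frac{\Sigma^2}{\mu^2}$$ (117)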

Now let’s see how this works for our example:

Recall, $\bar f(x) = -(1+ac)x$.

Then $\hat f = f(x,y) - \bar f(x) = -x - ay + x + acx = -a(y - cx)$ (conditioning on x = constant, for x slow and y fast), and $C_{\hat f\hat f} = a^2E_{y|x}[(y(t)-cx)(y(t+\tau)-cx)] = a^2C_{yy}(\tau)$. Considering the stationary behavior of y (for large time), we have
$$\int C_{\hat f\hat f}(\tau)\,d\tau = \epsilon\,2a^2\frac{\sigma^2}{2}\int_0^\infty\frac{1}{\epsilon}e^{-|\tau|/\epsilon}\,d\tau = a^2\sigma^2\epsilon$$

Then we conclude that $\hat f$ can be weakly approximated by increments of a Brownian motion $\sqrt\epsilon\,a\sigma\,dW(t)$, and the slow dynamics for x in
$$dx = (-x - ay)\,dt$$
$$dy = \frac{cx - y}{\epsilon}\,dt + \frac{\sigma}{\sqrt\epsilon}\,dW(t)$$ (118)
can be weakly approximated as $x = \bar x + \xi$ where
$$d\bar x = -(1+ac)\bar x\,dt$$
$$d\xi = \bar f'(\bar x)\xi\,dt + \sqrt\epsilon\,a\sigma\,dW(t) = -(1+ac)\xi\,dt + \sqrt\epsilon\,a\sigma\,dW(t)$$ (119)
The equation for ξ is linear, given the linear system. In applications, ε is not zero: it may be small, but not very small.

This is known as the (L) approximation. It is a linear SDE approximation for the slow dynamics x, and this stochastic averaging result includes important fluctuations. We can see that for certain values of the parameters a and b = σ, namely where $ab = \epsilon^\gamma$ for γ > −1/2, the stochastic fluctuations are negligible as ε → 0. Then ξ decays to its mean 0, and we are left with the (A) approximation.

This form is relatively simple, given that the original system is linear. Furthermore, terms of the form $\bar f''(\bar x)\xi^2/2$ vanish. But in a more general nonlinear system, these terms would not vanish and could be a source of error in the approximation.
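Because the example is linear, the quality of the (L) approximation can be checked without simulation by comparing stationary variances of x. The sketch below, assuming NumPy, uses f and g from (110)-(111), solves the Lyapunov equation $AP + PA^T + Q = 0$ for the full system, and compares with the variance of an OU process with decay rate (1 + ac) and noise amplitude $\sqrt\epsilon\,a\sigma$. The parameter values and the helper `stationary_cov` are illustrative assumptions.

```python
import numpy as np

def stationary_cov(A, Q):
    """Solve A P + P A^T + Q = 0 for P, using the row-major vectorization of P."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(A, I) + np.kron(I, A)
    return np.linalg.solve(L, -Q.flatten()).reshape(n, n)

a, c, sigma, eps = 1.0, 0.5, 1.0, 0.01   # illustrative values, not from the notes

# Full system: d[x, y] = A [x, y] dt + [0, sigma / sqrt(eps)] dW
A = np.array([[-1.0, -a],
              [c / eps, -1.0 / eps]])
Q = np.diag([0.0, sigma**2 / eps])
var_x_full = stationary_cov(A, Q)[0, 0]

# Reduced (L) approximation: variance = (noise amplitude)^2 / (2 * decay rate)
var_x_reduced = eps * a**2 * sigma**2 / (2.0 * (1.0 + a * c))

ratio = var_x_full / var_x_reduced
print(var_x_full, var_x_reduced, ratio)
```

For this linear system the ratio works out to 1/(1 + ε), so the reduced model reproduces the variance of x up to an O(ε) relative error.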

General multiple scale averaging approximation (nonlinear):

Compare the averaged approximation with the full system: paths are not identical, but the density for x is the same.

[Figure 9: left, a realization of x against t (0 to 200); right, the pdf of x on −0.5 ≤ x ≤ 0.5.]

Figure 9: Realization and pdf for x from full and reduced (average) equations

The (N+) approximation:
$$dx = \bar f(x)\,dt + D(x)\circ dW \quad \text{(Stratonovich)}$$ (120)
$$dx = \left[\bar f(x) + G(x)\right]dt + D(x)\,dW \quad \text{(Ito)}$$ (121)
Note that ∘ indicates the Stratonovich interpretation for the noise increment, and $G(x) = D(x)D'(x)/2$ is the correction between the Ito and Stratonovich interpretations.
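The conversion between the two interpretations is mechanical once $\bar f$ and D are known. The sketch below computes the Ito drift $\bar f(x) + D(x)D'(x)/2$ using a central-difference estimate of D′(x). The function `ito_drift` and the example choices $\bar f(x) = -x$, D(x) = 0.3x are hypothetical and not taken from the notes.

```python
def ito_drift(f_bar, D, x, h=1e-5):
    """Ito drift f_bar(x) + D(x) D'(x) / 2 for the Stratonovich SDE dx = f_bar dt + D o dW."""
    dD = (D(x + h) - D(x - h)) / (2.0 * h)   # central-difference estimate of D'(x)
    return f_bar(x) + 0.5 * D(x) * dD

# Hypothetical example: f_bar(x) = -x, D(x) = 0.3 x, so G(x) = 0.045 x.
drift = ito_drift(lambda x: -x, lambda x: 0.3 * x, x=2.0)
print(drift)
```

For multiplicative noise the correction G(x) changes the effective drift, while for constant D it vanishes and the two interpretations coincide.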

Derivation of this result:

Reference: Stuart and Pavliotis, Multiscale Methods, Averaging and Homogenization (Springer)

Main ingredients: PDE theory, in particular the operator L associated with the SDE (the generator for the process).

The approach is based on looking at the operator L corresponding to the generator for the full process. Recall, for u = E(f(x, y)),
$$u_t = Lu = a\cdot\nabla u + B\nabla^2u, \quad dz = a(z)\,dt + b(z)\,dW, \quad B = bb^T/2$$ (122)
and then looking at the asymptotic behavior of that operator as ε → 0, and identifying the process that corresponds to the asymptotic approximation to L.

Example:
$$dx = \frac{v(x)y}{\sqrt\epsilon}\,dt, \quad dy = -\frac{\alpha y}{\epsilon}\,dt + \frac{\sqrt{2\alpha}}{\sqrt\epsilon}\,dW(t)$$ (123)
The equation for u has the form:
$$u_t = Lu = \left(\frac{1}{\epsilon}L_0 + \frac{1}{\sqrt\epsilon}L_1 + L_2\right)u$$ (124)
Using an asymptotic expansion $u = u_0 + \sqrt\epsilon\,u_1 + \epsilon u_2$, one can consider the sequence of equations obtained at each order of ε (different orders correspond to different powers of ε).

After a number of calculations, the equation has the form:
$$L_0u_2 = M(u_0)$$ (125)

From the Fredholm Alternative Theorem, for there to be a solution we must have
$$\int M(u_0)\rho(y)\,dy = 0 \quad\text{since}\quad L_0^*\rho(y) = 0$$ (126)
where ρ(y) is the invariant density for the fast variable y. Note that this condition is essentially an averaging over y. From that condition we find
$$\int M(u_0)\rho(y)\,dy = L_xu_0$$ (127)
where $L_x$ is the operator corresponding to the reduced equation in (120), which is the N+ approximation.

Other recent results: Stochastic averaging for systems forced by Lévy (alpha-stable) processes, and other noise processes with "fat tails" (Thompson, Kuske, and Monahan, arXiv). Instead of Stratonovich, we have the Marcus interpretation of stochastic increments.

More research on interactions of noise and nonlinear

dynamics, stochastic bifurcations, noise sensitivity - in

applications: physics, (bio)-mechanics, neuroscience,

epidemiology, pattern dynamics, environment

http://www.math.ubc.ca/~rachel

Recruiting PhD students and postdoctoral

researchers in 2016:

http://www.math.ubc.ca : Information on PhD programs

2015

Applications for postdocs: Math Jobs (by November 2016)

Sunday, August 2, 2015