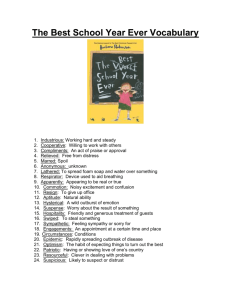

comMotion:
