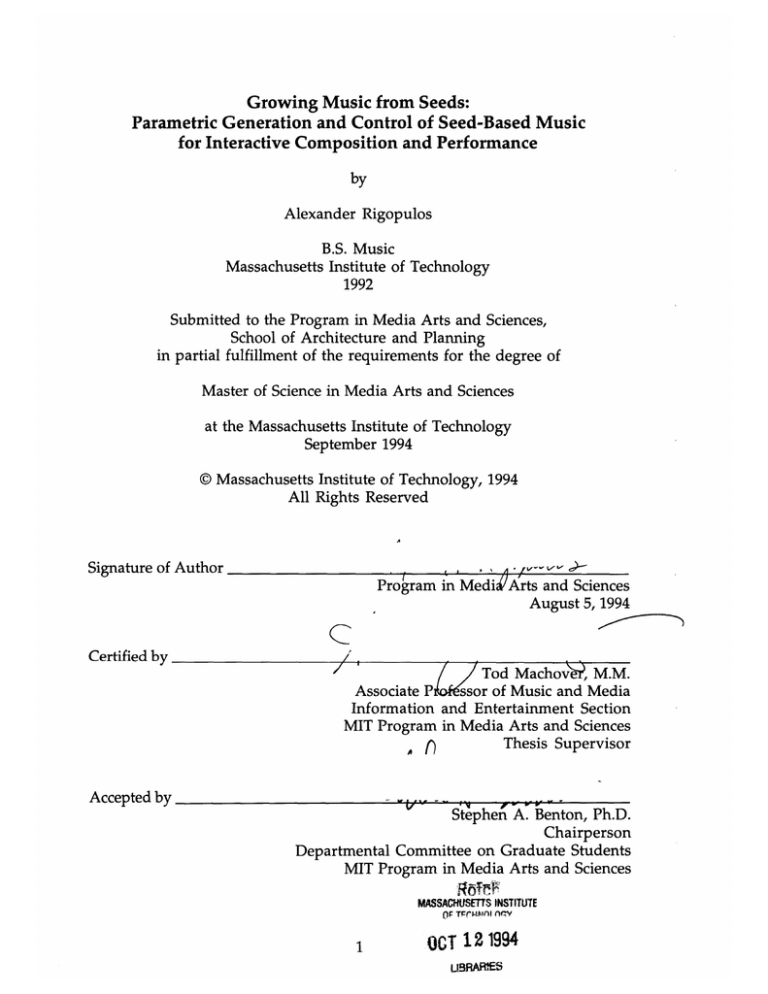

Growing Music from Seeds: for Interactive Composition and Performance
