Eye Movement Guidance in Familiar Visual ... A Role for Scene Specific Location ...

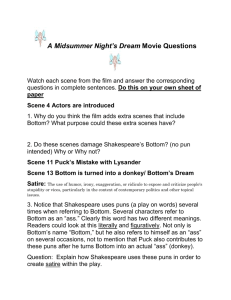
