Supervised Classification using S-Plus

Linear Discriminant Analysis

This is an example of supervised classification of the Australian crabs data. The first step is to create a new variable sp.sex with 4 categories from the two variables species and sex. You can do this with a command like:

sp.sex <- c(rep(1,50), rep(2,50), rep(3,50), rep(4,50))

since each group has a sample size of 50, and the cases are ordered according to the species and sex variables. You’ll need to add this variable to the Australian crabs data table.

Next we break the data into training and test samples. We’ll keep out 20% of each group to test our rule. Here is the code to do this:

indx <- c(sample(1:50,size=40), sample(51:100,size=40),
          sample(101:150,size=40), sample(151:200,size=40))
crabs.train <- australian.crabs[indx,]
crabs.test <- australian.crabs[-indx,]
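The same stratified split can be sketched outside S-Plus. The Python below is only an illustration and not part of the original analysis: the function name stratified_split, the fixed seed, and the 0-based indexing are assumptions made for the example. It draws 40 of the 50 row indices in each group for training and leaves the remaining 10 for testing.

```python
import random

def stratified_split(n_groups=4, group_size=50, n_train=40, seed=0):
    """Return (train, test) row indices, sampling n_train rows per group.

    Assumes the rows are ordered by group, as in the crabs data table.
    Indices are 0-based here, unlike the 1-based S-Plus code.
    """
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    train = []
    for g in range(n_groups):
        block = range(g * group_size, (g + 1) * group_size)
        train.extend(rng.sample(block, n_train))
    chosen = set(train)
    test = [i for i in range(n_groups * group_size) if i not in chosen]
    return train, test

# Group labels 1..4, fifty of each, mirroring rep(1,50), ..., rep(4,50).
sp_sex = [g for g in range(1, 5) for _ in range(50)]

train_idx, test_idx = stratified_split()
print(len(train_idx), len(test_idx))
```

Sampling within each block of 50, rather than from all 200 rows at once, is what keeps the four groups equally represented in both samples.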

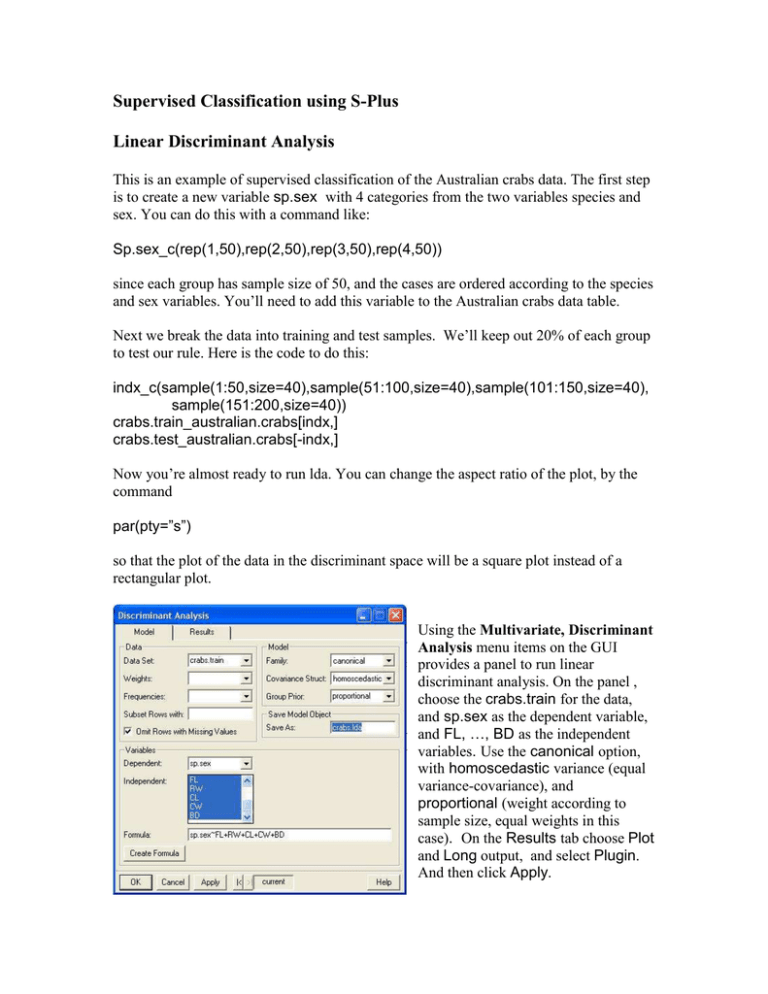

Now you’re almost ready to run lda. You can change the aspect ratio of the plot with the command

par(pty="s")

so that the plot of the data in the discriminant space will be square instead of rectangular.

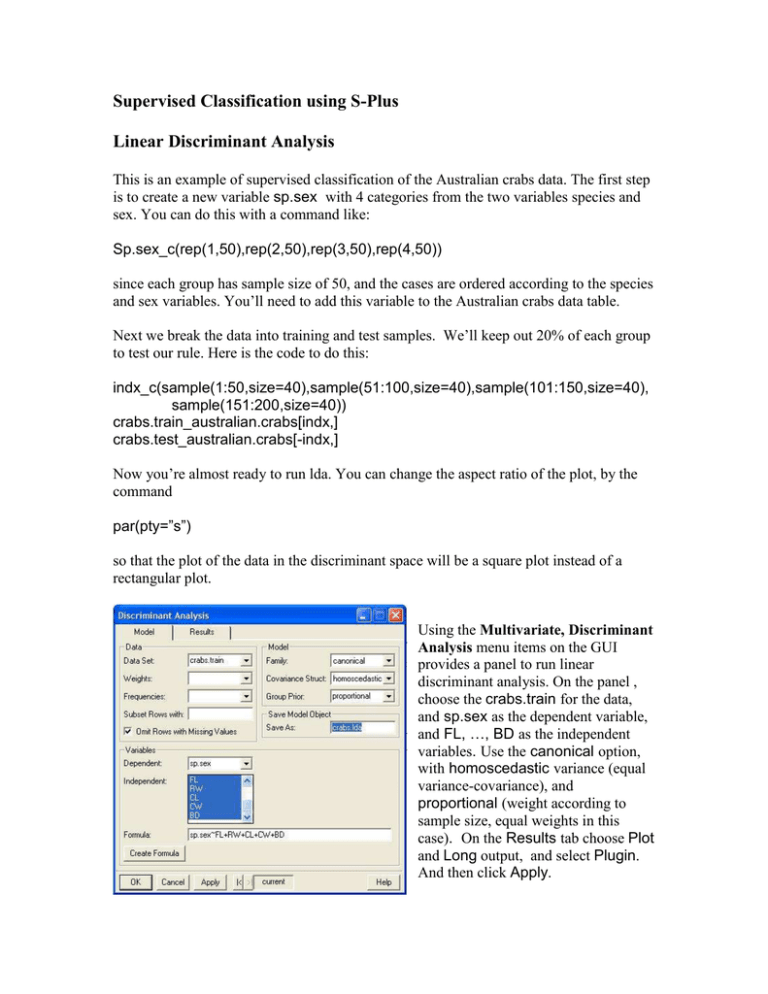

Using the Multivariate, Discriminant Analysis menu items on the GUI provides a panel to run linear discriminant analysis. On the panel, choose crabs.train for the data, sp.sex as the dependent variable, and FL, …, BD as the independent variables. Use the canonical option, with homoscedastic variance (equal variance-covariance), and proportional priors (weighted according to sample size, which gives equal weights in this case). On the Results tab choose Plot and Long output, and select Plugin. Then click Apply.

Here are the results:

*** Discriminant Analysis ***

Call:
discrim(structure(.Data = sp.sex ~ FL + RW + CL + CW + BD, class = "formula"),
    data = crabs.train, family = Canonical(cov.structure = "homoscedastic"),
    na.action = na.omit, prior = "proportional")

Group means:
      FL    RW    CL    CW    BD  N Priors
G1 14.53 11.50 31.26 35.93 13.05 40   0.25
G2 13.34 12.18 28.23 32.78 11.86 40   0.25
G3 16.25 12.06 32.98 36.41 14.99 40   0.25
G4 17.36 14.59 34.18 38.50 15.46 40   0.25

Covariance Structure: homoscedastic
     FL   RW    CL    CW    BD
FL 9.93 6.93 21.39 24.07  9.78
RW      5.19 15.13 17.05  6.90
CL           46.74 52.54 21.31
CW                 59.22 23.96
BD                        9.85

Canonical Coefficients:
      dim1    dim2    dim3
FL  1.6105  0.3357 -1.8263
RW  0.5383  1.6172  0.5305
CL  0.3748 -1.2881  0.7397
CW -1.6470  0.7206 -0.6705
BD  1.2708 -0.4509  1.3253

Singular Values:
  dim1  dim2  dim3
 19.54 13.34 2.587

Constants:
     G1    G2     G3     G4
 -15.74 -25.3 -17.64 -40.29

Linear Coefficients:
       G1     G2    G3    G4
FL  6.464   7.55 13.55 17.22
RW  2.311   7.95  3.56 10.48
CL -7.641 -10.37 -3.53 -9.10
CW  7.189   7.06 -3.05 -0.87
BD -8.518  -7.45 -0.22 -1.92

Canonical Correlations:
     Canonical.Corr Likelihood.Ratio Chi.square df     Pr
dim1         0.9381           0.0240      576.1 15 0.0000
dim2         0.8797           0.2003      248.6  8 0.0000
dim3         0.3377           0.8860       18.8  3 0.0003

Eigenvalues:
     Eigenvalue Difference Proportion Cumulative
dim1      7.341      3.919     0.6740     0.6740
dim2      3.423      3.294     0.3142     0.9882
dim3      0.129                0.0118     1.0000

Tests for Homogeneity of Covariances:
      Statistic df Pr
Box.M     226.3 45  0
adj.M     213.4 45  0

Tests for the Equality of Means:
Group Variable: sp.sex
                       Statistics     F df1 df2 Pr
Wilks Lambda                0.024  80.1  15 420  0
Pillai Trace                1.768  44.2  15 462  0
Hotelling-Lawley Trace     10.893 109.4  15 452  0
Roy Greatest Root           7.341 226.1   5 154  0
* Tests assume covariance homoscedasticity.
F Statistic for Roy's Greatest Root is an upper bound.

Hotelling's T Squared for Differences in Means Between Each Group:
          F df1 df2 Pr
G1-G2  39.0   5 152  0
G1-G3 121.4   5 152  0
G1-G4 182.2   5 152  0
G2-G3 141.6   5 152  0
G2-G4 105.5   5 152  0
G3-G4  72.6   5 152  0

Plug-in classification table:
        G1 G2 G3 G4 Error Posterior.Error
G1      36  4  0  0  0.10          0.1062
G2       2 38  0  0  0.05          0.0190
G3       0  0 40  0  0.00         -0.0360
G4       0  0  2 38  0.05          0.0685
Overall              0.05          0.0394
(from=rows,to=columns)

Rule Mean Square Error: 0.06431
(conditioned on the training data)

Cross-validation table:
        G1 G2 G3 G4  Error Posterior.Error
G1      36  4  0  0 0.1000          0.1107
G2       2 38  0  0 0.0500          0.0213
G3       0  0 40  0 0.0000         -0.0528
G4       0  0  3 37 0.0750          0.0844
Overall             0.0563          0.0409
(from=rows,to=columns)
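Several of the summary statistics in this output are functions of the three eigenvalues alone, which gives a quick consistency check. The sketch below (Python, for illustration only; the variable names are assumptions) recomputes the proportions, the canonical correlations, and the multivariate test statistics from the printed eigenvalues, using the standard relations stated in the comments. Because the printed eigenvalues are rounded, the results can be expected to agree with the output only to about three decimal places.

```python
import math

# Eigenvalues as printed in the output (rounded to three decimals).
eig = [7.341, 3.423, 0.129]

# Proportion of the between-group separation carried by each dimension.
proportion = [e / sum(eig) for e in eig]

# Canonical correlation for each dimension: sqrt(lambda / (1 + lambda)).
canon_corr = [math.sqrt(e / (1 + e)) for e in eig]

# Wilks' lambda is the product of 1 / (1 + lambda).
wilks = math.prod(1 / (1 + e) for e in eig)

# Pillai's trace is the sum of lambda / (1 + lambda).
pillai = sum(e / (1 + e) for e in eig)

# The Hotelling-Lawley trace is the sum of the eigenvalues,
# and Roy's greatest root is the largest eigenvalue.
hotelling_lawley = sum(eig)
roy = max(eig)

print([round(p, 4) for p in proportion])
print([round(r, 4) for r in canon_corr])
print(round(wilks, 3), round(pillai, 3), round(hotelling_lawley, 3), roy)
```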

Predict the values of the test set (this assumes the fitted model object was saved as crabs.lda):

crabs.lda.pred <- predict(crabs.lda, crabs.test)

This creates a new data set that has the predicted sp.sex group and the probability of membership in each sp.sex group, e.g.

Groups    G1    G2    G3    G4
    G1 0.999 0.001 0.000 0.000
    G1 0.994 0.006 0.000 0.000
    G1 1.000 0.000 0.000 0.000
    G1 0.787 0.213 0.000 0.000
    G1 0.992 0.008 0.000 0.000

For each of these the predicted class is 1, and the probability of membership in each of the 4 classes is given in the four columns. For the 4th case there is some doubt whether it might be group 2, since its probability of membership in G2 is 0.213.
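The rule being applied here is simply "pick the group with the largest posterior probability". As an illustration (Python, not S-Plus; the 0.9 cut-off and the names classify and THRESHOLD are assumptions chosen for the example, not anything S-Plus reports), the sketch below applies that rule to the five rows shown and flags any case whose winning probability falls below the cut-off.

```python
# Posterior probabilities for the five test cases shown above (columns G1..G4).
posterior = [
    [0.999, 0.001, 0.000, 0.000],
    [0.994, 0.006, 0.000, 0.000],
    [1.000, 0.000, 0.000, 0.000],
    [0.787, 0.213, 0.000, 0.000],
    [0.992, 0.008, 0.000, 0.000],
]
groups = ["G1", "G2", "G3", "G4"]
THRESHOLD = 0.9  # hypothetical cut-off for calling a prediction doubtful

def classify(row):
    """Return (predicted group, its posterior probability) for one case."""
    best = max(range(len(row)), key=lambda j: row[j])
    return groups[best], row[best]

predicted = [classify(row) for row in posterior]

# Case numbers (1-based, as in the text) whose best probability is below the cut-off.
doubtful = [i + 1 for i, (_, p) in enumerate(predicted) if p < THRESHOLD]
print(predicted)
print(doubtful)
```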

These predicted classes need to be cross-tabulated with the true class to get the misclassification table. In each cell the four entries are the count, followed by the count as a proportion of the row total, of the column total, and of the overall total.

sp.sex|predicted
     |G1   |G2   |G3   |G4   |RwTt|
-----+-----+-----+-----+-----+----+
1    |10   | 0   | 0   | 0   |10  |
     |1    |0    |0    |0    |0.25|
     |0.91 |0    |0    |0    |    |
     |0.25 |0    |0    |0    |    |
-----+-----+-----+-----+-----+----+
2    | 1   | 9   | 0   | 0   |10  |
     |0.1  |0.9  |0    |0    |0.25|
     |0.091|1    |0    |0    |    |
     |0.025|0.22 |0    |0    |    |
-----+-----+-----+-----+-----+----+
3    | 0   | 0   |10   | 0   |10  |
     |0    |0    |1    |0    |0.25|
     |0    |0    |0.91 |0    |    |
     |0    |0    |0.25 |0    |    |
-----+-----+-----+-----+-----+----+
4    | 0   | 0   | 1   | 9   |10  |
     |0    |0    |0.1  |0.9  |0.25|
     |0    |0    |0.091|1    |    |
     |0    |0    |0.025|0.22 |    |
-----+-----+-----+-----+-----+----+
ClTtl|11   |9    |11   |9    |40  |
     |0.28 |0.22 |0.28 |0.22 |    |
-----+-----+-----+-----+-----+----+

This gives an error rate of 2/40 = 0.05, or 5%.
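The arithmetic is the off-diagonal count divided by the total count. A minimal Python sketch of that calculation follows, using the test-set counts from the cross-tabulation; the function names error_rate and per_group_error are assumptions made for the illustration.

```python
# Test-set counts from the cross-tabulation: rows are the true sp.sex group,
# columns are the predicted group.
confusion = [
    [10, 0, 0, 0],
    [1, 9, 0, 0],
    [0, 0, 10, 0],
    [0, 0, 1, 9],
]

def error_rate(table):
    """Overall misclassification rate: off-diagonal count / total count."""
    total = sum(sum(row) for row in table)
    correct = sum(table[i][i] for i in range(len(table)))
    return (total - correct) / total

def per_group_error(table):
    """Misclassification rate within each true group (row)."""
    return [1 - row[i] / sum(row) for i, row in enumerate(table)]

overall = error_rate(confusion)
by_group = per_group_error(confusion)
print(overall, [round(e, 2) for e in by_group])
```

The per-group rates show where the two errors fall: one true group 2 crab is assigned to G1 and one true group 4 crab is assigned to G3.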

The plot below shows the data plotted in the three discriminant coordinates. The first two coordinates appear to be sufficient to separate the four groups as well as possible. It should be possible to re-create this plot using the canonical coordinates.

[Figure: pairwise plots of the data in the discriminant coordinates dim1, dim2 and dim3, with the groups G1 to G4 marked.]

Re-creating the plots above using the canonical coordinates:

> d1<-c(1.61,0.54,0.37,-1.65,1.27)
> d2<-c(0.34,1.62,-1.29,0.72,-0.45)
> dc<-cbind(d1,d2)
> dc
        d1    d2
[1,]  1.61  0.34
[2,]  0.54  1.62
[3,]  0.37 -1.29
[4,] -1.65  0.72
[5,]  1.27 -0.45
> crabs.lda.proj<-as.matrix(australian.crabs[,5:9])%*%as.matrix(dc)
> dim(crabs.lda.proj)
> plot(crabs.lda.proj[,1],crabs.lda.proj[,2],type="n",xlab="Discrim 1",ylab="Discrim 2")
> points(crabs.lda.proj[sp.sex==1,1],crabs.lda.proj[sp.sex==1,2],pch="1")
> points(crabs.lda.proj[sp.sex==2,1],crabs.lda.proj[sp.sex==2,2],pch="2")
> points(crabs.lda.proj[sp.sex==3,1],crabs.lda.proj[sp.sex==3,2],pch="3")
> points(crabs.lda.proj[sp.sex==4,1],crabs.lda.proj[sp.sex==4,2],pch="4")

[Figure: the crabs data plotted on Discrim 1 (horizontal axis) against Discrim 2 (vertical axis), with each point drawn as its group number 1 to 4.]

Yes, this will do it.
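The matrix product in the S-Plus code is just a pair of weighted sums for each crab. As a small illustration (Python, standard library only; the helper name project is an assumption), the sketch below applies the same rounded coefficients d1 and d2 to the four training-group means from the LDA output, giving the approximate location of each group centre in the Discrim 1, Discrim 2 plane. Like the S-Plus code above, it does not centre the data first.

```python
# Rounded canonical coefficients for FL, RW, CL, CW, BD (from the text).
d1 = [1.61, 0.54, 0.37, -1.65, 1.27]
d2 = [0.34, 1.62, -1.29, 0.72, -0.45]

# Training-sample group means of FL, RW, CL, CW, BD from the LDA output.
group_means = {
    "G1": [14.53, 11.50, 31.26, 35.93, 13.05],
    "G2": [13.34, 12.18, 28.23, 32.78, 11.86],
    "G3": [16.25, 12.06, 32.98, 36.41, 14.99],
    "G4": [17.36, 14.59, 34.18, 38.50, 15.46],
}

def project(x, *directions):
    """Project one observation x onto each coefficient vector (dot products)."""
    return tuple(sum(a * b for a, b in zip(x, d)) for d in directions)

centres = {g: project(m, d1, d2) for g, m in group_means.items()}
for g, (c1, c2) in centres.items():
    print(g, round(c1, 2), round(c2, 2))
```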

Quadratic Discriminant Analysis

On the discriminant analysis control panel select Heterogeneous variance-covariance. You’ll need to use the Classical option. Here are the results.

*** Discriminant Analysis ***

Call:
discrim(structure(.Data = sp.sex ~ FL + RW + CL + CW + BD, class = "formula"),
    data = crabs.train, family = Classical(cov.structure = "heteroscedastic"),
    na.action = na.omit, prior = "proportional")

Group means:
      FL    RW    CL    CW    BD  N Priors
G1 14.53 11.50 31.26 35.93 13.05 40   0.25
G2 13.34 12.18 28.23 32.78 11.86 40   0.25
G3 16.25 12.06 32.98 36.41 14.99 40   0.25
G4 17.36 14.59 34.18 38.50 15.46 40   0.25

Covariance Structure: heteroscedastic

Group: G1
      FL   RW    CL    CW    BD
FL 10.78 6.85 24.70 28.17 10.79
RW       4.67 15.93 18.20  6.91
CL            57.01 65.02 24.82
CW                  74.29 28.36
BD                        10.94

Group: G2
     FL   RW    CL    CW    BD
FL 6.94 6.17 15.38 17.73  7.05
RW      5.72 13.87 15.96  6.37
CL           34.51 39.68 15.88
CW                 45.74 18.26
BD                        7.46

Group: G3
      FL   RW    CL    CW    BD
FL 12.67 7.85 27.35 30.08 12.69
RW       5.02 17.11 18.85  7.92
CL            59.57 65.50 27.59
CW                  72.16 30.36
BD                        12.87

Group: G4
     FL   RW    CL    CW    BD
FL 9.34 6.86 18.15 20.30  8.58
RW      5.38 13.62 15.19  6.41
CL           35.89 39.95 16.93
CW                 44.70 18.87
BD                        8.12

Constants:
     G1     G2    G3     G4
 -49.29 -18.88 -50.8 -23.74

Linear Coefficients:
       G1     G2    G3     G4
FL  27.71  8.958 13.47  2.017
RW  18.64  6.185 27.04  7.769
CL -12.90 -2.317 -4.97 -1.524
CW  -0.16 -0.922 -5.64  0.538
BD  -8.23 -4.965 -4.81 -4.432

Quadratic coefficients:

group: G1
       FL     RW     CL     CW     BD
FL -8.068 -1.759  3.187 -0.040  1.943
RW        -2.914  0.764  0.854 -0.371
CL               -5.953  4.065 -0.656
CW                      -4.446  1.801
BD                             -4.907

group: G2
       FL     RW     CL     CW     BD
FL -8.411 -1.182  1.761  2.839 -1.738
RW        -3.898  1.508  0.791 -0.701
CL               -7.173  4.175  2.103
CW                      -5.240  0.581
BD                             -3.725

group: G3
       FL     RW     CL     CW     BD
FL -4.935 -0.367  1.442  0.335  1.211
RW        -5.177  0.432  1.270 -0.373
CL               -5.100  3.248  1.586
CW                      -3.817  0.928
BD                             -6.596

group: G4
       FL     RW     CL     CW     BD
FL -4.541 -0.998  0.239  2.178  0.024
RW        -2.730  0.767  0.845 -0.355
CL               -3.525  2.150  1.495
CW                      -3.387  0.422
BD                             -3.904

Tests for Homogeneity of Covariances:
      Statistic df Pr
Box.M     226.3 45  0
adj.M     213.4 45  0

Hotelling's T Squared for Differences in Means Between Each Group:
          F df1   df2 Pr
G1-G2  34.7   5 72.39  0
G1-G3 120.0   5 73.67  0
G1-G4 120.2   5 68.19  0
G2-G3 141.4   5 70.88  0
G2-G4 119.0   5 66.20  0
G3-G4  73.7   5 66.67  0
* df2 is Yao's approximation.

Pairwise Generalized Squared Distances:
       G1    G2    G3    G4
G1   0.00 30.58 44.45 77.51
G2  14.82  0.00 47.12 31.13
G3  53.58 85.95  0.00 25.15
G4 115.39 79.19 50.00  0.00

Kolmogorov-Smirnov Test for Normality:
   Statistic Probability
FL    0.0544      0.7301
RW    0.0487      0.8419
CL    0.0429      0.9303
CW    0.0497      0.8238
BD    0.0514      0.7920

Plug-in classification table:
        G1 G2 G3 G4 Error Posterior.Error
G1      38  2  0  0 0.050          0.0949
G2       1 39  0  0 0.025          0.0348
G3       0  0 40  0 0.000         -0.0180
G4       0  0  1 39 0.025          0.0286
Overall             0.025          0.0351
(from=rows,to=columns)

Rule Mean Square Error: 0.04505
(conditioned on the training data)

Cross-validation table:
        G1 G2 G3 G4  Error Posterior.Error
G1      35  5  0  0 0.1250          0.1171
G2       3 36  0  1 0.1000          0.0484
G3       0  0 40  0 0.0000         -0.0122
G4       0  0  1 39 0.0250          0.0149
Overall             0.0625          0.0421
(from=rows,to=columns)

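For the crabs data quadratic discriminant analysis isn’t an improvement over linear discriminant analysis: its plug-in error is lower (0.025 against 0.05), but its cross-validated error is slightly higher (0.0625 against 0.0563). The test set can be predicted in the same way as for lda:

crabs.qda.pred <- predict(crabs.qda, crabs.test)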
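One way to compare the two rules is to set each rule's apparent (plug-in) error beside its cross-validated error. The sketch below (Python, illustrative only; the dictionary layout and the name optimism are assumptions) recomputes both from the classification tables in the LDA and QDA output.

```python
# Classification tables from the output (rows = true group,
# columns = predicted group), for the 160 training cases.
tables = {
    ("LDA", "plug-in"): [[36, 4, 0, 0], [2, 38, 0, 0], [0, 0, 40, 0], [0, 0, 2, 38]],
    ("LDA", "cross-validation"): [[36, 4, 0, 0], [2, 38, 0, 0], [0, 0, 40, 0], [0, 0, 3, 37]],
    ("QDA", "plug-in"): [[38, 2, 0, 0], [1, 39, 0, 0], [0, 0, 40, 0], [0, 0, 1, 39]],
    ("QDA", "cross-validation"): [[35, 5, 0, 0], [3, 36, 0, 1], [0, 0, 40, 0], [0, 0, 1, 39]],
}

def error_rate(table):
    """Off-diagonal count divided by the total count."""
    total = sum(map(sum, table))
    wrong = total - sum(table[i][i] for i in range(len(table)))
    return wrong / total

errors = {key: error_rate(t) for key, t in tables.items()}

# How much the plug-in error understates the cross-validated error.
optimism = {m: errors[(m, "cross-validation")] - errors[(m, "plug-in")]
            for m in ("LDA", "QDA")}
print(errors)
print(optimism)
```

The gap between the two estimates is larger for the quadratic rule, which is what one would expect from a model that estimates a separate covariance matrix for each group from only 40 cases.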

Classification Trees

Use the Tree, Tree Models menu items on the GUI. Use crabs.train as the data, sp.sex as the dependent variable and FL, …, BD as the independent variables. Save the model object. The fitting options provide control over the minimum number of observations allowed to split the data, the minimum number of cases in a node, and the minimum deviance allowed to add a node. On the Results tab choose full tree and save misclassification errors. On the Predict tab, use the crabs.test data, and use the response option.

*** Tree Model ***

Regression tree:
tree(formula = sp.sex ~ FL + RW + CL + CW + BD, data = crabs.train,
    na.action = na.exclude, mincut = 5, minsize = 10, mindev = 0.01)
Number of terminal nodes: 22
Residual mean deviance: 0.285 = 39.3 / 138
Distribution of residuals:
   Min. 1st Qu. Median  Mean 3rd Qu.  Max.
 -1.570   0.000  0.000 0.000   0.018 1.670

node), split, n, deviance, yval
      * denotes terminal node

1) root 160 200.0 3
  2) RW<14.35 124 100.0 2
    4) CW<36.2 83 90.0 2
      8) BD<12.15 51 40.0 2
        16) CL<26.75 37 30.0 2
          32) BD<11.15 32 20.0 2
            64) CL<22.9 20 20.0 2
              128) BD<8.95 13 5.0 2
                256) RW<8.1 7 4.0 1 *
                257) RW>8.1 6 0.0 2 *
              129) BD>8.95 7 4.0 3 *
            65) CL>22.9 12 3.0 2
              130) RW<10.55 5 0.0 1 *
              131) RW>10.55 7 0.0 2 *
          33) BD>11.15 5 1.0 3 *
        17) CL>26.75 14 3.0 2
          34) RW<11.25 7 0.0 1 *
          35) RW>11.25 7 0.0 2 *
      9) BD>12.15 32 20.0 3
        18) RW<13.1 24 10.0 3
          36) CW<33.95 18 4.0 3
            72) RW<11.7 13 0.0 3 *
            73) RW>11.7 5 0.0 4 *
          37) CW>33.95 6 3.0 2 *
        19) RW>13.1 8 0.0 4 *
    5) CW>36.2 41 40.0 2
      10) FL<17.3 26 8.0 1
        20) RW<13.65 18 5.0 1
          40) CW<38.95 10 4.0 1
            80) RW<12 5 0.0 1 *
            81) RW>12 5 3.0 2 *
          41) CW>38.95 8 0.0 1 *
        21) RW>13.65 8 0.0 2 *
      11) FL>17.3 15 20.0 2
        22) CL<39.15 9 2.0 3 *
        23) CL>39.15 6 3.0 1 *
  3) RW>14.35 36 30.0 3
    6) CW<48.85 29 20.0 4
      12) FL<17.75 7 7.0 3 *
      13) FL>17.75 22 6.0 4
        26) CW<46.35 14 0.9 4 *
        27) CW>46.35 8 4.0 3 *
    7) CW>48.85 7 8.0 3 *

[Figure: plot of the fitted tree, with the split RW<14.35 at the root and the predicted value shown at each terminal node.]

[Figure: scatterplot matrix of FL, RW, CL, CW and BD.]

Check the test error rate:

> table(crabs.test[,1],round(crabs.tree.pred$fit,digits=0))
   1 2 3 4
 1 8 0 2 0
 2 0 7 3 0
 3 2 1 7 0
 4 1 1 1 7

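This gives an error rate of (2+3+2+1+1+1+1)/40 = 11/40 = 0.275, that is, 27.5%. Trees do much worse than linear discriminant analysis on these data. Note that the output above is headed "Regression tree": sp.sex was supplied as a number, so the fitted values are averages of group codes that have to be rounded. Supplying sp.sex as a factor would fit a classification tree instead.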
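Part of the tree's poor showing may come from how its predictions are formed. With a numeric response, each terminal node predicts the mean of the group codes that fall in it, and the table above rounds that mean to the nearest integer. The toy sketch below (Python, illustrative only; the node contents are made up to show the effect and are not taken from the crabs data) contrasts a rounded mean with a majority vote.

```python
from collections import Counter

def rounded_mean(labels):
    """Prediction of a regression node after rounding to the nearest code."""
    return round(sum(labels) / len(labels))

def majority_vote(labels):
    """Prediction of a classification node: the most common group code."""
    return Counter(labels).most_common(1)[0][0]

# Hypothetical node holding four crabs from group 1 and three from group 3.
node = [1, 1, 1, 1, 3, 3, 3]

as_regression = rounded_mean(node)       # the mean is 13/7, about 1.86
as_classification = majority_vote(node)
print(as_regression, as_classification)
```

In this made-up node the rounded mean is group 2, a group that does not occur in the node at all, whereas the majority vote returns group 1.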
