The history of communications and its implications for the Internet

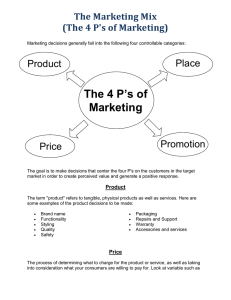
