17. Communications Biophysics

A. Signal Transmission in The Auditory System

Academic and Research Staff

Prof. N.Y.S. Kiang, Prof. W.T. Peake, Prof. W.M. Siebert, Prof. T.F. Weiss, Dr.

M.C. Brown, Dr. B. Delgutte, Dr. R.A. Eatock, Dr. D.K. Eddington, Dr. J.J.

Guinan, Jr., Dr. E.M. Keithley, Dr. J.B. Kobler, Dr. W.M. Rabinowitz, Dr. J.J.

Rosowski, J.W. Larrabee, F.J. Stefanov-Wagner, D.A. Steffens

Graduate Students

D.M. Freeman, M.L. Gifford, G. Girzon, R.C. Kidd, M.P. McCue, X-D Pang, C. Rose

17.1 Basic and Clinical Studies of the Auditory System

National Institutes of Health (Grants 2 P01 NS 13126, 5 R01 NS 18682, 5 R01 NS 20322,

1 R01 NS 20269, and 5 T32 NS 07047)

Symbion, Inc.

Studies of signal transmission in the auditory system continue in cooperation with the

Eaton-Peabody Laboratory for Auditory Physiology at the Massachusetts Eye and Ear Infirmary.

Our goals are to determine the anatomical structures and physiological mechanisms that underlie

vertebrate hearing and to apply that knowledge where possible to clinical problems. This year the

Cochlear Implant Laboratory was formed and a joint program was established with the

Massachusetts Eye and Ear Infirmary to study subjects with cochlear prostheses. The ultimate

goal of these devices is to provide speech communication for the deaf by using electrical

stimulation of intracochlear electrodes to elicit patterns of auditory nerve fiber activity that the

brain can learn to interpret.

17.1.1 Comparative Aspects of Middle-Ear Transmission

William T. Peake, John J. Rosowski

Our work on comparative aspects of signal transmission through the middle ear aims to relate

the structure of the middle ear to its function. This year we focused on the completion of a series

of papers on the middle ear of the alligator lizard.

Papers have been published on distortion products in the sound pressure in the ear canal¹ and on

nonlinear properties of the input impedance at the tympanic membrane.² The results suggest that both

nonlinear phenomena originate in the inner ear. We began to investigate the extent to which a simple

nonlinear model of the inner ear can account for these measurements.

RLE P.R. No. 127

17.1.2 Effects of Middle-Ear Muscle Contraction on Middle-Ear Transmission

Xiao-Dong Pang, William T. Peake

We aim to understand the mechanism through which middle-ear muscle contractions alter

signal transmission through the middle ear. Therefore, we have measured changes in acoustic

input admittance of the ear and the cochlear microphonic potential in response to sound stimuli

during maintained contractions of the stapedius muscle in anesthetized cats.³ Large changes in

middle ear transmission (of as much as 30 dB) occur at low frequencies, and appreciable

changes occur up to 10 kHz. For physiologically significant levels of muscle contraction, the

stapes is displaced (up to 30 µm) in a posterior direction as expected from the anatomical

orientation of the muscle tendon. During this motion the incudo-stapedial joint slides and no

motion of the incus is seen. These observations suggest that changes in the impedance of the

stapes ligament cause the change in transmission.

17.1.3 Model of the Ear of the Alligator Lizard

Dennis M. Freeman, William T. Peake, John J. Rosowski, Thomas F. Weiss

We have developed a model of the ear of the alligator lizard that relates the sound pressure at

the tympanic membrane to the receptor potential of hair cells with free-standing stereocilia. The

aim is to understand the signal processing mechanisms in this relatively simple ear, which should

help us to understand more complex ears such as those found in mammals. The model contains

stages to represent sound transmission through the middle and inner ear, micromechanical

properties of the hair-cell stereocilia, mechanoelectric transduction, and the electric properties of

hair cells. This model accounts for most of our measurements of hair-cell receptor potentials in

response to sound. During the past year we have made substantial progress in preparing this

work for publication.

Motivated by our initial theoretical studies of stereocilia mechanics, we initiated a further

theoretical study of stereociliary motion aimed at assessing the role of fluid properties and of

passive mechanical properties of stereociliary tufts in determining tuft motion.⁴

Our initial results suggest to us that the inertial forces of fluid origin are appreciable and should

not be ignored as they have been in previous studies of stereocilia mechanics.

17.1.4 Relation Between Spike Discharges of Cochlear Neurons and the Receptor Potential of Cochlear Hair Cells

Ruth A. Eatock, Christopher Rose, Thomas F. Weiss

A review of the relation of spike discharges of cochlear neurons and the receptor potential of

cochlear hair cells was published.⁵ It was concluded that while much qualitative knowledge of this

relation is available, quantitative and coordinated studies of these two signals are required to

crystallize our understanding of signal processing mechanisms in the cochlea.

In this connection, we have investigated tone-induced synchronization of the discharges of cochlear

neurons in the alligator lizard. There are two principal results: (1) with increasing frequency the

rate of loss of synchronized response of nerve fibers innervating hair cells with free-standing

stereocilia exceeds that found in the receptor potential of these hair cells so that synaptic and

excitatory mechanisms at the hair-cell neuron junction must contribute to loss of synchronization;

(2) the loss of synchronized response of cochlear neurons innervating hair cells with

free-standing stereocilia differs from that of neurons innervating hair cells covered by a tectorial

membrane. The differences in responses may well be related to distinct differences in synaptic

structure and innervation reported for these two groups of neurons.
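The report does not name the synchronization measure used. One common choice is the synchronization index (vector strength), and the following minimal sketch assumes that measure. The function name and the spike trains are hypothetical and purely illustrative.

```python
import math


def vector_strength(spike_times_s, tone_freq_hz):
    """Synchronization index of spike times relative to a tone.

    Returns a value between 0 (no phase locking) and 1 (perfect phase locking).
    """
    if not spike_times_s:
        raise ValueError("need at least one spike")
    # Map each spike time onto a phase of the stimulus cycle.
    phases = [2.0 * math.pi * tone_freq_hz * t for t in spike_times_s]
    mean_cos = sum(math.cos(p) for p in phases) / len(phases)
    mean_sin = sum(math.sin(p) for p in phases) / len(phases)
    # Length of the mean resultant vector.
    return math.hypot(mean_cos, mean_sin)


# Hypothetical spike trains for a 500 Hz tone (period 2 ms).
locked = [0.002 * k for k in range(50)]  # same phase on every cycle
spread = [0.002 * k + 0.0005 * (k % 4) for k in range(48)]  # four evenly spaced phases

vs_locked = vector_strength(locked, 500.0)
vs_spread = vector_strength(spread, 500.0)
print(vs_locked, vs_spread)
```

Computing such an index at a series of tone frequencies gives a curve whose roll-off can be compared between groups of fibers.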

17.1.5 Accuracy of Algorithms for Neural Iso-Response Measurements

Christopher Rose, Thomas F. Weiss

We investigated the accuracy of automatic algorithms, used in our laboratory and in many

others, for measurement of the frequency selectivity of cochlear neurons. We now have a much

better understanding of the limitations of such methods. The results should be of practical utility

to many investigators.

17.1.6 Coding of Speech in Discharges of Cochlear Nerve Fibers

Bertrand Delgutte, Nelson Y.S. Kiang

We aim to understand the coding of speech in the discharges of cochlear nerve fibers. During

this year, we began to examine the role of the mechanisms responsible for two-tone rate

suppression in speech coding in cochlear nerve fibers of anesthetized cats. We developed an

automatic method to measure iso-suppression contours. Also, we focused on the relation between

responses of cochlear nerve fibers and intensity discrimination. We have, in effect, measured an

analog of intensity difference limens for single auditory nerve fibers. For stimulus

levels within the dynamic range of the fiber, these "single-fiber limens" are commensurate with

behavioral difference limens in humans.

17.1.7 Structural and Functional Studies of the Spiral Ganglion

M. Christian Brown, Nelson Y.S. Kiang

The mammalian cochlea contains two types of afferent and two types of efferent neurons. We

aim to understand the structure and function of these neurons. During the past year we examined

the peripheral terminations of fibers emanating from the spiral ganglion of the guinea pig.

Extracellular injections of horseradish peroxidase in the ganglion labelled several types of fibers

in the cochlea.⁶ Presumed afferent fibers, believed to be the peripheral portions of spiral ganglion

cells, fell into two classes: radial fibers and outer spiral fibers. Radial fibers, believed to be the

peripheral processes of type I spiral ganglion cells, sent myelinated peripheral fibers to one or

two inner hair cells. Outer spiral fibers, believed to be the peripheral processes of type II spiral

ganglion cells, sent thin, unmyelinated axons to spiral basally within the outer spiral bundles and

innervate several outer hair cells, usually within a single row. Presumed efferent fibers coursed in

the intraganglionic spiral bundle and innervated the organ of Corti. These fibers fell into two

classes, thick and thin efferents. Thick efferents innervated outer hair cells and could be either

unmyelinated or myelinated in the osseous spiral lamina. Thin efferents innervated areas near

inner hair cells and had thin, unmyelinated axons.

No examples of fibers were found which innervated regions near both inner and outer hair cells.

Thus, as with the afferent fibers, there

appears to be a separation of efferent fibers according to their terminations within the organ of

Corti.

In recordings from the spiral ganglion with glass micropipettes, two physiological classes of

units which respond to sound have been observed. One class responds only to sound in the

ipsilateral ear, and has sound-induced and spontaneous discharge characteristics that are

similar to those of units recorded in the VIIIth cranial nerve. Hence, we assume that these

originate from type I afferents. In addition, for three of these units, intracellular injections of

horseradish peroxidase have marked type I afferents. A second class of units can be recorded at

the peripheral side of the ganglion. These units respond preferentially to sound in either the

ipsilateral or the contralateral ear, have responses with long latencies (5 to 100 msec) which

depend on sound-pressure level, have either no spontaneous spike discharges or regular

spontaneous spike discharges, and have a wide range of characteristic frequencies (0.34 to 14

kHz). We assume these units originate from the thick efferents because of the similarity of their

characteristics to identified efferent fibers recorded near the vestibulocochlear anastomosis in

the cat.⁷ In addition, two such units in the spiral ganglion of the guinea pig (ipsilaterally excited)

have been labeled and classified anatomically as thick efferents. To date, no class of units which

might correspond to the type II afferent neurons has been observed among the units which

respond to sound in the spiral ganglion.

17.1.8 Middle-Ear Muscle Reflex

John J. Guinan, James B. Kobler, Michael P. McCue

We aim to determine the structural and functional basis of the middle-ear muscle reflex. We

have labelled stapedius motoneurons following large injections of horseradish peroxidase (HRP)

in the stapedius muscle.⁸ The most important conclusion of this work is that the stapedius

muscle, the smallest muscle in the body, is innervated by an extraordinarily large number of

motoneurons (almost 1200 in the cat).

For some muscles that are innervated by multiple nerve fascicles, the central pattern of

innervating motoneurons differs for different fascicles. To see if this applies to the stapedius

muscle, injections of HRP have been made in single fascicles of the stapedius nerve at the point

where the nerve enters the muscle. In each case, retrograde labelling of stapedius motoneurons

was found in each of the four major anatomically-defined stapedius motoneuron groups. This

result suggests that each of these groups projects relatively uniformly throughout the stapedius

muscle.

Manuscripts in preparation describe changes in sound-induced stapedius electromyographic

responses following cuts of the facial genu or lesions around the facial motor nucleus.

The results show that even though stapedius motoneurons form a single motoneuron pool, their

recruitment order is not determined by a single size-principle. Thus, the stapedius motoneuron

pool does not fit textbook descriptions of the organization of motoneuron pools.

We have developed techniques for recording from single stapedius motoneurons. We use

electrolyte-filled pipets placed in stapedial-nerve fascicles distal to their junction with the facial

nerve in cats paralyzed with Flaxedil. We have studied approximately 100 stapedius nerve fibers,

all of which responded to sound with high thresholds, typically 90-100 dB SPL, and broad tuning

curves with a minimum threshold in the 1-2 kHz range.

Half of the stapedius nerve fibers

responded to tones in either or both ears, 15% responded to binaural tones but not to monaural

tones (up to 115 dB SPL), 17% responded to ipsilateral tones but not to contralateral tones, and

18% responded to contralateral tones but not to ipsilateral tones. These results are consistent

with the electromyographic results and strengthen the conclusion that there are monaural

stapedius motoneurons which respond to either ipsilateral or contralateral sound but not both.

Therefore the response properties of the stapedius motoneuron pool cannot be described by

applying the size principle to the pool as a whole (if the size principle applies at all).

17.1.9 Cochlear Efferent System

Margaret L. Gifford, John J. Guinan, William M. Rabinowitz

There are two groups of olivocochlear neurons that give rise to efferent fibers that innervate the

cochlea: medial and lateral olivocochlear neurons. Our aim is to understand the physiological

effects of activation of these two groups. We have completed a study of the effects on cochlear

nerve fibers of electrical stimulation in the region of the medial olivocochlear neurons.⁹

In order to examine the mechanical effects of stimulating cochlear efferents in the cat, we

developed a system for recording small changes in ear-canal acoustic impedance. This

impedance depends on the impedance of the tympanic membrane and on the impedance of the

cochlea. Electrical stimulation of olivocochlear efferents at the floor of the fourth ventricle

changes the ear-canal impedance by as much as 7%. This impedance change is greatest for

low-level sounds and decreases for high-level sounds in a manner reminiscent of the effects of

efferent stimulation on auditory-nerve discharge rates. The impedance change can be either

positive or negative and is a strong function of frequency. Since these impedance changes occur

in animals with the middle-ear muscles cut and disappear after the olivocochlear fibers are

severed at the lateral edge of the fourth ventricle, they appear to be due to mechanical changes

within the cochlea induced by the efferent fibers.

The function of the olivocochlear bundle (OCB) in man is unknown. We have an opportunity to

directly examine OCB effects in man by virtue of two circumstances. (1) As the OCB fibers exit

the brainstem, they course initially within the vestibular nerve and later they separate to enter the

cochlea. (2) To relieve incapacitating vertigo, some patients will undergo a unilateral

vestibular nerve transection (performed by Dr. J.B. Nadol at the Massachusetts Eye and Ear

Infirmary).

The procedure should, unavoidably, result in complete unilateral OCB transection

and, ideally, no change in afferent auditory function. Such patients are particularly appropriate

for the study of OCB effects because the transection is unilateral and the subjects will be available

pre- and post-operatively; hence, each subject can serve as his/her own control.

We have

developed a variety of behavioral and electrophysiological tests designed to elucidate possible

OCB effects for use with these patients. Six patients have been tested pre-operatively and four

have been tested post-operatively (at 3 months). During the next year, we shall obtain results at

9-12 months post-op for these six patients, and evaluate the results.

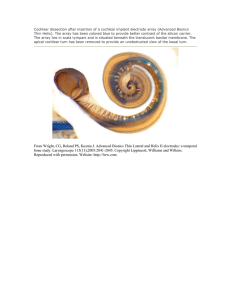

17.1.10 Cochlear Implants

Donald K. Eddington, Gary Girzon

During this year we obtained funds for and equipped the Cochlear Implant Laboratory. We also

made significant progress on two projects. First, we initiated a project to develop a computer

model of the mammalian ear to display the activity patterns of auditory nerve fibers as a function

of characteristic frequency and time for arbitrary acoustic waveforms. These "neurograms" will

be studied to identify features of the activity patterns that cochlear implant systems should

produce for speech recognition. An initial model has been designed and the three-dimensional

graphics software needed to display the information has been implemented. Second, our first subject

received a six-electrode implant at the Massachusetts Eye and Ear Infirmary and initial testing

shows that the relative pitch of the percepts elicited by electrical stimulation of these electrodes is

consistent with their tonotopic placement.

References

1. J.J. Rosowski, W.T. Peake, and J.R. White, "Cochlear Nonlinearities Inferred from Two-Tone

Distortion Products in the Ear Canal of the Alligator Lizard," Hearing Res. 13, 141-158

(1984).

2. J.J. Rosowski, W.T. Peake, and T.J. Lynch, III, "Acoustic Input-Impedance of the

Alligator-Lizard Ear: Nonlinear Features," Hearing Res. 16, 205-223 (1984).

3. X.D. Pang and W.T. Peake, "Effects of Stapedius Muscle Contraction on Stapes and Incus

Position and on Acoustic Transmission in the Cat," Abstr. Eighth Midwinter Res. Meeting,

Assoc. Res. Otolaryngol., Clearwater, Fla., Feb. 3-7, 1985, p. 72.

4. T.F. Weiss and D.M. Freeman, "Theoretical Studies of Frequency Selectivity, Tonotopic

Organization, and Compressive Nonlinearity of Stereociliary Tuft Motion in the Alligator

Lizard Cochlea," Abstr. Eighth Midwinter Res. Meeting, Assoc. Res. Otolaryngol.,

Clearwater, Fla., Feb. 3-7, 1985, pp. 177-178.

5. T.F. Weiss, "Relation of Receptor Potentials of Cochlear Hair Cells to Spike Discharges of

Cochlear Neurons," Ann. Rev. Physiol. 46, 247-259 (1984).

6. M.C. Brown, "Peripheral Projections of Labeled Efferent Nerve Fibers in the Guinea Pig

Cochlea: An Anatomical Study," Abstr. Eighth Midwinter Res. Meeting, Assoc. Res.

Otolaryngol., Clearwater, Fla., Feb. 3-7, 1985, pp. 9-10.

7. M.C. Liberman and M.C. Brown, "Intracellular Labeling of Olivocochlear Efferents near the

Anastomosis of Oort in Cats," Abstr. Eighth Midwinter Res. Meeting, Assoc. Res.

Otolaryngol., Clearwater, Fla., Feb. 3-7, 1985, pp. 13-14.

8. M.P. Joseph, J.J. Guinan, Jr., B.C. Fullerton, B.E. Norris, and N.Y.S. Kiang, "Number and

Distribution of Stapedius Motoneurons in Cats," J. Comp. Neurol. 232, 43-54 (1985).

9. M.L. Gifford, "The Effects of Selective Stimulation of Medial Olivocochlear Neurons on Auditory

Nerve Responses to Sound in the Cat," Ph.D. Thesis, Department of Electrical

Engineering and Computer Science, M.I.T., 1984.

B. Auditory Psychophysics and Aids for the Deaf

Academic and Research Staff

L.D. Braida, H.S. Colburn, L.A. Delhorne, N.I. Durlach, K.J. Gabriel, D. Ketten,

J. Koehnke, N. Macmillan, W.M. Rabinowitz, C.M. Reed, M.C. Schultz, V.W.

Zue, P.M. Zurek

Graduate Students

C.A. Bickley, D.K. Bustamante, C.R. Corbett, M.M. Downs, R. Goldberg, Y. Ito,

M. Jain, D.F. Leotta, M.V. McConnell, R.C. Pearsall II, J.C. Pemberton, P.M.

Peterson, M.P. Posen, K.A. Silletto, R.C. Thayer, S. Thomson, M.J. Tsuk, R.M. Uchanski

17.2 Binaural Hearing

National Institutes of Health (Grant 5 R01 NS10916)

H. Steven Colburn, Nathaniel I. Durlach, Kaigham J. Gabriel, Manoj Jain, Janet Koehnke, Patrick

M. Zurek

Research on binaural hearing continues to involve i) theoretical work, ii) experimental work, iii)

development of facilities, and iv) preparation of results for publication.

i) In the neural modeling area, we have developed some simple models for neural structures that

could be related to available results from the lateral and medial superior olivary regions. During

the past year, we have simulated the behavior of the model neurons and found substantial

consistency with the physiological data.

We have also continued to develop our psychophysical model. This model, which is a

refinement of previous models discussed in the literature, is being applied to results from both

normal and hearing-impaired listeners with encouraging results. The binaural component of the

model postulates the following operations: peripheral critical-band filtering, estimation of the

interaural time difference as a function of time, estimation of the interaural intensity difference as

a function of time, a combination of these estimates through interaural time-intensity trading, and

temporal averaging of the composite interaural cue. (The temporal averaging is a critical element

for the ability to predict interaural time and intensity discrimination and binaural detection with a

common model.)
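The operations listed for the binaural component can be sketched in a few lines, under strong simplifying assumptions that are not taken from the report: the inputs are assumed to be already band-limited (the critical-band filtering stage is omitted), the interaural time difference is taken as the lag of the cross-correlation peak in each frame, trading is a linear sum with a hypothetical ratio `trade_s_per_db`, and temporal averaging is a plain mean over frames. All names and parameter values are illustrative.

```python
import math
import random


def frame_itd_s(left, right, fs_hz, max_lag):
    """ITD for one frame: lag (in seconds) that maximizes the cross-correlation.

    Positive values mean the right-ear signal lags the left-ear signal.
    """
    n = len(left)
    best_lag, best_val = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        # Only use index pairs that stay inside the frame.
        val = sum(left[i] * right[i + lag]
                  for i in range(max(0, -lag), min(n, n - lag)))
        if val > best_val:
            best_lag, best_val = lag, val
    return best_lag / fs_hz


def frame_iid_db(left, right):
    """IID for one frame: left-minus-right level difference in dB.

    Assumes both frames have nonzero power.
    """
    p_left = sum(x * x for x in left)
    p_right = sum(x * x for x in right)
    return 10.0 * math.log10(p_left / p_right)


def composite_cue(left, right, fs_hz, frame_len, max_lag, trade_s_per_db):
    """Trade IID into equivalent time per frame, then average over frames."""
    cues = []
    for start in range(0, len(left) - frame_len + 1, frame_len):
        l = left[start:start + frame_len]
        r = right[start:start + frame_len]
        itd = frame_itd_s(l, r, fs_hz, max_lag)
        iid = frame_iid_db(l, r)
        cues.append(itd + trade_s_per_db * iid)  # time-intensity trading
    return sum(cues) / len(cues)                 # temporal averaging


# Hypothetical stimulus: right ear is the left-ear noise, delayed by 3 samples
# and attenuated by a factor of 0.5 in amplitude (about 6 dB).
rng = random.Random(1)
fs_hz = 10000.0
left = [rng.gauss(0.0, 1.0) for _ in range(2000)]
delay = 3
right = [0.0] * delay + [0.5 * x for x in left[:-delay]]

cue = composite_cue(left, right, fs_hz, frame_len=500, max_lag=10,
                    trade_s_per_db=20e-6)
print(cue)
```

In this toy case both cues point to the same side, so the composite cue is larger than the time difference alone; a discrimination prediction would additionally need an internal-noise assumption, which is not sketched here.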

Further theoretical work has been concerned with the development of a quantitative model of

the effects of a conductive hearing loss on binaural interaction.

This model proposes that

bone-conducted signals are abnormally large relative to air-conducted components in cases of

severe conductive impairment, resulting in a loss of cochlear isolation. Predictions of this model

for interaural discriminations are made after specifying two parameters (cross-over attenuation

and delay). This model predicts one of the more curious results of our experimental study - that

interaural time jnd's are enlarged by conductive loss but interaural amplitude jnd's are not. A

manuscript on this topic is being prepared for publication (Zurek, 1985).

ii) We have completed three experimental studies of binaural interaction. The first is a study of

the dependence of the masking level difference on bandwidth. In addition to a complete set of

NoSo and NoSpi thresholds at 250 and 4000 Hz as a function of masker bandwidth, interaural

amplitude and phase jnd's and homophasic amplitude jnd's have been measured. The jnd's allow

us to test our current model of binaural interaction against this broad data set. A manuscript

describing this work is nearing completion (Zurek et al., 1985).

The second is a study of interaural correlation and binaural detection at fixed bandwidth

(Koehnke et al., 1985).

Psychometric functions for NoSo, NoSpi, interaural correlation

discrimination, interaural time discrimination, interaural intensity discrimination, and monaural

intensity discrimination were measured for four normal subjects. Results demonstrate, among

other things, that NoSpi detection and interaural correlation discrimination are closely related:

when correlation is expressed as the equivalent signal-to-noise ratio, the psychometric functions

for the two tasks are essentially identical.
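One standard way to express an NoSpi condition as an interaural correlation, which may or may not be exactly the mapping used in the study, assumes that the signal is uncorrelated with the noise and is added to the noise in one ear and subtracted from it in the other. With signal power S and noise power N, the normalized interaural correlation is then (N - S)/(N + S). The function names below are hypothetical.

```python
import math


def nospi_correlation(snr_db):
    """Interaural correlation of an NoSpi stimulus at a given S/N (in dB)."""
    snr = 10.0 ** (snr_db / 10.0)      # power ratio S/N
    return (1.0 - snr) / (1.0 + snr)   # (N - S) / (N + S), divided through by N


def equivalent_snr_db(rho):
    """S/N (in dB) of the NoSpi stimulus with interaural correlation rho (-1 < rho < 1)."""
    return 10.0 * math.log10((1.0 - rho) / (1.0 + rho))


for snr_db in (-20.0, -10.0, 0.0):
    rho = nospi_correlation(snr_db)
    print(snr_db, rho, equivalent_snr_db(rho))
```

Under this mapping a small signal corresponds to a correlation just below 1, so detecting the signal and discriminating the correlation from 1 can be plotted on a common axis.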

The third experimental study, again concerned with detection and interaural correlation

discrimination (Jain et al., 1985), focused on issues related to the differences in bandwidth

dependence between the two tasks. Specifically, we measured a set of cases that included

interaural correlation discrimination for a narrowband noise stimulus in the presence of a spectral

fringe of noise with fixed correlation.

The results of this experiment, also consistent with the

model, show that wideband detection and fringe correlation are compatible in the sense

described in the preceding paragraph.

Additional experimental studies were undertaken to examine the feasibility of achieving

supernormal binaural hearing (in localization and detection) through new types of signal

processing.

Specifically, we have initiated studies of signal processing to simulate a greater

headwidth (by magnifying interaural differences) and also to slow down the rapid interaural

fluctuations that occur at high frequencies in detection (by employing a comb filter to transform

critical band noise to narrowband noise at these frequencies). Further work on these techniques

is planned for the coming year.

iii) Work on laboratory facilities has included further development of sophisticated D/A

equipment for the main computer in the laboratory (VAX 11/750) and implementation of a

waveform generation package on the stand-alone LSI-11/23 system. The waveform-generation

package allows the generation of waveforms of arbitrary spectral content, phase relation and

correlation.
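The report gives no details of the waveform-generation package. As an illustration of the correlation control alone, the following sketch uses the familiar mixing approach: two independent Gaussian noises are combined so that the pair has a specified correlation. It is not the laboratory's implementation, spectral shaping and phase control are omitted, and all names are hypothetical.

```python
import math
import random


def correlated_noise_pair(n_samples, rho, seed=0):
    """Return two unit-variance Gaussian noises with expected correlation rho."""
    rng = random.Random(seed)
    a = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    b = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    w = math.sqrt(1.0 - rho * rho)
    left = a
    # Mixing keeps unit variance and sets the correlation with `a` to rho.
    right = [rho * x + w * y for x, y in zip(a, b)]
    return left, right


def sample_correlation(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((u - mx) * (v - my) for u, v in zip(x, y))
    sxx = sum((u - mx) ** 2 for u in x)
    syy = sum((v - my) ** 2 for v in y)
    return sxy / math.sqrt(sxx * syy)


left, right = correlated_noise_pair(20000, 0.8, seed=1)
r = sample_correlation(left, right)
print(r)
```

The sample correlation of a finite waveform only approximates the target value, which matters when short stimuli are used.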

iv) The following articles have been submitted for publication: Colburn et al., 1985; Gabriel, 1985;

Gabriel and Durlach, 1985; Koehnke et al., 1985; Siegel and Colburn, 1985; Stern and Colburn, 1985;

Zurek, 1985 a,b,c; Zurek et al., 1985.

Publications

Colburn, H.S., P.M. Zurek, and N.I. Durlach, in W. Yost and G. Gourevitch (Eds.), "Directional

Hearing in Relation to Hearing Impairments and Aids," Chapter in Directional Hearing,

(1985), accepted for publication.

Gabriel, K.J. and N.I. Durlach, "Interaural Correlation Discrimination. II. Relation to Binaural

Unmasking," submitted for publication.

Gabriel, K.J., "Sequential Stopping Rules for Psychophysical Experiments," J. Acoust. Soc. Am.,

submitted for publication.

Jain, M., J.R. Gallagher, and H.S. Colburn, "Interaural Correlation Discrimination in the Presence

of a Spectral Fringe," in preparation.

Koehnke, J., H.S. Colburn, and N.I. Durlach, "Performance in Several Binaural-Interaction

Experiments," J. Acoust. Soc. Am., submitted for publication.

Siegel, R.A. and H.S. Colburn, "Binaural Detection with Reproducible Noise Maskers," J. Acoust.

Soc. Am., submitted for publication.

Stern, R.M., Jr. and H.S. Colburn, "Lateral-Position Based Models of Interaural Discrimination,"

J. Acoust. Soc. Am., accepted for publication.

Zurek, P.M., "The Precedence Effect," in W. Yost and G. Gourevitch (Eds.), Chapter in Directional

Hearing, accepted for publication.

Zurek, P.M., "A Predictive Model for Binaural Advantages and Directional Effects in Speech

Intelligibility," J. Acoust. Soc. Am., submitted for publication.

Zurek, P.M., "Analysis of the Consequences of Conductive Hearing Impairment for Binaural

Hearing," in preparation.

Zurek, P.M., N.I. Durlach, K.J. Gabriel, and H.S. Colburn, "Masker-Bandwidth Dependence in

Homophasic and Antiphasic Detection," in preparation.

17.3 Discrimination of Spectral Shape

National Institutes of Health (Grant 1 R01 NS 16917)

Louis D. Braida, Lorraine A. Delhorne, Nathaniel I. Durlach, Yoshiko Ito, Charlotte M. Reed,

Patrick M. Zurek

This research involves experimental and theoretical work on the ability of listeners with normal

and impaired hearing to discriminate broadband, continuous, speechlike spectra on the basis of

spectral shape.

The stimuli consist of Gaussian-noise simulations of steady state unvoiced

fricatives (/f, sh, s/) and the burst portion of unvoiced plosives (/p, t, k/), partially masked by a

Gaussian-noise simulation of cafeteria-voice babble.

The basic paradigm employed is symmetric, two-interval, forced-choice discrimination with

trial-by-trial feedback. To prevent

listeners from basing judgements on loudness cues, the overall level of the stimulus is roved

between presentation intervals.

The data are then summarized in terms of psychometric

functions showing the dependence of sensitivity (d') on signal-to-babble ratio (S/B).
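As a reminder of how a sensitivity index of this kind is usually obtained, the sketch below computes d' from a hit rate and a false-alarm rate using the standard equal-variance Gaussian assumption. The report does not give its exact formula, scaling conventions for two-interval tasks differ between authors, and the response rates shown are hypothetical.

```python
from statistics import NormalDist


def dprime(hit_rate, false_alarm_rate):
    """Equal-variance Gaussian sensitivity index: z(H) - z(F).

    Both rates must lie strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


# Hypothetical data: P("order AB" | AB presented) and P("order AB" | BA presented).
d = dprime(0.84, 0.16)
print(d)
```

Repeating the calculation at several signal-to-babble ratios and plotting d' against S/B in dB gives a psychometric function whose slope has units of d'/dB.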

Data have been collected on normal listeners for the three pairs of fricatives and the three pairs

of plosives, for various roving-level ranges, and for various stimulus durations. Overall sensitivity

is essentially unaffected by roving-level: the average d' is roughly the same for a 60 dB rove and

a 0 dB rove. Furthermore, the dependence of sensitivity and response bias on level-pair within

the 60 dB rove is minimal. In general, the psychometric functions are very shallow: their slopes

are in the range 0.1-0.2 d'/dB.

Also, the dependence on duration shows strong integration

effects: the value of S/B corresponding to d' = 1 changes by roughly 20 dB as the duration

changes from 10 msec to 300 msec.

Data have also been collected on two listeners with sensorineural impairments. The results for

these listeners fall within the range of results obtained on the normals with one major exception:

performance is exceptionally poor when a long stimulus duration is combined with a large rove.

In other words, at long stimulus durations (but not short durations), roving-level has a strong

abnormal negative effect.

Alternatively, when the level is roved (but not when it is held fixed),

performance at long durations fails to show the normal improvements over performance at short

durations (i.e., the normal integration effect does not appear). Auxiliary experiments are now

being performed to explore these results further.

Theoretical work has focused on the computation of optimum performance for roving-level

spectral-shape discrimination,¹ on models of central processing for discrimination of broadband

signals, and on the application of a specific black-box model of spectral-shape discrimination to

the above-mentioned data and to data in the literature.

The model that is currently being applied to the data includes a peripheral processor that

derives an internal representation of the stimulus spectrum and a central processor that relates

the internal spectrum to the performance of the listener. The peripheral processor consists of a

bank of N filters, a level estimator that squares and integrates each filter output, and a logarithmic

transformer. Each level estimate is assumed to be corrupted by an additive internal noise. The

central processor consists of an ideal processor that operates on the N-dimensional random

vector whose components are the corrupted level estimates. It is assumed that the N additive

internal noises are independent identically distributed zero-mean Gaussian random variables.

For a given choice of filters and integration times, the model has one free parameter: the variance

(K) of the Gaussian noise. We have previously reported (RLE Progress Report No. 126) that

predictions of the model were in qualitative agreement with our own data on spectral-shape

discrimination, although the best value of K for each prediction varied. We recently applied the

model to published data on critical bandwidths,² intensity discrimination,³ and frequency

discrimination.⁴ The predictions were in qualitative but not quantitative agreement with the data.

Current work is concerned with incorporating into this model improved estimates of internal filters

and integration times.

We are also studying the effects of correlation among the noise

components in different frequency channels.
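For independent, equal-variance Gaussian noise on each channel level, an ideal processor's sensitivity to two known spectra reduces to the Euclidean distance between their level profiles divided by the noise standard deviation. The sketch below illustrates this under assumptions that go beyond the report: levels are given directly in dB per channel (the filters and integrators are not modeled), d' refers to a single observation, and roving is treated as the limiting case in which overall level carries no information, so only the mean-removed profile counts. The names and numbers are hypothetical.

```python
import math


def ideal_dprime(levels_a_db, levels_b_db, noise_variance_k, level_roved=False):
    """Ideal-observer d' for two N-channel level profiles (in dB).

    noise_variance_k is the variance K of the additive internal noise
    on each channel's level estimate.
    """
    diffs = [a - b for a, b in zip(levels_a_db, levels_b_db)]
    if level_roved:
        # Overall level is uninformative: discard the common (mean) difference.
        mean_diff = sum(diffs) / len(diffs)
        diffs = [d - mean_diff for d in diffs]
    return math.sqrt(sum(d * d for d in diffs) / noise_variance_k)


# Hypothetical four-channel profiles.
spectrum_a = [60.0, 55.0, 50.0, 45.0]
spectrum_b = [57.0, 55.0, 53.0, 45.0]   # differs from A in shape
spectrum_c = [58.0, 53.0, 48.0, 43.0]   # A shifted down by 2 dB, same shape

print(ideal_dprime(spectrum_a, spectrum_b, noise_variance_k=2.0))
print(ideal_dprime(spectrum_a, spectrum_c, noise_variance_k=4.0))
print(ideal_dprime(spectrum_a, spectrum_c, noise_variance_k=4.0, level_roved=True))
```

Because d' scales as one over the square root of K, fitting K to data amounts to a vertical scaling of the predicted psychometric function; correlated channel noise would require replacing the plain sum of squares with a covariance-weighted distance.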

References

1. L.D. Braida, Y. Ito, C.M. Reed, N.I. Durlach, "Optimum Central Processor for Spectral-Shape

Discrimination," J. Acoust. Soc. Am. Suppl. 1, 77, S80 (1985).

2. C.M. Reed and R.C. Bilger, "A Comparative Study of S/N and E/N," J. Acoust. Soc. Am. 53,

1039-1044 (1973).

3. W. Jesteadt, C.C. Wier, and D.M. Green, "Intensity Discrimination as a Function of Frequency

and Sensation Level," J. Acoust. Soc. Am. 61, 169-177 (1977).

4. C.C. Wier, W. Jesteadt, and D.M. Green, "Frequency Discrimination as a Function of Frequency

and Sensation Level," J. Acoust. Soc. Am. 61, 178-184 (1977).

17.4 Role of Anchors in Perception

National Science Foundation (Grants BNS83-19874 and BNS83-19887)

Louis D. Braida, Nathaniel I. Durlach, Rina Goldberg, Neil A. Macmillan, Michael J. Tsuk

This research seeks to provide a unified theory for identification and discrimination of stimuli

that are perceptually one-dimensional.

During this period, we have i) begun to extend our

existing theory, developed for intensity perception, to other continua, ii) conducted experiments

using two such continua, and iii) devised psychophysical methods appropriate for large groups of

experimental subjects, each of whom serves only briefly.

i) Our current theory of intensity perception 1 postulates perceptual anchors near the extremes

of the stimulus range.

Observers judge stimuli by measuring the perceptual distance from a

sensation to the anchors. The model accounts for the increase in sensitivity observed near the

edges of the range in intensity identification.

For many other continua, especially those which are "categorically" perceived, sensitivity

reaches a peak in the interior of the stimulus range. If such peaks reflect regions of natural high

sensitivity, any resolution task including fixed discrimination is predicted to show a peak.

If

instead they reflect central anchors, identification and roving discrimination are predicted to show

sharper peaks than fixed discrimination.

Macmillan2,3 has demonstrated that some peaks

reported in the literature are of each type. We are currently developing a quantitative version of

the model for continua with central anchors.

ii) We have applied the anchor model to a continuum of synthetic, steady-state vowels 4 in the

range /i - I - e/. Resolution was measured in four discrimination conditions (two-interval

forced-choice and same-different, fixed and roving discrimination), and in identification

conditions with and without a standard. In identification and roving discrimination, resolution was

poorer than in fixed discrimination, but not uniformly so: sensitivity differences among tasks were

smallest near category boundaries. Presentation of a standard improved performance near the

standard, especially in the /i/

region, but lowered resolution for stimuli far from the standard.

These results are qualitatively consistent with the anchor model.


Tsuk 5 studied identification of lateralized noise bursts with and without standards. Presence of

a standard improved performance, but not in the region of the standard.

iii) In this research, the amount of data gathered in a specific condition on one day is often

small; sensitivity estimates are obtained by pooling data across days or subjects. In future work,

we plan to study the way in which anchors develop, and the need to pool data will be even more

severe.

We have therefore compared the statistical properties of sensitivity estimates (d')

obtained by pooling data with those obtained by averaging sensitivities. Although pooling data

introduces a slight bias, it is statistically more efficient than averaging. A paper reporting this

work has been accepted for publication.6
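The two estimation procedures compared above can be sketched as follows, assuming a yes-no task in which d' is the difference between the z-transformed hit and false-alarm rates. The function names and the response counts are hypothetical illustrations, not data from this research.

```python
from statistics import NormalDist, mean

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def dprime(hit_rate, false_alarm_rate):
    """Yes-no sensitivity; both rates must lie strictly between 0 and 1."""
    return _z(hit_rate) - _z(false_alarm_rate)


def pooled_dprime(counts):
    """Pool raw counts across subjects (or days) before computing d'.

    counts: list of (hits, signal_trials, false_alarms, noise_trials).
    """
    hits = sum(c[0] for c in counts)
    signal_trials = sum(c[1] for c in counts)
    false_alarms = sum(c[2] for c in counts)
    noise_trials = sum(c[3] for c in counts)
    return dprime(hits / signal_trials, false_alarms / noise_trials)


def averaged_dprime(counts):
    """Compute d' separately for each subject (or day), then average."""
    return mean([dprime(h / ns, f / nn) for h, ns, f, nn in counts])


# Hypothetical counts for two observers with similar sensitivity
# but different response criteria.
data = [(80, 100, 20, 100), (95, 100, 50, 100)]
pooled = pooled_dprime(data)
averaged = averaged_dprime(data)
```

With these made-up counts the pooled estimate is smaller than the averaged one, which illustrates the kind of bias that pooling across observers with different criteria can introduce. Observers with very few trials may produce rates of 0 or 1, for which the per-observer d' is undefined; pooling avoids that case more often.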

References

1. L.D. Braida, J.S. Lim, J.E. Berliner, N.I. Durlach, W.M. Rabinowitz, and S.R. Purks, "Intensity

Perception. XIII. Perceptual Anchor Model of Context-Coding," J. Acoust. Soc. Am. 76,

722-731 (1984).

2. N.A. Macmillan, "Memory Modes and Perceptual Anchors in Categorical Perception," Paper

presented at Acoust. Soc. Am., Norfolk, Virginia (1984); abstract in J. Acoust. Soc. Am.

75, Suppl. 1, S66 (1984).

3. N.A. Macmillan, "Beyond the Categorical/Continuous Distinction: A Psychophysical Approach

to Processing Modes," in S. Harnad (Ed.), Categorical Perception, accepted for

publication.

4. R. Goldberg, N.A. Macmillan, and L.D. Braida, "A Perceptual-Anchor Interpretation of

Categorical Perception on a Vowel Continuum," Paper presented at Acoust. Soc. Am.,

Austin, Texas (1985).

5. M.J. Tsuk, "An Objective Experiment to Measure Lateralization," S.B. Thesis, Department of

Electrical Engineering and Computer Science, M.I.T., 1984.

6. N.A. Macmillan and H.L. Kaplan, "Detection Theory Analysis of Group Data: Estimating

Sensitivity from Average Hit and False Alarm Rates," Psychological Bulletin, accepted for

publication.

17.5 Hearing Aid Research

National Institutes of Health (Grant 5 O 1 NS 12846)

Louis D. Braida, Diane K. Bustamante, Lorraine A. Delhorne, Nathaniel I. Durlach, Miles P. Posen,

Charlotte M. Reed, Karen Silletto, Rosalie M. Uchanski, Victor W. Zue, Patrick M. Zurek

This research is directed toward improving hearing aids for persons with sensorineural hearing

impairments. We intend to develop improved aids and to obtain fundamental understanding of

the limitations on such aids. The work includes studies of i) effects of noise on intelligibility, ii)

amplitude compression, iii) frequency lowering, and iv) clear speech.

i) This project is concerned with the deleterious effects of noise on speech reception by the

hearing-impaired. In particular, we are trying to determine the extent to which this extra difficulty

in noise reflects aspects of hearing impairment beyond loss of sensitivity. To obtain insight into


this problem we have measured monaural speech intelligibility for CV syllables presented in a

background of Gaussian noise with the spectral shape of babble. Speech and noise are added

prior to spectral shaping with either a flat or a rising frequency-gain characteristic. Over the past

year our sample has been increased to seventeen ears of twelve hearing-impaired subjects with

roughly 30-60 dB losses and a wide variety of audiometric configurations. The results have been

analyzed in terms of a model that represents the hearing loss as an equivalent

additive noise1 and uses Articulation Theory to predict intelligibility. Plots of consonant

identification score versus Articulation Index for the conditions tested continue to show a strong

degree of overlap. If conditions yielding approximately constant Articulation Index are plotted

versus average hearing loss (average at 0.5, 1.0, and 2.0 kHz), intelligibility scores from these

conditions and the various subjects are roughly constant, independent of hearing loss.

ii) Many sensorineural hearing impairments are characterized by reduced dynamic range and

abnormally rapid growth of loudness. Multiband amplitude compression has been suggested to

improve speech reception for listeners with such impairments, but the intelligibility advantages

associated with multiband compression are limited by distortion of speech cues associated with

the short-term spectrum.2,3 To overcome this problem we are studying 'Principal Component

Compression,' a means of inter-band control of compressor action that seems capable of

achieving significant reductions in level variation with minimal distortion of the short-term

spectrum.4

A perceptual study to evaluate the ability of principal component compression to compensate

for reduced dynamic range in sensorineural hearing impairments is currently in progress. Two

types of principal component compression are being examined: compression of the lowest-order

component (PCA), which corresponds roughly to compression of the overall level, and

compression of the two lowest-order components (PCB), which corresponds roughly to

compression of both overall level and spectral slope. For comparison purposes, wideband

compression (WC), independent multiband compression (MBC), and linear amplification (LA) are

also being tested. Four subjects with sensorineural hearing impairments are being tested with

consonant-vowel-consonant (CVC) nonsense syllables and phonetically balanced (Harvard)

sentences at the subject's most comfortable listening level (MCL) and at MCL - 10 dB. Collection

of the CVC intelligibility data has been completed; collection of the sentence intelligibility data is

in progress. Preliminary analysis of the CVC data (in terms of percent-correct phoneme scores)

indicates that at MCL all systems are roughly equivalent for each of the four subjects, but that at

MCL-10 dB the WC and PCA systems are superior (by 10-15 percentage points) for three of the

four subjects.

A study has been completed on infinite peak clipping as a means of amplitude compression.5

This extreme form of range reduction was evaluated with respect to: 1) the actual amount of

compression achieved when clipping is preceded or followed by filtering and 2) spectral


distortion. It was found that the widths of level distributions of clipped and post-filtered speech

were relatively independent of the characteristics of both the pre-filter and the post-filter.

Measured ranges between the 10% and 90% cumulative levels were about 10-15 dB as compared

to the input ranges of 30-40 dB.

The clipped spectra of unvoiced speech sounds can be predicted analytically. The clipped

spectra of voiced sounds cannot be easily predicted and so several cases were examined

empirically. In general, despite the radical distortion of the input waveform, only moderate

spectral distortions were found.

Further evaluation of infinite peak clipping as a means of

amplitude compression will involve perceptual tests employing impaired listeners.
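The measurement described above can be sketched in a few lines, assuming NumPy is available. The sketch clips a signal to its sign, applies a post-filter, and reports the spread between the 10% and 90% points of the short-term level distribution. The test signal (noise with a stepped envelope), the moving-average post-filter, and all names are assumptions chosen for illustration; they are not the speech materials or filters used in the study.

```python
import numpy as np


def infinite_peak_clip(x):
    """Keep only the polarity of each sample (+1 or -1)."""
    return np.where(np.asarray(x, dtype=float) >= 0.0, 1.0, -1.0)


def level_range_db(x, frame_len, lo=10, hi=90):
    """Spread (dB) between two percentiles of the short-term RMS level."""
    x = np.asarray(x, dtype=float)
    n_frames = len(x) // frame_len
    frames = x[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    levels = 20.0 * np.log10(np.maximum(rms, 1e-12))
    return float(np.percentile(levels, hi) - np.percentile(levels, lo))


def moving_average(x, width):
    """Crude lowpass post-filter (a stand-in, not the study's filter)."""
    return np.convolve(x, np.ones(width) / width, mode="same")


# Hypothetical input: white noise whose level steps randomly over a
# 40 dB span, one step per 160-sample frame.
rng = np.random.default_rng(0)
frame_len, n_frames = 160, 100
envelope_db = rng.uniform(-40.0, 0.0, n_frames).repeat(frame_len)
signal = 10.0 ** (envelope_db / 20.0) * rng.standard_normal(frame_len * n_frames)

clipped = infinite_peak_clip(signal)
post_filtered = moving_average(clipped, 4)

input_range = level_range_db(signal, frame_len)
clipped_range = level_range_db(clipped, frame_len)
output_range = level_range_db(post_filtered, frame_len)
```

For this spectrally flat test signal the clipped waveform carries no level variation at all, so the residual range after post-filtering is small. The 10-15 dB ranges reported above arise with speech, whose changing spectrum interacts with the filters, and this sketch does not attempt to reproduce that.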

iii) Frequency-lowering is a form of signal processing intended to make high-frequency speech

cues available to persons with high-frequency hearing loss. We have been investigating the

intelligibility of artificially coded speech whose spectrum is confined to frequencies below 500 Hz.

A study of the intelligibility of coded consonants 6 is now being extended to include vowels and

diphthongs. Vowels were generated by varying the spectral shape of a complex of ten pure tones

spaced at multiples of 50 Hz in the region 50-500 Hz. The relative amplitudes of the components

for each vowel were determined from natural utterances by measuring the amplitude of the

spectra at each multiple of 500 Hz between 500 and 5000 Hz and then imposing this amplitude on

a pure tone ten times lower in frequency than the original. Identification experiments are being

conducted to compare the intelligibility of the coded vowels to that of natural utterances lowpass

filtered to 500 Hz. Preliminary results indicate that the intelligibility of the coded vowels (90-95%

correct) is somewhat higher than that observed under lowpass filtering of natural speech with one

token of each vowel.
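The vowel coding described above can be sketched as follows, assuming NumPy is available. Ten amplitudes measured at multiples of 500 Hz are imposed on ten pure tones at one tenth of those frequencies. The function name, sample rate, duration, zero starting phases, and example amplitudes are all assumptions made for illustration.

```python
import numpy as np


def coded_vowel(harmonic_amplitudes, duration_s=0.3, sample_rate=8000):
    """Synthesize a frequency-lowered vowel from ten spectral amplitudes.

    harmonic_amplitudes: amplitudes measured at 500, 1000, ..., 5000 Hz.
    Returns the waveform and the ten coded tone frequencies in Hz.
    """
    amps = np.asarray(harmonic_amplitudes, dtype=float)
    if amps.shape != (10,):
        raise ValueError("expected exactly ten amplitudes")
    source_freqs = 500.0 * np.arange(1, 11)   # 500 ... 5000 Hz
    coded_freqs = source_freqs / 10.0         # 50 ... 500 Hz
    t = np.arange(int(round(duration_s * sample_rate))) / sample_rate
    # Each row is one tone; all tones start in sine phase (an assumption).
    tones = amps[:, None] * np.sin(2.0 * np.pi * coded_freqs[:, None] * t[None, :])
    return tones.sum(axis=0), coded_freqs


# Hypothetical amplitudes, not measured from any utterance.
example_amps = [1.0, 0.8, 0.5, 0.3, 0.2, 0.4, 0.6, 0.3, 0.1, 0.05]
wave, freqs = coded_vowel(example_amps, duration_s=1.0)
```

Because every coded component lies at or below 500 Hz, the result occupies the same band as natural speech lowpass filtered to 500 Hz, which is the comparison condition in the identification experiments.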

In conjunction with this work, we have begun to investigate the effect of the type-token ratio on

the perception of speech sounds.7 Performance on sets of lowpass-filtered CV syllables was

studied as a function of the number of tokens per consonant in each syllable set. Twenty-four

consonants were represented in all the syllable sets and all tokens were produced by one female

talker.

The number of tokens per consonant in a given experiment ranged from one (one

utterance of each syllable in C/a/ context) to three (three utterances per syllable in C/a/ context)

to nine (three utterances per syllable in each of three contexts: C/a/, C/i/, C/u/). As the

number of tokens increased from one to three to nine, identification scores decreased from 79 to

64 to 57 percent correct. Thus, the size of the effect appears to be substantial for small numbers

of tokens. Further work will be directed towards determining how this effect varies with the

particular speaker and utterances employed and towards modeling the observed effects.

iv) The ultimate goal of our research on clear speech is the development of improved

signal-processing schemes for hearing aids.

Picheny8 (1981) and Chen9 (1980) have

demonstrated the ability of naturally produced clear speech to increase intelligibility for both

normal and hearing-impaired listeners. The continuing research efforts are directed at more


detailed descriptions of the differences between clear and conversational speech and an

understanding of the perceptual implications of these differences.

Since one of the major differences concerns temporal factors, durations of phonemes and

pauses are being measured. Conversational phoneme duration, clear phoneme duration, and

percentage increase (PI) have been measured for one of three speakers. Several one-way

ANOVAs were performed with the following factors: (i) syllable stress, (ii) prepausal lengthening

(or word position in a sentence), and (iii) the surrounding phoneme environment.

The values of PI determined for various phonemes can be summarized as follows:

{aa,ae,ao,aw,ey,oy,eh,b,d,g,dh} - PI<40%;

{er,iy,yu,ah,ih,uh,y,p,t,k,m,n,ng,s,v,z,ch,jh} - 40%<PI<80%; and

{ay,ow,uw,l,r,w,f,th,sh,h} - PI>80%.

Results from the ANOVA tests indicate that,

in general, (i) stress is not a statistically significant factor and (ii) prepausal lengthening is

statistically significant for many groups of phonemes. Phoneme position at a phrase boundary

has a significant effect on duration in both speaking modes and an effect on PI. The prepausal

effect accounts for roughly 30% of the total variance in the duration measures. The significance

of the prepausal effect implies not only that grammatical structure is important in the production

of both conversational and clear speech, but that its effect is different in clear and conversational

speech.

References

1. R.L. Dugal, L.D. Braida, and N.I. Durlach, "Implications of Previous Research for the Selection

of Frequency-Gain Characteristics," in G.A. Studebaker and I. Hochberg (Eds.),

Acoustical Factors Affecting Hearing Aid Performance and Measurement (University Park

Press 1980).

2. L.D. Braida, N.I. Durlach, S.V. DeGennaro, P.M. Peterson, and D.K. Bustamante, "Review of

Recent Research on Multiband Amplitude Compression for the Hearing Impaired," in G.A.

Studebaker and F.H. Bess (Eds.), The Vanderbilt Hearing-Aid Report, Monographs in

Contemporary Audiology, 1982.

3. S.V. DeGennaro, L.D. Braida, and N.I. Durlach, "Multichannel Syllabic Compression for

Severely Impaired Listeners," Proc. 2nd Int. Conf. on Rehab. Engr., Ottawa, Canada,

1984.

4. D.K. Bustamante and L.D. Braida, "Principal Component Amplitude Compression," J. Acoust.

Soc. Am., Suppl. 1, 74, ZZ3, S104 (1983).

5. K.A. Silletto, "Evaluation of Infinite Peak Clipping as a Means of Amplitude Compression," M.S.

Thesis, Department of Electrical Engineering and Computer Science, M.I.T., 1984.

6. K.K. Foss, "Identification Experiment on Low-Frequency Artificial Codes as Representations of

Speech," S.B. Thesis, Department of Electrical Engineering and Computer Science,

M.I.T., 1983.

7. S.R. Dubois, "A Study of the Intelligibility of Low-Pass Filtered Speech as a Function of the

Syllable Set Type-Token Ratio," Course 6.962 Project Paper, Department of Electrical

Engineering and Computer Science, M.I.T., 1984.

8. M.A. Picheny, "Speaking Clearly for the Hard of Hearing," Ph.D. Thesis, Department of

Electrical Engineering and Computer Science, M.I.T., June 1981.


9. F.R. Chen, "Acoustic Characteristics and Intelligibility of Clear Conversational Speech at the

Segmental Level," unpublished M.S. Thesis, Department of Electrical Engineering and

Computer Science, M.I.T.

17.6 Multimicrophone Monaural Aids for the Hearing-Impaired

National Institutes of Health (Grants 1 RO 1 NS21322-01 and 5 T32-NS07099-07)

Nathaniel I. Durlach, Darlene Ketten, Michael V. McConnell, Patrick M. Peterson, William

M. Rabinowitz, Robert C. Thayer, Patrick M. Zurek

The goal of this work is the development of systems that utilize multiple spatial samples of the

acoustic environment and form a single-channel output to provide enhanced speech intelligibility

for monaural listening by the hearing-impaired. There are two basic problem areas in this project.

The first, to which most of our efforts thus far have been addressed, is the achievement of high

directionality with a cosmetically-acceptable body-worn array of microphones. The second,

which assumes that such directional channels can be achieved, concerns coding the channels

(prior to summing for monaural presentation) so that the listener can attend to any single channel

while simultaneously monitoring the other channels.

Work on the directionality problem is proceeding along three lines. In the first, we consider

arrays of microphones with fixed linear weights to provide maximal frontal directivity (when

placed on the head). Questions of interest include the complexity of the effects of diffraction and

scattering from the body and head, reliability of acoustic response with repeated placement of the

device, and performance (intelligibility) in non-anechoic environments. In addition to beginning

work on the design of devices, we are adapting the Speech Transmission Index 1 to our

application in order to predict the effects of background noise, room reverberation, and

directionality on intelligibility.2
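As a rough illustration of what a fixed-weight array does, the sketch below computes the far-field, free-field directional response of a line array. It uses simple delay-and-sum weights steered toward the front, which are not maximal-directivity weights, and it ignores the diffraction and scattering from the head and body noted above. The microphone spacing, frequency, speed of sound, and all names are assumptions; NumPy is assumed to be available.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at room temperature


def wavenumber(freq_hz):
    return 2.0 * np.pi * freq_hz / SPEED_OF_SOUND


def delay_and_sum_weights(positions_m, freq_hz, look_angle_rad=0.0):
    """Fixed complex weights that align a plane wave from the look angle."""
    x = np.asarray(positions_m, dtype=float)
    phase = wavenumber(freq_hz) * x * np.cos(look_angle_rad)
    return np.exp(-1j * phase) / len(x)


def array_response(weights, positions_m, freq_hz, angles_rad):
    """Complex response to plane waves arriving from each angle.

    Angles are measured from the array axis; positions lie along that axis.
    """
    w = np.asarray(weights, dtype=complex)
    x = np.asarray(positions_m, dtype=float)
    phase = wavenumber(freq_hz) * np.outer(np.cos(angles_rad), x)
    return np.exp(1j * phase) @ w


# Hypothetical geometry: four microphones spaced 5 cm apart, at 2 kHz.
positions = np.arange(4) * 0.05
angles = np.linspace(0.0, np.pi, 181)
weights = delay_and_sum_weights(positions, 2000.0)
magnitude = np.abs(array_response(weights, positions, 2000.0, angles))
gain_db = 20.0 * np.log10(np.maximum(magnitude, 1e-12))
```

The response is 0 dB in the look direction and no greater elsewhere. How much lower it is off-axis depends on the geometry and frequency, and a worn array would also be shaped by the head and body.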

The second class of processing schemes involves adaptive linear arrays, that is, linear

processing of the microphone signals with complex weightings that depend on estimates of the

acoustic environment.

Questions of special interest here concern the interactions of the

estimation procedure with the complexity of the environment and the rate of convergence of the

adaptive algorithm relative to the rate of change of the acoustic environment. Initial work has

included 1) development of a computerized procedure for measuring intelligibility thresholds and

2) an extension of the image method for simulating a room's acoustic response3 to the case of

multiple microphones.4

The third class of schemes uses nonlinear processing.

These schemes are motivated

principally by an understanding of the normal binaural processing of signals that leads to

enhanced speech intelligibility.5 Thus far we have attempted to replicate a processing scheme for


reproducing the "cocktail-party effect".6 Our results, when we use the same method of

evaluation, are consistent with their results: interference that is spatially separate from the target

is suppressed. However, on direct tests of target intelligibility, the processing shows no

improvement. Further work now being performed with this basic scheme includes the

development of a multiband system with independent processing in each band.

References

1. H.J.M. Steeneken and T. Houtgast, "A Physical Method for Measuring Speech-Transmission

Quality," J. Acoust. Soc. Am. 67, 318-326 (1980).

2. R.C. Thayer, "The Modulation Transfer Function in Room Acoustics," S.B. Thesis, Department

of Electrical Engineering and Computer Science, M.I.T., 1985.

3. J.B. Allen and D.A. Berkley, "Image Method for Efficiently Simulating Small-Room Acoustics,"

J. Acoust. Soc. Am. 65, 943-950 (1979).

4. P.M. Peterson, "Simulation of the Impulse Response Between a Single Source and Multiple,

Closely-Spaced Receivers in a Reverberant Room," J. Acoust. Soc. Am. 76, S50(A)

(1984).

5. P.M. Zurek, "A Predictive Model for Binaural Advantages and Directional Effects in Speech

Intelligibility," J. Acoust. Soc. Am., submitted for publication.

6. J.F. Kaiser and E.E. David, "Reproducing the Cocktail Party Effect," J. Acoust. Soc. Am. 32,

918(A) (1960).

17.7 Tactile Perception of Speech

National Institutes of Health (Grant 1 RO1 NS 14092-06)

National Science Foundation (Grant BNS77-21751)

Louis D. Braida, Lorraine A. Delhorne, Maralene Downs, Nathaniel I. Durlach, Daniel F. Leotta,

Joseph Pemberton, William M. Rabinowitz, Charlotte M. Reed, Scott Thomson

The ultimate goal of this research program is to develop tactile aids for the deaf and deaf-blind

that will enable the tactile sense to serve as a substitute for hearing.

Among the various

components of our research in this area are (i) study of tactile communication methods employed

by the deaf-blind, (ii) development of an augmented Tadoma system, and (iii) development of a

synthetic Tadoma system.

(i) The primary tactile communication methods employed by the deaf-blind are Tadoma (in

which speech is perceived by placing a hand on the face of the talker and monitoring the

mechanical actions associated with speech production), tactile fingerspelling, and tactile signing.

Research on Tadoma 1 has demonstrated that continuous speech can be transmitted through the

tactile sense at speeds and with error rates that are satisfactory for everyday communication (thus

producing an empirical "existence theorem" that communication through the tactile sense is

possible). Tactile signing and tactile fingerspelling differ from Tadoma in that (a) they are much

more widely used than Tadoma, (b) they require special knowledge on the part of the "talker",

and (c) they are tactile adaptations of communication methods devised for the visual sense.


Current research is concerned with determining how each of these two methods compares to

Tadoma with respect to communication rate and error structure.

Results on tactile fingerspelling indicate that (a) performance is highly consistent across the five

subjects tested; (b) although production rates are generally slow compared to the speaking rates

used in conjunction with Tadoma, the two methods produce comparable results for sentence

reception over a small range of comparable rates and for estimates of rates at which continuous

discourse can be tracked (roughly 30 to 40 words per minute); and (c) communication is limited

by the maximum rate at which fingerspelling can be produced. The results of an auxiliary study

concerned with visual reception of fingerspelled words 2 suggest that intelligibility remains high

for rates up to 2-3 times the normal rate of production (achieved through variable-speed

playback of videotaped stimuli).

Preliminary results from our study of tactile signing show a

higher degree of performance variability for American Sign Language (ASL) subjects than for

Pidgin Signed English (PSE) subjects.

Results available for one ASL subject indicate highly

accurate tactile reception of signed sentences at transmission rates roughly equivalent to those

achieved in the fingerspelling study.

(ii) Direct observation of Tadoma, plus analysis of Tadoma errors, suggests that performance

could be substantially improved by augmenting Tadoma with supplementary information on

tongue position. A commercially available electro-palatograph (Rion DP-01) is being used to

sense the contact pattern between the tongue and palate and the transducer portion of the

Optacon is being used to display this tongue-position information. (This system is referred to as

the "Palatacon".) The palatograph contains an artificial palate (specified for a given individual)

with 63 contact detectors which drive, on a one-to-one basis, a visual display of 63 LEDs

arranged in the shape of a palate. A programmable interface (using a DEC LSI-11) has been

developed to allow for tabular specification of vibrator action on the Optacon's 24x6 array

corresponding to the 63 palatographic contact points. The mapping currently in use preserves the

general shape of the palate on the vibratory array. This vibratory array is applied to a finger on the

hand not being used for Tadoma.
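The tabular specification can be pictured as a lookup from each of the 63 palatographic contacts to one or more vibrators on the 24x6 array. The sketch below is a hypothetical illustration of that idea only: the placeholder table simply fills the array row by row and does not reproduce the palate-shaped mapping actually in use, and all names are invented for the example.

```python
N_CONTACTS = 63
ROWS, COLS = 24, 6


def make_placeholder_table():
    """Hypothetical table: contact index -> list of (row, col) vibrators.

    Contacts are laid out row by row; a real table would be chosen to
    preserve the shape of the palate.
    """
    return {k: [divmod(k, COLS)] for k in range(N_CONTACTS)}


def vibrator_frame(contacts, table):
    """Turn 63 on/off contact states into a 24x6 grid of 0/1 drive values."""
    if len(contacts) != N_CONTACTS:
        raise ValueError("expected 63 contact states")
    frame = [[0] * COLS for _ in range(ROWS)]
    for k, touching in enumerate(contacts):
        if touching:
            for row, col in table[k]:
                frame[row][col] = 1
    return frame


# Example: only contact 7 is touched.
table = make_placeholder_table()
contacts = [k == 7 for k in range(N_CONTACTS)]
frame = vibrator_frame(contacts, table)
```

Keeping the mapping in a table rather than in code means that a different layout, including one that drives several vibrators per contact, can be tried by editing the table alone.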

Preliminary experiments have been performed to measure the ability of two laboratory subjects

(with simulated deafness and blindness) using augmented Tadoma to discriminate pairs of stimuli

(9 vowel pairs and 9 consonant pairs) that are difficult to discriminate using conventional

Tadoma. 3 For consonants, performance on the Palatacon alone was generally superior to that

obtained through Tadoma alone and equivalent to that obtained on augmented Tadoma. For

vowels, this finding was reversed in that performance through Tadoma alone was superior to that

through the Palatacon alone and equivalent to that obtained on augmented Tadoma. Thus, the

tactile display of tongue contact with the palate appears to be an effective means of improving

consonant discrimination, but not vowel discrimination. Future research will include investigation

of alterations in the Palatacon system to improve performance on vowels and investigation of


other methods of deriving information on tongue position for vowels.

(iii) In order to explore a variety of research questions that have arisen in the study of Tadoma,

we are developing a synthetic Tadoma system. 4 This system is composed of an artificial

mechanical face that is driven by computer-controlled signals derived from sensors placed on a

talker's face. The facial actions represented (based on our understanding of natural Tadoma) are

laryngeal vibration, oral airflow, and jaw and lip movement. Laryngeal vibration was monitored

with a small microphone situated on the throat near the larynx; oral airflow was sensed with an

anemometer positioned in front of the mouth; and jaw and lip movements were measured in the

midsagittal plane using cantilevered strain gauges. The complete array of sensors did not

appear to markedly affect speech production. Simultaneous multichannel recordings of these

signals have been made 5 from two male and two female talkers for a variety of speech materials

representative of those used in our studies of natural Tadoma.

These recordings have been

digitized (12-bit ADC), edited into separate files for each utterance, and processed to remove known

transducer artifacts and to make them appropriate for use with the artificial-face display.5,6

In the construction of the artificial face, an anatomical-model skull has been adapted to

incorporate the articulatory actions specified above. For jaw movement, a rotational joint was

constructed near the condylar process and a drive linkage was connected to a DC servomotor.

For lip movements, assemblies were mounted behind the lower and upper teeth with actuators

that project (following removal of portions of the central incisors) to the locations of the lips in the

midsagittal plane; these actuators attach to the lips (rubber tubing) and are driven via Bowden

cables and DC servomotors. Each motor operates within a closed-loop, position-control system

with feedback of shaft velocity, via an analog tachometer, and shaft position, via a digital optical

encoder.

Laryngeal vibration is presented with a bone-conduction vibrator placed in the

laryngeal region. Oral airflow is approximated by four flow levels using a pressurized air supply

and two high-speed, on/off solenoid valves (the valves output to tubes which deliver jets that exit

the center of the mouth opening).
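One way two on/off valves can yield four flow levels is sketched below. It assumes, purely for illustration, that one valve passes about twice the flow of the other, so that the four on/off combinations give evenly spaced levels. The text does not state the valve sizing, and the function and parameter names are hypothetical.

```python
def valve_states(flow, max_flow):
    """Quantize a measured airflow to one of four levels.

    Returns (small_valve_open, large_valve_open), assuming the large
    valve passes twice the flow of the small one.
    """
    if max_flow <= 0:
        raise ValueError("max_flow must be positive")
    limited = min(max(flow, 0.0), max_flow)
    level = min(3, int(round(3.0 * limited / max_flow)))  # 0, 1, 2, or 3
    return bool(level & 1), bool(level & 2)


# Example: a flow at about one third of full scale opens only the small valve.
small_open, large_open = valve_states(0.34, 1.0)
```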

A preliminary evaluation of the synthetic Tadoma system has now been completed. 7 Roving

ABX discrimination tests were performed among sets of 15 vowels in CVC contexts and among 24

consonants in CV contexts. Two naive adult subjects, with simulated deafness and blindness,

have been tested. Average results for vowel discrimination (68%) were equal to those obtained in

a comparable study of natural Tadoma. 8 Initial results for consonant discrimination were lower for

the synthetic system (68 vs. 78%) due primarily to poor performance on voicing contrasts

(performance on these contrasts was roughly at the chance level of 50%). After modifying the way

in which laryngeal vibration was represented on the face, performance on voicing contrasts

improved to 80% and the overall consonant score to 74%.

While these results with normal

subjects are encouraging, further tests of speech recognition with deaf-blind Tadoma users are

required to assess the adequacy of the synthetic Tadoma system as a simulation of natural


Tadoma. The achievement of an adequate simulation will necessarily confirm our understanding

of Tadoma; more importantly, it will provide a flexible research tool to study how performance is

affected by various transformations that cannot be achieved with natural Tadoma.

References

1. C.M. Reed, W.M. Rabinowitz, N.I. Durlach, L.D. Braida, S. Conway-Fithian, and M.C. Schultz,

"Research on the Tadoma Method of Speech Communication," J. Acoust. Soc. Am. 77,

247-257 (1985).

2. S. Thomson, "Fingerspelled Stimuli: Accuracy of Reception vs. Transmission Rate," S.B.

Thesis, Department of Electrical Engineering and Computer Science, M.I.T., 1984.

3. J. Pemberton, "Evaluation of Augmented Tadoma as a Method of Communication," S.B.

Thesis, Department of Electrical Engineering and Computer Science, M.I.T., 1984.

5. G.M. Skarda, "An Analysis of Facial Action Signals During Speech Production Appropriate for a

Synthetic Tadoma System," S.M. Thesis, Department of Electrical Engineering and

Computer Science, M.I.T., 1983.

6. M.M. Downs, "Processing Facial Action Signals for Presentation to an Artificial Face," S.B.

Thesis, Department of Electrical Engineering and Computer Science, M.I.T., 1984.

7. D.F. Leotta, "Synthetic Tadoma: Tactile Speech Reception from a Computer-Controlled

Artificial Face," S.M. Thesis, Department of Electrical Engineering and Computer

Science, M.I.T., 1985.

8. C.M. Reed, S.I. Rubin, L.D. Braida, and N.I. Durlach, "Analytic Study of the Tadoma Method:

Discrimination Ability of Untrained Observers," J. Speech Hearing Res. 21, 625-637

(1978).


C. Transduction Mechanisms in Hair Cell Organs

Academic and Research Staff

Prof. L.S. Frishkopf, Prof. T.F. Weiss, Dr. D.J. DeRosier,22 Dr. C.M. Oman

The overall objective of this project is to study the sequence of steps by which mechanical

stimuli excite receptor organs in the phylogenetically related auditory, vestibular, and lateral-line

organs. The receptor cells in these organs are ciliated hair cells. Specific goals include the

characterization of the motion of the structures involved, particularly the hair cell stereocilia;

study of the nature and origin of the electrical responses to mechanical stimuli in hair cells; and

investigation of the role of these responses in synaptic and neural excitation.

17.8 Relation of Anatomical and Neural-Response Characteristics in the Alligator Lizard Cochlea

National Institutes of Health (Grant 5 RO 1 NS11080)

Lawrence S. Frishkopf

Microscopic observation of the mechanical response characteristics of the auditory papilla

(cochlea) of the alligator lizard, Gerrhonotus multicarinatus, to acoustic stimulation has revealed

that in the basal region of the organ, length-dependent resonant motion of ciliary bundles can

account for frequency selectivity and tonotopic organization of basally directed fibers in the

auditory nerve.1-3 In the apical region of the organ, studies of nerve fiber responses indicate that

the range of characteristic frequencies is lower and that tuning is sharper than for basal fibers;

that, apically, tonotopic organization appears to be absent, as judged by neural studies; and that

two-tone suppression, absent in the basal region, is found among apical fibers. 4

Morphologically, the two regions differ in a number of significant ways: 5 in the apical but not in

the basal region hair cells are covered by a tectorial membrane; ciliary bundles are shorter

apically (5-10 μm) than basally (12-31 μm); apical hair bundles all have the same morphological

orientation, whereas basal bundles do not. The innervation of hair cells in the two regions also

differs: apical hair cells alone receive efferent synapses; and whereas some apical fibers send

branches along the length of the papilla, basal fibers all terminate locally where they enter the

papilla.

A program of experimental research has been proposed that will attempt to test possible

relationships among some of these physiological and anatomical characteristics.

Particular

emphasis will be placed on understanding the basis of frequency selectivity and two-tone

interactions observed in apical fibers. Initial experiments will explore the mechanical response of

the tectorial membrane and its role in determining ciliary motion.

22 Professor, Department of Biology, Brandeis University

References

1. L.S. Frishkopf and D.J. DeRosier, "Evidence of Length-Dependent Mechanical Tuning of Hair

Cell Stereociliary Bundles in the Alligator Lizard Cochlea: Relation to Frequency

Analysis," M.I.T. Research Laboratory of Electronics Progress Report No. 125, 190-192

(1983).

2. L.S. Frishkopf and D.J. DeRosier, "Mechanical Tuning of Free-standing Stereociliary Bundles

and Frequency Analysis in the Alligator Lizard Cochlea," Hearing Res. 12, 393-404

(1983).

3. T. Holton and A.J. Hudspeth, "A Micromechanical Contribution to Cochlear Tuning and

Tonotopic Organization," Science 222, 508-510 (1983).

4. T.F. Weiss, W.T. Peake, A. Ling, Jr., and T. Holton, "Which Structures Determine Frequency

Selectivity and Tonotopic Organization of Vertebrate Cochlear Nerve Fibers? Evidence

from the Alligator Lizard," in R.F. Naunton and C. Fernandez (Eds.), Evoked Electrical

Activity in the Auditory Nervous System, (Academic Press, New York 1978), pp. 91-112.

5. M.J. Mulroy, "Cochlear Anatomy of the Alligator Lizard," Brain Behav. Evol. 10, 69-87 (1974).

6. M.J. Mulroy and T.G. Oblak, "Cochlear Nerve of the Alligator Lizard," J. Comp. Neurol. 233,

463-472 (1985).
