Tactical Strategies to Search for

advertisement

Cow-Path Games: Tactical Strategies to Search for

Scarce Resources

by

Kevin Spieser

Submitted to the Department of Aeronautics and Astronautics

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

October 2014 [PeoV

2\Z]

@ Massachusetts Institute of Technology 2014. All rights reserved.

Lf

Signature redacted

Author....................................................

Department of Aeronautics and Astronautics

October 7, 2014

Signature redacted

Certified by............

Emilio Frazzoli

Professor of Aeronautics and Astronautics

Thesis Supervisor

Signature redacted

.....................

. . .

.

Certified by...............

Iatrick Jaillet

Professor of Electrical Engineering

Corpmittee Member

Signature redacted

Certified by......

Hamsa Balakrishnan

Associate Professor of Aeronautics and Astronautics

Committee Member

FN

Accepted by .....

.....................

Signature redacted ........

Paulo C. Lozano

Associate Professor of Aeronautics and Astronautics

Chair, Graduate Program Committee

2

Cow-Path Games: Tactical Strategies to Search for Scarce

Resources

by

Kevin Spieser

Submitted to the Department of Aeronautics and Astronautics

on October 7, 2014, in partial fulfillment of the

requirements for the degree of

Doctor of Philosophy

Abstract

This thesis investigates search scenarios in which multiple mobile, self-interested

agents, cows in our case, compete to capture targets.

The problems considered in this thesis address search strategies that reflect (i)

the need to efficiently search for targets given a prior on their location, and (ii)

an awareness that the environment in which searching takes place contains other

self-interested agents. Surprisingly, problems that feature these elements are largely

under-represented in the literature. Granted, the scenarios of interest inherit the

challenges and complexities of search theory and game theory alike. Undeterred, this

thesis makes a contribution by considering competitive search problems that feature

a modest number of agents and take place in simple environments. These restrictions

permit an in-depth analysis of the decision-making involved, while preserving interesting options for strategic play. In studying these problems, we report a number of

fundamental competitive search game results and, in so doing, begin to populate a

toolbox of techniques and results useful for tackling more scenarios.

The thesis begins by introducing a collection of problems that fit within the competitive search game framework. We use the example of taxi systems, in which drivers

compete to find passengers and garner fares, as a motivational example throughout.

Owing to connections with a well-known problem, called the Cow-Path Problem, the

agents of interest, which could represent taxis or robots depending on the scenario,

will be referred to as cows. To begin, we first consider a one-sided search problem in

which a hungry cow, left to her own devices, tries to efficiently find a patch of clover

located on a ring. Subsequently, we consider a game in which two cows, guided only

by limited prior information, compete to capture a target. We begin by considering a

version in which each cow can turn at most once and show this game admits an equilibrium. A dynamic-programming-based approach is then used to extend the result

to games featuring at most a finite number of turns. Subsequent chapters consider

games that add one or more elements to this basic construct. We consider games

3

where one cow has additional information on the target's location, and games where

targets arrive dynamically. For a number of these variants, we characterize equilibrium search strategies. In settings where this proves overly difficult, we characterize

search strategies that provide performance within a known factor of the utility that

would be achieved in an equilibrium.

The thesis closes by highlighting the key ideas discussed and outlining directions

of future research.

Thesis Supervisor: Emilio Frazzoli

Title: Professor of Aeronautics and Astronautics

Committee Member: Patrick Jaillet

Title: Professor of Electrical Engineering

Committee Member: Hamsa Balakrishnan

Title: Associate Professor of Aeronautics and Astronautics

4

Acknowledgments

This thesis has been a long time in the making. As with many lengthy endeavors, the

road has not always been smooth. However, it is also true that, looking back, I am

grateful for the experience, the knowledge gained, the doors that have been opened,

and the many acquaintances and friends made along the way. Of course, I am also

very appreciative of the frequent encouragement and timely distractions provided by

those that have seen me through this degree.

My advisor, Emilio Frazzoli, afforded me tremendous freedom to pursue a widerange of research topics throughout my studies. This flexibility to think freely across

a breadth of problems not only kept my work engaging, but also made me a more

independent, well-rounded, and all together better researcher. I must also point out

the opportunities I had to visit NASA Ames in California, SMART in Singapore,

as well as the various venues at which I have been fortunate enough to present my

research.

He helped make all of these ventures possible.

Finally, as I got off to

somewhat of a rocky start at MIT, I owe him a special thanks for sticking with me.

The remaining members of my thesis committee, Professors Patrick Jaillet and

Hamsa Balakrishnan, have provided thoughtful commentary and a fresh perspective

on my research during our meetings and the writing of this document. I am grateful

for their time and feedback.

Lastly, I have seen many fellow lab mates come and go in my time at MIT. I

have taken classes and collaborated on research projects with a number of these

individuals. Their assistance with solving homework problems, studying for exams,

marking exams, writing papers, re-writing papers, and brainstorming ideas is much

appreciated. These interactions have been one of the defining features of my graduate

school experience. I hope that, on the whole, they have found my contributions to

these efforts as insightful and formative as I have found theirs. Thank you!

5

Sincerely,

Kevin Spieser

6

This thesis is dedicated to Briggs and my parents.

No cows were harmed in the writing of this thesis.

7

8

Contents

1

2

3

1.1

M otivation . . . . . . . . . . . . . . . . . . . .... . . . . . . . . . . .

22

1.2

Contributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

27

1.3

O rganization

. . . . . . . . . . . . . .... . . . . . . . . . . . . . . .

28

31

Background Material

2.1

Search Theory: An Introduction . . . . . . . . . . . . . . . . . . . . .

32

2.2

Probabilistic Search . . . . . . . . . . . . . . . . . . . . . . . . . . . .

34

2.2.1

Search with an imperfect sensor . . . . . . . . . . . . . . . . .

35

2.2.2

Search with a perfect sensor . . . . . . . . . . . . . . . . . . .

37

2.3

Pursuit-evasion games

. . . . . . . . . . . . . . . . . . . . . . . . . .

39

2.4

Persistent planning problems . . . . . . . . . . . . . . . . . . . . . . .

42

45

Mathematical Preliminaries

3.1

4

21

Introduction

G am e theory

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

45

51

The Cow-Path Ring Problem

4.1

Introducing The Cow-Path Ring Problem . . . . . . . . . . . . . . . .

51

4.2

CPRP Notation and Terminology . . . . . . . . . . . . . . . . . . . .

53

9

The number of turns in the CPRP

. . . . . . . . . . . . . . . . . .

58

4.4

An iterative algorithm for s* . . . . . . . . . . . . . . . . . . . . . .

59

4.5

A direct algorithm for finding s* . . . . . . . . . . . . . . . . . . . .

64

4.6

Summary of the CPRP . . . . . . . . . . . . . . . . . . . . . . . . .

64

.

.

.

.

4.3

5 The Cow-Path Ring Game

67

Adding a second cow to the ring . . . . . .

. . . . . . . . . . . . . .

67

5.2

A model for informed cows . . . . . . . . .

. . . . . . . . . . . . . .

68

5.3

Defining the Cow-Path Ring Game . . . .

. . . . . . . . . . . . . .

70

5.4

A remark about Cow-Path games on the line . . . . . . . . . . . . . .

71

5.5

CPRG-specific notation and terminology .

. . . . . . . . . . . . . .

72

5.6

Search strategies in the CPRG . . . . . . .

. . . . . . . . . . . . . .

74

5.7

The one-turn, two-cow CPRG . . . . . . .

. . . . . . . . . . . . . .

74

5.8

1T-CPRG: computational considerations .

. . . . . . . . . . . . . .

83

5.9

The 1T-CPRG for different cow speeds . .

. . . . . . . . . . . . . .

84

5.10 Finite-turn CPRGs . . . . . . . . . . . . .

. . . . . . . . . . . . . .

84

5.11 Summary of the CPRG . . . . . . . . . . .

. . . . . . . . . . . . . .

87

Games with Asymmetric Information: Life as a Cow Gets Harder

91

.

.

.

.

.

.

.

.

.

.

5.1

Searching with asymmetric information:

92

6.2

Supplementary notation and terminology .

93

6.3

Information models for situational awareness

93

6.4

Behavioral models for asymmetric games .

95

6.5

A bound on the maximum number of turning points in the CPRG

95

.

a motivating example . . . . . . . . . . . .

.

6.1

.

6

10

7

6.6

CPRGs with asymmetric information . . . . . . . . . . . . . . . . . .

99

6.7

AI-CPRGs with perfect knowledge

. . . . . . . . . . . . . . . . . . .

99

6.8

Socially Optimal Resource Gathering . . . . . . . . . . . . . . . . . .

105

6.9

Conclusions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

Dynamic Cow-Path Games: Search Strategies for a Changing World113

A motivation for dynamic environments . . . . . . . . . . . . . . . . .

7.2

Dynamic Cow-Path Games with target transport requirements . . . . 115

7.3

Greedy search strategies for the DE-CPRG . . . . . . . . . . . . . . .

7.4

Equilibria utilities of cows in the DE-CPRG . . . . . . . . . . . . . . 120

7.5

An aggregate worst-case analysis of greedy

7.6

8

114

7.1

118

searching . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

123

. . . . . . . . . . . . . . . . . . .

134

Conclusions and Future Directions

137

Summary and Future Directions

11

12

List of Figures

1-1

Depiction of real-world scenarios where agents compete, against one

another, to capture targets given limited information on the location

of targets.

(a) Snapshot of taxi operations in Manhattan.

In busy

urban cities, the operation of taxi drivers trying to find passengers can

be represented as a competitive search game. (b) A sunken treasure

ship wrecked along a coral reef. The exploits that result from two rival

recovery boats each trying to find the ship using a crude sonar map

of the area constitutes a competitive search game. (c) A Kittyhawk

P-40 that crash-landed in the Sahara desert during World War II. In

the event rescue and enemy forces had some idea of the aircraft's location, e.g., from (intercepted) radio communications, and had each

party launched a recovery operation, the resulting race to locate the

plane would be a competitive search game. . . . . . . . . . . . . . . .

2-1

26

A partial taxonomy of select sub-disciplines within the field of search

theory. It is worth reinforcing that the families of games represented

are only a relevant sampling of select research areas in the field, and

not an exhaustive listing. The box shaded in blue represents agentvs-agent or competitive search games, the class of problems that will

be the focus of this thesis. The location of CSGs in the tree indicates

these problems share fundamental attributes with probabilistic search

problems and pursuit-evasion search games. The image was inspired

by similar figures reported in [17], [33]. . . . . . . . . . . . . . . . . .

13

34

2-2

Illustration of the key features of the stochastic or average case CowPath Problem. Starting from the origin, the cow explores R in search of

clover W. A hypothetical search plan is shown in gray. In the instance

depicted, the cow makes four turns before finding the target at the

point marked with a red exclamation mark.

4-1

. . . . . . . . . . . . . .

38

A visualization of the Cow-Path Ring Problem. The target density,

f , as a function of radial position q, is shown in blue. In the instance

depicted, the target, 7, is located on the North-West portion of 'Z.

In the search plan shown, the cow (yellow triangle) travels in the ccw

direction toward q1, where, having not found 7, she reverses direction,

and travels in the cw direction toward q2 . Upon reaching q2 , having

still not found T, she again reverses direction and continues searching

in the ccw direction until ultimately finding 7 at the site indicated

with a red exclamation mark.

5-1

. . . . . . . . . . . . . . . . . . . . . .

55

A visualization of the Cow-Path Line Game. The target density, fT,

is shown in blue. The unique equilibrium search strategy of each cow,

s* = (9, 9), is indicated by a directed gray line. Under s*, Ci heads

toward eCi and, just before meeting, reverses direction and visits any

previously unexplored territory. . . . . . . . . . . . . . . . . . . . . .

5-2

72

An instance of the CPRG illustrating the initial positions and initial

headings of cows C1 and C 2 . The trajectories of both cows, right up

to the point of capture, are shown in dark gray. The target density

f achieves a global maximum in [-7r/4, 0]. In the instance shown, 7

is located along the North-West portion of 'Z. The site at which 7 is

found, in this case by C 2 , is indicated with a red exclamation mark. .

14

73

5-3

A diagram showing associations between families of finite-turn CPRGs.

The node labelled with the pair (i, j) denotes the family of games in

which C 1 and C 2 may turn up to i and

j

times, respectively.

The

numbers above and beside the arrows indicate which cow turns to

bring about the indicated transition. The nodes representing base case

games, for which equilibria strategies may be found using the methods

discussed in previous sections, are colored in red. The nodes representing all other games are colored in gray. The arrows indicate how one

family of games reduces to a simpler family of games when a cow turns.

For example, the (2,2)-CPRG becomes an instance of the (1,2)-CPRG

when C 1 turns, and an instance of the (2, 1)-CPRG when C 2 turns. . .

6-1

88

Visualization of the functionality of notation used for describing subregions of 'Z and one point relative to another on 'Z. Due to the circular

topology of 'R, there is flexibility in the notational system. For example, [qi,

q2]c.

and [q2, qi]cc. refer to the same arc of R. Similarly,

(q 3 + x)c. and (q 3 + 27r - x)c, refer to the same point on 'Z. . . . . .

6-2

Initial positions, qi(0); initial headings,

#j(0);

and target priors,

of C 1 and C 2 for an instance of an AI-CPRG. (a)

f7,

94

f7;

shown in blue,

has local maxima along the South-East and North-West regions of 'Z.

(b)

f2,

shown in green, is more evenly distributed and contains three

modest peaks along 'Z. For q E 'Z such that ff(q)

$

f2(q), C1 and C 2

have different valuations for visiting q first. . . . . . . . . . . . . . . .

An instance of an AI-CPRG. The cows (depicted as cars) C 1 and C 2 are

initially diametrically opposed at the top and bottom of 'R, respectively.

C 1 's prior on T, namely f1j, is shown in blue.

Owing to

ff,

motivated to, if possible, be the first cow to explore segments 'Z

C 1 is

1

and

'R 2 . Shown in green, it is assumed that f2(q) = 1, Vq E 'R, such that

any two segments of 'Z having equal length are equally valuable to C 2

.

6-3

100

The points a, b, c, d, e, f and g are points of interest in Example 6.3.

15

.

102

6-4

Visualization of key quantities used in the proof of Theorem 6.5. The

points labelled 1, 2, and 3 in red correspond to the three points visited

by C 1 in 6.14. In the instance shown, d*

6-5

=

. . . . . . . . . . . .

ccw.

104

Illustration of the three ways in which Uso can fall short from the

maximum value of 2. In each figure, f17 and

fj

are shown in blue

and green, respectively. In (a), C 1 and C 2 are initially positioned on

the "wrong" sides of 9, resulting in a shortfall from 2. Were the cows

able to switch positions, the shortfall could be avoided. In (b), overlap

between Su(ff) and Su(f2) creates unavoidable inefficiency. In (c),

the shortfall results from the lack of convexity of

6-6

ff

and

f2.

. . . . .

107

A socially optimal search strategy for the scenario considered in Example 6.3. The socially optimal search strategy is illustrated by the

purple line: C 1 and C 2 rendezvous at b, having travelled there in the cw

and ccw directions, respectively, and proceed to explore [qi (0), q 2 (0)]c,,

in tandem. The segment R1, shown in red, is the portion of the ring

that transitions from being explored by C 2 in a cooperative search to

being visited first by C 1 in a competitive search. . . . . . . . . . . . .

7-1

111

A sample sequence of target capture times associated with the early

stages of a DE-CPRG. In the instance shown, C1 captures targets 71,

T2 , and 7 4 , while C2 captures targets 73 and 75 . If the statistics shown

are representative of steady-state behavior, then the aggregate utilities

of the cows would be U$'g(s) = 0.6 and U2(s) = 0.4, respectively. . . .

7-2

117

An isometric visualization of an instance of the DE-CPRG. The prior

fo

is shown in blue as a function of position along

. Also shown

are the origin and destination points associated with targets 7j,

'J+1,

and 7i+2 . At the instant shown, C 1 and C2 are searching 9R for W,.

According to

#y,

once 7j is discovered and transported from (9 to PDj,

75+j is popped from the queue of targets and appears on 9.

16

. . . . .

118

7-3

A snapshot of a DE-CPRG taken at the start of CPRGj. The origintarget density, fo, and destination-target density, fD, are shown in (a)

and (b), respectively. Targets are significantly more likely to (i) arrive

in E1 rather than 'Z \E 1 and (ii) seek transport to

7-4

0

2

rather than 'Z \8

2.120

Illustration of two scenarios used in the proof of Proposition 7.1. In

(a), C2 discovers a target at

0

, that requires transport to 9 b, a distance

L

away. During transport, C1 has time to optimally preposition herself

at Oc in preparation for the next stage. A finite time later, in (b), the

}

roles reverse, 1, discovers a target at 0 , that also requires transport to

6

7-5

b,

allowing

C2

to optimally position herself at 0, for the next game. . 122

Visual breakdown of a typical interval spanning the time between successive target captures for Ci using si = s-. On average, it takes Ci

time d to return to qV: after delivering 7j. From the perspective of

Ci, in the worst-case, Ci finds a target at time td(Tij)+, which, on

average, is delivered in time da.

7-6

. . . . . . . . . . . . . . . . . . . . .

132

Segments from possible sample runs, from the perspective of Ci, of a

D E-CPRG . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

17

134

18

List of Tables

3.1

Utility payoffs for the simple two-agent search game in Example 3.4.

The possible actions of agent 1 are displayed in the leftmost column.

The possible actions of agent 2 are displayed along the top row. Given

each agent's search strategy, the first and second entry in each cell

represent the utility of agent 1 and agent 2, respectively. For example,

if agent 1 searches using si = a and agent 2 searches using s 2 = b, then

the probability of agent 1 finding the target is 1 and the probability of

agent 2 finding the target is . .....

.................

48

4.1

Summary of general and CPRP-specific notation used in the thesis.

54

5.1

Summary of CPRG-specific Notation . . . . . . . . . . . . . . . . . .

70

19

20

Chapter 1

Introduction

When eating an elephant, take one bite at a time.

Creighton Abrams

This thesis considers the decision-making process of mobile, self-interested search

agents that compete, against one another, to find targets in a spatial environment.

We are quick to point out that the adversarial scenarios of interest are fundamentally distinct from the cooperative formulations that dominate much of multi-agent

search theory. By and large, these existing works study the exploits of a team of

searchers that cooperate to efficiently locate a target. Unsurprisingly, the study of

search scenarios that stress inter-agent competition among searchers calls for a new

evaluative framework and a customized assortment of analytic methods. Providing

these elements and putting them to use is the central contribution of this thesis.

The role of this preliminary chapter is to, at a high-level, introduce the types of

problems that will be of interest. To this end, we recount a number of real-world

examples in which the competitive search framework features prominently. The aim

is to whet the reader's appetite and motivate why the problems considered are both

intriguing and relevant.

In particular, we will provide an example based on the

operation of a taxi system that will be revisited and serve as a motivational aid

21

at various points throughout the thesis. A contributions section discusses how we

see the work that comprises this document supplementing and extending the field of

search theory. Finally, this chapter provides an overview of the thesis's organizational

structure. This outline is useful as both a navigational aid and a preview of the story

that follows.

1.1

Motivation

The problems considered in this thesis result from the fusion of two fundamental, yet

previously disparate, ideas. The first key idea is that a mobile agent that wishes to

locate a target, but does not know the target's exact location, is obligated to search

for it. The second key idea is that competition naturally arises when multiple selfinterested agents each vie to acquire a scarce resource. The first point is the premise of

search theory. The second point, somewhat more subtly, touches on the competitive

undertones of game theory. This work considers scenarios that incorporate both of

these notions through the study of multi-agent systems in which the agents compete

to capture targets given only limited knowledge of where the targets are located. To

understand the void that the problems investigated in this thesis begin to fill, it is

useful to, very briefly, highlight the types of search problems considered to date by

those in the community. A more detailed account of the relevant literature will be

provided in the next chapter.

In probabilistic search problems, one or more search agents attempt to (efficiently)

capture a target that is indifferent to their actions. In many of these cases, search

plans must be devised given only limited knowledge of a target's location. An example

of a probabilistic search problem is the case of an explorer trying to find buried

treasure given a raggedy and faded map of the area.

When multiple agents are

involved in the search, their plans are often formulated in a cooperative context in

order to improve efficiency.

For example, coordinated planning may increase the

probability the target is found or reduce the expected time required to capture the

22

target. It also has the benefit of avoiding redundancies that could emerge if the agents

planned independently.

In pursuit-evasion games, the target assumes a more animated role and actively

chooses a fixed hiding location (immobile target) or trajectory (mobile target) to.

evade capture by a team of searchers. Again, the searchers act cooperatively in order

to capture the target efficiently, e.g., in minimum time or minimum expected time. In

other words, competitive tension exists only between the target and, collectively, the

team or search agents. The agents themselves have no preference for which agent, if

any, ultimately finds the target. An example of a pursuit-evasion game is the case of

an escaped convict who tries to evade capture by a team of police officers. Pursuitevasion games naturally incorporate an element of game theory, as it is reasonable,

and often necessary, for each party to factor in their adversary's actions when planning

routes and making search-related decisions.

Probabilistic search problems and pursuit-evasion games address decision-making

in a host of applications. However, they offer little guidance about how agents should

search when the agents, themselves, compete against one another to capture targets.

Pragmatically, it is fair to ask, why might one be interested in these scenarios? The

answer, in the author's opinion, is that there are a number of relevant venues where the

agent-versus-agent search dynamic features prominently. Before providing a collection

of examples, it is useful to first give an informal description of the exact relationship

that exists between agents and targets in the problems of interest. Naturally, a formal

discussion of each of these components will follow later chapter of the thesis.

In the search games considered in this thesis, each agent is adversarially aligned

with every other agent. Agents do not form teams, nor do they cooperate, unless

doing so expressly benefits all parties. Agents are, unless otherwise stated, and aside

from their initial conditions, homogeneous. Each agent has a prior on the location of

targets, but the exact locations of the targets are unknown. Moreover, an agent can

discover a target only when standing directly over it. Unlike pursuit-evasion games,

the targets in the games we consider are artifacts of the environment, not strategic

23

decision-makers. The targets are purely immobile and the locations at which they

appear in the environment are determined by a random process. Instead, the game,

as it were, is played among the search agents, with each agent trying to capture as

many targets as possible. To emphasize the stark differences that exist between this

search framework and the cooperative formulations previously described, we refer to

the problems considered in this thesis as competitive search games.

Returning to scenarios that emphasize the incentive for strategic decision-making

in a competitive search setting, consider the role of yellow cabs in Manhattan [73].

These taxis operate in what is called a "hail market". By law, yellow cab drivers may

only pick up passengers that have hailed them from the side of the street 169]. They

cannot schedule jobs in advance, nor can they respond to call-in requests. (Jobs that

originate under these circumstances are handled by a separate fleet of vehicles.) We

argue that yellow cab operations constitute a competitive search game. Abstractly,

the road network on which taxis drive may be viewed as a graph, whose edges and

vertices represent roadways and intersections, respectively. The targets are the passengers; they arrive dynamically according to an, albeit fairy complex socially-driven

and time-variant, spatio-temporal process. The game is played among the taxi drivers,

with each driver trying to maximize their individual revenue, which clearly requires

getting passengers onboard. To operate effectively, drivers must plan their routes by

accounting for the spatial demand pattern of passengers, as well as the location of

nearby cabs. An interesting feature of this system is that targets must be transported

from a pickup location to a dropoff location. That is, there is a service component

associated with capturing a target. The logistics of taxi operations will be revisited

at various points throughout this thesis to motivate specific problems of interest.

As a second example, consider the case of two rival shipwreck-recovery boats

searching for the outskirts of a jagged coral reef for the remnants of a treasure ship

lost at sea. Once more, we argue that this encounter has all the makings of a competitive search game. The environment is the subset of R2 that represents the waters

surrounding the reef. The lone target is the sunken ship. The game is played between

24

the two recovery boats, with each boat trying to discover the wreck (and any treasure that may be onboard) first. Given a priori knowledge of where the ship sank,

perhaps from crude sonar images, historical maps, word of mouth accounts of the

sinking, etc., each boat must chart a course to search the coastal waters surrounding

the reef. Once again, prudent search strategies must factor in not only probabilistic

information about where the target is likely to reside, but also the presence of a rival

salvage boat that harbors similar ambitions. A version of this scenario will motivate

the work in Chapter 6.

The preceding scenarios differ markedly in terms of workspace geometry, the number of agents involved, the processes by which targets arrive, and the time scales over

which searching takes place. This suggests competitive search games encompass a

broad class of search problems and there are potentially many other practical applications that fit naturally within the framework. For example, the same ideas emerge

in the prospecting and mining industries, where rival firms survey vast swaths of land

for gold, minerals, or oil deposits. Given preliminary geographic information, how

one of a handful of firms should prioritize testing potential mining sites in order to

be the first to file claims on the most lucrative locations, fits well within the domain

of competitive search games.

Along similar lines, imagine a military aircraft carrying sensitive information over

hostile enemy territory were to crash-land in a desert. How should authorities search

for the aircraft knowing other individuals, perhaps with unscrupulous intentions, are

also looking for the plane. Once again, the competitive search game framework is a

natural venue to pursue this question. Finally, competitive search games also have

relevant connections to the foraging behavior of animals in the wild and the diffusion

of bacterial colonies over a nutrient-laden agar plate. A sampling of these scenarios

are illustrated in Figure 1-1.

Given the apparent relevance of competitive search

games, it is surprising, at least to the author, that few results, even for scenarios

involving just two search agents, have been reported in the literature.

At this stage, we have, hopefully, convinced the reader that the competitive search

25

game framework captures the incentive for strategic decision-making in a host of

meaningful search applications.

However, as alluded to, the scenarios mentioned

above differ markedly with respect to key features and environmental parameters,

e.g., the number of search agents involved, the workspace geometries, the processes

by which targets arrive, and the time scales over which searching takes place. Unsurprisingly, it would be rather ambitious to expect a single formulation to capture the

nuances and peculiarities of each scenario. In this thesis, we will consider a collection

of idealized search games, with each encounter emphasizing one or more of the elements that punctuate the aforementioned scenarios. In analyzing these encounters,

this thesis provides the first rigorous analysis of competitive search games. A more

detailed exposition of our research philosophy and the contributions of our work is

discussed in the next section.

(a)

(b)

(c)

Figure 1-1: Depiction of real-world scenarios where agents compete, against one another,

to capture targets given limited information on the location of targets. (a) Snapshot of

taxi operations in Manhattan. In busy urban cities, the operation of taxi drivers trying to

find passengers can be represented as a competitive search game. (b) A sunken treasure

ship wrecked along a coral reef. The exploits that result from two rival recovery boats each

trying to find the ship using a crude sonar map of the area constitutes a competitive search

game. (c) A Kittyhawk P-40 that crash-landed in the Sahara desert during World War II.

In the event rescue and enemy forces had some idea of the aircraft's location, e.g., from

(intercepted) radio communications, and had each party launched a recovery operation, the

resulting race to locate the plane would be a competitive search game.

26

1.2

Contributions

This section provides a high-level synopsis of the contributions of the thesis.

A

detailed account of specific advancements can be found in the next section, where the

thesis is deconstructed on a chapter-by-chapter basis. Here, the focus is on conveying

the spirit of the thesis, defining the scope of the work, and remarking on the value of

the work going forward.

The major contribution of this thesis is a collection of algorithmic strategies that

agents can use to search for targets in an environment.

However, unlike existing

constructs, which have no competitive tension or pit a cooperative team of pursuers

against a target, the strategies presented herein are designed for situations in which

search agents compete against one another to find targets. As mentioned, we believe

this framework encapsulates the inter-agent search dynamics in many real-world scenarios, yet acknowledge that, in their fullest form, these problems introduce a number

of analytic and computational complexities.

To make headway, we will often restrict ourselves to encounters that involve two

agents and that take place in topologically simple environments. For example, many of

the problems considered involve two agents contesting targets on a ring. Despite these

restrictions, the search scenarios nevertheless support an assortment of interesting and

sometime surprising behaviors. Moreover, the modest furnishings of these problems

allow us to conduct a formal analysis of the constituent decision-making, often in the

form of quantifiable performance bounds, if not equilibrium strategies for the agents

involved. This approach not only caters to the author's research style, but also serves

to compile a set of initial competitive search game contributions. In this way, our

efforts begin the process of populating a toolbox of competitive search game results

that may prove useful in tackling more elaborate problems.

The next section outlines the content and contributions of the thesis on a chapterby-chapter basis.

27

1.3

Organization

This thesis is organized in chapters. Chapter 2 begins our investigation by providing

an overview of the relevant literature. By and large, this consists of contributions

to the fields of probabilistic search, pursuit-evasion games, and persistent planning

problems.

Included here is an overview of the Cow-Path Problem.

Many of the

scenarios considered in this thesis are an adversarial twist on this well-known problem,

so there is a vested interest in detailing its finer points. Throughout, we adopt the

philosophy that by understanding the pillars currently in place, one can better define

and appreciate the contributions of the work in this thesis. Still in a preparatory role,

Chapter 3 provides a brief overview of some of the technical details used in subsequent

chapters. Specifically, the game-theoretic terms discussed will be used to frame our

discussion of algorithmic search strategies.

With the requisite background material in place, the thesis moves straight into

novel material. The environment of interest in Chapter 4, as well as many of those that

follow, is a ring. Rather than plunge headfirst into a treatment of competitive search

games on a ring, we adopt a more tempered approach. As a prelude, we consider the

problem of how a hungry cow should search the ring to find a patch of clover in the

minimum expected time, given only a prior on the clover's location. This problem is

thematically very similar to the Cow-Path Problem, and serves as a stepping stone to

the adversarial encounters that follow. Iterative algorithms are provided for finding

optimal search strategies and, for games with bounded target densities, a bound is

given on the maximum number of times an intelligent cow would ever turn around.

In this latter case, we show that an optimal search plan may be expressed as the

end product of a nonlinear program followed by a trimming algorithm. This result is

noteworthy as the Cow-Path problem has no known closed-form solutions for general

target distributions.

Without further ado, Chapter 5 considers the canonical problem of this thesis: the

Cow-Path Ring Game, a scenario in which two hungry cows compete, given a prior,

28

to find a patch of clover on a ring. Upon introducing the necessary notation and

terminology, the problem is formally defined. Many of the games discussed in later

chapters will be variations of this formulation. A concerted effort is made to explain

why, for a number of reasons, including the realtime and feedback nature of the game,

the Cow-Path Ring Game is a challenging problem. In response, a simplified version

of the game is presented, one in which each cow may turn at most once. With this

restriction in place, an iterative algorithm is developed that establishes the existence

of an e-Nash equilibrium. Subsequently, a dynamic-programming-based approach is

used to extend this result to games in which each cow may turn at most a finite

number of times.

Moving on, Chapter 6 considers a potpourri of interesting scenarios that are sufficiently different from the Cow-Path Game to justify a chapter of their own. By imposing a cost each time a cow turns, a bound is developed on the maximum number of

times an intelligent cow would ever reverse directions. Continuing, we consider games

in which each cow maintains a unique prior on the target's location. Carrying this

momentum forward, we consider games where one cow is in the advantageous position

of knowing where her rival suspects the target is located. This dichotomy requires

specification of a behavioral model for both the more-informed and less-informed cow.

Assuming the less-informed cow behaves conservatively, it is shown the game admits a

Nash equilibrium. Moreover, for select distributions, the more-informed cow is able to

increase her utility by leveraging her situational advantage. The asymmetric nature of

these games invites the opportunity to study the social welfare of competitive search

games. To this end, a cooperative search strategy is presented that is socially optimal

for any set of target priors. This treatment transitions naturally into a discussion,

albeit a brief one, of the price of anarchy in competitive search games.

Chapter 7 considers competitive search games in which targets arrive dynamically

on a ring. The persistent nature of these games places an emphasis on strategies

that ensure targets are captured efficiently in the long-run.

Among the dynamic

games introduced are scenarios with transport requirements, in which targets, once

29

found, must be delivered to a destination point. A defining attribute of the search

strategies in these games concerns how a cow should position herself while her rival

is preoccupied delivering a target.

As a first contribution, we show that in any

equilibrium of a two-cow dynamic search game, each cow captures, in steady-state,

half of all targets. With this benchmark established, it is shown that greedy search

strategies can, for select target distributions, lead to arbitrarily poor capture rates.

Because it is difficult to quantify the long-term effect of short-term actions in an

equilibrium setting, we, instead, focus on defensive or conservative search strategies

and lowerbound the expected fraction of targets captured using these methods. This

bound is then compared with the theoretically optimum value, i.e., one half, for select

target distributions.

Finally, Chapter 8 summarizes the prominent ideas of the thesis, reflects on the

contributions made, and evaluates the resultant state of competitive search games.

It also takes the time to outline an assortment of open research directions that have

arisen during the development of this work, but that, on account of time constraints,

or their tangential nature, or both, have received only modest deliberation.

30

Chapter 2

Background Material

This chapter provides an overview of related work. Recall that competitive search

games stress the goal of finding targets in an agent-vs-agent setting. We view search

theory as the natural domain of our work, and game theory as the appropriate framework to study the problems of interest. Consistent with this mindset, we will not

attempt to summarize works from the field of game theory. Rather, a short compendium of the basic game-theoretic ideas, which constitute tools for our study, will

be provided in Chapter 3. These ideas are well established and covered in any introductory text on the subject. The major contribution of this thesis lies in using these

ideas to understand search in a novel setting. To this end, this chapter surveys relevant contributions to the field of search theory. The intent is to provide an overview

of the literature, both pre-existing and ongoing, that has relevant connections to

competitive search games, be the association in terms of application, methodology, or

both. This highly focused survey of select works in the fields of probabilistic search,

pursuit-evasion games, and, what we will refer to as persistent planning problems,

fosters an appreciation for the state-of-the-art and assists in defining the ultimate

identity of the thesis. Unlike competitive search games, the vast majority of works

covered in this chapter do not pit one search agent against another. Nevertheless,

they share salient features with the problems we consider or serve to better position

the thesis work within a larger search narrative.

31

2.1

Search Theory: An Introduction

Searching for a lost or hidden item is an age-old problem. People still misplace their

keys, passport, phone, cash, etc., and, when they do, typically search to find them.

Searching is also associated with larger-scale recovery operations, e.g., rescuing a

camper lost in the wilderness. In this thesis, search theory is defined as the study of

problems that take place in a spatial environment and involve n agents trying to find

m targets. Note this definition is quite broad. For example, it says nothing about the

interaction between agents, which could be of a cooperative or competitive nature,

nor the manner in which targets are distributed and arrive. In many cases, the task

of searching is constrained by the need to locate targets efficiently. For example,

in some applications, there is the possibility a target goes undiscovered.

Here, it

makes sense to use a strategy that maximizes the probability of finding it. In other

applications, it is known that a target resides somewhere in a bounded environment.

Although a lawnmower-style sweep of the workspace is guaranteed to find the target,

it is more appropriate to use a search strategy that minimizes the expected discovery

time [31]. In short, interesting search problems combine the goal of finding targets

with the need to provide some form of performance guarantee.

When people misplace basic everyday items, they typically launch small-scale,

ad-hoc campaigns to relocate them. These undisciplined approaches generally suffice

for finding low-value items in small spaces.

However, as the value of the object

increases, e.g., a human life, the size of the environment grows, e.g., a forest hundreds

of square kilometers in size, or both, successfully searching demands a more structured

treatment.

The first rigorous approach to solve search problems was undertaken

during World War II when the Anti-Submarine Warfare Operations Group was tasked

with finding submarines in the Atlantic [58], [63]. The declassification of these efforts

kicked off a mathematical investigation of search-related problems. Today, search

theory is an established field within operations research. More recently, advances in

autonomy have initiated efforts to revisit traditional search paradigms from a robotics

32

perspective. This movement has attracted interest from control theorists, roboticists,

and computer scientists. These researchers have raised the profile of new and emerging

applications within the search community, and advocated for more algorithmic and

pragmatic approaches to solve many existing search problems [17],

[331.

Auspiciously, search theory researchers have surveyed their field with remarkable

regularity, including the authors of [33], which recount many of the efforts detailed

here. Owing to these efforts, search problems generally obey a well-defined taxonomy.

Figure 2-1 provides a visualization of select disciplines within the hierarchy of search

problems. Rather than reiterate existing surveys, the focus of this chapter is on conveying advancements that help to frame the contributions of our work. Accordingly,

we freely pick and choose to cover the topics we deem most relevant. In doing so, we

often ignore or only briefly touch on contributions that while extremely significant,

are of minimal importance for future discussion. For completeness, the interested

reader can find detailed accounts of the classical period of search theory, spanning

the first forty-or-so years of the field, which we touch on only briefly, in [17], [371,

[62], [92].

The last section of the chapter highlights problems that stress persistent planning

for applications that feature indefinite horizons of operation and time-varying environments. Many of the results reported have emerged only recently, and, so far, have

had only tenuous affiliations with searching. Nevertheless, their long-term approach

to planning is pertinent for the work in Chapter 7, where we consider games with

dynamically arriving targets. In briefly surveying these works, the intent is to convey

how our efforts contribute to the state-of-the-art.

33

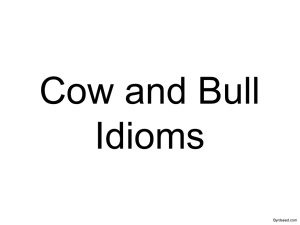

search theory

search games

probabilistic search

imperfect sensor

static

evader

mobile

evader

agent(s) vs target

perfect sensor

statiC

evader

cow-path

problems

mobile

evader

games

Figure 2-1: A partial taxonomy of select sub-disciplines within the field of search theory.

It is worth reinforcing that the families of games represented are only a relevant sampling

of select research areas in the field, and not an exhaustive listing. The box shaded in blue

represents agent-vs-agent or competitive search games, the class of problems that will be

the focus of this thesis. The location of CSGs in the tree indicates these problems share

fundamental attributes with probabilistic search problems and pursuit-evasion search games.

The image was inspired by similar figures reported in 1171, [331.

2.2

Probabilistic Search

In probabilistic search problems, a search agent, located in environment Q, attempts

to capture a target, T, whose initial position and movement are independent of the

agent's actions [33].

That is, T is impervious or indifferent to being captured. In

many cases, the agent must devise a search plan given only a prior density,

fy

:

Q -- R>o, on T's position. Initial efforts to place probabilistic search problems on

a firm theoretical foundation were undertaken in [58], and later expanded upon in

[59], [60]. Here, geometric arguments are used to characterize the sensor footprint

and detection efficiency of various sensor rigs, e.g., aerial surveillance with a human

spotter. Included is a result linking random search to a detection probability function

that is exponential in the time spent searching a bounded region.

As Figure 2-

1 indicates, probabilistic search problems are naturally categorized according to the

sensing capabilities of the agents involved. For most problems, the natural distinction

is between sensors that are (i) imperfect, i.e., may generate false-negatives, and (ii)

perfect, but have a finite sensing radius range.

34

2.2.1

Search with an imperfect sensor

When an agent's sensor is imperfect, it may fail to detect a target within its sensing

zone. Accordingly, whether the target is found must be discussed in probabilistic

terms. For the time being, assume the target is stationary. The detection function

b : Q x R>O -

[0, 1], assigns the probability b(q, z) to finding the target at point

q E Q, given z amount of effort is spent searching q, and the target is in fact at

q. Given fW, b, and a constraint budget C on the resources (e.g., time) that can be

devoted to searching, the canonical imperfect-sensor search problem is to find z*(q)

the optimal expenditure of search effort at each point q E Q that maximizes the

probability of finding the target subject to all constraints, i.e.,

z* = argmax

J

f(q)b(q, z(q))dq

(2.1)

z:Q-+-Ryo I

s.t. Jz(q)dq ; C.

qcEQ

(2.2)

In continuous environments, solutions to (2.1)-(2.2) typically employ Lagrange

multiplier techniques [60], [92], [95]. Hypothesis testing [40], [741 and convex programming [28] methods have also been used.

If an optimal search plan z*, with

search budget T, has the property that for all t < T, z* restricted over [0, t] is optimal for search budget t, z* is said to be uniformly optimal -

a desirable property.

In [7], [61], it is shown that uniformly optimal search plans exist for a broad class

of problems, provided b is a regular function, i.e., b(.) is increasing, but provides diminishing returns in the argument, [33], [93]. Unfortunately, the search plans these

methods generate are not always feasible, and may require the agents jump or teleport

between points in order to realize them [10],

[35],

[76]. Even today, search plans often

neglect this fundamental requirement, providing high-level search instructions, but

little instruction as to how these may be transformed into drivable search routes, i.e.,

realizable paths [26], [66]. The search plans considered in this thesis all correspond

to continuous, i.e., realizable, trajectories.

35

From a search perspective, an environment Q is discrete if it may be partitioned

into regions, 1, ...

,K

such that b(k, z) is the probability of finding the target in

region k, given z amount of effort is* spent searching region k (as a whole) and the

target is in fact in region k. Unfortunately, Lagrange methods do not extend directly

to discrete Q. The necessary amendments are discussed in [50]. As an analog to

uniform optimality, it was shown in [30] that optimal search plans in discrete Q are

temporally greedy if at any point during search, the next cell to be further inspected

is the one that maximizes the marginal gain in detection probability per marginal

effort expended. Operating within discrete environments makes it easier to formulate

and analyze variants on the basic problem structure. [17] and [33] provide an solid

overview of these and other endevors. In this and the next paragraph, we reiterate a

number of these efforts to provide a sense for the scope of problems that have been

addressed. Returning to discretized search paradigms, partitioning the environment

and the effort expended into discrete components is a much more natural framework to

model, among other things, scenarios where a quantized level of effort must expended

each time a region is searched and the rewards for capture are discounted in time

or search effort. More discussion of these problems, often referred to as sequential

searching, can be found in [52], [68], [93],

194].

A recent trend in one-sided searching is to consider operations in physicallyexpansive environments. In these settings, streamlining the numerics is an important consideration to alleviate computational bottlenecks.

Efforts in this vein are

recounted in [82]. Other works have focused on search problems with false contacts

[53], sensors that generate false positives [45] or, similarly, contain obstacles in the

environment.

In the optimal stopping problem, it is not known whether or not a

target actually resides in the environment [51], [52]. Rather, after some amount of

exploration, the agent must report (i) the target is not in Q, or (ii) the target is in Q

alongside its most likely location. Of course, the agent may reply incorrectly. These

problems introduce a new class of performance metrics, e.g., the probability the agent

makes a correct decision about target's inclusion in Q. Dynamic formulations of this

problem use observations to update target belief functions within a Bayesian setting

36

[34]. This latter class of problems results in feedback search plans that use recorded

observations to guide future decision-making. Motivated by an interest in developing autonomous robotic search platforms, a number of recent efforts have considered

cooperative, multi-agent formulations to solve variants of the probabilistic search

problem. For example, [32] uses a Bayesian approach that allows a team of pursuers

to quickly decide (though not necessarily correctly) whether or not a target is in the

environment, and dynamic programming-based solutions to solve similar problems

are discussed in [65]. However, as these methods often scale unfavorably with respect

to key factors, e.g., the number of search agents or the workspace complexity, research

efforts have also been directed at developing suboptimal algorithms with known, and

acceptable, performance guarantees.

2.2.2

Search with a perfect sensor

A sensor is perfect if it never registers false negatives. Occasionally, it makes sense

to rule out the possibility of false positives as well. It is important to remember that

perfect sensors are still assumed to have a finite sensing radius. If all targets are

stationary, then there is no need for an agent equipped with a perfect sensor to scan

any point more than once for targets. For this reason, perfect-sensor problems focus

not only on finding targets, but doing so in the minimum expected time. Of course,

if targets arrive dynamically, it is likely necessary to revisit points throughout the

workspace. This section considers a collection of perfect-sensor, probabilistic search

problems for static environments.

The Cow-Path Problem, or simply CPP, was proposed, independently, by Beck

[12] and Bellman [16] in the 1960s.

As the reader may have surmised, the CPP

has strong thematic connections with the encounters studied in this thesis. This is

reflected, most notably, by the fact that the players or agents in our games are also

cows. Since its inception, the CPP has become a canonical problem in the fields of

probabilistic path-planning, robotics, and operations research. The exact statement

of the CPP varies from field to field. For example, those in the online algorithms

37

community typically concentrate on a version that emphasizes search performance

in the worst-case [351. In this thesis, however, we will be interested in the following

stochastic version, which places a premium on performance in the average case.

Definition 2.1 (The Cow-Path Problem). A hungry cow is positioned at the

origin of a fence represented by the real line R. The cow knows that a patch of clover

resides somewhere along R, but has only a prior, fT : R --

R>o, on its location.

The cow can move at unit speed and reverse direction instantaneously. On account

of being severely nearsighted, the cow can locate the target only when she is standing

directly over it. How should the cow search to find the clover in the minimum expected

time?

fence

Figure 2-2: Illustration of the key features of the stochastic or average case Cow-Path

Problem. Starting from the origin, the cow explores R in search of clover T. A hypothetical

search plan is shown in gray. In the instance depicted, the cow makes four turns before

finding the target at the point marked with a red exclamation mark.

In the CPP, the cow's sensory capabilities are represented by a sensor that has

zero sensing radius, but perfect accuracy. Figure 2-2 provides a visualization of the

CPP. In the decades since the CPP's inception, various analytic conditions necessary

for search plan optimality have been reported, e.g., [9], [12], [13], [141, [15], [54]. Given

these contributions, it is perhaps surprising that exact (analytic) solutions are known

for only a handful of target distributions; specifically, the rectangular, triangular,

and normal density functions [42]. For general distributions, the common approach

remains to discretize the workspace and rely on dynamic-programming techniques.

38

Recently, a variant of the CPP, in which costs are levied against the cow each time

she turns, was investigated in [361.

2.3

Pursuit-evasion games

Pursuit-evasion games involve one or more pursuers trying to capture an evader.

Typically, these games can be effectively classified according to which aspects of the

pursuit receive the greatest emphasis, which, in turn, drive the mathematical techniques and solution methodologies employed. Unless otherwise stated, it is assumed

throughout the section that both the pursuer and evader are mobile.

The Princess-and-Monsterand Homicidal-Chauffeurgames [11], involve one player

attempting to capture a more agile adversary. In these and other games where it

is critical to model player dynamics, e.g., a finite-turning radius, acceleration constraints, etc., the interplay between pursuer and evader may be cast as a differential

game. The player's objectives and dynamics are incorporated in the Hamilton-JacobiIsaacs equation, which, when solved, gives each player's equilibrium strategy in the

form of a full-state, position, feedback control law. Unfortunately, as more pursuers

are recruited for searching, or the workspace becomes more complex, e.g., obstacles

or irregular perimeter boundaries are introduced, these methods quickly become intractable [33]. Differential games are sufficiently distinct from competitive search

games that to avoid unnecessary digression, the interested reader is referred to [47],

[48] for a detailed account of the subject.

Combinatoric search games strip away details of the problem deemed superfluous

for the application at hand. Instead, player motion and workspace geometry are modeled using simplified dynamics and basic topological structures. These abstractions

permit an insightful high-level study of pursuit-evasion scenarios that would not otherwise be possible. Within this class, ambush games stress the need for algorithms

that ensure the evader is captured, even under conditions that are highly unfavorable

or statistically rare. In the cops-and-robbers game, a robber (evader) and one or more

39

cops (pursuers) take turns moving between vertices of a graph [3], [75]. The cops win

the game whenever a cop and the robber are collocated at a vertex. When capture

can always be avoided, by judicious play on the part of the robber, the robber wins.

The cop number of a game is the minimum number of cops needed to ensure capture

for any initial conditions of the game. Generally speaking, the bulk of research in

this area is aimed at characterizing winning conditions, the cop-number, and monotonicity, i.e., the property that the number of safe vertices decreases in the number of

cop moves, in relation to graph topology. For example, a famous result is that every

planar graph has a cop number of three or less. Extensions of these ideas to games

played on hypergraphs are discussed in [43] for the marshalls-and-robbersgame.

Parson'sgame considers the problem of clearing a building (a graph with nodes

and edges representing rooms and hallways, respectively) that has been infiltrated by

an infinitely fast and agile trespasser [78], [79]. The problem is similar to the artgallery problem [76], except the super-human speed of the assailant requires rooms

be swept in a manner that ensures previously cleared spaces are not recontaminated

[78]. This is achieved by placing guards at topologically-inspired locations to ensure

the perpetrator is confined to an ever diminishing region of the workspace. Research

efforts have focused almost exclusively on the search-number, i.e., the minimum number of guards needed to locate the evader in the worst-case. Once again, much less

effort has been devoted to developing search algorithms to carry out such a sweep,

or to characterizing the time complexity of these schemes. The GRAPH-CLEAR

problem is an extension of Parson's game in which multiple agents are, in general,

required to guard doorways and sweep rooms [56]. Determining the minimum number

of pursuers is known to be computationally challenging, but efficient algorithms have

been reported for graphs possessing special structure, e.g., tree graphs [55], [57].

The lion-and-man game is thematically similar to cops-and-robbers, but unfolds

in two-dimensions, typically a circle or a polygon. The hungry lion tries to eat the

man, while, as one may have guessed, the man tries to escape. Conditions for the

lion to capture the man are reported in [84] for a turn-based version of the game.

40

Extensions to higher-dimensions, most meaningfully R 3 , are reported in [4], [641. A

robotics-inspired variant of the game, in which the pursuer has line-of-sight visibility,

but cannot see through obstacles or boundaries is proposed in [44], [67]. Strategies

for two lions to capture the man in any simple polygon and for three lions to capture

a man in a two-dimensional polygon with obstacles are reported in [49] and [221,

respectively. In many of these problems, randomized search schemes are employed

to minimize the search number. Variations of the game involving finite sensing radii

are reported in [4], [24].

The problem of coordinating multiple agents that each

have a finite sensing radius remains an open problem [331, as does quantifying the

time-complexity of possible capture schemes.

The essential elements of Parson's game are extended to hyper-graphs as in [43],

where they refer to the encounter as a marshalls-and-robbersgame. Here the graphtheoretic notion of tree width features prominently in analyzing the game. Establishing connection to other notions of graph theory, various complexity measures for

pursuit-evasion games are characterized in [25], [83].

A more complete survey of

pursuit evasion in graphs can be found in [6], [391, and more recently in [33] which

originally pointed the author to these works.

A second class of combinatoric search game concerns the exploits of a pursuer who

tries to capture an evader in minimal time. The evader, not being one to go quietly,

strives to delay capture for as long as possible [5], [42]. Owing to these competing

objectives, optimal player strategies are best understood using the language and formalism of game theory. Equilibrium strategies, which are typically highly dependent

on workspace geometry, are reported in [5] for games taking place in an assortment

of environments, including line segments, specialized graphs, and compact regions of

R2. Once again, the use of mixed strategies is critical to describing equilibrium play.

Also in [5], the authors consider team search games in which multiple pursuers scour

the environment in an effort to locate the evader quickly. In this respect, the game

is played between the team of pursuers and the lone evader; individual pursuers have

no preference for which of them, if any, ultimately succeeds in capturing the evader.

41

As in the problems we consider, the structure of player strategies in adversarial

search games depends heavily on a number of key factors, including sequencing of

player moves, e.g., simultaneous versus turn-based; the information made available to

the players, e.g., finite versus infinite sensing radius; the geometry of the environment;

and the number of pursuers, i.e., search agents, participating in the game. Depending

on the specifics of the problem, solutions and efficient algorithms for computing them

are either known, known to scale poorly with problem complexity, or remain open

research problems [33].

It is useful to take stock of the body of work discussed thus far in the context

of competitive search games. Recall that competitive search games emphasize the

decision-making process of search agents that compete (rather than cooperate) to

capture a target. In this setting, agents must individually design and execute search

plans given only a prior on the target's position. This requirement recalls the basic

premise of probabilistic search problems. However, due to inter-agent competition

for targets, prudent search strategies must account for the presence of other agents in

the workspace, and thus prove efficient in a game-theoretic sense. This latter point

recounts the methodology used to analyze select pursuit-evasion games. The next section considers problems that unfold in dynamically changing environments. Although

not directly search-related, these problems help to frame the work in Chapter 7, where

targets are contested on an ongoing basis in a time-varying environment.

2.4

Persistent planning problems

When an environment is dynamic or contains an inherent level of uncertainty, it is

often necessary to employ agents that perform a task indefinitely. This requirement

is present, for example, in surveillance and patrol applications. Here, agents must

inspect or provide service to specific regions of the workspace on an ongoing basis

[21]. Describing policies that realize this long-term functionality is best achieved

by using iterative constructs and adopting an algorithmic perspective. This section

42

draws attention to research, much of which is quite recent, that stresses the need for

precisely this type of persistent planning.

In dynamic vehicle routing (DVR) problems, one or more service vehicles mobilize

within a workspace to accomplish a task; usually to satisfy a set of demands that arrive

dynamically at a time and location that are unknown in advance. However, unlike the

search scenarios we consider, service vehicles in DVR problems are alerted to each new

demand and its location at the time of the demand's arrival. To provide a high quality

of service, agents adopt adaptive policies that reflect the current state of the system

and, to some extent, a projection for how the set of outstanding demands will evolve

[80]. A typical quality of service metric is the expected amount of time, in steadystate, that a demand spends in the system before receiving service 118]. In the dynamic

traveling repairman problem (DTRP), vehicles are notified of each demand's location,

provide on-site service, and the service times are modeled as random variables [191,

[20]. More exotic variants of the DTRP framework that capture features such as

customer impatience, various demand priority levels, and nonholonomic vehicular

constraints are studied using queueing-based policies in [27].

A decentralized DTRP policy that performs optimally under light-load conditions

is considered in [8].

More relevant to the research at hand, however, is the fact

that the policy supports a game-theoretic interpretation in which a socially optimal

Nash equilibrium exists. Service vehicles treat the centroid of all previously serviced

demands as a home base, and return to wait at this location when the environment is

void of outstanding demands. When demands do appear, the vehicles venture forth

to provide service, adjusting their home base as necessary. In this case, the utility

function of each vehicle places a premium on being much closer to a demand than

the next closest vehicle in the workspace.

The problem of finding a target that intermittently emits a low-power distress

beacon is presented in [881. A vehicle with finite sensing radius is capable of locating

the target only when it senses a signal that originated from within its sensing zone.

The expected time for a vehicle to discover the target using periodic search paths

43

is analyzed. In [46], the persistent patrol problem assumes that targets enter the

environment dynamically according to a renewal process, and the vehicle has a prior

over each target's location. Persistent search plans are designed to, in steady-state,

and as the sensing radius becomes small, locate targets in minimal expected time.

Extensions to multi-robot persistent patrol scenarios are provided in [38] in the context of optimal foraging strategies. The main result is that the frequency of visits

to a region is proportional to the third-root of the target density in that particular

region to ensure targets are collected in a timely manner.

The task of providing persistent surveillance to a finite set of feature points within

a region is considered in [87]. The points in question could represent, for example,

sites at which hazardous waste materials have accrued and must be collected for safe

disposal. A periodic speed controller for a fixed vehicle route is designed to minimize

the maximum steady-state accumulation of waste across the sites. A closely related

problem is addressed in [86], where observation points are classified based on the

required visitation frequency and a control policy is enacted that ensures each point

is visited sufficiently often. Similar themes are explored in the context of perimeter

patrol, coverage, and surveillance applications, e.g., [1], [2], [26], [29], [31], [81], [85].

The collection of persistent planning problems outlined above emphasize the need

to continually revisit sites within the environment.

This involves translating the

operational objectives of the problem, in conjunction with the geometric and temporal parameters of the systems, into a visitation schedule for the various sites in the

workspace. In Chapter 7, we consider games in which targets enter the environment

dynamically.

Here again, agent planning requires deciding how often to visit spe-

cific points within the workspace. Of course, the competitive nature of our problem

requires integrating these considerations with the recent travel histories of nearby

search agents.

44

Chapter 3

Mathematical Preliminaries

This chapter provides a focused summary of the main theoretical tools used in the

thesis. Because we are interested in characterizing search strategies that find targets

efficiently in the face of inter-agent competition, this amounts to a presentation of

key game-theoretic ideas. As mentioned in the previous chapter, search theory draws

upon a range of techniques, but has few transcendent results. Rather, contributions

tend to be application-specific. In contrast, game theory has a number of core ideas

that permeate the field and provide a framework to characterize and study a range

of multi-agent encounters, search-related or otherwise. Here, we recount three such

concepts: Nash equilibria, best-response strategies, and maximin strategies. A full

discussion of these ideas may be found in any of the many textbooks on the subject,

including [111, [41], [70], [771. Finally, a short example is provided to better illustrate

each concept. Although well-established, these ideas, taken collectively, provide an

able toolset for characterizing strategic play in competitive search games.

3.1

Game theory

For the purpose of this thesis, a game constitutes an encounter involving n players in

which the utility, i.e., well-being or payoff, of each player depends on the collective

actions of all players. Game theory studies the decision-making process and ultimate

45

outcome of players in a game. In competitive search games, the number of targets a

cow captures depends not only on her search strategy, but also the search strategy

of each rival cow. Therefore, the scenarios of interest are games not only in the

vernacular, but also in the game-theoretic sense. Moreover, game theory is the natural

tool to describe and analyze these encounters. No concept is more central to gametheory than that of a Nash equilibrium, or simply NE, a strategy profile, i.e., a

collection of player strategies, from which no player has a unilateral incentive to

deviate.

For our work, the closely related concept of an c-NE also proves useful

[71], [72]. To this end, we present the following definition, which closely follows the

presentation in [41].

Definition 3.1 (Pure Strategy E-Nash Equilibrium). Let g be a game with n

players, V = {1, . . . , n}. Let Si be the strategy set of player i and S = Si x

...

x Sn

the set of strategy profiles. For s E S, let si be the strategy played by player i in s

and s-.

the strategy profile of all players other than i in s. Let U2(s) be the utility

of player i under s. For e > 0, a strategy profile s* E S is a pure strategy c-Nash

equilibrium of 9 if for all i E V,

U (si , s_) + E > U (si, s*j) for all si E Si.

(3.1)

In words, (3.1) says that s* is an E-Nash equilibrium, or E-NE, if no player can