GAO INFORMATION TECHNOLOGY DOD Needs to

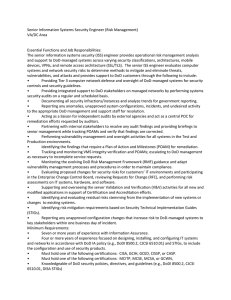
