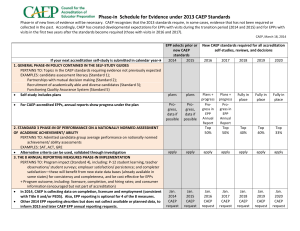

CAEP Evidence Guide January 2015
