THE VULNERABILITY OF TECHNICAL SECRETS TO POLICY

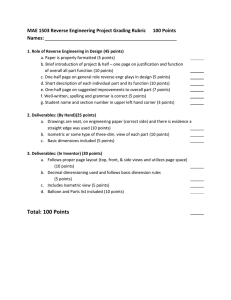
