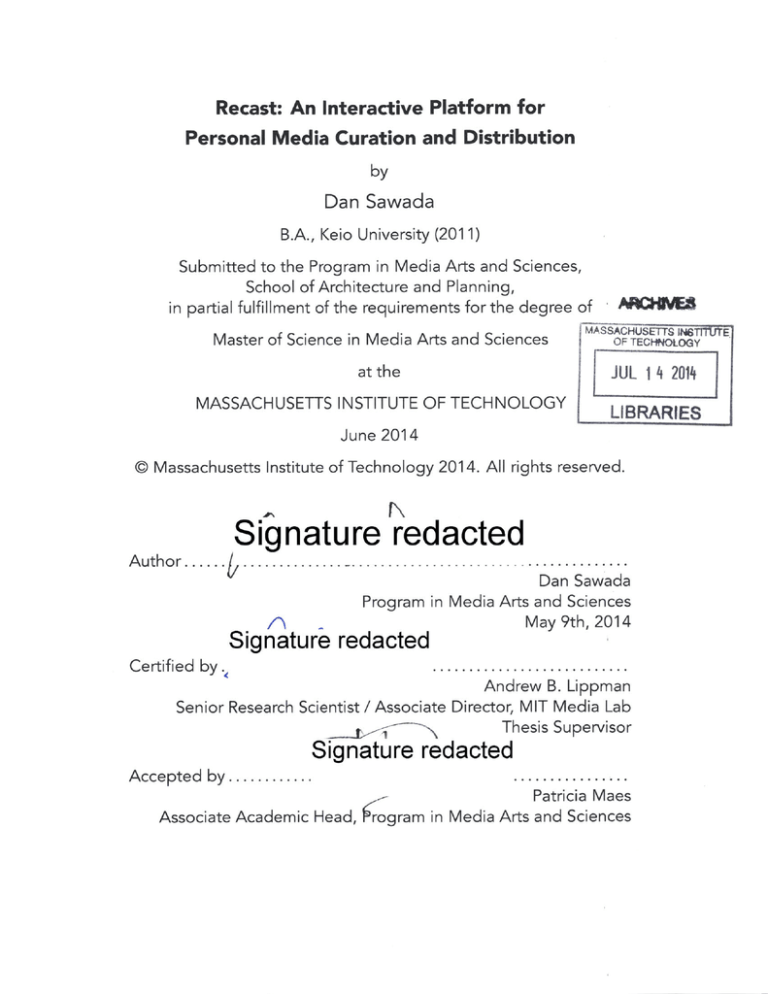

Recast: An Interactive Platform for

Personal Media Curation and Distribution

by

Dan Sawada

B.A., Keio University (2011)

Submitted to the Program in Media Arts and Sciences,

School of Architecture and Planning,

in partial fulfillment of the requirements for the degree of

Master of Science in Media Arts and Sciences

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2014

© Massachusetts Institute of Technology 2014. All rights reserved.

Signature redacted

Author

Dan Sawada

Program in Media Arts and Sciences

May 9th, 2014

Signature redacted

Certified by

Andrew B. Lippman

Senior Research Scientist / Associate Director, MIT Media Lab

Thesis Supervisor

Signature redacted

Accepted by

Patricia Maes

Associate Academic Head, Program in Media Arts and Sciences

Recast: An Interactive Platform for

Personal Media Curation and Distribution

by

Dan Sawada

Submitted to the Program in Media Arts and Sciences,

School of Architecture and Planning,

on May 9th, 2014, in partial fulfillment of the

requirements for the degree of

Master of Science in Media Arts and Sciences

Abstract

This thesis focuses on the design and implementation of Recast, an interactive media system that enables users to dynamically aggregate, curate, reconstruct, and distribute visual stories of real-world events, based on various perspectives. Visual media have long been a means of consumptive information acquisition. However, advances in communication networks and consumer devices have made visual media a powerful tool for user expression.

Given this background, Recast aims to present an intuitive platform for proactive citizens to create visual storyboards that represent the world from their perspective. To meet this need, Recast proposes a media analysis platform, as well as a block-based user interface for semi-automating the workflow of video production. An operation test and a user study verified that Recast is successful in achieving its initial goals.

Thesis Supervisor: Andrew B. Lippman

Title: Senior Research Scientist / Associate Director, MIT Media Lab

Recast: An Interactive Platform for

Personal Media Curation and Distribution

by

Dan Sawada

Signature redacted

Thesis Advisor

Andrew B. Lippman

Senior Research Scientist / Associate Director, MIT Media Lab

Signature redacted

Thesis Reader

V. Michael Bove, Jr.

Principal Research Scientist, MIT Media Lab

Thesis Reader

Signature redacted

Ethan Zuckerman

Principal Research Scientist, MIT Media Lab

Acknowledgments

With the utmost gratitude, I thank my advisor, Andy Lippman, for offering me the opportunity to come to the MIT Media Lab and guiding my way through research. Your guidance, both academic and personal, was extremely meaningful.

I thank my thesis readers, Mike Bove and Ethan Zuckerman, for their wonderful

insights and comments toward completing this thesis.

I thank the members of the Digital Life Consortium and the Ultimate Media

Initiative of the MIT Media Lab, especially Comcast, DirecTV, and Cisco, for financially supporting my research.

I thank the members and alums of the Viral Spaces group (Travis Rich, Rob

Hemsley, Jonathan Speiser, Savannah Niles, Vivian Diep, Amir Lazarovich, Grace

Woo, and Eyal Toledano) for their friendship.

Amongst the members of my group, I owe a special thanks to Rob Hemsley

and Jonathan Speiser, my fellow mates of The Office (E14-348C), for all the fun

and inspiration.

I thank all the Japanese students and researchers in the lab, especially Hayato

Ikoma, for their support and encouragement.

I thank all of my classmates, and everyone at the lab, for providing such a unique culture and environment for pursuing the concepts of the future.

I thank my parents, Shuichi and Teruko Sawada, for their understanding and

empathy.

Last but not least, I thank Shiori Suzuki, my beloved fiancée, for always being

with my heart.

Contents

1 Introduction

1.1 Motivation

1.2 Contributions

1.3 Overview of Thesis

2 Background and Purpose

2.1 Utilizing Visual Media Toward Self Expression

2.2 Video Production and Editing

2.2.1 History of Video Production and Editing

2.2.2 Workflow of Video Production and Editing

2.2.3 Issues Around Video Production and Self Expression

2.3 Purpose

3 Related Work

3.1 Media Analysis

3.2 Media Aggregation and Curation

3.3 Internet-based Media Distribution

4 Approach

4.1 Media Analysis System

4.1.1 System Concept

4.1.2 System Overview

4.2 Recast UI

4.2.1 Concept of Interaction

4.2.2 Overview of UI

5 Media Analysis System

5.1 Media Acquisition Framework

5.1.1 Cloud-based DVR

5.1.2 Web Video Crawler

5.1.3 User Upload Receiver

5.2 GLUE

5.2.1 Download Module

5.2.2 Transcript Module

5.2.3 Thumbnail Module

5.2.4 Scene Module

5.2.5 Face Module

5.2.6 Emotion Module

5.3 Media Database

5.3.1 Data Store

5.3.2 Supplemental Indexing for Text-based Metadata

5.3.3 Media Database API

5.4 Constellation

5.4.1 System Setup

5.4.2 User Interaction

5.4.3 Visualization Interfaces

6 Recast UI

6.1 Overview of Implementation

6.2 Scratch Pad and Main Menu

6.3 Content Blocks

6.3.1 Video Assets

6.3.2 Image Assets

6.3.3 Text Assets

6.4 Filter Blocks

6.5 Overlay Blocks

6.6 Asset Management Service

6.7 Recast EDL

6.7.1 Timeline

6.7.2 Recast Video Player

6.7.3 Publishing Recast EDLs

7 Evaluation

7.1 Operation Tests

7.1.1 Method

7.1.2 Results

7.1.3 Considerations

7.2 User Studies

7.2.1 Method

7.2.2 Results

7.2.3 Considerations

8 Conclusion

8.1 Overall Achievements

8.2 Future Work

8.2.1 Improvement of Metadata Extraction

8.2.2 Enhancement of Recast UI

8.2.3 Large-scale Deployment

A List of Video Samples

B User Study Handout

List of Figures

1-1 Live Coverage of the 9/11 Terror Attacks

1-2 The Announcement of Pope Francis's Election

2-1 Vine

2-2 List of Supercuts

2-3 CMX 600

2-4 Popcorn Maker

2-5 NBC News Studio in New York City

3-1 Visualization of Trending Topics on Broadcast Television

3-2 Word Cloud of Trending Topics on Mainstream Media

3-3 Ustream

4-1 Concept of Tagging Scenes With Metadata

4-2 High-level Design of The Media Analysis System

4-3 Overview Design of the Recast UI

5-1 Example of a GLUE Process Request

5-2 Snippet of an SRT File

5-3 Example of an Object Representing a Phrase Mention

5-4 Example of Thumbnail Images

5-5 Example of Scene Cuts

5-6 Example of a Face Detected Within a Video Frame

5-7 Waveform and Emotion State of a Speech Segment

5-8 Setup of Constellation

5-9 Constellation in Metadata Mode

5-10 Constellation Transitioning to Metadata Mode

5-11 Visualization Screens in Constellation

6-1 Scratch Pad and Main Menu of the Recast UI

6-2 Examples of Content Blocks

6-3 Specifying Search Keywords for Retrieving Video Assets

6-4 Preview of Video Assets

6-5 Chrome Extension for Capturing Screen Shots of Web Pages

6-6 List of Image Annotation Tags in Menu

6-7 Preview of Image Assets

6-8 Creation of Text Content Blocks

6-9 Examples of Filter Blocks

6-10 List of Creators

6-11 Recording Voice

6-12 Example of a Recast EDL

6-13 Timeline With Content Blocks

6-14 Preview of Final Video

6-15 Media Matrix

7-1 Processing Times of Analysis Modules in GLUE

7-2 Comparison of the Task Completion Time

7-3 Comparison of User Ratings

List of Tables

5.1 List of Recorded Channels

5.2 List of Analysis Modules in GLUE

5.3 Schema of Solr Core

5.4 List of Endpoints Within the Query API

6.1 List of Filters

Chapter 1

Introduction

This chapter discusses the motivation and the contributions of this thesis, as well as its overall structure.

1.1 Motivation

Ever since commercial television broadcasting started in the mid-20th century, visual media has been one of the main sources for us to acquire information and understand various events that occur throughout the globe. Many historical events, such as the collapse of the World Trade Center, have been visually documented with motion pictures and disseminated simultaneously to the entire world, as shown in Figure 1-1. There is no doubt about how powerful and influential visual media is, as it has the ability to deliver reality and immersive experiences to a mass audience in remote locations.

In the late 20th century, the Internet was introduced and revolutionized how we interact with visual information and media in general. The Internet has enabled visual media to become a means of expression, rather than simple consumption. Thanks to the Internet and its related technologies, we can easily make use of visual media to report what we see, create stories, and present our thoughts and perspectives to the entire world in real-time. For instance, Figure 1-2 shows a scene where a huge crowd is documenting and sharing the announcement of Pope Francis's election at St. Peter's Square in March 2013.

Figure 1-1: Live Coverage of the 9/11 Terror Attacks

(Source: BBC)

Figure 1-2: The Announcement of Pope Francis's Election

(Source: AP)

Given the context of visual media and self-expression, the motivation of this thesis is to rethink the way we can use visual media to express ourselves. Although there are many existing technologies and services we can utilize to express ourselves to the general public, there is still room for making the process simpler, more intuitive, and more accessible.

1.2 Contributions

To play a role toward filling the white spaces in the area of visual media and self-expression, this thesis focuses on the design of Recast, which is a system that enables anyone to potentially become a newsroom of their own. The main contributions of this thesis include the design and implementation of the following frameworks:

* A novel system for collectively gathering and indexing visual media content

* A new block-based visual programming language for producing remixed video storyboards in a semi-automated manner

1.3 Overview of Thesis

Chapter 2 first discusses the background, covering the cultural history of visual

media and its utilization toward self-expression. It then covers the purpose and

the goals of this thesis.

Chapter 3 covers some of the prior work in the field of media analysis, media

curation, and media distribution. Given the discussion of prior work, Chapter 4

presents the approach toward creating Recast, and discusses its system design.

Chapter 5 mainly discusses the details of the Media Analysis System. It covers

the technical details and implementations of the back-end analysis engine that

drives Recast, as well as other applications.

Chapter 6 describes the details of the Recast UI, which is the front-end user

interface of Recast. It covers the user interactions around Recast, as well as the

process of how remixed news storyboards are constructed from original materials.

Finally, Chapter 7 discusses the evaluation of Recast and Chapter 8 states the

conclusion of this thesis.

Chapter 2

Background and Purpose

This chapter describes the background of this thesis, mainly covering the culture and history of visual media and self-expression. Following the background,

this chapter also describes the purpose and goal of this thesis.

2.1 Utilizing Visual Media Toward Self Expression

The majority of visual media that we interact with are produced and distributed by major content creators such as CNN or BBC. In general, the facts that we see, hear, and learn from video content are likely to be biased by the voices and thoughts of the content creators. On the other hand, the act of creating and disseminating personal media content has become extremely easy, thanks to the advancement of Internet-based technologies and media platforms. For instance, one can easily create a video and post it on YouTube [1], or share live moments in real-time with Vine [2], as shown in Figure 2-1. However, despite the fact that Internet-based media platforms for sharing user-generated content are increasing in importance, the influence of old-school content creators and broadcasters remains very strong.

At the same time, there are considerably large groups of proactive citizens who develop and enjoy the culture of vidding [3]. Vidding refers to the practice of collecting raw materials and footage from the existing corpus of visual media, and creating remixed content based on the users' thoughts, perspectives, and creativity. The official history of vidding dates back to 1975, but its general popularity has expanded drastically with the introduction of Internet-based video sharing platforms.

Figure 2-1: Vine

(Source: Mashable)

The process of vidding is extremely meaningful in terms of fostering creativity and awareness. For instance, some may attempt to increase political awareness with Political Remix Videos [4]. A Political Remix Video refers to a genre of transformative do-it-yourself media production, whereby creators critique power structures, deconstruct social myths, and challenge dominant media messages through re-cutting and re-framing fragments of mainstream media and popular culture. As an example, the "Moms & Tiaras" [5] remix by Angelisa Candler combines selected clips from the show "Toddlers & Tiaras" to shift the focus away from the children and onto their parents. By completely removing footage of the toddlers, Candler presents a re-imagined reality show called "Moms & Tiaras" that is critical of the questionable and sometimes deeply troubling behavior of the adults behind prepubescent beauty pageant contestants.

Not all remixed videos are related to politics. For instance, some enjoy creating Supercuts [6], as shown in Figure 2-2. A Supercut refers to a fast-paced montage of short video clips that obsessively isolates a single element from its source, usually a word, phrase, or cliche from film and television. As an example, "My Little Pony: Friendship is Magic in a nutshell" [7] aggregates, in a comical way, the segments from the show "My Little Pony" that mention the word "friend".

Figure 2-2: List of Supercuts

(Source: Supercut.org)

2.2 Video Production and Editing

In the field of visual media, video production and editing is one aspect that cannot be overlooked, regardless of type or genre. This section describes the history of video editing, as well as its high-level workflow and its issues.

2.2.1 History of Video Production and Editing

The history of video editing dates back to 1903, when Edwin S. Porter first introduced the crosscutting technique [8]. The first motion picture to utilize the crosscutting technique was "The Great Train Robbery", where 20 individually-shot scenes were combined to create one single sequence. Since then, linear video editing, which refers to the act of cutting, selecting, arranging, and modifying images and sound in a predetermined, ordered sequence, became popular.

The production of visual media evolved drastically in 1971 when CMX Systems introduced the CMX 600, which was the first non-linear video editing system [9]. Unlike linear video editing techniques, which require going through films or videotapes sequentially to find the correct segment, non-linear video editing allows random access within a stored, electronic copy of the material. The CMX 600, as shown in Figure 2-3, had the capability of digitally storing 30 minutes' worth of black-and-white video content. It was also equipped with a light pen interface for making cuts and reordering scenes. Although the CMX 600 had many limitations, it was the first-ever computerized non-linear video editing system that became commercially available.

Figure 2-3: CMX 600

(Source: The Motion Picture Editors Guild)

Thanks to the advancement of technology, there are now many video editing and production platforms that run on personal consumer appliances. For instance, Apple bundles iMovie [10] with its Mac OS X operating system, and even provides mobile versions for iPads and iPhones. Apart from native applications, Popcorn Maker [11], as shown in Figure 2-4, presents a video editing application that runs completely within a web browser.

Figure 2-4: Popcorn Maker

2.2.2 Workflow of Video Production and Editing

The workflow of video production and editing has remained conceptually the same for a number of decades. The following steps outline the process at a high level.

1. Raw footage is collected, recorded, or filmed

2. Footage is cut into segments

3. An edit decision list (EDL) is constructed

4. Based on the EDL, segments are reordered and connected into a sequence

5. The final sequence is compiled and published

An EDL refers to a list that defines which cuts or assets from the original repository are to be used in the final sequence. It may also define how sound tracks or other graphical elements are overlaid on the main sequence. Regardless of the scope or scale of video production, this process remains more or less the same.
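To make the role of the EDL in this workflow concrete, the following is a minimal sketch in Python. It is not the Recast EDL format described in Chapter 6; the `Cut` class, its field names, the `assemble` function, and the file names are all hypothetical and chosen only for illustration. Each entry selects a time range from a source asset, and assembling the list lays the selected segments end to end on the output timeline.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Cut:
    """One EDL entry: a time range taken from a source asset (hypothetical schema)."""
    source: str   # identifier of the original asset
    start: float  # in-point within the source, in seconds
    end: float    # out-point within the source, in seconds

    @property
    def duration(self) -> float:
        return self.end - self.start


def assemble(edl: list[Cut]) -> list[tuple[float, float, Cut]]:
    """Lay the cuts end to end; return (timeline_start, timeline_end, cut) rows."""
    timeline = []
    position = 0.0
    for cut in edl:
        if cut.end <= cut.start:
            raise ValueError(f"cut has non-positive duration: {cut}")
        timeline.append((position, position + cut.duration, cut))
        position += cut.duration
    return timeline


# The order of the list, not the order of the source material, defines the sequence.
edl = [
    Cut("interview.mp4", 12.0, 20.0),
    Cut("broll.mp4", 3.5, 6.0),
    Cut("interview.mp4", 45.0, 50.0),
]
timeline = assemble(edl)
total_duration = timeline[-1][1]
```

Because the EDL only references the original assets, reordering or trimming a sequence means editing the list rather than the footage itself.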

In personal video production scenarios, the process is simplified to a certain

extent. However, in professional scenarios, the process is divided into several layers to maintain the quality and speed of its outcome. For example, in the case of

a television newsroom, there are teams of highly trained experts working together

behind the scenes to deliver high-quality content in a timely fashion. Figure 2-5

shows a few photographs taken during a visit to the NBC News studio in New York

City. Every step towards producing a news program is being handled by a team

of professionals around the globe, working side-by-side with a suite of complex

equipment.

Figure 2-5: NBC News Studio in New York City

2.2.3 Issues Around Video Production and Self Expression

Although the process of video production and editing has become less tedious, the required workflow toward expressing oneself remains non-trivial. Most of the user interfaces and interactions around current video production tools inherit the idea of segmenting, sorting, and reordering the original assets on a linear timeline. While such user interfaces may be intuitive for skilled, experienced users, they are still cumbersome for ordinary users. In terms of vidding, users do not have a sophisticated way to explore, sort, and understand all of the media content that is available.

2.3 Purpose

Given the background, this thesis proposes a platform called Recast. As stated in the previous chapter, the purpose of Recast is to design an intuitive framework for proactive citizens who want to become a newsroom of their own. The framework of Recast can also be utilized for other use cases, such as creating a personalized version of "The Daily Show", where users assemble clips to make a political point or parody.

For the scope of this thesis, Recast aims to simplify and personalize the functions of traditional newsrooms and production studios. Its key goals are to design

systems and interfaces that enable users to effectively navigate through the universe of visual media that represent real-world events, create storyboards based

on their own perspectives and contexts, and distribute their views to the world.

To achieve the goals of Recast, this thesis focuses on the design and implementation of a system for real-time visual media analysis (Media Analysis System), and a user interface for semi-automated media aggregation and curation (Recast UI). The Media Analysis System gathers visual media content from multiple sources, and annotates it based on various contexts. The Recast UI features an easy-to-use visual scripting language to semi-automate the process with data-driven intelligence.

The visual scripting interface for media aggregation and curation can also be utilized for other means of self-expression. For instance, one can use it to create personalized versions of real-time sports live casts. Others may use it for remixing personal archived material of family vacations. Although the utilization of Recast in various domains can be an interesting area to explore, this thesis focuses primarily on news and synchronous real-world events.

Chapter 3

Related Work

One of the key elements toward achieving the goals of Recast is the extraction and indexing of metadata, which is essential in navigating through the world of media. However, unlike text-based content, which is comparatively easy and low-cost to analyze and index, visual media is difficult to understand, process, and distribute, since it fundamentally requires intensive computation and bandwidth. This chapter discusses some of the prior work in terms of extracting metadata, aggregating and curating materials, and distributing content over the Internet.

3.1 Media Analysis

Toward gaining a better understanding of visual media, the computational extraction of metadata from visual media has always been an active field of research. In this field, many researchers have proposed various algorithms and systems that contribute to the goal of extracting metadata.

One of the trends amongst existing work is the analysis of text-based metadata that is associated with visual media. For instance, the Weighted Voting Method [12] proposes a statistical approach of automatically extracting feature vectors from the transcript (closed captioning), and categorizing the clips based on their topics. The ContextController [13] presents a method to extract entities from video transcripts, and display contextual information associated with the entities in real-time. Apart from research projects, commercial services such as Beamly [14] and Boxfish [15] provide APIs to access analyzed transcripts of broadcast television. Figure 3-1 shows an example of how Boxfish visualizes trending topics extracted from television programs.

Figure 3-1: Visualization of Trending Topics on Broadcast Television

(Source: Boxfish)

Other trends in the field include the analysis of pixels, frames, and audio tracks within video content. For instance, Expanding Window [16] proposes an effective approach to extract scenes from video clips with computer vision and pseudo-object-based shot correlation analysis. Audio Keywords [17] proposes a method that extracts features from the audio track to detect events within a live soccer game.

There are also methods that look into user-centric approaches to analyze the content of videos. For instance, MoVi [18] presents a method to apply the concept of collaborative sensing for detecting highlight scenes of an event. Ma et al. (2002) [19] focus on building a model of the users' attention level to determine important scenes within video clips, without a semantic understanding of the clip.

3.2 Media Aggregation and Curation

After extracting metadata, some of the challenges are to properly categorize, segment, annotate, index, and curate video content. To address these challenges, researchers have proposed various methods that combine different media analysis techniques.

For instance, systems such as Personalcasting [20], Informedia [21], Pictorial Transcripts [22], and Video Scout [23] present frameworks to analyze video clips and create content-based video databases. These systems allow applications to query scenes or clips based on topic keywords or categories. As a unique scheme for intuitively retrieving and annotating content, Media Stream [24] proposes a visual scripting interface that utilizes iconic figures. CLUENET [25] proposes a framework to aggregate and cluster semantically similar video clips that flow through the universe of social networks. Media Cloud [26], on the other hand, provides an open platform to index and aggregate text-based metadata of media content from arbitrary sources. Figure 3-2 shows a word cloud visualization of trending topics that appeared in mainstream media through the week of May 20th, 2013.

In terms of media curation, there are mainly two concepts that underlie existing projects and services. The first concept is to personalize the media consumption experience based on the users' interests or perspectives. For instance, NewsPeek [27] has looked into ways of examining the users' viewing habits of nightly television news, and providing a personalized news consumption experience. More recent services such as Flipboard [28] provide platforms that aggregate and personalize the way we enjoy Internet-based news media. The second concept is to filter out irrelevant, false, or low-quality content from a large corpus. For example, CrisisTracker [29] focuses on crowd-sourced media curation, and presents a method to automatically generate relevant and meaningful situation awareness reports from raw social network feeds that contain false or misleading posts.

Figure 3-2: Word Cloud of Trending Topics on Mainstream Media

(Source: Media Cloud)

3.3 Internet-based Media Distribution

The distribution of visual media content has been radically transformed by the rise of the Internet, due to the rapid growth of platforms like YouTube. Netflix [30] and other related platforms have overtaken the roles of DVD rental shops, and provide users with on-demand access to movies and TV programs over the Internet. Apart from static media distribution, there are platforms such as Ustream [31] that enable any user to become a live media broadcaster, and present themselves to the world in real-time. Figure 3-3 shows a list of publicly available live broadcasts on Ustream.

As for the technologies for Internet-based media distribution and broadcasting, many standard protocols, coding schemes, and communication technologies have been introduced ever since the dawn of the Internet. For instance, RealPlayer [32] was a major contributor in terms of video streaming. Currently, there is a strong trend toward embedding the functions of visual media distribution within web pages and web browsers using HTML5 [33] and the HTTP Live Streaming protocol [34]. While these methods may not be the optimal solution in terms of technical efficiency, the commoditization of high-bandwidth Internet connectivity and the extensive abilities of web browsers make it possible to implement such functionalities within simple web pages that don't rely on any third-party software.

Figure 3-3: Ustream

33

34

Chapter 4

Approach

Given the review of existing methods and technologies, this chapter describes

the approach toward building Recast, which consists of the design and implementation of the Media Analysis System and the Recast UI. The Media Analysis System aims to build an archived corpus of visual media, and extract metadata that

gives a better understanding of the frames that underlie each piece of content. The Recast UI, on the other hand, aims to provide a simple visual scripting

language for semi-automating the process of media aggregation and curation.

4.1 Media Analysis System

The Media Analysis System aims to build a corpus of visual media that is indexed based on frame-level metadata. Frame-level metadata refers to information

that provides deep insights into the meaning of the content.

4.1.1 System Concept

On the World Wide Web, there are basic semantic markers that make it possible to extract information and order from hypertext documents. The "a" tag,

for example, explicitly maps out connections between various web pages, and

becomes a useful tool for analysis in applications such as Google's PageRank [35].

Other HTML tags such as the header tags and emphasis tags (e.g. "bold", "italic",

etc.) provide markers for differentiating the importance of content within a document.

These structures embedded within the hypertext facilitate the creation of useful

information retrieval applications.

Video content, however, is typically a sequence of pixels and sound waves that

do not have a unified structure for easily extracting semantic data and relationships. Therefore, the Media Analysis System attempts to decompose the video container, and run various analyses over each individual component to computationally extract information that defines the context of the original content. Figure 4-1 indicates the concept of extracting metadata, and annotating scenes with tags that give insight into their context.

Figure 4-1: Concept of Tagging Scenes With Metadata

4.1.2 System Overview

Figure 4-2 indicates a high-level design of the system. The system is composed

of three individual parts: the Media Acquisition Framework, the extensible media

analysis framework called GLUE, and the Media Database.

Figure 4-2: High-level Design of The Media Analysis System (diagram labels: Media Acquisition Framework, YouTube, Crawler, Raw Video Clips, File Storage, Process Request, GLUE Framework, Metadata, and the example query 'Get scenes that mention "Syria"')

The Media Acquisition Framework is a family of content retrievers that includes a

cloud-based DVR, web video crawlers, and a user upload receiver. Each recorder,

crawler, or receiver is a self-contained process. Its role is to collect and store

raw video clips from the specified source. As of April 2014, the framework is simultaneously capturing news content from 10 nation-level TV channels, YouTube,

and the TV News Archive [36], as well as accepting user-uploaded media.

After video clips are collected and saved to file storage, each recorder or crawler passes a message to GLUE to initiate the analysis. GLUE is an extensible, modular media analysis engine that was designed and implemented in collaboration with Robert Hemsley and Jonathan Speiser. It is responsible for taking in video files and extracting the following types of metadata by analyzing the video frames, sound track, and transcript:

* Scene cuts within the clip

* Human faces

* Static thumbnail images

* Phrases (mentions) in the transcript

* Named entities (people, organizations, locations, etc.)

* Emotional status of the speakers

Upon completion of the analysis, GLUE passes the metadata onto the Media

Database. The Media Database stores and indexes the metadata for video clips

that ran through GLUE. The Media Database also exposes an extensible RESTful

API that allows any application to issue queries. By using this API, applications

can access video clips based on various factors. For instance, applications can query the system for all the scenes that mention "President Obama" in which two people are having an angry conversation.

4.2 Recast UI

To provide an intuitive interface for users to curate content in a semi-automated fashion, the Recast UI inherits the paradigm of visual programming languages, such as Scratch.

4.2.1 Concept of Interaction

As previously mentioned, the goal of Recast is to allow novice users to become

their own newsroom. However, the process of curating raw materials and

creating meaningful storyboards is a non-trivial task that involves a large team of

professional directors, producers, reporters, designers, and technicians. Therefore,

the main challenge of Recast is to design an intuitive, data-driven user interface

that can semi-automate the workflow of content curation and media production.

Typically, automating workflows involves some kind of computer programming

or scripting. The Recast UI must therefore provide some form of scripting language that users can use to define and automate the selection of relevant

scenes from desired sources. It should also have smart ways to assist the user in

narrowing the scope or topic. Scripting languages may be full-featured programming languages, but such languages tend to be counterintuitive, especially for

individuals who do not have prior experience with coding.

In order to make the process of scripting intuitive and easy to use for everyone,

the Recast UI adopts the concept of visual programming languages, similar to

those found in Scratch [37] or VisionBlocks [38]. In the same way kids can combine

blocks in Scratch to create action scripts for animating game characters, users of

Recast can create their own news storyboards by manipulating blocks. In terms

of using visual elements for querying content, Recast UI also inherits some of the

dynamics proposed in Media Stream [24].

4.2.2 Overview of UI

Figure 4-3 indicates how content blocks (primitives that define bundles of content assets) are combined with filter blocks (primitives that define the scope of

curation). Based on the given scope, each content block automatically retrieves

content that is relevant to the context. After the curation process, users may drag

the blocks into the timeline. This timeline represents a personalized EDL, which includes all the original assets that were selected. Based on the EDL, Recast renders

all the content into a single video sequence.

The Recast UI is designed to be used on devices that have touch-enabled displays. In terms of manipulating virtual blocks, touch-based interfaces can increase the tangibility of elements and improve the usability. The Recast UI is also intended to be a web application that runs within a standard web browser, and a test bed for experimenting with new web-based technologies. Amongst various browsers, the desktop version of Google Chrome [39] was selected as the platform for running the Recast UI, since it is the most sophisticated browser in terms of supporting new technologies and standards in a stable way.

Figure 4-3: Overview Design of the Recast UI

Chapter 5

Media Analysis System

The Media Analysis System was designed and implemented in collaboration

with Jonathan Speiser and Robert Hemsley. This system serves as a unified backend data engine that drives not only Recast, but also several other applications

and demonstrations.

This chapter mainly covers the technical design and implementation of the

three main components that comprise the Media Analysis System. It also discusses the details of Constellation, which is an interactive installation that visualizes

the analysis results.

5.1 Media Acquisition Framework

As described in the previous chapter, the Media Acquisition Framework is a

family of content retrievers, which acquire the original content and pass it

on to GLUE for analysis. Each piece of content acquired by this framework is given

a unique global identifier known as the UMID (Unique Media ID). As of April 2014,

the Media Acquisition Framework consists of a cloud-based DVR, two web video

crawlers, and a user upload receiver.

This framework is designed to be modular, extensible, and scalable. Every

single recorder or crawler is a stand-alone process that can run independently on

any node across the network, and all of the retrieved assets are served externally


using nginx [40], which is a sophisticated web server. Therefore, it is extremely easy

to add new types of retrievers, and distribute processes across multiple machines

on the network. The remainder of this section describes the details of the three

retrievers that were initially implemented.

5.1.1 Cloud-based DVR

At the MIT Media Lab, there is an in-house satellite head end that is capable of translating DirecTV [41] channel feeds into multicast UDP streams. The cloud-based DVR is a process that records television programs from DirecTV by capturing and transcoding the UDP/IP streams served by the head end.

The cloud-based DVR is a stand-alone daemon written in Python that periodically retrieves the program guide and simultaneously records individual programs from the 10 nation-level channels listed in Table 5.1. The program guide used by the DVR is provided by Tribune Media Services (TMS) [42]. TMS exposes web-based APIs that third-party applications can use to access the television program guide. Based on the program guide, the DVR forks FFmpeg processes for recording the actual programs. After each program is successfully recorded, the DVR sends a process request to GLUE via HTTP.

Table 5.1: List of Recorded Channels

Channel Name         Channel Number
PBS (WGBH)           002
ABC (WCVB)           005
FOX (WFXT)           025
BBC America          264
Discovery Channel    278

FFmpeg [43] is a cross-platform tool that can capture UDP streams, and transcode videos to various formats. Each FFmpeg instance forked by the DVR is responsible for recording a single program and transcoding it into an HTML5-compatible format. As of April 2014, the video formats supported by HTML5 are still fragmented across browsers. For the scope of this thesis, FFmpeg was configured to use H.264 [44] for the video, and AAC [45] for the audio, since these codecs are compatible with Chrome. FFmpeg is also configured to create low-resolution (640 x 360 pixels, 15 FPS) versions of the video for analysis purposes. Apart from FFmpeg, a tool called CCExtractor was also used to extract the transcript (closed captioning) as a SubRip [46] subtitle (SRT) file from the original video source.
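As a rough illustration of the recording step, the following Python sketch assembles the FFmpeg arguments for one program. It is only a sketch under stated assumptions: the stream address, the output path, and the helper names `build_record_command` and `record` are hypothetical, and the thesis does not list the exact flags that were used. Only the codecs (H.264 and AAC) and the low-resolution profile (640 x 360 pixels, 15 FPS) are taken from the text.

```python
import subprocess


def build_record_command(stream_url, duration_sec, out_path, low_res=False):
    """Build an FFmpeg argument list that records one program.

    stream_url, out_path, and this helper are hypothetical; only the codecs
    and the low-resolution profile come from the text.
    """
    cmd = [
        "ffmpeg",
        "-i", stream_url,          # e.g. a multicast UDP stream from the head end
        "-t", str(duration_sec),   # stop after the scheduled program length
        "-c:v", "libx264",         # H.264 video
        "-c:a", "aac",             # AAC audio
    ]
    if low_res:
        cmd += ["-s", "640x360", "-r", "15"]  # copy used for analysis
    cmd.append(out_path)
    return cmd


def record(stream_url, duration_sec, out_path):
    """Fork one FFmpeg process per program (requires FFmpeg on the PATH)."""
    return subprocess.Popen(build_record_command(stream_url, duration_sec, out_path))


if __name__ == "__main__":
    print(" ".join(build_record_command(
        "udp://239.0.0.1:1234", 3600, "SH000000210000_low.mp4", low_res=True)))
```

Keeping the command construction separate from the process fork makes it possible to inspect the arguments without a live stream.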

The DVR is also equipped with a feature for dynamically configuring the context

of programs to record. For the scope of this thesis, the DVR was configured to only

record programs that are related to news, based on the TMS program guide.

5.1.2 Web Video Crawler

In addition to the DVR, the Media Acquisition Framework also includes web

video crawlers that can scour and retrieve videos found on the World Wide Web.

The crawlers are stand-alone scripts based on a Python framework called Scrapy [47]. The scripts run periodically, and recursively follow web links to find videos. For the scope of this thesis, two types of crawlers were implemented: a YouTube crawler and a TV News Archive [36] crawler.

Crawlers look for video content related to news within their service domain. After a crawler discovers video content, it passes the URL to a message queue server based on RabbitMQ [48]. The message queue server then dispatches a process, which downloads the content, transcodes the video to an HTML5-compatible format, and generates a low-resolution version. If a transcript is available within the video, it is extracted and stored as an SRT file. After all the processing is completed, the process sends a process request to GLUE via HTTP.

5.1.3 User Upload Receiver

The user upload receiver is a simple web server based on Node.js [49] and

Express [50]. After it accepts file uploads from users, it saves the file onto the disk,

and examines the file format.

If the file is a typical video file, the receiver transcodes the video into an HTML5-compatible format, and creates a low-resolution version. If the file is a disk image of

a video DVD, the receiver extracts the video and applies the necessary processing.

If a subtitle track is present within the uploaded video, the receiver also extracts

the transcript into an SRT file. After everything is completed, the receiver sends a

process request to GLUE via HTTP.

The receiver also accepts IDs of YouTube videos as an input. When YouTube

video IDs are received, they are passed to the same message queue server that

handles input from the YouTube crawler and processed accordingly.

5.2 GLUE

GLUE is the extensible media analysis framework that extracts frame-level metadata from arbitrary video sources. Once GLUE receives a process request from the

Media Acquisition Framework through its web-based API, it retrieves the raw content from the file server, and conducts various analyses on the transcript, the video

frames, and the sound track. As shown in Figure 5-1, GLUE expects process requests to be in JSON format, and have fields such as a unique ID, a title, and URLs

of the video content. As previously indicated, process requests are sent from the

Media Acquisition Framework to GLUE as part of HTTP POST requests.
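To make the shape of a process request concrete, the sketch below builds one in Python and wraps it in an HTTP POST. The field names follow those visible in Figure 5-1, but the endpoint URL and the helper names `build_process_request` and `make_post` are assumptions made for illustration, not the actual client code of the Media Acquisition Framework.

```python
import json
import urllib.request


def build_process_request(umid, title, cc_url, low_url, length_sec):
    """Assemble a process request using field names visible in Figure 5-1."""
    return {
        "id": umid,
        "title": title,
        "cc": cc_url,                 # URL of the SRT transcript
        "media": {"low": low_url},    # URL of the low-resolution video
        "length": length_sec,
    }


def make_post(glue_url, request_body):
    """Wrap the request in an HTTP POST (glue_url is a hypothetical endpoint)."""
    data = json.dumps(request_body).encode("utf-8")
    return urllib.request.Request(
        glue_url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    body = build_process_request(
        "SH000000210000", "20/20",
        "http://url/to/SH000000210000.srt",
        "http://url/to/SH000000210000_low.mp4",
        3540.0,
    )
    req = make_post("http://glue.example/process", body)
    # urllib.request.urlopen(req) would send it; omitted so the sketch runs offline.
    print(req.get_method(), req.full_url)
```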

    {
      "id": "SH000000210000",
      "title": "20/20",
      "cc": "http://url/to/SH000000210000.srt",
      "media": {
        ...
        "low": "http://url/to/SH000000210000_low.mp4"
      },
      ...
      "length": 3540.0,
      "startTime": "2014-03-22T02:01Z",
      "endTime": "2014-03-22T03:00Z",
      "channel": "005"
    }

Figure 5-1: Example of a GLUE Process Request

From a technical viewpoint, GLUE is a message queue manager written in Python, which assigns processing tasks to stand-alone analysis modules, and aggregates the results into a unified JSON data structure. It makes use of the standard multiprocessing framework included within Python for implementing the message queue. For exposing the web-based API, it makes use of Twisted [51], which is an event-driven networking framework for Python. The analysis modules are designed to run in parallel, and extract the metadata from the given transcript or video. Since analysis modules are implemented as independent Python modules that are dynamically imported, it is straightforward to add new modules that conduct new types of analysis. After the analysis results are returned from all of the modules, GLUE stores the aggregated metadata into the Media Database by utilizing a driver called PyMongo [52].
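The dispatch-and-aggregate pattern described above can be sketched in a few lines of Python. This is not GLUE's actual code: the two stand-in modules, the `MODULES` registry, and the `analyze` function are hypothetical, and a thread pool from the `multiprocessing` package is swapped in for separate worker processes so that the example stays short and portable.

```python
from multiprocessing.pool import ThreadPool


# Two stand-in analysis modules. In GLUE these would be separate Python
# modules loaded dynamically (for example with importlib.import_module).
def transcript_module(request):
    return {"transcript": "parsed " + request["cc"]}


def scene_module(request):
    return {"scenes": [{"start": 0.0, "end": request["length"]}]}


MODULES = {"transcript": transcript_module, "scene": scene_module}


def analyze(request, modules=MODULES):
    """Run every module on the request in parallel and merge the results."""
    names = list(modules)
    with ThreadPool(len(names)) as pool:
        results = pool.map(lambda name: modules[name](request), names)
    merged = {"id": request["id"]}   # one unified structure per piece of content
    for result in results:
        merged.update(result)
    return merged


if __name__ == "__main__":
    print(analyze({"id": "SH000000210000", "cc": "a.srt", "length": 3540.0}))
```

Because each module only receives the request and returns a dictionary, adding a new type of analysis amounts to registering one more function.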

Table 5.2 indicates a list of all the modules that are currently implemented. The

remainder of this section covers the details of each analysis module.

Table 5.2: List of Analysis Modules in GLUE

Module Name    Module Description

5.2.1 Download Module

The download module handles the responsibility of downloading the low-resolution

version of the original video content. All the analysis modules, except for the transcript module, rely on the video file downloaded by this module.

GLUE downloads the video file into a temporary directory of its own, instead

of having the analysis modules directly access the video through a shared file system. After the file is successfully downloaded, this module sends a notification

to the central message queue manager, which then dispatches analysis requests

to other modules that rely on the video file. From a performance perspective, retrieving files through a web server incurs significant overhead. However, this architecture enables multiple instances of GLUE to exist and operate independently

anywhere on the Internet, without the need of a shared file system that could cause

constraints in terms of deployment.

5.2.2 Transcript Module

The transcript module handles the role of parsing the content of the SRT file for

any process request that contains a transcript. It also conducts natural language

processing (NLP) over the transcript to extract any useful metadata that describes

the context of the video.

Since the process request only contains a URL to the actual SRT file, the transcript module starts the process by downloading the content of the SRT into a

string variable. As shown in Figure 5-2, an SRT file lists the spoken phrases along

with time-offset values. Time-offset values indicate the segment of the video in which the corresponding phrase was mentioned. These values are relative to the beginning of the video, and indicate the start time and the end time of a segment.

Given an SRT file, this module parses each line and creates an array of nested

objects, which contain the actual phrase, its start time, and its end time. Figure 5-3

indicates an example of an object that represents a phrase mention. It also creates

a separate field that contains the entire transcript as a concatenated string.

Figure 5-2: Snippet of an SRT File

    {
      "start": 0,
      "end": 8.073,
      "result": "THEN WE RIDE ALONG WITH A GROUP OF BOUNTY HUNTERS THAT ARE SURE TO CATCH YOU BY SURPRISE."
    }

Figure 5-3: Example of an Object Representing a Phrase Mention
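The parsing step can be illustrated with a minimal Python sketch. It assumes the common SRT layout (a cue number, a timing line of the form `HH:MM:SS,mmm --> HH:MM:SS,mmm`, then one or more text lines, with cues separated by blank lines). The function names are hypothetical, and the output keys mirror the object in Figure 5-3.

```python
import re

# Matches an SRT timing line such as "00:00:08,073 --> 00:00:12,500".
TIMING = re.compile(
    r"(\d+):(\d+):(\d+)[,.](\d+)\s*-->\s*(\d+):(\d+):(\d+)[,.](\d+)")


def to_seconds(h, m, s, ms):
    """Convert hour/minute/second/millisecond strings into seconds."""
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000.0


def parse_srt(text):
    """Return (mentions, transcript) for the contents of an SRT file."""
    mentions = []
    # SRT cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", text.strip()):
        lines = [line.strip() for line in block.splitlines() if line.strip()]
        for i, line in enumerate(lines):
            match = TIMING.match(line)
            if match:
                groups = match.groups()
                mentions.append({
                    "start": to_seconds(*groups[:4]),
                    "end": to_seconds(*groups[4:]),
                    "result": " ".join(lines[i + 1:]),  # phrase text of the cue
                })
                break
    # Separate field holding the entire transcript as one string.
    transcript = " ".join(m["result"] for m in mentions)
    return mentions, transcript
```

The offsets are kept in seconds relative to the beginning of the video, which matches the start and end values used for scenes elsewhere in the system.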

After the module parses the content of the SRT file, it sends the transcript to

AlchemyAPI [53] and OpenCalais [54], which are cloud-based NLP toolkits. This

module uses these toolkits to extract named entities and social tags that appear

within the transcript. Named entities refer to phrases that indicate specific entities

such as geographic locations, organizations, or individual people. Social tags, on

the other hand, refer to phrases that indicate specific real-world events or contexts.

Both AlchemyAPI and OpenCalais provide web-based APIs for receiving text data

and returning analysis results. The results from these NLP toolkits are combined

with the original parse results, and sent back to the central message queue.

5.2.3 Thumbnail Module

The thumbnail module handles the task of generating thumbnail images of the

video. Thumbnail assets include a poster image (a dump of a randomly selected

frame) of the video, minute-by-minute frame dumps of the video, and an animated

GIF that summarizes the video.

Both the poster image and the minute-by-minute frame dumps are generated by utilizing OpenCV [55], which is an open-source computer vision library that is capable of seeking through a video and dumping a frame into an image file. Figure 5-4 indicates an example of minute-by-minute frame dumps. For videos that are shorter than two minutes (120 seconds), frame dumps are generated every 6 seconds instead. The GIF summary is created by concatenating the frame dumps into a single animated sequence, which is handled by FFmpeg.

Figure 5-4: Example of Thumbnail Images

All of the assets created by this module are stored within a directory that is exposed externally by nginx. After the entire thumbnail generation process is completed, this module returns a list of URLs that point to the assets.
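The sampling rule for frame dumps can be written down as a small Python function. This is a sketch only: the function name is hypothetical, and starting at offset 0 is an assumption, since the text gives the intervals but not the first offset. With OpenCV, each offset could then be used to seek the video (for instance through `cv2.VideoCapture` and `CAP_PROP_POS_MSEC`) before reading and saving the frame.

```python
def frame_dump_times(duration_sec):
    """Return the offsets (in seconds) at which frames would be dumped.

    Videos shorter than 120 seconds are sampled every 6 seconds, all others
    every 60 seconds. Starting at offset 0 is an assumption of this sketch.
    """
    interval = 6 if duration_sec < 120 else 60
    times = []
    t = 0
    while t < duration_sec:
        times.append(t)
        t += interval
    return times


if __name__ == "__main__":
    print(frame_dump_times(30))    # short clip: one dump every 6 seconds
    print(frame_dump_times(3540))  # full program: one dump per minute
```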

5.2.4 Scene Module

The scene module handles the responsibility of detecting scene cuts within a

video. It generates and returns a list of nested objects, which include the start

time, the end time, and URLs that point to thumbnail images of the scene segment.

The start time and end time are timestamps relative to the beginning of the video.

The thumbnails are frame dumps of the first and last frames of the scene.


For detecting the scenes, this module uses a method similar to the one proposed in Expanding Window [16]. As shown in Figure 5-5, scene cuts often lead

to a drastic change in the color distribution of consecutive video frames. As a result, scene cuts may be computationally identified by thresholding the Euclidean

distance between the color histograms of two consecutive frames. In order to generate color histograms and calculate Euclidean distances, this module makes use

of OpenCV. In the same way the thumbnail module generates images of frame

dumps, this module also creates images from the first and last frames of each

scene, and saves them under a folder that is served by nginx.

Figure 5-5: Example of Scene Cuts
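The thresholding idea can be shown with a dependency-free Python sketch. The scene module itself relies on OpenCV for histograms and distances; here frames are simplified to flat lists of 0-255 intensity values, and the bin count, the threshold of 0.5, and all function names are assumptions chosen for illustration.

```python
import math


def histogram(frame, bins=8):
    """Normalized histogram of a frame given as a flat list of 0-255 values."""
    counts = [0] * bins
    for value in frame:
        counts[value * bins // 256] += 1
    total = float(len(frame)) or 1.0
    return [c / total for c in counts]


def euclidean(h1, h2):
    """Euclidean distance between two histograms of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(h1, h2)))


def detect_cuts(frames, threshold=0.5):
    """Return indices i where a cut occurs between frame i-1 and frame i."""
    cuts = []
    previous = None
    for i, frame in enumerate(frames):
        current = histogram(frame)
        if previous is not None and euclidean(previous, current) > threshold:
            cuts.append(i)
        previous = current
    return cuts


if __name__ == "__main__":
    dark, bright = [10] * 100, [240] * 100
    print(detect_cuts([dark, dark, bright, bright, dark]))
```

Normalizing the histograms keeps the threshold independent of the frame resolution. A real implementation would typically compare per-channel color histograms rather than a single intensity histogram.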

5.2.5 Face Module

The face module handles the role of detecting faces that appear within a video.

This module identifies the segments within a video that contain human faces, and

returns a list of nested objects that indicate the start time and the end time of each

segment, along with the number of faces it detected.

For detecting the faces, this module also utilizes OpenCV, which can apply cascade classification [56] over the frames. Figure 5-6 indicates an example of a face detected within a video frame. Although this module can detect faces that appear, it cannot recognize the identity of the individuals. That being said, it is technically feasible to apply machine-learning techniques for cross-referencing the detected faces against a training data set of known individuals to recognize their identity.

Figure 5-6: Example of a Face Detected Within a Video Frame
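One plausible way to turn per-frame detections into the segment list returned by this module is sketched below in Python. The thesis does not describe this step in detail, so the grouping rule, the choice of reporting the maximum face count per segment, and the function name are all assumptions. The input stands in for the output of a cascade classifier applied to sampled frames.

```python
def face_segments(samples):
    """Group consecutive samples that contain faces into segments.

    samples is a list of (timestamp_sec, face_count) pairs in time order,
    e.g. the per-frame output of a cascade classifier.
    """
    segments = []
    current = None
    for timestamp, count in samples:
        if count > 0:
            if current is None:
                # A new segment starts at the first frame with a face.
                current = {"start": timestamp, "end": timestamp, "faces": count}
            else:
                current["end"] = timestamp
                current["faces"] = max(current["faces"], count)
        elif current is not None:
            # A frame without faces closes the open segment.
            segments.append(current)
            current = None
    if current is not None:
        segments.append(current)
    return segments
```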

5.2.6 Emotion Module

The emotion module handles the task of identifying segments with speech activity, and recognizing the emotional status of the speakers. This module returns a

list of nested objects that indicate the start time and end time of a segment, along

with a state that best describes the emotional status of the speakers.

Before any analysis is conducted, the emotion module extracts the audio track

from the video using FFmpeg. The audio track is converted into a non-compressed

linear PCM sequence, and saved into a temporary working directory. For identifying the segments with speech activity, this module uses an audio processing

toolkit called SoX [57], which normalizes the audio and segments the original file

based on speech activity. For detecting the emotional status, this module uses

an open-source audio-based emotion analysis toolkit called OpenEAR [58]. OpenEAR extracts feature points from an audio stream, and cross-references them with a pre-trained data set that maps features to seven different emotion states: anger, boredom, disgust, fear, happiness, neutral, and sadness. Figure 5-7 indicates an example of a speech segment and its emotional status.

Figure 5-7: Waveform and Emotion State of a Speech Segment

5.3 Media Database

The Media Database is a self-contained centralized framework that stores and

indexes the metadata extracted by GLUE. It uses MongoDB [59] for storing data,

Apache Solr [60] for indexing data, and Tornado [61] for exposing a RESTful API.

This section covers the technical details of its design and implementation.

5.3.1 Data Store

The entire Media Analysis System is designed to use JSON data structures to

pass information from component to component. For this reason, the Media Database utilizes MongoDB, which is a schema-less document store that can handle JSON data structures seamlessly. In MongoDB, individual data entries (JSON objects) that contain the metadata are referred to as documents, and groups of multiple documents are referred to as collections.

MongoDB integrates well with Python scripts, and its schema-less architecture

provides high levels of flexibility and extensibility. Although data schemas are useful when querying data, the flexibility of a schema-less data store enables developers to rapidly modify the features of the Media Acquisition Framework and GLUE without having to update the underlying database.

5.3.2 Supplemental Indexing for Text-based Metadata

In general, MongoDB has a sophisticated way of indexing data for expediting

queries. However, it is not optimized for free text search. Therefore, the Media Database utilizes Solr as a supplement. Solr is an open-source search engine

framework that is optimized for enabling fast text-based search queries. Its main

role is to index the metadata generated by the transcript module within GLUE, and

allow free text search over the spoken phrases.

To index data, Solr requires a schema to be defined for each core (index database). Table 5.3 indicates a list of field names and data types that were

specified in the schema.

Table 5.3: Schema of Solr Core

Field Name    Field Description                          Data Type
umid          UMID of content                            String
title         Title of content                           Text
transcript    Phrases mentioned within video segment     Text
startTime     Start time of video segment                Double
creator       Creator of content                         String
videoLow      URL of low-resolution video file           String

For each object that contains a field generated by the transcript module, three types of Solr entries are created. First, an entry categorized as type "0" is created for the entire content. Second, entries categorized as type "1" are created for each individual scene segment. Finally, entries categorized as type "2" are created for each phrase mention. For each subdivided video segment that corresponds to a scene or a phrase mention, the actual words that were spoken within the segment are pulled and matched accordingly from the analysis results of the transcript module. The process of creating multiple entries based on video segments allows queries to be processed in a non-hierarchical manner for retrieving specific segments within videos.
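The three entry types can be illustrated with a short Python sketch. The layout of the input document, the `type` field name, and the rule for matching phrases to scenes (a phrase belongs to the scene in which it starts) are assumptions made for this example; only the type values "0", "1", and "2" and fields such as `umid`, `title`, `transcript`, and `startTime` come from the text.

```python
def build_entries(doc):
    """Build type "0" (whole clip), "1" (scene), and "2" (phrase) entries.

    doc is a hypothetical aggregated document with "umid", "title",
    "scenes" (start/end) and "mentions" (start/end/result) fields.
    """
    base = {"umid": doc["umid"], "title": doc["title"]}
    mentions = doc["mentions"]

    def words_between(start, end):
        # Collect phrases whose start time falls inside the segment.
        return " ".join(m["result"] for m in mentions
                        if start <= m["start"] < end)

    # Type "0": one entry for the entire content.
    entries = [dict(base, type="0", startTime=0.0,
                    transcript=" ".join(m["result"] for m in mentions))]
    # Type "1": one entry per scene segment.
    for scene in doc["scenes"]:
        entries.append(dict(base, type="1", startTime=scene["start"],
                            transcript=words_between(scene["start"], scene["end"])))
    # Type "2": one entry per phrase mention.
    for m in mentions:
        entries.append(dict(base, type="2", startTime=m["start"],
                            transcript=m["result"]))
    return entries
```

Because every entry is flat and carries its own start time, a free text query can return a scene or a single phrase directly, without first locating the parent clip.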

The actual creation process of Solr entries is handled by a custom data migration daemon written in Python. This daemon monitors the MongoDB collection, intercepts new documents, and generates Solr entries accordingly. After all of the entries are created, they are sent to Solr through a web-based API for indexing.

5.3.3 Media Database API

The Media Database API is a RESTful interface for querying and retrieving the

metadata from the Media Database. This API is exposed by a Python script, which

makes use of a lightweight web framework called Tornado. Each API endpoint was

implemented as a Python class, and exposed by Tornado. Table 5.4 indicates a list

of endpoints that are available within the query API.

Table 5.4: List of Endpoints Within the Query API

Endpoint Name           Endpoint Description
GET /search/:keyword    Returns a list of assets based on the keyword
GET /creator            Returns a list of all the creators
GET /umid/:umid         Returns a single asset based on the UMID
GET /recent             Returns all assets processed within 24 hours
GET /trends             Returns a list of trending keywords within 24 hours

The "search" endpoint interfaces directly with Solr, and returns results of free

text queries based on the given keyword. By default, Solr returns a list of the

top 20 assets that match the search criteria. Optional URI parameters may be

added to specify the maximum number of results, or to narrow the criteria based

on various aspects such as the content creator, the duration, the timestamp, and

the type of video segment.
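A client of the "search" endpoint might build its request URL as in the Python sketch below. The host name is a placeholder, and the parameter names used in the example (`limit`, `creator`) are purely illustrative, since the thesis states that optional URI parameters exist but does not spell out their names.

```python
from urllib.parse import quote, urlencode

BASE_URL = "http://mediadb.example"  # hypothetical host


def search_url(keyword, **params):
    """Build a URL for the "search" endpoint (GET /search/:keyword).

    The parameter names passed by the caller (e.g. limit, creator) are
    illustrative; the thesis does not spell them out.
    """
    url = "{}/search/{}".format(BASE_URL, quote(keyword, safe=""))
    if params:
        # Sort the parameters so the resulting URL is deterministic.
        url += "?" + urlencode(sorted(params.items()))
    return url


if __name__ == "__main__":
    print(search_url("President Obama", limit=5, creator="PBS"))
```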

The "creator" endpoint returns a list of all the unique content creators. Content

creators include entities such as television broadcasters and creators of YouTube

videos. It utilizes the feature in MongoDB to extract distinct values within a given

field.


The "umid" endpoint returns the metadata associated with the specified content. The "recent" endpoint, on the other hand, returns a list of assets that were

processed within the past 24 hours. Both of these endpoints are designed

to return the entire MongoDB document, which contains all the metadata.

The "trends" endpoint returns a list of trending topics. This list is created by

counting the appearance frequencies of named entities and social tags that were

extracted from videos created within the past 24 hours.
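The frequency count behind the "trends" endpoint can be sketched in Python as follows. The document layout (a `created` timestamp plus `entities` and `tags` lists) and the function name are assumptions for illustration; the actual endpoint reads its data from MongoDB.

```python
from collections import Counter
from datetime import datetime, timedelta


def trending(documents, now, top_n=10):
    """Count entity and tag occurrences in documents from the last 24 hours.

    Each document is a hypothetical dict with a "created" datetime and
    "entities" / "tags" lists of strings.
    """
    cutoff = now - timedelta(hours=24)
    counts = Counter()
    for doc in documents:
        if doc["created"] >= cutoff:
            counts.update(doc.get("entities", []))
            counts.update(doc.get("tags", []))
    # Most frequent terms first.
    return [term for term, _ in counts.most_common(top_n)]
```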

5.4 Constellation

Constellation is an interactive installation, which was implemented to visualize

the metadata of visual media that was acquired and processed by the Media Analysis System. This section discusses the details of its system architecture, as well as

its user interaction.

5.4.1 System Setup

Constellation consists of a wall with touch-sensitive displays (iPads), a projector, a control panel, and a message bus server. The displays are primarily meant

for playing videos and visualizing metadata. The projector augments the wall with

projection-mapped animations. The message bus server is the key component

in terms of synchronizing all the devices, and managing their statuses. It also periodically queries the Media Analysis System, and retrieves the metadata of video

assets. Figure 5-8 indicates the actual setup of Constellation.

All of the user interface elements on the displays, the projector, and the control

panel were implemented as HTML5-based web applications, which make use of

canvases and CSS transforms. The communication between all of the components

is handled by Socket.IO [62], which is a real-time messaging framework that utilizes

the WebSocket protocol [63].


Figure 5-8: Setup of Constellation

5.4.2 User Interaction

In its initial stage, Constellation displays the most recent videos that were acquired and processed by the Media Analysis System. Users may use the control panel

to adjust the scope of time, and display videos that were processed within a given

time frame in the past.

When a display is touched by a user, the state of the system changes to metadata mode. In this mode, the display that was touched will continue to play the

video as shown in Figure 5-9. However, all other displays will make a transition

and visualize the metadata associated with the main video.

As shown in Figure 5-10, the projector overlays animations of fireballs and comets on the wall while Constellation transitions from normal state to metadata mode. The animation demonstrates the metaphor of metadata emerging from the original video, building up energy, and releasing small bits of information (shooting stars) as it explodes.

Figure 5-9: Constellation in Metadata Mode

5.4.3 Visualization Interfaces

The current version of Constellation is designed to handle visualizations based

on the transcript, named entities, and scene cuts. Figure 5-11 shows screen shots

from the visualizations. As previously mentioned, all of the visualization interfaces

were implemented as HTML5-based web applications. Therefore, it is easy to

extend future versions of Constellation with new visualizations.


Figure 5-10: Constellation Transitioning to Metadata Mode

Figure 5-11: Visualization Screens in Constellation (panels: Named Entities, Transcript, Scenes)

Chapter 6

Recast UI

The Recast UI is the key component that allows users to easily aggregate, curate, and present their views to the world. This user interface utilizes the concept of

block-based visual scripting paradigms for semi-automating the process of video

production. This chapter describes the interface design, as well as its technical

implementations.

6.1 Overview of Implementation

As previously mentioned in Chapter 4, the Recast UI is implemented as an

HTML5-based web application that is optimized to run on desktop versions of

Google Chrome. It also makes use of touch-based interaction techniques for increasing the tangibility of visual blocks and improving the usability of the interface.

Since desktop versions of Chrome cannot translate OS-level touch inputs into JavaScript events, Caress [64] was utilized as middleware to fulfill the role. For enabling programmatic manipulation of DOM elements within the HTML document, the Recast UI makes heavy use of jQuery [65], which is a JavaScript library for simplifying client-side scripting. It also makes use of jQuery UI [66] and jQuery UI Touch Punch [67] for implementing the draggable block elements. In terms of intercepting touch events and recognizing gestures, toe.js [68] was used.


6.2

Scratch Pad and Main Menu

As shown in Figure 6-1, the Recast UI is initialized with a blank scratch pad, which is the area where users can drag and combine content blocks with filter blocks. Users can also tap anywhere on the scratch pad to access the main menu, which provides several options. The main menu element utilizes a JavaScript library called Isotope [69], which enables developers to easily create hierarchical layouts and animated transitions. Users can tap and navigate through the main menu to add new blocks, as well as to preview and publish the final video.

6.3

Content Blocks

Content blocks refer to primitives that define bundles of content assets that can be used within a video storyboard. A content block can represent a group of video, image, or text assets. Each content block contains only assets that match its user-specified criteria. For example, one specific video content block may contain only scene segments that mention Ukraine.

Figure 6-2 indicates examples of content blocks. A basic block has placeholders for a thumbnail image and a label. The thumbnail image corresponds to one of

the assets that match the user-specified criteria, and the label indicates the initial

scope, such as keywords or tags. Existing content blocks may be removed easily

from the scratch pad by tap-holding the block element.

6.3.1

Video Assets

Video content blocks deliver one of the key features of the interface: they enable users to easily aggregate, curate, and import video content. These blocks directly query the Media Analysis System, and automatically retrieve video assets that match the criteria.

Figure 6-3 indicates two different ways of specifying the search keyword upon creating new blocks. Users can either input a custom search keyword, or select one from the list of trending topics. This list is dynamically populated with up-to-date keywords. It was originally designed to use keywords from the Media Analysis System. However, after experimentation, it was modified to use data from WorldLens [70], which provided more relevant keywords in terms of narrowing the scope.

Figure 6-1: Scratch Pad and Main Menu of the Recast UI

Figure 6-2: Examples of Content Blocks


Figure 6-3: Specifying Search Keywords for Retrieving Video Assets

After the keyword is specified, a block that bundles the relevant videos appears on the scratch pad. By default, it uses the "search" endpoint of the Media Database API, and fetches a maximum of 20 relevant assets from the Media Analysis System. Figure 6-4 shows a preview screen where users can view the assets that are included within the block. This preview screen can be invoked by double-tapping the block. In the preview screen, users can preview the videos, and discard any irrelevant assets from the bundle. A library called Video.js [71], which provides helper methods around HTML5 video elements, handles the video playback. The cover flow of thumbnails, on the other hand, is dynamically generated using a library called coverflowjs [72].
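The default query described above can be sketched roughly as follows. This is only an illustration: the base URL and the parameter names `q` and `limit` are assumptions, since the text states only that the "search" endpoint is used and that at most 20 assets are fetched.

```python
from urllib.parse import urlencode

# Hypothetical base URL; the real address of the Media Database API is not given.
MEDIA_DB_API = "https://media-analysis.example/api"

# Default cap on the number of assets per video content block, as stated in the text.
DEFAULT_MAX_ASSETS = 20


def build_search_url(keyword: str, limit: int = DEFAULT_MAX_ASSETS) -> str:
    """Build a URL for the "search" endpoint.

    The parameter names "q" and "limit" are illustrative assumptions.
    """
    query = urlencode({"q": keyword, "limit": limit})
    return f"{MEDIA_DB_API}/search?{query}"


if __name__ == "__main__":
    print(build_search_url("Ukraine"))
```

Keeping the cap as a named default makes it straightforward for a filter on the number of items to override it later.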

Figure 6-4: Preview of Video Assets

Users may add filter blocks to narrow the scope of the search. For instance, users who wish to create a news commentary regarding the recent developments in Ukraine may add a timestamp filter and a creator filter to get the most recent videos created by a specific organization. Other users who wish to create Supercuts may apply filters to retrieve specifically 30 phrase segments that mention the given keyword.

6.3.2

Image Assets

Image content blocks enable users to import arbitrary images into their storyboard. While there are many ways to import images, the Recast UI focuses on

methods for importing screen shots of web pages without a hassle. This feature

enables users to easily import and remix assets they see as they are browsing the

Internet.

For capturing screen shots, a Chrome extension was implemented. As shown in Figure 6-5, users can capture screen shots of browser windows with a click of a button, and see a preview. Once users save a screen shot annotated with an arbitrary tag, it is immediately uploaded to the Asset Management Service, which indexes image and audio assets.

Figure 6-5: Chrome Extension for Capturing Screen Shots of Web Pages

Figure 6-6 indicates the list of annotation tags displayed within the main menu. This list is dynamically populated with image annotation tags that exist within the Asset Management Service. Image content blocks use these tags as a basis for bundling multiple images. When a new block is added, it retrieves all the images that are associated with the given tag.
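The tag-based bundling described above can be illustrated with a small in-memory sketch. It does not reflect the actual Asset Management Service: the `ImageAsset` type and the helper names are hypothetical, and the index here stands in for whatever storage the service uses.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageAsset:
    """Hypothetical record for an uploaded screen shot."""
    url: str
    tag: str


def index_by_tag(assets):
    """Group assets by their annotation tag."""
    index = defaultdict(list)
    for asset in assets:
        index[asset.tag].append(asset)
    return dict(index)


def menu_tags(index):
    """Tags to list in the main menu: every tag that has at least one image."""
    return sorted(index)


def images_for_block(index, tag):
    """All images associated with the tag chosen for a new image content block."""
    return list(index.get(tag, []))


if __name__ == "__main__":
    assets = [
        ImageAsset("a.png", "ukraine"),
        ImageAsset("b.png", "elections"),
        ImageAsset("c.png", "ukraine"),
    ]
    index = index_by_tag(assets)
    print(menu_tags(index))
    print([a.url for a in images_for_block(index, "ukraine")])
```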

Upon retrieving content, users can apply filters to narrow the scope. Users can also double-tap the block to invoke the preview screen shown in Figure 6-7. Through the preview screen, users may review the assets, and discard irrelevant items from the bundle.

Figure 6-6: List of Image Annotation Tags in Menu

Figure 6-7: Preview of Image Assets

6.3.3

Text Assets

Text content blocks refer to blank screens with text overlays. These blocks can be used for adding title screens and scene separators throughout the storyboard. As shown in Figure 6-8, users must enter a text string that they wish to display. By default, the duration of the screen is set to three seconds. This may be adjusted to any given length by applying a duration filter.
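As a minimal sketch of this behavior, a text block can be modeled as a value with a three-second default that a duration filter overrides. The `TextBlock` type and `apply_duration_filter` helper are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass, replace

# Default title-screen duration stated in the text.
DEFAULT_TITLE_DURATION_S = 3.0


@dataclass(frozen=True)
class TextBlock:
    """Hypothetical model of a text content block (a blank screen with a text overlay)."""
    text: str
    duration_s: float = DEFAULT_TITLE_DURATION_S


def apply_duration_filter(block: TextBlock, seconds: float) -> TextBlock:
    """Return a copy of the block whose duration is overridden by a duration filter."""
    if seconds <= 0:
        raise ValueError("duration must be positive")
    return replace(block, duration_s=seconds)


if __name__ == "__main__":
    title = TextBlock("My New Title")
    print(title.duration_s)
    print(apply_duration_filter(title, 5.0).duration_s)
```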

6.4

Filter Blocks

Filter blocks refer to primitives that define the scope of curation. Figure 6-9

shows an example of filter blocks on the scratch pad.

Figure 6-8: Creation of Text Content Blocks

Figure 6-9: Examples of Filter Blocks

As previously noted, filter blocks may be combined with content blocks to narrow their scope. Table 6.1 indicates the seven types of predefined filters that may be applied to content blocks. Video content blocks accept all types of filters. Image content blocks, however, only accept filters related to the timestamp, the duration, and the number of items. Text content blocks accept only the duration filter.
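These compatibility rules can be expressed as a small lookup, sketched below. The identifiers `timestamp`, `duration`, `item_count`, and `creator` are assumed names for illustration, not the names used in the actual implementation.

```python
# Which filter kinds each content block kind accepts.
# None means "accepts every filter kind", matching the rule for video blocks.
ACCEPTED_FILTERS = {
    "video": None,
    "image": frozenset({"timestamp", "duration", "item_count"}),
    "text": frozenset({"duration"}),
}


def accepts(block_kind: str, filter_kind: str) -> bool:
    """Return True if a filter of filter_kind may be attached to a block of block_kind."""
    allowed = ACCEPTED_FILTERS[block_kind]
    return allowed is None or filter_kind in allowed


if __name__ == "__main__":
    print(accepts("video", "creator"))
    print(accepts("image", "creator"))
    print(accepts("text", "duration"))
```

A check of this kind would typically run when a filter block is dropped onto a content block, so that incompatible combinations can be rejected before any query is issued.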

Table 6.1: List of Filters (columns: Filter Name, Filter Description)

When filter blocks are added to video content blocks, optional parameters are added to the URI for querying the Media Analysis System. When filter blocks are added to image content blocks, optional parameters are added to the URI for querying the Asset Management Service. As for text content blocks, adding a duration filter changes the duration parameter of the screen. Filters may be removed at any time by tap-holding the block. Content blocks reload the assets within the bundle whenever new filters are added or existing filters are removed.
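The mechanism described above, where filters become optional URI parameters and every change triggers a reload, might look roughly like the following sketch. The class name, the parameter names, the endpoint URL, and the injected `fetch` callable are all assumptions made for illustration.

```python
from urllib.parse import urlencode


class ContentBlock:
    """Hypothetical content block that re-queries its backend when filters change."""

    def __init__(self, endpoint: str, keyword: str, fetch):
        self.endpoint = endpoint   # e.g. a search URL of the backing service (assumed)
        self.keyword = keyword
        self.filters = {}          # filter name -> value, sent as optional parameters
        self._fetch = fetch        # callable(url) -> list of assets, injected for testing
        self.assets = []
        self.reload()

    def query_url(self) -> str:
        """Compose the query URI from the keyword plus one parameter per filter."""
        params = {"q": self.keyword, **self.filters}
        return f"{self.endpoint}?{urlencode(params)}"

    def reload(self) -> None:
        """Refresh the bundled assets from the backend."""
        self.assets = self._fetch(self.query_url())

    def add_filter(self, name: str, value) -> None:
        self.filters[name] = value
        self.reload()

    def remove_filter(self, name: str) -> None:
        self.filters.pop(name, None)
        self.reload()


if __name__ == "__main__":
    requested = []

    def fake_fetch(url):
        requested.append(url)
        return []

    block = ContentBlock("https://media-analysis.example/api/search", "Ukraine", fake_fetch)
    block.add_filter("creator", "example-org")
    block.remove_filter("creator")
    print("\n".join(requested))
```

Injecting the fetch function keeps the sketch free of network access and makes the reload-on-change behavior easy to observe.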

Most of the filters have values that are statically predefined, or have values that

are definable by the user. However, filters related to creators have values that

are dynamically retrieved from the Media Analysis System, using the "creators"

endpoint within the Media Database API. Figure 6-10 indicates a list of creators

shown within the main menu.

Figure 6-10: List of Creators

6.5

Overlay Blocks

Overlay blocks represent supplemental assets that can be overlaid on top of videos, images, and text. The current version of the Recast UI supports a feature where users can create overlay blocks that contain recordings of their voices.
