Unintended Consequences of Education and Housing Reform Incentives

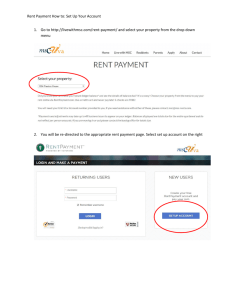

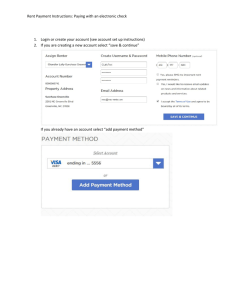
