The Causal and the Moral by

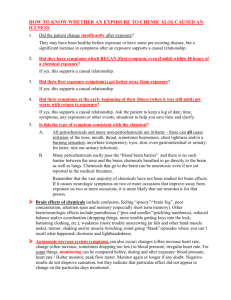
