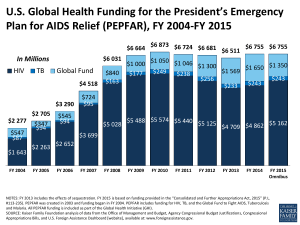

Background Paper: How the big three AIDS donors define and use
