Engineering Computational Methods I

2016 spring

Prof. Dr. Aurél Galántai

Óbuda University

05-05-2016


Contents

1 Elements of Matlab

Matrices and vectors in MATLAB . .

Commands, functions and procedures

Commands . . . . . . . . . . .

Functions . . . . . . . . . . . .

M-…les and procedures . . . . .

. . . . . . . .

in MATLAB

. . . . . . . .

. . . . . . . .

. . . . . . . .

2 Solution of linear systems

Linear systems of equations . . . . . . . . . .

Linear systems with triangular matrices . . .

The Gauss method . . . . . . . . . . . . . . .

Gauss elimination with pivoting . . . . . . . .

The LU-decomposition . . . . . . . . . . . . .

The LU-decomposition and the Gauss method

The Gauss method on band matrices . . . . .

Sparse matrices . . . . . . . . . . . . . . . . .

The three row or coordinate-wise method

Sparse matrices in MATLAB . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3 Approximate solution of nonlinear equations

The bisection algorithm . . . . . . . . . . . . . . .

Fixed point iteration . . . . . . . . . . . . . . . . .

The Newton method . . . . . . . . . . . . . . . . .

The Newton method in high dimension . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

5

. 5

. 9

. 9

. 10

. 12

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

15

15

17

18

20

22

25

25

27

29

30

.

.

.

.

31

32

33

35

37

.

.

.

.

.

.

.

41

41

42

44

45

47

53

61

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

4 Numerical solution of Ordinary Di¤erential Equations

Explicit one-step methods . . . . . . . . . . . . . . . . . . . .

The explicit Euler method . . . . . . . . . . . . . . . . .

Explicit one-step methods . . . . . . . . . . . . . . . . .

The convergence of explicit one-step methods . . . . . .

Runge-Kutta methods and implementation matters . . .

Linear multistep methods . . . . . . . . . . . . . . . . . . . .

Di¤erence methods for boundary value problems . . . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

3

CONTENTS

4

Existence and unicity results for boundary value

Numerical di¤erentiation . . . . . . . . . . . . .

Solution of linear boundary value problems . . .

Solution of nonlinear boundary value problems .

The ODE programs of Matlab (A quick reminder) . .

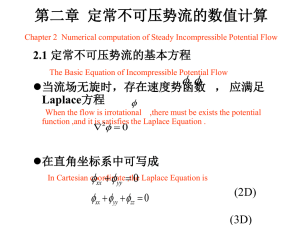

5 Numerical methods for partial di¤erential

Parabolic equations . . . . . . . . . . . . . . . .

The method of separation of variables . . .

Finite di¤erence methods . . . . . . . . . .

The explicit Euler method . . . . . . . . .

Hyperbolic equations . . . . . . . . . . . . . . .

D’Alembert’s method . . . . . . . . . . . .

The method of separation of variables . . .

Explicit di¤erence method . . . . . . . . .

Elliptic equations . . . . . . . . . . . . . . . . .

problems

. . . . . .

. . . . . .

. . . . . .

. . . . . .

equations

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

. . . . . . .

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

61

63

64

68

74

.

.

.

.

.

.

.

.

.

75

78

79

81

82

89

89

92

95

98

Chapter 1

Elements of Matlab

Matrices and vectors in MATLAB

The mathematical software MATLAB got its name from the expression MATrix LABoratory.

The most important data type of MATLAB is the real or complex matrix.

Scalars and vectors are also matrices; however, it is not necessary (though it is possible) to refer to them with two indices.

The simplest way to set up a matrix is to list its elements row by row. The rows must be separated by a semicolon (;) or a new line (Enter), the entries of a row must be separated by a space or a comma (,), and the whole list must be enclosed in square brackets [ ... ].

Example 1 The matrix

$$A = \begin{bmatrix} 1 & 0 & 3\\ 3 & 1 & 2\\ 4 & 5 & 6 \end{bmatrix}$$

can be defined as follows:

A=[1 0 3;3 1 2
4,5,6]

This is also an example of a simple command.


Matrices do not have to be declared: a variable is created automatically when a value is first assigned to it.

Names of variables can be chosen in the usual way.

MATLAB uses a number of fixed (predefined) identifiers. These can be looked up with the HELP command.

Example 2 eps is such a predefined identifier: the so-called machine epsilon ($\approx 2.2204\cdot 10^{-16}$).

When programming in MATLAB, we should not use the fixed identifiers for other purposes. Doing so may result in errors that are not easily detectable.

A matrix can be built from smaller matrices (blocks).

Example 3 The command

B=[A,[-1 -2;-1 -2;-1 -2];7,7,7,-7,-7]

with the previous A gives the matrix

$$B = \begin{bmatrix} 1 & 0 & 3 & -1 & -2\\ 3 & 1 & 2 & -1 & -2\\ 4 & 5 & 6 & -1 & -2\\ 7 & 7 & 7 & -7 & -7 \end{bmatrix}.$$

We refer to the entry $(i,j)$ of a matrix A by A(i,j).

Example 4 For the matrices A and B above, A(2,3)=2 and B(1,4)=-1.

Elements of row or column vectors can be referred to with a single index.

In some cases it does not matter whether a vector is a row or a column vector; in many cases, however, it does.

If a vector is defined element by element with a single index (for example, v(1)=2; v(2)=5), then it will be a row vector.

Scalar data can be de…ned in the usual way.

Example 5 -1.23e-2.

If k,h,m are already de…ned numbers, then we can build vectors in the following way:

c=k:h:m

This command defines a row vector c whose elements are, in order,

$$k,\; k+h,\; k+2h,\; \dots,\; k+th.$$


The last element is determined by the relations

$$k + th \le m < k + (t+1)h \quad (\text{if } h > 0)$$

or

$$k + (t+1)h < m \le k + th \quad (\text{if } h < 0),$$

depending on the sign of h.

If h = 1, it can be omitted from the command: c=k:m.
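As an illustration only, the rule can be emulated in Python. In the sketch below the name `colon_range` is hypothetical; t is computed as $\lfloor (m-k)/h \rfloor$, which is what both inequalities above give for either sign of h. MATLAB's internal safeguards against rounding errors in non-integer steps are not modelled.

```python
import math


def colon_range(k, h, m):
    """Sketch of the elements of the MATLAB range k:h:m.

    t is the largest integer with k + t*h not beyond m, i.e.
    t = floor((m - k) / h) for either sign of h.
    """
    if h == 0:
        return []                      # k:0:m is an empty vector
    t = math.floor((m - k) / h)
    if t < 0:
        return []                      # e.g. 5:1 or 1:-1:5 is empty
    return [k + i * h for i in range(t + 1)]


print(colon_range(1, 2, 8))    # [1, 3, 5, 7]
print(colon_range(10, -3, 1))  # [10, 7, 4, 1]
print(colon_range(5, 1, 1))    # []
```

The empty results correspond to the remark below that a vector can be empty.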

A vector or matrix can be empty.

Earlier we built a matrix from submatrices. We can do the reverse.

The command

C=A(p:q,r:s)

results in the matrix

$$C = \begin{bmatrix} a_{pr} & \cdots & a_{ps}\\ \vdots & & \vdots\\ a_{qr} & \cdots & a_{qs} \end{bmatrix}.$$

An important application of submatrices is the command

A(i,:)=A(i,:)+t*A(k,:)

which adds t times row k to row i of the matrix A.

The submatrix can be defined in a more general way in MATLAB: if u is a vector with r elements and v is a vector with s elements (either row or column vectors), then the command

H=A(u,v)

produces an $r\times s$ matrix H such that $H(i,j) = A(u_i, v_j)$.

Example 6 Using only one command we can swap two rows of A:

A([j,k],:)=A([k,j],:)

If the elements of u or v are not strictly increasing numbers, then A(u,v) will not be a submatrix of A.
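A small Python sketch of the rule $H(i,j) = A(u_i, v_j)$, assuming a matrix stored as a list of rows. The function name `submatrix` is hypothetical, and the 1-based MATLAB indices are shifted inside it because Python lists are 0-based.

```python
def submatrix(A, u, v):
    """Return H with H(i,j) = A(u_i, v_j), mimicking MATLAB's A(u,v).

    u and v hold 1-based indices as in MATLAB; they may repeat or be
    unordered, in which case H is not a submatrix in the usual sense.
    """
    return [[A[ui - 1][vj - 1] for vj in v] for ui in u]


A = [[1, 0, 3],
     [3, 1, 2],
     [4, 5, 6]]                               # the matrix of Example 1
print(submatrix(A, [1, 3], [2, 3]))           # [[0, 3], [5, 6]]
# Same result as the swap A([1,3],:)=A([3,1],:) of Example 6 (j=1, k=3):
print(submatrix(A, [3, 2, 1], [1, 2, 3]))     # [[4, 5, 6], [3, 1, 2], [1, 0, 3]]
```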

Special matrices can be generated by built in Matlab functions. Some examples:

eye(m,n); ones(m,n); zeros(m,n); rand(m,n)

These functions produce, in order, an $m\times n$ "identity" matrix, a matrix whose entries are all 1's, the zero matrix, and a random matrix.

The function RAND produces pseudo-random numbers uniformly distributed in (0,1).


For m = n, it is enough to use one argument: for example, ones(4).

Three other functions:

TRIL(A), TRIU(A), DIAG.

Function TRIL(A) takes out the lower triangular part of A.

Function TRIU(A) takes out the upper triangular part of A.

Before we specify DIAG, we mention that we can refer to the diagonals of a matrix by their indices.

The main diagonal of an $m\times n$ matrix A has index 0, the diagonals above the main diagonal are numbered $1,\dots,n-1$, and the diagonals below the main diagonal are numbered $-1,\dots,-(m-1)$.

The k-th diagonal is formed by those entries $a_{ij}$ that satisfy $k = j - i$.

Let v be a vector of length t. The command

DIAG(v,k)

generates the matrix $D\in\mathbb{R}^{(t+|k|)\times(t+|k|)}$ whose k-th diagonal contains the elements of v and whose other elements are 0.

DIAG(A,k)

generates a column vector whose elements are the elements of the k-th diagonal of the matrix A.

If k = 0, it can be omitted.
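The diagonal numbering $k = j - i$ can be made concrete with a Python sketch of the two uses of DIAG. The names `diag_matrix` and `diag_vector` are hypothetical; the relation $k = j - i$ is unchanged by the shift to 0-based indices, since i and j shift by the same amount. The vector result is returned as a plain list rather than a column.

```python
def diag_matrix(v, k=0):
    """Sketch of DIAG(v,k): square matrix of size t+|k| with v on diagonal k."""
    size = len(v) + abs(k)
    D = [[0] * size for _ in range(size)]
    for idx, value in enumerate(v):
        i = idx if k >= 0 else idx - k     # row index; for k < 0 start at row |k|
        D[i][i + k] = value                # column j satisfies j - i = k
    return D


def diag_vector(A, k=0):
    """Sketch of DIAG(A,k): the entries a_ij of A with j - i = k, top to bottom."""
    m, n = len(A), len(A[0])
    return [A[i][i + k] for i in range(m) if 0 <= i + k < n]


A = [[1, 0, 3],
     [3, 1, 2],
     [4, 5, 6]]                  # the matrix of Example 1
print(diag_matrix([1, 2], 1))    # [[0, 1, 0], [0, 0, 2], [0, 0, 0]]
print(diag_vector(A, -1))        # [3, 5]
```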

We can perform the operations denoted by the signs +, − and * on matrices, vectors and scalars, provided that the operands are compatible with the operations.

Exception: a scalar can be added to (subtracted from) any matrix. The scalar is then added to (subtracted from) every entry of the matrix.

The sign ^ denotes the power operation. If k is a positive integer, A^k is the product of k copies of the (square) matrix A; A^(-1) gives the inverse of A if it exists.

The operations *, / and ^ are performed elementwise if the operation sign is preceded by a dot (.*, ./, .^).

Example 7 The result of [2,-2].*[2,3] is [4,-6], while the result of [-2,3].^3 is [-8,27].

A special operation is the transpose: A.' (for real matrices, A' gives the same result).

Complex conjugation: conj(A)

Complex (Hermitian) transpose: ctranspose(A), or A'

For compatible matrices we can apply the operations / and \ (for details, see help / or help \).

We only mention the most important case. The result of the command

x=A\b

is the (numerical) solution of the linear system Ax = b (if the system is consistent).

Commands, functions and procedures in MATLAB

MATLAB has many built-in functions and procedures that help program development.

Accordingly, a MATLAB program generally consists of a few commands and several function or procedure calls.

Commands

There are only a few commands:

simple command,

IF command,

two types of loops (for and while loops).

The general form of the IF command:

if condition

commands

{elseif

commands}

<else

commands>

end

Section {...} can be repeated any number of times (it can also be omitted), and section <...> can be omitted.

Parts written in separate lines can also be written in one line, but then they must be separated by , or ;.

The conditions are logical expressions. Elementwise relational operators:

<, <=, >, >=, ==, ~=

The last two are the "equal" and the "not equal" operations. The logical or, and, and negation operators are, in order:

|, &, ~

The for loop has the form:

for i = v

commands

end

Variable i is the loop variable, and v is a vector that often has the form k:h:m (or k:m).

Semantics (if v has n elements):

the loop variable i takes the values $v_1, v_2, \dots, v_n$ in order, and for each value the loop body is executed.

(If v is a matrix, then $v_1, v_2, \dots, v_n$ stand for the column vectors of v.)

The while loop has the form:

while condition

commands

end

Functions

There are plenty of functions in MATLAB.

The following functions produce outputs from existing matrices.

Their inputs can be scalars as well.

Certain functions are defined only for scalars; applied to a matrix, such a function acts elementwise.

Example 8 The command

sin(pi/4*ones(2,3))

gives the $2\times 3$ matrix all of whose elements are $\sqrt{2}/2$.

The most important functions (using the HELP we can discover the others as well).

Elementary mathematical functions: SIN, COS, . . . ,ROUND, etc.

DET(A) produces det (A),

COND(A) produces the condition number of A (in ”2”-norm),

RANK(A) produces the rank of A.

[m,n]=size(A), h2=norm(A),

hinf=norm(A,inf)

The first command gives the size of the matrix (vector) A. The second and third commands give $\|A\|_2$ and $\|A\|_\infty$, respectively.

$\|A\|_1$ and $\|A\|_F$ can also be computed by the calls NORM(A,1) and NORM(A,'fro'), respectively.

The outputs of the following functions are row vectors (or scalars):

MAX, MIN, SUM, PROD.

If the input is a matrix but not a row vector, then MAX(A) gives the greatest element of each column, MIN(A) gives the smallest element of each column, SUM(A) gives the sums of the elements of each column, and PROD(A) gives the products of the elements of each column.

If the input is a row vector, then we obtain, in order, the greatest element, the smallest element, the sum and the product of the elements (the result is clearly a scalar).

Functions MAX and MIN can be invoked with two inputs, as well.

Example 9 Let

2

1

6 2

A=6

4 0

2

3

2

2

0

2

2

3

4

1

2

9

5

3

2

15 7

7:

1 5

1

Here max(A) gives the vector [2, 3, 4, 5, 15], while the commands

[c,d]=max(A), [c1,d1]=max(abs(A(3:4,2:4)))

give the results (d and d1 contain the row indices of the maxima)

c = [2, 3, 4, 5, 15]

d = [2, 1, 4, 4, 2]

c1 = [2, 4, 9]

d1 = [1, 2, 1]

Example 10 The command

[p,j]=max(max(A))

gives

p = 15;

j = 5;

which is the greatest element of A and its column index. The row index of the maximal element is given by d(j), which is 2.
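The behaviour of [c,d]=max(A) and the reasoning of Example 10 can be sketched in Python as follows. The function names are hypothetical, and the small test matrix is an illustrative choice, not the matrix of Example 9; ties are resolved in favour of the first occurrence, as MATLAB's MAX does.

```python
def column_max(A):
    """Sketch of MATLAB's [c,d] = max(A) for a matrix given as a list of rows.

    c[j] is the greatest entry of column j and d[j] its 1-based row index;
    on ties the first occurrence is reported.
    """
    m, n = len(A), len(A[0])
    c, d = [], []
    for j in range(n):
        best = 0
        for i in range(1, m):
            if A[i][j] > A[best][j]:   # strict '>' keeps the first maximum
                best = i
        c.append(A[best][j])
        d.append(best + 1)
    return c, d


def global_max(A):
    """Greatest entry p of A with its row and column index (cf. Example 10)."""
    c, d = column_max(A)
    p = max(c)
    j = c.index(p) + 1        # like [p,j] = max(c): first column attaining p
    return p, d[j - 1], j     # the row index is d(j)


A = [[1, 4, -2],
     [3, 4, 5],
     [0, -7, 2]]
print(column_max(A))   # ([3, 4, 5], [2, 1, 2])
print(global_max(A))   # (5, 2, 3)
```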

Many of the built-in functions of MATLAB execute very complicated algorithms of numerical linear algebra. The most important ones are the following:

[L,U,P]=lu(A),

[T,F]=lu(A),

H=chol(C)

The first two functions perform two versions of the LU-decomposition; the last function produces the Cholesky decomposition of the matrix C (C must be symmetric and positive definite).

The results satisfy the following properties: L is unit lower triangular, U, F and H are upper triangular, P is a permutation matrix, $T = P^TL$ is a row-permuted lower triangular matrix, and

$$L = PT,\qquad U = F,\qquad LU = PA,\qquad TF = A,\qquad H^TH = C.$$

X=inv(A), [Q,R]=qr(A)

Here X is the inverse of A (if it exists), Q is orthogonal, R is upper triangular and QR = A.

s=eig(A),

[V,D]=eig(A)

The first command gives the eigenvalues of A (as a column vector).

The second command puts the eigenvalues into the diagonal matrix D, while the i-th column of the matrix V is an eigenvector belonging to the eigenvalue $d_{ii}$.

We mention here some numerical functions that are not built-in functions but are very useful:

- FSOLVE solves nonlinear systems of equations,

- QUAD computes an approximate integral with an adaptive Simpson formula,

- SPLINE computes cubic spline interpolation (by default with not-a-knot end conditions, not the natural spline).

M-files and procedures

A sequence of MATLAB commands forms a MATLAB program.

The program must be stored in a text file with the extension .m (M-file).

The program is invoked simply by its name. For example, the command

prog

loads and executes the program contained in the file prog.m.

The variables of a simple program (script file) are not local: they are created in, and remain in, the workspace from which the script is run.

It is also possible to define functions in M-file form in the following way.

The sequence of commands must start with the line (function header)

function [k1,...,kt] = fname(b1,...,bs)

The number of output parameters k1,...,kt and the number of input parameters b1,...,bs is arbitrary (in principle).

It is advisable to use the same name for the function and for the file (fname.m).

We can put the

RETURN

command anywhere in the program, with the usual effect.

The non-parameter variables in the program of the function are local variables.

A variable can be declared global with the command

GLOBAL

A function name can be an input parameter. In such a case we have to invoke the FEVAL procedure within the function.

Example 11 If the parameter f is the name of a two-variable function, then the command z = f(x,y) is an error. The correct way is z = feval(f,x,y). (If f is passed as a function handle, z = f(x,y) also works.)

A few other possibilities.

The command

DISP(variable)

or

DISP(’string’)

displays the value of the variable or the string on the screen.

The command

DIARY filename

writes all screen output to the text file filename.

This can be stopped by the command

diary off

The command

diary on

continues writing.

If x and y are two vectors of the same length, then command

plot(x,y)

draws a curve on the screen from the points (xi ; yi ).

The procedure PLOT can be called in other forms as well.

We can also measure elapsed (wall-clock) time or CPU time, as shown by the next example:

t=clock %or t=cputime

{commands}

tt=etime(clock,t) %or tt=cputime-t

The function CLOCK returns a six-element date vector containing the current date and time in decimal form:

[year month day hour minute seconds]

The function ETIME returns the time in seconds that has elapsed between two such date vectors.

CPUTIME returns the CPU time in seconds that has been used by the MATLAB process since MATLAB started.

Chapter 2

Solution of linear systems

Linear systems of equations

The general (canonical) form of a linear system of equations with m equations and n unknowns is

$$\begin{aligned} a_{11}x_1 + \dots + a_{1j}x_j + \dots + a_{1n}x_n &= b_1\\ &\;\;\vdots\\ a_{i1}x_1 + \dots + a_{ij}x_j + \dots + a_{in}x_n &= b_i\\ &\;\;\vdots\\ a_{m1}x_1 + \dots + a_{mj}x_j + \dots + a_{mn}x_n &= b_m \end{aligned} \tag{2.1}$$

A compact form is

$$Ax = b, \tag{2.2}$$

where

$$A = [a_{ij}]_{i,j=1}^{m,n}\in\mathbb{R}^{m\times n},\qquad x\in\mathbb{R}^n,\qquad b\in\mathbb{R}^m.$$

For m < n the system is called underdetermined, and for m > n it is called overdetermined. If m = n, the system is called square (quadratic).

The geometric meaning of linear systems can be described in the following way.

Definition 12 Let $x_0\in\mathbb{R}^n$ be a point of a hyperplane and let $d\in\mathbb{R}^n$ be a nonzero vector that is perpendicular (orthogonal) to the hyperplane. The points of this hyperplane are given by the vectors $x\in\mathbb{R}^n$ satisfying the equation

$$(x - x_0)^Td = 0. \tag{2.3}$$

We can rewrite the hyperplane equation in the form

$$x^Td = x_0^Td. \tag{2.4}$$

Using the row-wise partition of the matrix A,

$$A = \begin{bmatrix} a_1^T\\ \vdots\\ a_i^T\\ \vdots\\ a_m^T \end{bmatrix},\qquad a_i^T = [a_{i1},\dots,a_{in}],$$

we can rewrite the equation Ax = b in the form

$$\begin{aligned} a_1^Tx &= b_1,\\ &\;\;\vdots\\ a_m^Tx &= b_m. \end{aligned} \tag{2.5}$$

We can see that the solution set of the linear system is the intersection of m hyperplanes.

So there are three possibilities:

(i) Ax = b has no solutions,

(ii) Ax = b has exactly one solution,

(iii) Ax = b has in…nitely many solutions.

Definition 13 If Ax = b has at least one solution, the system is called consistent. If it has no solution, then the system is called inconsistent.

Example 14 The system

x + 2y = 1;

x + 2y = 4

is inconsistent.

Using the column-wise partition of A, we can write Ax = b in the form

$$\sum_{i=1}^{n} x_ia_i = x_1a_1 + \dots + x_na_n = b,$$

where $a_i$ stands for the i-th column of A. The linear system can be solved if and only if b can be expressed as a linear combination of the columns of A.

Definition 15 The rank of a matrix $A\in\mathbb{C}^{m\times n}$ is the maximal number of its linearly independent column (or, equivalently, row) vectors.

Theorem 16 (a) Ax = b has a solution if and only if rank(A) = rank([A, b]).

(b) If rank(A) = rank([A, b]) = n, then Ax = b has exactly one solution.

Matlab: function rank(A) computes the rank of A.
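Theorem 16 can be turned into a small test. MATLAB's RANK is based on the singular value decomposition; the Python sketch below instead counts the pivots found by Gaussian elimination with partial pivoting, using an assumed absolute tolerance `tol`, which is a simpler stand-in suitable only for small, well-scaled examples. The names `rank` and `classify` are hypothetical.

```python
def rank(M, tol=1e-12):
    """Number of pivots found by Gaussian elimination with partial pivoting."""
    A = [list(map(float, row)) for row in M]   # work on a float copy
    m, n = len(A), len(A[0])
    r = 0                                      # number of pivots found so far
    for col in range(n):
        if r == m:
            break
        piv = max(range(r, m), key=lambda i: abs(A[i][col]))
        if abs(A[piv][col]) <= tol:
            continue                           # no usable pivot in this column
        A[r], A[piv] = A[piv], A[r]
        for i in range(r + 1, m):
            lam = A[i][col] / A[r][col]
            for j in range(col, n):
                A[i][j] -= lam * A[r][j]
        r += 1
    return r


def classify(A, b):
    """Apply Theorem 16 to the system Ax = b."""
    Ab = [list(row) + [bi] for row, bi in zip(A, b)]   # the matrix [A, b]
    if rank(A) != rank(Ab):
        return "no solution"
    if rank(A) == len(A[0]):
        return "exactly one solution"
    return "infinitely many solutions"


print(classify([[1, 2], [1, 2]], [1, 4]))   # Example 14: no solution
print(classify([[1, 2], [3, 4]], [1, 1]))   # exactly one solution
print(classify([[1, 2], [2, 4]], [1, 2]))   # infinitely many solutions
```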

From now on we assume that m = n.

Theorem 17 The equation Ax = b ($A\in\mathbb{R}^{n\times n}$, $b\in\mathbb{R}^n$) has exactly one solution if and only if $A^{-1}$ exists. In this case the solution is given by $x = A^{-1}b$.

Theorem 18 The homogeneous equation Ax = 0 ($A\in\mathbb{R}^{n\times n}$) has a nontrivial solution $x\neq 0$ if and only if $\det(A) = 0$.

Linear systems with triangular matrices

Definition 19 A matrix $A = [a_{ij}]_{i,j=1}^{n}$ is lower triangular if $a_{ij} = 0$ for all $i < j$.

Definition 20 A matrix $A = [a_{ij}]_{i,j=1}^{n}$ is upper triangular if $a_{ij} = 0$ for all $i > j$.

A matrix which is both lower and upper triangular is a diagonal matrix.

For a triangular matrix $A = [a_{ij}]_{i,j=1}^{n}$, $\det(A) = a_{11}a_{22}\cdots a_{nn}$.

The solution of systems with triangular coefficient matrices is quite simple.

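Consider the lower triangular linear system

$$\begin{aligned} a_{11}x_1 &= b_1\\ &\;\;\vdots\\ a_{i1}x_1 + \dots + a_{ii}x_i &= b_i\\ &\;\;\vdots\\ a_{n1}x_1 + \dots + a_{ni}x_i + \dots + a_{nn}x_n &= b_n \end{aligned}$$

This system can be solved uniquely if and only if $a_{11}\neq 0,\dots,a_{nn}\neq 0$.

From equation i one obtains

$$x_i = \Big(b_i - \sum_{j=1}^{i-1} a_{ij}x_j\Big)\Big/a_{ii}.$$

Hence the solution algorithm:

$$\begin{aligned} &x_1 = b_1/a_{11}\\ &\textbf{for } i = 2:n\\ &\qquad x_i = \Big(b_i - \sum_{j=1}^{i-1} a_{ij}x_j\Big)\Big/a_{ii}\\ &\textbf{end} \end{aligned} \tag{2.6}$$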


Matlab programs: lowtri1.m, lowtri2.m, testlin1.m
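The course programs are not reproduced here; as an illustration, Algorithm (2.6) can be sketched in Python as follows. The name `forward_substitution` is hypothetical and nonzero diagonal entries are assumed. Because Python indices are 0-based, the first pass of the loop (i = 0) is the step $x_1 = b_1/a_{11}$.

```python
def forward_substitution(L, b):
    """Solve Lx = b for a lower triangular L (list of rows), as in (2.6)."""
    n = len(b)
    x = [0.0] * n
    for i in range(n):
        s = sum(L[i][j] * x[j] for j in range(i))   # sum_{j<i} a_ij x_j
        x[i] = (b[i] - s) / L[i][i]
    return x


L = [[2.0, 0.0, 0.0],
     [1.0, 3.0, 0.0],
     [4.0, -1.0, 5.0]]
print(forward_substitution(L, [2.0, 7.0, 17.0]))   # [1.0, 2.0, 3.0]
```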

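Consider the upper triangular system

$$\begin{aligned} a_{11}x_1 + \dots + a_{1i}x_i + \dots + a_{1n}x_n &= b_1\\ &\;\;\vdots\\ a_{ii}x_i + \dots + a_{in}x_n &= b_i\\ &\;\;\vdots\\ a_{nn}x_n &= b_n \end{aligned}$$

This system can be solved uniquely if and only if $a_{11}\neq 0,\dots,a_{nn}\neq 0$.

From equation i one obtains

$$x_i = \Big(b_i - \sum_{j=i+1}^{n} a_{ij}x_j\Big)\Big/a_{ii}.$$

Hence the solution algorithm (backward substitution):

$$\begin{aligned} &x_n = b_n/a_{nn}\\ &\textbf{for } i = n-1:-1:1\\ &\qquad x_i = \Big(b_i - \sum_{j=i+1}^{n} a_{ij}x_j\Big)\Big/a_{ii}\\ &\textbf{end} \end{aligned} \tag{2.7}$$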

Matlab programs: upptri.m, testlin2.m
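Similarly, Algorithm (2.7) can be sketched in Python (hypothetical name `backward_substitution`; nonzero diagonal entries assumed). The loop runs from the last row upwards.

```python
def backward_substitution(U, b):
    """Solve Ux = b for an upper triangular U (list of rows), as in (2.7)."""
    n = len(b)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):                      # rows n, n-1, ..., 1
        s = sum(U[i][j] * x[j] for j in range(i + 1, n))  # sum_{j>i} a_ij x_j
        x[i] = (b[i] - s) / U[i][i]
    return x


U = [[2.0, 1.0, -1.0],
     [0.0, 3.0, 2.0],
     [0.0, 0.0, 4.0]]
print(backward_substitution(U, [1.0, 12.0, 12.0]))   # [1.0, 2.0, 3.0]
```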

The Gauss method

The Gauss method consists of two phases:

I. We transform the system Ax = b to an equivalent upper triangular system:

II. We solve this upper triangular system using Algorithm (2.7).

Transformation to upper triangular form is done in the following way.

If $a_{11}\neq 0$, then we make the coefficients of $x_1$ below $a_{11}$ zero by subtracting an appropriate multiple $\lambda$ of the first row from row i (i = 2,...,n):

$$(a_{i1} - \lambda a_{11})x_1 + (a_{i2} - \lambda a_{12})x_2 + \dots + (a_{in} - \lambda a_{1n})x_n = b_i - \lambda b_1. \tag{2.8}$$

The condition $a_{i1} - \lambda a_{11} = 0$ gives us

$$\lambda = \frac{a_{i1}}{a_{11}}. \tag{2.9}$$

Hence the zeroing of the coefficients below $a_{11}$ is done by the algorithm

$$\left.\begin{aligned} \lambda &= a_{i1}/a_{11}\\ a_{ij} &= a_{ij} - \lambda a_{1j}\quad (j = 2,\dots,n)\\ b_i &= b_i - \lambda b_1 \end{aligned}\right\}\quad (i = 2,\dots,n) \tag{2.10}$$

This algorithm overwrites the elements of the submatrix A(2:n,2:n) and of the vector b(2:n). However, the zeros are not written into the matrix.

Using the original notation, the reduced linear system has the form

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \dots + a_{1n}x_n &= b_1\\ a_{22}x_2 + \dots + a_{2n}x_n &= b_2\\ &\;\;\vdots\\ a_{n2}x_2 + \dots + a_{nn}x_n &= b_n \end{aligned} \tag{2.11}$$

We can decompose this into the first equation and a smaller $(n-1)\times(n-1)$ linear subsystem. If $a_{22}\neq 0$, we repeat the procedure on the subsystem, and so on.

Assume that we have already performed the zeroing in the first k − 1 columns and we have a reduced linear system of the form

$$\begin{aligned} a_{11}x_1 + \dots + a_{1k}x_k + \dots + a_{1n}x_n &= b_1\\ &\;\;\vdots\\ a_{kk}x_k + \dots + a_{kn}x_n &= b_k\\ &\;\;\vdots\\ a_{ik}x_k + \dots + a_{in}x_n &= b_i\\ &\;\;\vdots\\ a_{nk}x_k + \dots + a_{nn}x_n &= b_n \end{aligned}$$

If $a_{kk}\neq 0$, then we make the coefficients of $x_k$ below $a_{kk}$ zero.

Subtracting $\lambda$ times row k from row i, we obtain the equation

$$(a_{ik} - \lambda a_{kk})x_k + (a_{i,k+1} - \lambda a_{k,k+1})x_{k+1} + \dots + (a_{in} - \lambda a_{kn})x_n = b_i - \lambda b_k. \tag{2.12}$$

The condition $a_{ik} - \lambda a_{kk} = 0$ gives us

$$\lambda = \frac{a_{ik}}{a_{kk}}.$$

Hence the required algorithm is

$$\left.\begin{aligned} \lambda &= a_{ik}/a_{kk}\\ a_{ij} &= a_{ij} - \lambda a_{kj}\quad (j = k+1,\dots,n)\\ b_i &= b_i - \lambda b_k \end{aligned}\right\}\quad (i = k+1,\dots,n) \tag{2.13}$$

We can continue the procedure as long as $a_{kk}\neq 0$ and $k\le n-1$ hold.

If we succeed in transforming Ax = b into an equivalent upper triangular form, then we apply Algorithm (2.7).

Notation (Matlab): A (i; j) stands for element aij of matrix A.

The Gauss method:

I. (elimination) phase:

for k = 1:n-1
    for i = k+1:n
        lambda = A(i,k)/A(k,k);                        % multiplier
        A(i,k+1:n) = A(i,k+1:n) - lambda*A(k,k+1:n);
        b(i) = b(i) - lambda*b(k);
    end
end

II. (backward substitution) phase:

x = zeros(n,1);                 % x must be a column vector
x(n) = b(n)/A(n,n);
for i = n-1:-1:1
    x(i) = (b(i) - A(i,i+1:n)*x(i+1:n))/A(i,i);
end

Matlab programs: gauss1, testlin3.m
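For experimenting outside MATLAB, the two phases can be transcribed into Python. This is a sketch, not the course program gauss1.m; the name `gauss_solve` is hypothetical. It performs no pivoting, so a zero pivot makes it fail with a division by zero.

```python
def gauss_solve(A, b):
    """Plain Gauss method: elimination (phase I) + backward substitution (phase II).

    Works on copies of A (list of rows) and b; no pivoting is done.
    """
    n = len(b)
    A = [row[:] for row in A]
    b = b[:]
    # Phase I: zero the entries below a_kk, k = 1, ..., n-1
    for k in range(n - 1):
        for i in range(k + 1, n):
            lam = A[i][k] / A[k][k]          # multiplier
            for j in range(k + 1, n):
                A[i][j] -= lam * A[k][j]
            b[i] -= lam * b[k]
    # Phase II: backward substitution, Algorithm (2.7)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(A[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / A[i][i]
    return x


print(gauss_solve([[2, 1, 1], [4, -6, 0], [-2, 7, 2]], [5, -2, 9]))   # [1.0, 1.0, 2.0]
```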

Theorem 21 The Gauss method requires $\frac{2}{3}n^3 + O(n^2)$ arithmetic operations.

Theorem 22 (Klyuyev, Kokovkin-Shcherbak, 1965). Any algorithm which uses only rational arithmetic operations (solely row and column operations) to solve a general system of n linear equations requires at least as many additions and subtractions and at least as many multiplications and divisions as does Gaussian elimination.

Gauss elimination with pivoting

In the first phase of the Gauss method it may happen that $a_{kk}$ becomes zero (or nearly zero) after the elimination of the coefficients below the main diagonal in the first k − 1 columns of A.

Example 23 In the system

$$\begin{aligned} 4x_2 + x_3 &= 9\\ x_1 + x_2 + 3x_3 &= 6\\ 2x_1 - 2x_2 + x_3 &= -1 \end{aligned}$$

we have $a_{11} = 0$.

In such a case we can try to exchange rows or columns so that a nonzero element gets into this position. In the above case we can swap the first and the third rows:

$$\begin{aligned} 2x_1 - 2x_2 + x_3 &= -1\\ x_1 + x_2 + 3x_3 &= 6\\ 4x_2 + x_3 &= 9 \end{aligned}$$

If we swap the first and the second columns (and the respective variables), we obtain

$$\begin{aligned} 4x_2 + x_3 &= 9\\ x_2 + x_1 + 3x_3 &= 6\\ -2x_2 + 2x_1 + x_3 &= -1 \end{aligned}$$

Generally, there are several possibilities for the exchange of rows or columns.

If all coefficients $a_{jk}$ ($j\ge k$) or all coefficients $a_{kj}$ ($j\ge k$) are zero, then the submatrix $[a_{ij}]_{i,j=k}^{n}$ is singular, and so is A. In such a case we clearly cannot continue the elimination.

The element $a_{kk}$ is called the pivot element. The exchange of rows or columns so that the new pivot element is different from zero is called pivoting.

The selection of the pivot element greatly influences the reliability of the computed results.

Problem 24 Solve the linear system

$$\begin{aligned} 10^{-17}x + y &= 1\\ x + y &= 2 \end{aligned}$$

with our program gauss1.m.

The obtained result is x = 0, y = 1, while the correct result is

$$x = \frac{1}{1 - 10^{-17}} \approx 1,\qquad y = 1 - \frac{10^{-17}}{1 - 10^{-17}} \approx 1.$$

Swap the two equations:

$$\begin{aligned} x + y &= 2\\ 10^{-17}x + y &= 1 \end{aligned}$$

The same program now gives the result x = 1, y = 1.

The last result is close to the solution, while the first one is catastrophically wrong.

It is generally true that good pivoting can improve the precision of the numerical solution significantly. As a general rule, pivot elements should be chosen with absolute values as large as possible.
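The effect in Problem 24 can be reproduced in IEEE double precision with a Python sketch of the Gauss method for a 2 × 2 system (hypothetical name `eliminate_2x2`, no pivoting). Without the swap the multiplier is about $10^{17}$, so the entries 1 and 2 of the second equation are lost when about $10^{17}$ is subtracted from them, and the back substitution then divides the cancelled difference $1 - y$ by $10^{-17}$. With the swap the multiplier is $10^{-17}$ and nothing important is lost.

```python
def eliminate_2x2(A, b):
    """Gauss method without pivoting for a 2x2 system (sketch)."""
    lam = A[1][0] / A[0][0]              # multiplier
    a22 = A[1][1] - lam * A[0][1]
    b2 = b[1] - lam * b[0]
    y = b2 / a22
    x = (b[0] - A[0][1] * y) / A[0][0]   # backward substitution
    return x, y


eps = 1e-17
print(eliminate_2x2([[eps, 1.0], [1.0, 1.0]], [1.0, 2.0]))   # (0.0, 1.0): wrong x
print(eliminate_2x2([[1.0, 1.0], [eps, 1.0]], [2.0, 1.0]))   # (1.0, 1.0)
```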

There are two basic pivoting strategies.

Partial pivoting: in step k of the first phase, we choose a row i ($i\ge k$) such that

$$|a_{ik}| = \max\{|a_{jk}| : k\le j\le n\}.$$

Then we swap rows k and i, so that after the pivoting $|a_{kk}| = \max_{k\le i\le n}|a_{ik}|$ holds.

Complete pivoting: in step k of the first phase, we choose an entry (i, j) such that

$$|a_{ij}| = \max\{|a_{ts}| : k\le t,s\le n\}.$$

Then we swap rows k and i, and columns k and j, so that after the pivoting $|a_{kk}| = \max_{k\le i,j\le n}|a_{ij}|$ holds.

In this case we also swap the corresponding variables.

In Gauss elimination with pivoting, we perform pivoting at each step of the first phase.

Complete pivoting is considered a safe but expensive strategy.

Partial pivoting is also considered safe, and it is less costly, provided that some extra technique (for example, iterative improvement) is added.

In practice the latter is preferred.
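Partial pivoting can be added to the elimination with a few lines. The Python sketch below (hypothetical name `gauss_partial_pivoting`) swaps the row with the largest $|a_{ik}|$ into position k before each step. It solves Problem 24 without a manual swap, and it also handles the system of Example 23, which we read as $4x_2 + x_3 = 9$, $x_1 + x_2 + 3x_3 = 6$, $2x_1 - 2x_2 + x_3 = -1$ (exact solution (1, 2, 1)).

```python
def gauss_partial_pivoting(A, b):
    """Gauss method with partial pivoting (sketch, works on float copies)."""
    n = len(b)
    A = [list(map(float, row)) for row in A]
    b = list(map(float, b))
    for k in range(n - 1):
        # choose the row p >= k with the largest |a_pk| and swap it into row k
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        if A[p][k] == 0.0:
            raise ValueError("the matrix is singular")
        A[k], A[p] = A[p], A[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            lam = A[i][k] / A[k][k]
            for j in range(k + 1, n):
                A[i][j] -= lam * A[k][j]
            b[i] -= lam * b[k]
    if A[n - 1][n - 1] == 0.0:
        raise ValueError("the matrix is singular")
    x = [0.0] * n
    for i in range(n - 1, -1, -1):       # backward substitution
        s = sum(A[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / A[i][i]
    return x


print(gauss_partial_pivoting([[1e-17, 1.0], [1.0, 1.0]], [1.0, 2.0]))       # [1.0, 1.0]
print(gauss_partial_pivoting([[0, 4, 1], [1, 1, 3], [2, -2, 1]], [9, 6, -1]))  # [1.0, 2.0, 1.0]
```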

The LU-decomposition

The first phase of the Gauss method produces an upper triangular matrix. We look for the connection between A and this upper triangular matrix.

We use the following facts:

(i) The inverse of a triangular matrix is also triangular (of the same type);

(ii) The product of two triangular matrices of the same type is also triangular of the same type.

Definition 25 The LU-decomposition of a matrix $A\in\mathbb{C}^{n\times n}$ is a representation of the matrix in the product form A = LU, where $L\in\mathbb{C}^{n\times n}$ is a lower triangular matrix and $U\in\mathbb{C}^{n\times n}$ is an upper triangular matrix.

Lemma 26 If a nonsingular matrix A has two LU-decompositions $A = L_1U_1$ and $A = L_2U_2$, then there exists a diagonal matrix D such that $L_1 = L_2D$ and $U_1 = D^{-1}U_2$.

Proof. Assume that we have two LU-decompositions $A = L_1U_1 = L_2U_2$. Since A is nonsingular, all four factors are nonsingular, which implies $L_2^{-1}L_1 = U_2U_1^{-1}$. The left-hand matrix is lower triangular, while the right-hand matrix is upper triangular. Hence both must be diagonal, that is, $L_2^{-1}L_1 = U_2U_1^{-1} = D$, which implies the claim.

Corollary 27 If L is unit lower triangular (each entry of its main diagonal is 1), then the LU-decomposition of a nonsingular matrix is unique.

Let

$$A^{(r)} = \begin{bmatrix} a_{11} & \cdots & a_{1r}\\ \vdots & \ddots & \vdots\\ a_{r1} & \cdots & a_{rr} \end{bmatrix}\quad (r = 1,\dots,n-1)$$

be the leading principal submatrices of A.

Theorem 28 (Turing). A nonsingular matrix $A\in\mathbb{C}^{n\times n}$ has an LU-decomposition if and only if

$$\det A^{(r)}\neq 0\quad (r = 1,\dots,n-1). \tag{2.14}$$

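There are nonsingular matrices that have no LU-decomposition.

Example 29 The matrix

$$\begin{bmatrix} 0 & 1\\ 1 & 0 \end{bmatrix}$$

has no LU-decomposition.

Assume the contrary. Since the matrix is nonsingular, the diagonal entries of L are nonzero, so after a diagonal rescaling of L and U we may assume that L is unit lower triangular:

$$\begin{bmatrix} 0 & 1\\ 1 & 0 \end{bmatrix} = \begin{bmatrix} 1 & 0\\ l & 1 \end{bmatrix}\begin{bmatrix} a & b\\ 0 & c \end{bmatrix} = \begin{bmatrix} a & b\\ al & lb + c \end{bmatrix}.$$

Then a = 0, b = 1, and $1 = al = 0\cdot l = 0$, which is impossible.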

Definition 30 A matrix $P\in\mathbb{R}^{n\times n}$ is a permutation matrix if it has exactly one entry 1 in each row and each column, while its other entries are 0.

Example 31

$$\begin{bmatrix} 1 & 0 & 0 & 0\\ 0 & 0 & 1 & 0\\ 0 & 0 & 0 & 1\\ 0 & 1 & 0 & 0 \end{bmatrix}.$$

Let $e_i = [0,\dots,0,1,0,\dots,0]^T\in\mathbb{R}^n$ be the i-th unit vector. Any permutation matrix can be written in the form

$$P = \begin{bmatrix} e_{i_1}^T\\ e_{i_2}^T\\ \vdots\\ e_{i_n}^T \end{bmatrix},$$

where $i_1, i_2, \dots, i_n$ is a permutation of the numbers $1, 2, \dots, n$.

For the above example, $P = [e_1, e_3, e_4, e_2]^T$.

Consider the product of a matrix A by a permutation matrix P. Using $e_i^TA = a_i^T$, one obtains

$$PA = \begin{bmatrix} e_{i_1}^T\\ e_{i_2}^T\\ \vdots\\ e_{i_n}^T \end{bmatrix}A = \begin{bmatrix} e_{i_1}^TA\\ e_{i_2}^TA\\ \vdots\\ e_{i_n}^TA \end{bmatrix} = \begin{bmatrix} a_{i_1}^T\\ a_{i_2}^T\\ \vdots\\ a_{i_n}^T \end{bmatrix}.$$

It follows that the product PA permutes the rows of A.

Let P be partitioned column-wise, that is,

$$P = [e_{j_1},\dots,e_{j_n}],$$

where $j_1, j_2, \dots, j_n$ is a permutation of the numbers $1, 2, \dots, n$. Also assume that A is partitioned column-wise, that is, $A = [a_1,\dots,a_n]$ ($a_i\in\mathbb{C}^n$). Since $Ae_i = a_i$, we have

$$AP = A[e_{j_1},\dots,e_{j_n}] = [Ae_{j_1},\dots,Ae_{j_n}] = [a_{j_1},\dots,a_{j_n}],$$

so the product AP permutes the columns of A.

For an arbitrary permutation matrix P, $P^TP = I$.
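These identities are easy to check numerically. In the Python sketch below the helper names are hypothetical; `permutation_matrix` builds P from the 1-based list $i_1,\dots,i_n$, and the example uses the matrix of Example 31.

```python
def permutation_matrix(idx):
    """P whose rows are e_{i1}^T, ..., e_{in}^T for a 1-based index list idx."""
    n = len(idx)
    return [[1 if col == i - 1 else 0 for col in range(n)] for i in idx]


def matmul(X, Y):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(X[r][t] * Y[t][c] for t in range(len(Y)))
             for c in range(len(Y[0]))] for r in range(len(X))]


def transpose(X):
    return [list(col) for col in zip(*X)]


P = permutation_matrix([1, 3, 4, 2])             # the matrix of Example 31
A = [[11, 12], [21, 22], [31, 32], [41, 42]]     # row i of A is [i1, i2]
print(matmul(P, A))                 # rows 1, 3, 4, 2 of A
print(matmul([[1, 2, 3, 4]], P))    # columns reordered as 1, 4, 2, 3
print(matmul(transpose(P), P))      # the 4 x 4 identity
```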

In the k-th step of the first phase of the Gauss method with partial pivoting we swap rows k and i (i > k). This operation is equivalent to replacing the system $A^{(k-1)}x = b^{(k-1)}$ by $P_kA^{(k-1)}x = P_kb^{(k-1)}$, where

$$P_k = \begin{bmatrix} e_1^T\\ \vdots\\ e_{k-1}^T\\ e_i^T\\ e_{k+1}^T\\ \vdots\\ e_{i-1}^T\\ e_k^T\\ e_{i+1}^T\\ \vdots\\ e_n^T \end{bmatrix}\in\mathbb{R}^{n\times n},$$

and $A^{(k-1)}$, $b^{(k-1)}$ denote the system data after step k − 1.

Note that row k of $P_k$ is $e_i^T$, and row i is $e_k^T$.

Complete pivoting corresponds to the operation

$$P_kA^{(k-1)}Q_kQ_k^Tx = P_kb^{(k-1)},$$

where $Q_k$ is the permutation matrix that executes the column swap.

Theorem 32 If the $n\times n$ matrix A is nonsingular, then there exists a permutation matrix P such that the matrix PA has an LU-decomposition.

The LU-decomposition and the Gauss method

The first phase of the (plain) Gauss method produces the LU-decomposition of A, more precisely the equivalent linear system

$$Ux = L^{-1}b. \tag{2.15}$$

The lower triangular matrix L of the LU-decomposition can be obtained as (blank entries are zero)

$$L = \begin{bmatrix} 1 & & & & & \\ a_{21}/a_{11} & 1 & & & & \\ a_{31}/a_{11} & \ddots & \ddots & & & \\ \vdots & & a_{k+1,k}/a_{kk} & \ddots & & \\ \vdots & & \vdots & \ddots & 1 & \\ a_{n1}/a_{11} & \cdots & a_{nk}/a_{kk} & \cdots & a_{n,n-1}/a_{n-1,n-1} & 1 \end{bmatrix},$$

where the entries of column k are computed in step k of phase I (with the values of $a_{ik}$ and $a_{kk}$ current at that step). It is common to write the elements

$$a_{k+1,k}/a_{kk},\;\dots,\;a_{nk}/a_{kk}$$

into the coefficient matrix A below the main diagonal.

This does not affect the elimination phase. Also note that these numbers are exactly the multipliers used in the elimination step.

Hence the Gauss method is based on the LU-decomposition and, in fact, it essentially produces it.

For the partial pivoting case, the Gauss method gives the LU-decomposition of a matrix PA, where P is a permutation matrix that can be obtained from the procedure.

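For complete pivoting we proceed in the same way and obtain the LU-decomposition of PAQ, where the permutation matrix Q collects the column exchanges. If the multipliers are stored below the main diagonal, every row exchange must also be applied to these stored entries under the main diagonal.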
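A compact Python sketch of this bookkeeping, under the assumption of partial pivoting: the multipliers are written below the main diagonal and U on and above it, and a permutation vector records P. The names `lu_partial_pivoting` and `unpack` are hypothetical; MATLAB's [L,U,P]=lu(A) computes the same kind of factorization PA = LU. The example matrix is the coefficient matrix of Example 23.

```python
def lu_partial_pivoting(A):
    """Compact LU-decomposition PA = LU by the Gauss method with partial pivoting.

    Returns (perm, LU): row r of PA is row perm[r] (0-based) of A; LU holds
    the multipliers below the main diagonal (the unit diagonal of L is not
    stored) and U on and above it.
    """
    n = len(A)
    LU = [list(map(float, row)) for row in A]
    perm = list(range(n))
    for k in range(n - 1):
        p = max(range(k, n), key=lambda i: abs(LU[i][k]))
        if LU[p][k] == 0.0:
            raise ValueError("the matrix is singular")
        # swapping whole rows also swaps the multipliers already stored there
        LU[k], LU[p] = LU[p], LU[k]
        perm[k], perm[p] = perm[p], perm[k]
        for i in range(k + 1, n):
            LU[i][k] /= LU[k][k]                 # multiplier a_ik / a_kk
            for j in range(k + 1, n):
                LU[i][j] -= LU[i][k] * LU[k][j]
    return perm, LU


def unpack(LU):
    """Split the compact storage into L (unit lower) and U (upper)."""
    n = len(LU)
    L = [[LU[i][j] if j < i else float(i == j) for j in range(n)] for i in range(n)]
    U = [[LU[i][j] if j >= i else 0.0 for j in range(n)] for i in range(n)]
    return L, U


A = [[0, 4, 1], [1, 1, 3], [2, -2, 1]]
perm, LU = lu_partial_pivoting(A)
L, U = unpack(LU)
print(perm)   # [2, 0, 1]
print(L)      # [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 1.0]]
print(U)      # [[2.0, -2.0, 1.0], [0.0, 4.0, 1.0], [0.0, 0.0, 2.0]]
```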

The Gauss method on band matrices

Definition 33 A matrix $A\in\mathbb{R}^{n\times n}$ is called a band matrix with lower bandwidth p and upper bandwidth q if

$$a_{ij} = 0\quad\text{for } i > j + p \text{ and for } j > i + q.$$

Those elements $a_{ij}$ of A are (considered) nonzero for which

$$i - p\le j\le i + q,$$

or equivalently

$$j - q\le i\le j + p:$$

$$A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1,1+q} & & 0\\ a_{21} & a_{22} & & & \ddots & \\ \vdots & & \ddots & & & a_{n-q,n}\\ a_{1+p,1} & & & \ddots & & \vdots\\ & \ddots & & & \ddots & a_{n-1,n}\\ 0 & & a_{n,n-p} & \cdots & a_{n,n-1} & a_{nn} \end{bmatrix}.$$

Band matrices are important when $p$ and $q$ are much smaller than $n$.

On band matrices the Gauss method has a special form. Here the $k$th step of phase 1 is executed on a system $Ax = b$ with a banded coefficient matrix.

[Figure: the system $Ax = b$ with a band matrix $A$.]

Clearly, the entries under $a_{kk}$ need to be eliminated only in rows $i = k+1, \dots, \min(k+p, n)$. Likewise, in these "active" rows the new coefficients $a_{ij}$ need to be computed only up to column $\min(k+q, n)$.
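Here is a minimal Python sketch of the band version of the Gauss method without pivoting. The function name is hypothetical. For readability $A$ is kept in a full $n\times n$ array, and only the loop bounds exploit the band structure: rows up to $k+p$ in phase 1, columns up to $k+q$, and at most $q$ terms per row in back substitution. A memory-saving version would also store only the band.

```python
def band_gauss_solve(A, b, p, q):
    """Solve Ax = b for a band matrix A (lower bandwidth p, upper bandwidth q)
    by the Gauss method without pivoting. A is stored densely; only the loop
    bounds use the band structure."""
    n = len(A)
    A = [[float(v) for v in row] for row in A]   # work on copies
    b = [float(v) for v in b]
    # phase 1: eliminate only in rows k+1..min(k+p, n-1) (0-based)
    # and update only columns k+1..min(k+q, n-1)
    for k in range(n - 1):
        for i in range(k + 1, min(k + p, n - 1) + 1):
            m = A[i][k] / A[k][k]
            A[i][k] = 0.0
            for j in range(k + 1, min(k + q, n - 1) + 1):
                A[i][j] -= m * A[k][j]
            b[i] -= m * b[k]
    # phase 2: U has upper bandwidth q
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i]
        for j in range(i + 1, min(i + q, n - 1) + 1):
            s -= A[i][j] * x[j]
        x[i] = s / A[i][i]
    return x
```

The work is about $npq$ operations instead of about $n^3/3$, which is why band matrices with $p, q \ll n$ are attractive. The sketch assumes that no pivoting is needed, for example for diagonally dominant matrices.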

The second phase must also be modified accordingly. Here the upper triangular matrix has upper bandwidth $q$, so each step of the back substitution involves at most $q$ previously computed unknowns.

It follows that the LU-decomposition of $A$ is also special.

Theorem 34 Let $A \in \mathbb{R}^{n\times n}$ be a band matrix with lower bandwidth $p$ and upper bandwidth $q$. Assume that $A$ has an LU-decomposition $A = LU$. Then $L$ is a band matrix with lower bandwidth $p$, and $U$ is a band matrix with upper bandwidth $q$.

It follows that we can store $L$ and $U$ in place of $A$.

If pivoting is needed on a band matrix, the bandwidth grows. With partial pivoting the upper bandwidth of $U$ can increase to $p + q$, so it doubles when $p = q$.

Band matrices arise in discretization methods, spline interpolation, etc.

Consider the following linear system with a tridiagonal matrix:

$$\begin{aligned}
b_1x_1 + c_1x_2 &= d_1\\
a_2x_1 + b_2x_2 + c_2x_3 &= d_2\\
a_3x_2 + b_3x_3 + c_3x_4 &= d_3\\
&\ \ \vdots\\
a_{n-1}x_{n-2} + b_{n-1}x_{n-1} + c_{n-1}x_n &= d_{n-1}\\
a_nx_{n-1} + b_nx_n &= d_n
\end{aligned}$$

Here we store the data in four vectors $a$, $b$, $c$ and $d$ instead of an $n\times n$ matrix, which saves memory.

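Assume that after the first $k-1$ steps of phase 1, rows $k-1$ and $k$ have the form

$$\begin{aligned}
x_{k-1} + e_{k-1}x_k &= g_{k-1},\\
a_kx_{k-1} + b_kx_k + c_kx_{k+1} &= d_k.
\end{aligned}$$

Subtracting $a_k$ times the first equation from the second gives

$$(b_k - a_ke_{k-1})x_k + c_kx_{k+1} = d_k - a_kg_{k-1},$$

and, after dividing by $b_k - a_ke_{k-1}$,

$$x_k + \underbrace{\frac{c_k}{b_k - a_ke_{k-1}}}_{e_k}\,x_{k+1} = \underbrace{\frac{d_k - a_kg_{k-1}}{b_k - a_ke_{k-1}}}_{g_k}.$$

Hence the tridiagonal Gauss method has the form

$$e_1 = \frac{c_1}{b_1}, \qquad e_k = \frac{c_k}{b_k - a_ke_{k-1}} \quad (k = 2, \dots, n-1),$$

$$g_1 = \frac{d_1}{b_1}, \qquad g_k = \frac{d_k - a_kg_{k-1}}{b_k - a_ke_{k-1}} \quad (k = 2, \dots, n-1, n),$$

$$x_n = g_n, \qquad x_k = g_k - e_kx_{k+1} \quad (k = n-1, n-2, \dots, 1).$$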

Matlab programs: testlin6.m, gtri.m.
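The recursion for $e_k$, $g_k$ and the back substitution can be sketched in Python as follows. This is a hypothetical stand-in for gtri.m, whose source is not shown here. The four input lists follow the text, with 0-based indexing: `a[0]` and `c[n-1]` are unused. Like the formulas, the sketch does no pivoting and assumes that every denominator $b_k - a_ke_{k-1}$ is nonzero.

```python
def tridiag_solve(a, b, c, d):
    """Tridiagonal Gauss method: a = subdiagonal (a[0] unused), b = diagonal,
    c = superdiagonal (c[n-1] unused), d = right-hand side (0-based lists)."""
    n = len(b)
    e = [0.0] * n
    g = [0.0] * n
    if n > 1:
        e[0] = c[0] / b[0]
    g[0] = d[0] / b[0]
    for k in range(1, n):
        denom = b[k] - a[k] * e[k - 1]        # b_k - a_k e_{k-1}
        if k < n - 1:
            e[k] = c[k] / denom
        g[k] = (d[k] - a[k] * g[k - 1]) / denom
    # back substitution: x_n = g_n, x_k = g_k - e_k x_{k+1}
    x = [0.0] * n
    x[n - 1] = g[n - 1]
    for k in range(n - 2, -1, -1):
        x[k] = g[k] - e[k] * x[k + 1]
    return x
```

The cost is $O(n)$ operations and storage. If memory is tight, $e$ and $g$ may overwrite $c$ and $d$.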

Sparse matrices

Definition 35 (heuristic): A matrix $A \in \mathbb{R}^{n\times n}$ is said to be sparse if the number nnz of its nonzero elements is essentially smaller than $n^2$.

By "essentially smaller" we mean that $\mathrm{nnz} = O(n)$, or at most $O(n^{1+\varepsilon})$, where $0 < \varepsilon < 1$ and $\varepsilon$ is close to $0$.

Band matrices are also sparse, but their nonzero elements follow a regular sparsity pattern. By sparsity pattern we mean the geometric distribution of the nonzero elements in the matrix.

Large sparse matrices with irregularly distributed nonzero elements cause serious computational problems.

The first problem is how to store a large sparse matrix efficiently.

The second problem is that standard matrix operations on sparse matrices often produce matrices whose sparsity pattern and number of nonzero elements differ from those of the original. The result may even become dense.

Example 36 Consider the first phase of the Gauss method on the matrix

[Sparsity pattern of $A$, 28 nonzero elements (figure not reproduced).]

that contains 28 nonzero elements. Assume that the Gauss method can be executed. The first step of phase 1 makes three elements zero, and the resulting matrix has 31 nonzero elements:

[Sparsity pattern after the first elimination step (figure not reproduced).]

Thus 6 new nonzero elements appear ($28 - 3 + 6 = 31$).

The upper triangular matrix $U$ obtained at the end of phase 1 looks like

[Sparsity pattern of $U$ (figure not reproduced).]

and it has 7 new nonzero elements with respect to the original upper triangular part.

This phenomenon is called fill-in.

Matlab demo program: testlin7.m.
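The pattern of Example 36 is not reproduced here, so the following Python sketch uses a hypothetical "arrowhead" matrix instead. The matrix has diagonal entries $n$ and one full row and column of ones. The sketch runs phase 1 of the Gauss method without pivoting, keeps the multipliers under the diagonal, and counts nonzeros. When the full row and column come first, one elimination step fills the whole matrix. When they come last, there is no fill-in at all. This shows that the ordering of the unknowns can decide the amount of fill-in.

```python
def gauss_phase1(A):
    """Phase 1 of the Gauss method without pivoting; multipliers are stored
    below the diagonal, so the result holds L (strict lower part) and U."""
    n = len(A)
    M = [[float(v) for v in row] for row in A]
    for k in range(n - 1):
        for i in range(k + 1, n):
            if M[i][k] != 0.0:                 # nothing to eliminate otherwise
                m = M[i][k] / M[k][k]
                M[i][k] = m
                for j in range(k + 1, n):
                    M[i][j] -= m * M[k][j]
    return M


def nnz(M):
    return sum(1 for row in M for v in row if v != 0.0)


def arrowhead(n, dense_first=True):
    """Hypothetical test matrix: diagonal n plus one full row and column of ones."""
    A = [[float(n) if i == j else 0.0 for j in range(n)] for i in range(n)]
    r = 0 if dense_first else n - 1
    for j in range(n):
        if j != r:
            A[r][j] = A[j][r] = 1.0
    return A


for first in (True, False):
    A = arrowhead(6, first)
    print(first, nnz(A), nnz(gauss_phase1(A)))   # original vs. after phase 1
```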

The three row or coordinate-wise method

This is a widely used method. We store the nonzero elements and their row and column indices in three vectors of length nnz, ordered either column-wise or row-wise.

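Example 37 Consider

$$A = \begin{bmatrix}
5 & 0 & 0 & 3 & 0\\
2 & 4 & 0 & 0 & 1\\
0 & 1 & 3 & 0 & 2\\
0 & 0 & 0 & 2 & 3\\
0 & 0 & 0 & 2 & 1
\end{bmatrix} \qquad (n = 5,\ \mathrm{nnz} = 12).$$

The corresponding (column-wise) three row representation is the following:

$$\begin{array}{l|cccccccccccc}
\texttt{val} & 5 & 2 & 4 & 1 & 3 & 3 & 2 & 2 & 1 & 2 & 3 & 1\\
\texttt{row\_ind} & 1 & 2 & 2 & 3 & 3 & 1 & 4 & 5 & 2 & 3 & 4 & 5\\
\texttt{col\_ind} & 1 & 1 & 2 & 2 & 3 & 4 & 4 & 4 & 5 & 5 & 5 & 5
\end{array}$$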

Storing the nonzero data in column-wise or row-wise order is not a real requirement. It is common to store the nonzero elements in "random" order and to treat the three vectors as a list data structure. In fact, it is easy to insert new elements at the end of the list. The matrix-vector operations then become essentially list operations.
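A minimal Python sketch of the three row storage for Example 37 follows, with the values as printed in the table above and the text's 1-based indices. The helper names are hypothetical. The matrix-vector product is a single pass over the list, so the order of the entries does not matter. Inserting a new nonzero element just means appending to the three lists. In this sketch, repeated $(i, j)$ pairs simply add up.

```python
val     = [5, 2, 4, 1, 3, 3, 2, 2, 1, 2, 3, 1]
row_ind = [1, 2, 2, 3, 3, 1, 4, 5, 2, 3, 4, 5]
col_ind = [1, 1, 2, 2, 3, 4, 4, 4, 5, 5, 5, 5]


def coo_matvec(n, val, row_ind, col_ind, x):
    """y = A x computed by one pass over the triplet list (any order)."""
    y = [0] * n
    for v, i, j in zip(val, row_ind, col_ind):
        y[i - 1] += v * x[j - 1]            # 1-based indices as in the text
    return y


def coo_to_dense(n, val, row_ind, col_ind):
    A = [[0] * n for _ in range(n)]
    for v, i, j in zip(val, row_ind, col_ind):
        A[i - 1][j - 1] += v
    return A


print(coo_matvec(5, val, row_ind, col_ind, [1, 1, 1, 1, 1]))  # [8, 7, 6, 5, 3]
```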

Some other sparse matrix storage forms:

- CRS = Compressed Row Storage,

- CCS = Compressed Column Storage,

- SKS = Skyline Storage.
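The text only names these formats. The following Python sketch converts the triplets of Example 37 into one common CRS layout. The layout is an assumption of this sketch: a value array, 0-based column indices, and a row pointer array of length $n+1$ in which row $r$ occupies positions `row_ptr[r]` to `row_ptr[r+1]-1`. The function names are hypothetical.

```python
val     = [5, 2, 4, 1, 3, 3, 2, 2, 1, 2, 3, 1]
row_ind = [1, 2, 2, 3, 3, 1, 4, 5, 2, 3, 4, 5]
col_ind = [1, 1, 2, 2, 3, 4, 4, 4, 5, 5, 5, 5]


def coo_to_crs(n, val, row_ind, col_ind):
    """Convert 1-based triplets to CRS (values, col_idx, row_ptr), 0-based."""
    counts = [0] * n
    for i in row_ind:
        counts[i - 1] += 1
    row_ptr = [0] * (n + 1)
    for r in range(n):
        row_ptr[r + 1] = row_ptr[r] + counts[r]
    nxt = row_ptr[:n]                  # next free slot in each row (a copy)
    values = [0] * len(val)
    col_idx = [0] * len(val)
    for v, i, j in zip(val, row_ind, col_ind):
        pos = nxt[i - 1]
        values[pos], col_idx[pos] = v, j - 1
        nxt[i - 1] += 1
    return values, col_idx, row_ptr


def crs_matvec(values, col_idx, row_ptr, x):
    n = len(row_ptr) - 1
    return [sum(values[k] * x[col_idx[k]] for k in range(row_ptr[r], row_ptr[r + 1]))
            for r in range(n)]


values, col_idx, row_ptr = coo_to_crs(5, val, row_ind, col_ind)
print(row_ptr)   # [0, 2, 5, 8, 10, 12]
```

CRS replaces the nnz row indices with $n+1$ pointers, which saves memory when $\mathrm{nnz} > n$. It also makes the row-by-row product cheap. The price is that inserting a new element is no longer a simple append.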


Sparse matrices in MATLAB

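Algorithms and functions handling sparse matrices can be found in the SPARFUN library. At the user level MATLAB works with the three row representation of sparse matrices, for example in SPARSE and FIND. Internally, sparse matrices are stored in compressed column form. Sparsity is an attribute of the data: a matrix is stored either in sparse or in full (dense) form.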

Sparse matrices can be defined by the command SPARSE, which can be called in several ways. The three most important ways to create a sparse matrix are:

1. S = SPARSE(X) converts a dense matrix into sparse form.

2. S = SPARSE(i, j, s, m, n, nzmax) defines an $m\times n$ sparse matrix with space allocated for nzmax nonzero elements. The nonzero elements are given in three row form by the rows of the matrix [i, j, s], where i, j and s are vectors of the same length.

3. S = SPDIAGS(A, d, m, n) defines an $m\times n$ sparse matrix formed from diagonals.

Sparse matrices can be converted to dense form by the command FULL, in the form A = FULL(X).

The command FIND gives the nonzero elements of a matrix (their indices and values).

The command NNZ gives the number of nonzero elements.

The command SPY plots the geometry of the nonzero elements (the sparsity pattern).

Useful commands are SPEYE and SPRAND, which generate identity and random matrices stored in sparse form, respectively.

MATLAB executes sparse matrix operations automatically. The general rules are the following:

- The results of operations with dense matrices are dense.

- The results of operations with sparse matrices are sparse.

- The results of operations with mixed sparse and dense matrices are dense, except for the cases when the result is expected to be sparse.

The sparse technique used in MATLAB makes it possible to apply our programs to sparse matrices without any modification or rewriting. For example, we can apply our Gauss program(s) to sparse matrices. The only requirement is that $A$ be declared as a sparse matrix: if $A$ is sparse, then MATLAB performs the computations in sparse form.

Several built-in functions give sparse output for sparse input, and the built-in linear solver (the backslash operator) also handles sparse matrices automatically.

Chapter 3

Approximate solution of nonlinear equations

We consider equations of the form

$$f(x) = 0 \qquad (f: \mathbb{R}^n \to \mathbb{R}^n,\ n \ge 1). \qquad (3.1)$$

A vector $x^* \in \mathbb{R}^n$ is a solution (root, zero) of the equation if

$$f(x^*) = 0.$$

If $n \ge 2$, we speak of a system of equations.

We always assume that $f(x)$ is continuous.

Each of the methods discussed below for solving $f(x) = 0$ produces a sequence $\{x_i\}_{i=0}^\infty$ that, under suitable conditions, converges to a solution $x^*$.

Definition 38 The sequence $\{x_i\}_{i=0}^\infty \subset \mathbb{R}^n$ $(n \ge 1)$ converges to $x^* \in \mathbb{R}^n$ with linear speed if there exists a constant $0 \le q < 1$ such that $\|x_i - x^*\| \le q\|x_{i-1} - x^*\|$ $(i \ge 1)$.

In the case of linear convergence we have

$$\|x_i - x^*\| \le q\|x_{i-1} - x^*\| \le q^2\|x_{i-2} - x^*\| \le \dots \le q^i\|x_0 - x^*\|. \qquad (3.2)$$

Thus the errors of the iterates $x_i$ can be bounded by the terms of a geometric sequence that tends to 0.


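Definition 39 The sequence $\{x_i\}_{i=0}^\infty \subset \mathbb{R}^n$ $(n \ge 1)$ converges to $x^* \in \mathbb{R}^n$ with order $p$ $(p > 1)$ if there exists a constant $\alpha > 0$ such that $\|x_i - x^*\| \le \alpha\|x_{i-1} - x^*\|^p$ $(i \ge 1)$.

Convergence of order $p > 1$ is faster than linear convergence, which formally corresponds to $p = 1$. We can prove by induction that

$$\|x_i - x^*\| \le \frac{1}{\alpha^{1/(p-1)}}\left(\alpha^{1/(p-1)}\|x_0 - x^*\|\right)^{p^i} \qquad (i \ge 1). \qquad (3.3)$$

Indeed, $u_i = \alpha^{1/(p-1)}\|x_i - x^*\|$ satisfies $u_i \le u_{i-1}^p$. If $q = \alpha^{1/(p-1)}\|x_0 - x^*\| < 1$, then the errors of the approximations $x_i$ can be bounded by the terms of the sequence $cq^{p^i}$ $(c = 1/\alpha^{1/(p-1)})$, which tends to 0.

This bound clearly converges to 0 faster than $cq^i$.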
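The order $p$ can be estimated heuristically from computed errors. If $e_{i+1} \approx \alpha e_i^p$ holds asymptotically, then $\log(e_{i+1}/e_i) \approx p\log(e_i/e_{i-1})$. The following Python sketch with a hypothetical function name applies this to synthetic error sequences. In practice $x^*$ is unknown, and one may use $\|x_{i+1} - x_i\|$ as a proxy for $e_i$, which is an additional heuristic.

```python
import math


def estimated_orders(errors):
    """Estimate the convergence order p from consecutive errors e_i,
    assuming e_{i+1} ~ alpha * e_i**p, i.e. log(e_{i+1}/e_i) ~ p log(e_i/e_{i-1})."""
    return [math.log(errors[i + 1] / errors[i]) / math.log(errors[i] / errors[i - 1])
            for i in range(1, len(errors) - 1)]


linear = [0.5 ** i for i in range(1, 7)]    # e_i = q^i with q = 1/2
quadratic = [1e-1, 1e-2, 1e-4, 1e-8]        # e_i = 0.1**(2**i)
print(estimated_orders(linear))             # values close to 1
print(estimated_orders(quadratic))          # values close to 2
```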

The bisection algorithm

Theorem 40 (Bolzano) Assume that $f: \mathbb{R} \to \mathbb{R}$ is continuous on $[a, b]$ and

$$f(a)f(b) < 0. \qquad (3.4)$$

Then the equation $f(x) = 0$ has at least one zero $x^* \in (a, b)$.

Bolzano proved the theorem by the following "algorithm": $[a_1, b_1] = [a, b]$,

$$c_i = (a_i + b_i)/2, \qquad [a_{i+1}, b_{i+1}] = \begin{cases} [a_i, c_i], & \text{if } f(a_i)f(c_i) < 0,\\ [c_i, b_i], & \text{otherwise}, \end{cases} \qquad (i = 1, 2, \dots).$$

It is clear that for $i \ge 1$,

$$x^* \in [a_{i+1}, b_{i+1}] \subset [a_i, b_i].$$

We can approximate the zero $x^*$ by any point $y$ of the interval $[a_i, b_i]$. For the error of the approximate zero $y$ we have

$$|x^* - y| \le \max\{y - a_i,\ b_i - y\}. \qquad (3.5)$$

The bound $\max\{y - a_i, b_i - y\}$ is the smallest if $y = (a_i + b_i)/2$.

Thus, in general, we use $x_i = (a_i + b_i)/2$ as an approximation of the solution. It is clear that

$$|x^* - x_i| \le \frac{b_i - a_i}{2} = \frac{b - a}{2^i} \qquad (i = 1, 2, \dots). \qquad (3.6)$$

We stop the algorithm when this error bound is less than a given tolerance $\varepsilon > 0$.

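Bisection algorithm:

input $[a, b]$, $\varepsilon > 0$
while $b - a > \varepsilon$
    $x = (a + b)/2$
    if $f(a)f(x) < 0$
        $b = x$
    else
        $a = x$
    end
end
$x = (a + b)/2$

On exit, $|x^* - x| \le (b - a)/2 \le \varepsilon/2$.

Continuity of $f$ is essential. The sequence $\{x_i\}$ always converges, but its limit is guaranteed to be a zero of $f(x)$ only if $f$ is continuous.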
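The following Python sketch follows the bisection algorithm above. The function name is hypothetical and this is not the course's bisect.m. It caches $f(a)$ to save function evaluations. Unlike the pseudocode, it also returns immediately if it hits an exact zero, because otherwise the `else` branch would move $a$ past that zero. The sketch is applied to Example 41 below.

```python
import math


def bisect(f, a, b, eps):
    """Bisection: halve [a, b] while b - a > eps.

    Returns the final midpoint and the number of halvings performed;
    on exit |x* - x| <= (b - a)/2 <= eps/2.
    """
    fa = f(a)
    if fa * f(b) >= 0:
        raise ValueError("f(a) f(b) < 0 is required")
    steps = 0
    while b - a > eps:
        x = (a + b) / 2
        fx = f(x)
        if fx == 0.0:                  # exact zero hit: stop here
            return x, steps
        if fa * fx < 0:
            b = x
        else:
            a, fa = x, fx
        steps += 1
    return (a + b) / 2, steps


f = lambda x: 4 * (1 - x ** 2) - math.exp(x)   # Example 41
x1, n1 = bisect(f, -1.0, 0.0, 1e-6)
x2, n2 = bisect(f, 0.0, 1.0, 1e-6)
print(x1, n1, x2, n2)
```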

Example 41 Let $f(x) = 4(1 - x^2) - e^x$, and compute the zeros of $f(x) = 0$.

[Figure: graph of $y = 4(1 - x^2) - e^x$ for $x \in [-2, 2]$.]

This $f(x)$ has zeros in the intervals $[-1, 0]$ and $[0, 1]$, and in both intervals

$$f(-1)f(0) = -3e^{-1} < 0, \qquad f(0)f(1) = -3e < 0.$$

Hence we can apply the bisection method.

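For a given error bound $\varepsilon > 0$, the solution of the inequality

$$|x_i - x^*| \le \frac{b - a}{2^i} = \frac{1}{2^i} \le \varepsilon \qquad (3.7)$$

gives an upper bound for the number of iterations.

In the above case $\varepsilon = 10^{-6}$, and $i \ge -\log\varepsilon/\log 2 \approx 19.93$, so $i = 20$ iterations are enough ($x_1^* \approx -0.950452$, $x_2^* \approx 0.703439$).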

Matlab programs: bisect.m, fveq1.m

There exist higher-dimensional generalizations of the bisection method. But ....

Fixed point iteration

We consider the system of equations

$$x = g(x),$$

where $g: \mathbb{R}^n \to \mathbb{R}^n$ is continuous. Assume that $D \subset \mathbb{R}^n$ is a bounded, closed region such that the conditions

$$g(x) \in D \qquad (x \in D) \qquad (3.8)$$

and

$$\|g(x) - g(y)\| \le q\|x - y\| \qquad (x, y \in D;\ 0 \le q < 1) \qquad (3.9)$$

hold.

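Then for any $x_0 \in D$, the following fixed point iteration algorithm converges to the unique solution of the equation $x = g(x)$ in $D$.

The fixed point iteration algorithm:

Input $x_0$, $\varepsilon > 0$.
$i = 0$
while exit condition = false
    $x_{i+1} = g(x_i)$
    $i = i + 1$
end

The usable exit (termination or stopping) conditions are

$$\text{(B)}\quad \|x_{i+1} - x_i\| \le \varepsilon_2, \qquad (3.10)$$

$$\text{(C)}\quad i = i_{\max}.$$

It is common to combine the above termination criteria. Note, however, that neither of them by itself guarantees $\|x_{i+1} - x^*\| \le \varepsilon$. For a contraction, (B) implies $\|x_{i+1} - x^*\| \le \frac{q}{1-q}\varepsilon_2$, which is useful only if $q$ is known.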

Example 42 Solve the equation $f(x) = 4(1 - x^2) - e^x = 0$ in the interval $[0.68, 0.72]$ with precision $\varepsilon = 10^{-5}$.

Since the root is positive, we can write the equation in the form $x = g(x) = \sqrt{1 - e^x/4}$. In the interval $[0.68, 0.72]$

$$g'(x) = -\frac{e^x}{4\sqrt{4 - e^x}} < 0.$$

Hence $g(x)$ is strictly monotone decreasing, and

$$\max_{x\in[0.68, 0.72]}|g'(x)| = |g'(0.72)| \approx 0.3682$$

implies that $g$ is a contraction. Since

$$g(0.68) = \left[\sqrt{1 - e^x/4}\right]_{x=0.68} = 0.71171 \quad\text{and}\quad g(0.72) = \left[\sqrt{1 - e^x/4}\right]_{x=0.72} = 0.69742,$$

the function $g$ maps $[0.68, 0.72]$ into itself. Therefore the conditions of convergence are satisfied.

Set $x_0 = 0.70$. Using tol = 1e-6 and termination criteria (B) and (C), the result is 7.034394488411407e-001.

Matlab programs: fpit.m, fveq2.m
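The following Python sketch implements the scalar fixed point iteration with criteria (B) and (C) and applies it to Example 42. The function name is hypothetical and this is not the course's fpit.m. For systems, `abs` would be replaced by a vector norm. Because $q \approx 0.37$ here, criterion (B) with $\varepsilon_2 = 10^{-6}$ guarantees an error below about $0.6\cdot 10^{-6}$.

```python
import math


def fixed_point(g, x0, eps2, imax):
    """Scalar fixed point iteration x_{i+1} = g(x_i) with exit criteria
    (B) |x_{i+1} - x_i| <= eps2 and (C) i = imax."""
    x = x0
    for i in range(1, imax + 1):
        x_new = g(x)
        if abs(x_new - x) <= eps2:      # criterion (B)
            return x_new, i
        x = x_new
    return x, imax                      # criterion (C): iteration limit reached


g = lambda x: math.sqrt(1.0 - math.exp(x) / 4.0)   # Example 42
root, iters = fixed_point(g, 0.70, 1e-6, 100)
print(root, iters)
```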


Rewriting the equation $f(x) = 0$ in the form $x = g(x)$ is easy. However, it is difficult to guarantee the contractivity of $g$. In many cases the equivalent form

$$x = x - \lambda(x)f(x) \qquad (3.11)$$

is used, where $\lambda = \lambda(x) \neq 0$ is chosen such that $g(x) = x - \lambda(x)f(x)$ is a contraction.

In fact, the Newton method can also be interpreted this way.

The Newton method

Assume that $f: \mathbb{R} \to \mathbb{R}$ is continuously differentiable. The essential idea of the method is to take the tangent line to the graph of the function at the point $x_i$. The zero of this tangent line gives the approximation $x_{i+1}$ to the zero of $f(x)$.

The equation of the tangent line at the point $(x_i, f(x_i))$ is

$$y - f(x_i) = f'(x_i)(x - x_i). \qquad (3.12)$$

The solution of the equation $y = 0$ is

$$x_{i+1} = x_i - \frac{f(x_i)}{f'(x_i)}, \qquad (3.13)$$

provided that $f'(x_i) \neq 0$.

We can obtain this formula by a different argument. We linearize $f(x)$ at the point $x_i$, that is,

$$f(x) \approx f(x_i) + f'(x_i)(x - x_i). \qquad (3.14)$$

Then we replace the equation $f(x) = 0$ by the approximate equation $f(x_i) + f'(x_i)(x - x_i) = 0$, whose zero approximates the zero of $f(x) = 0$.

The Newton method:

Input an initial approximation $x_0 \in \mathbb{R}$.
for $i = 0, 1, \dots$
    $x_{i+1} = x_i - f(x_i)/f'(x_i)$    (3.15)
end

Note that Newton's method is a fixed point iteration applied to the equation

$$x = x - f(x)/f'(x),$$

that is, the form (3.11) with $\lambda(x) = 1/f'(x)$.

Theorem 43 Assume that $f: (a, b) \to \mathbb{R}$ is twice continuously differentiable, $|f''(x)| \le M$ and $|f'(x)| \ge m > 0$ $(x \in (a, b))$. If the equation $f(x) = 0$ has a zero $x^*$ in $(a, b)$, then there exists a number $\delta > 0$ such that for $|x_0 - x^*| < \delta$, $x_i \to x^*$ $(i \to +\infty)$ and

$$|x_{i+1} - x^*| \le \frac{M}{2m}|x_i - x^*|^2 \qquad (i = 0, 1, \dots). \qquad (3.16)$$

Proof. Set $\delta_1 = \min\{x^* - a,\ b - x^*\} > 0$. Consider the Taylor expansion

$$f(x^*) = f(x_i) + f'(x_i)(x^* - x_i) + \tfrac{1}{2}f''(\xi_i)(x^* - x_i)^2,$$

from which $f(x^*) = 0$ implies

$$-f(x_i) = f'(x_i)(x^* - x_i) + \tfrac{1}{2}f''(\xi_i)(x^* - x_i)^2.$$

Substituting into the recursion we get

$$x_{i+1} = x_i + (x^* - x_i) + \frac{1}{2}\frac{f''(\xi_i)}{f'(x_i)}(x_i - x^*)^2,$$

that is,

$$x_{i+1} - x^* = \frac{1}{2}\frac{f''(\xi_i)}{f'(x_i)}(x_i - x^*)^2.$$

The conditions $|f''(x)| \le M$ and $|f'(x)| \ge m > 0$ $(x \in (a, b))$ imply

$$|x_{i+1} - x^*| \le \frac{M}{2m}|x_i - x^*|^2,$$

provided that $x_i \in (a, b)$. If $|x_0 - x^*| < \delta = \min\{\delta_1, 2m/M\}$, then

$$|x_1 - x^*| \le \frac{M}{2m}|x_0 - x^*|\,|x_0 - x^*| < |x_0 - x^*| < \delta$$

implies $x_1 \in (x^* - \delta, x^* + \delta) \subset (a, b)$. Similarly we can prove that $x_i \in (a, b)$ and

$$|x_{i+1} - x^*| \le \frac{2m}{M}\left(\frac{M}{2m}|x_0 - x^*|\right)^{2^{i+1}} \qquad (i = 0, 1, \dots).$$

Hence for $|x_0 - x^*| < \delta$, the sequence $\{x_i\}_{i=0}^\infty$ converges with order $p = 2$.

The convergence of the Newton method is local: the theorem guarantees convergence only if the initial approximation $x_0$ is sufficiently close to the zero $x^*$.

The second order convergence of the Newton method can be characterized by the following fact.

Assume that $x^* \neq 0$. Then we have

$$\frac{|x_{i+1} - x^*|}{|x^*|} \le \frac{M|x^*|}{2m}\left(\frac{|x_i - x^*|}{|x^*|}\right)^2 \le \frac{M\max\{|a|, |b|\}}{2m}\left(\frac{|x_i - x^*|}{|x^*|}\right)^2. \qquad (3.17)$$

This means that, up to a constant factor, the relative error of the iterate $x_{i+1}$ is the square of the relative error of $x_i$. In other words, the number of correct digits roughly doubles in each step.

If the first two decimal digits are correct, then the iteration reaches the best available precision in 3–4 iterations in standard double precision floating point arithmetic.

Termination criteria: We would like to have an approximation satisfying

$$|x_i - x^*| \le \varepsilon. \qquad (3.18)$$

In general, we have only heuristic exit conditions:

$$\text{(A)}\ |f(x_i)| \le \varepsilon_1, \qquad \text{(B)}\ |x_{i+1} - x_i| \le \varepsilon_2, \qquad \text{(C)}\ i = i_{\max}. \qquad (3.19)$$

None of these conditions can guarantee the tolerance condition (3.18).

In practice a combination of the three conditions is preferred.
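The following Python sketch combines the exit conditions (A), (B) and (C) in the scalar Newton method (3.15). The function name and the tolerances are choices of this sketch, not the course's program. It is applied to the equation of Example 41 from two starting points.

```python
import math


def newton(f, df, x0, eps1, eps2, imax):
    """Scalar Newton method with the combined exit conditions (A), (B), (C)."""
    x = x0
    for i in range(imax):                       # (C): at most imax steps
        fx = f(x)
        if abs(fx) <= eps1:                     # (A)
            return x, i
        dfx = df(x)
        if dfx == 0.0:
            raise ZeroDivisionError("f'(x_i) = 0: the Newton step is undefined")
        x_new = x - fx / dfx                    # (3.15)
        if abs(x_new - x) <= eps2:              # (B)
            return x_new, i + 1
        x = x_new
    return x, imax


f = lambda x: 4 * (1 - x ** 2) - math.exp(x)    # Example 41
df = lambda x: -8 * x - math.exp(x)
r2, k2 = newton(f, df, 0.7, 1e-12, 1e-12, 50)
r1, k1 = newton(f, df, -1.0, 1e-12, 1e-12, 50)
print(r1, k1, r2, k2)
```

Criterion (A) alone can be misleading when $|f'|$ is small near the root, and (B) alone when the iterates stall. That is why the sketch checks both and keeps (C) as a safeguard.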

The square root algorithm

$$x_{k+1} = \frac{1}{2}\left(x_k + \frac{a}{x_k}\right) \qquad (k = 0, 1, \dots) \qquad (3.20)$$

is an application of the Newton method to the equation $f(x) = x^2 - a = 0$.
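Here is a small Python illustration of (3.20) with a hypothetical function name. From (3.20), $x_{k+1} - \sqrt{a} = (x_k - \sqrt{a})^2/(2x_k)$. Hence the relative errors $r_k = (x_k - \sqrt{a})/\sqrt{a}$ satisfy $r_{k+1} = r_k^2/(2(1 + r_k)) \le r_k^2/2$ whenever $x_k \ge \sqrt{a}$. The sketch checks this for $a = 2$, $x_0 = 2$, and also shows the monotone decrease predicted by Theorem 44 below.

```python
import math


def heron_sqrt(a, x0, steps):
    """Newton iterates (3.20) for x^2 - a = 0; returns [x_0, ..., x_steps]."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(0.5 * (x + a / x))
    return xs


xs = heron_sqrt(2.0, 2.0, 5)
s = math.sqrt(2.0)
rel = [(x - s) / s for x in xs]        # relative errors r_k
for x, r in zip(xs, rel):
    print(x, r)
```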

Theorem 44 (Fourier). Assume that $f \in C^2[a, b]$, $f'(x) \neq 0$ and $f''(x) \neq 0$ for $x \in [a, b]$. Assume that there exists a zero $x^* \in (a, b)$. If the point $x_0 \in [a, b]$ is such that $f(x_0)f''(x_0) > 0$, then the Newton method converges monotonically to the solution $x^*$.

The interval $[a, b]$ can be infinite. In the above case $f(x) = x^2 - a$, where $a > 0$ is the number whose square root we seek, and we work on the interval $(0, \infty)$. There $f'(x) = 2x \neq 0$ and $f''(x) = 2$. Hence $f(x_0)f''(x_0) > 0$ for $x_0 > \sqrt{a}$.

For such $x_0$, the Newton method is always convergent.

The Newton method in high dimension

The coordinate-wise form of the equation

$$f(x) = 0 \qquad (x \in \mathbb{R}^n)$$

is given by

$$\begin{aligned}
f_1(x_1, x_2, \dots, x_n) &= 0,\\
&\ \ \vdots\\
f_n(x_1, x_2, \dots, x_n) &= 0.
\end{aligned}$$

We linearize the component $f_k(x)$ at the point $x_i = [x_1^{(i)}, x_2^{(i)}, \dots, x_n^{(i)}]^T \in \mathbb{R}^n$ $(k = 1, \dots, n)$:

$$f_k(x_1, x_2, \dots, x_n) \approx f_k(x_1^{(i)}, x_2^{(i)}, \dots, x_n^{(i)}) + \sum_{l=1}^n \frac{\partial}{\partial x_l}f_k(x_1^{(i)}, x_2^{(i)}, \dots, x_n^{(i)})\left(x_l - x_l^{(i)}\right).$$

In compact form

$$\begin{aligned}
f_1(x) &\approx f_1(x_i) + \nabla f_1(x_i)^T(x - x_i),\\
&\ \ \vdots\\
f_n(x) &\approx f_n(x_i) + \nabla f_n(x_i)^T(x - x_i).
\end{aligned}$$

Instead of solving $f(x) = 0$, we seek the solution of the linear(ized) system of equations

$$\begin{aligned}
f_1(x_i) + \nabla f_1(x_i)^T(x - x_i) &= 0,\\
&\ \ \vdots\\
f_n(x_i) + \nabla f_n(x_i)^T(x - x_i) &= 0,
\end{aligned} \qquad (3.21)$$

which defines the new approximation $x_{i+1}$.

Observe that the equation $y = f_k(x_i) + \nabla f_k(x_i)^T(x - x_i)$ defines the tangent plane of the function $y = f_k(x)$ at the point $x_i$. The new iterate $x_{i+1}$ is the common point of the tangent planes on the hyperplane $y = 0$.

The matrix

$$J(x) = \left[\frac{\partial f_i(x)}{\partial x_j}\right]_{i,j=1}^n = \begin{bmatrix} \nabla f_1(x)^T\\ \vdots\\ \nabla f_n(x)^T \end{bmatrix} \qquad (3.22)$$

is called the Jacobian matrix of $f(x)$. Using $J(x)$ we can write the linear system in the form

$$f(x_i) + J(x_i)(x - x_i) = 0, \qquad (3.23)$$

whose solution is given by

$$x_{i+1} = x_i - [J(x_i)]^{-1}f(x_i) \qquad (i = 0, 1, \dots). \qquad (3.24)$$

This recursion is the general Newton method. In practice we never compute the inverse of the Jacobian matrix $J(x_i)$. Instead, we solve the linear system (3.23), for example by the Gauss method.

Newton method:

Input $x_0 \in \mathbb{R}^n$, $\varepsilon > 0$.
for $i = 0, 1, 2, \dots$
    Solve the linear system $J(x_i)\delta_i = -f(x_i)$
    $x_{i+1} = x_i + \delta_i$
end

The termination conditions are those of (3.19), with norms in place of absolute values, and their combinations. The convergence of the procedure is locally quadratic.
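A minimal Python sketch of this algorithm follows. The function names are hypothetical. Each step solves $J(x_i)\delta_i = -f(x_i)$ by the Gauss method with partial pivoting, so no inverse is formed, and the loop stops by criterion (B) on $\max_k|\delta_{i,k}|$ or by (C). The usage reproduces the first step of Example 45 below, using $f_2(x) = -x_1^2 - x_2$ as given there.

```python
def solve_linear(A, b):
    """Gauss method with partial pivoting for a small dense system A x = b."""
    n = len(b)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        if M[p][k] == 0.0:
            raise ValueError("singular Jacobian")
        M[k], M[p] = M[p], M[k]
        for i in range(k + 1, n):
            m = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= m * M[k][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def newton_system(f, jac, x0, eps, imax):
    """Newton method in R^n: solve J(x_i) delta_i = -f(x_i), x_{i+1} = x_i + delta_i.
    Stops when max|delta_i| <= eps (criterion (B)) or after imax steps (C)."""
    x = [float(v) for v in x0]
    for i in range(imax):
        delta = solve_linear(jac(x), [-v for v in f(x)])
        x = [xi + di for xi, di in zip(x, delta)]
        if max(abs(d) for d in delta) <= eps:
            return x, i + 1
    return x, imax


# Example 45: f_1 = x_1^2 + x_2^2 - 1, f_2 = -x_1^2 - x_2
f = lambda x: [x[0] ** 2 + x[1] ** 2 - 1.0, -x[0] ** 2 - x[1]]
jac = lambda x: [[2 * x[0], 2 * x[1]], [-2 * x[0], -1.0]]

x_one_step, _ = newton_system(f, jac, [1.0, 1.0], 0.0, 1)
print(x_one_step)    # [-0.5, 2.0]
```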

Example 45 Consider the nonlinear system of two variables

$$f_1(x) = x_1^2 + x_2^2 - 1 = 0, \qquad f_2(x) = -x_1^2 - x_2 = 0.$$

The tangent plane $S_1$ of the function $z = f_1(x)$ at the point $x_1 = [1, 1]^T$ is

$$z = 2x_1 + 2x_2 - 3,$$

while the tangent plane $S_2$ of $z = f_2(x)$ at $x_1$ is

$$z = -2x_1 - x_2 + 1.$$

The intersection of the two planes is a line that crosses the plane $z = 0$ at the point $x_2 = [-1/2, 2]^T$.

The following figure shows this situation.

[Figure: the tangent planes $S_1$ and $S_2$ over the $(x, y)$ plane, and the point where their intersection line meets $z = 0$.]

Solve the system $f(x) = [f_1(x), \dots, f_n(x)]^T = 0$, $x = [x_1, \dots, x_n]^T$, using Newton's method, where

$$f_1(x) = x_1, \qquad f_i(x) = \cos(x_{i-1}) + x_i - 1 \quad (i = 2, \dots, n).$$

Set $x^{(0)} = [-1, 1, \dots, -1, 1]^T$. The Jacobian matrix is

$$J(x) = \begin{bmatrix}
1 & 0 & \cdots & \cdots & 0\\
-\sin(x_1) & 1 & \ddots & & \vdots\\
0 & -\sin(x_2) & \ddots & \ddots & \vdots\\
\vdots & \ddots & \ddots & 1 & 0\\
0 & \cdots & 0 & -\sin(x_{n-1}) & 1
\end{bmatrix}.$$

For $n = 4$, the elements of the sequence

$$x^{(k+1)} = x^{(k)} - [J(x^{(k)})]^{-1}f(x^{(k)})$$

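are

$$x^{(1)} = \begin{bmatrix}0\\-0.3818\\-0.7030\\0.2098\end{bmatrix}, \quad x^{(2)} = \begin{bmatrix}0\\0\\-0.0702\\-0.1720\end{bmatrix}, \quad x^{(3)} = \begin{bmatrix}0\\0\\0\\-0.0025\end{bmatrix}, \quad x^{(4)} = \begin{bmatrix}0\\0\\0\\\epsilon\end{bmatrix},$$

where $|\epsilon| \approx 0.5\cdot 10^{-16}$ is a rounding error.

It can be shown that for any $n \ge 1$ and any $x^{(0)}$, the sequence $x^{(k)}$ converges to the unique solution $x^* = [0, \dots, 0]^T$. In fact, since $J(x)$ is unit lower bidiagonal, one checks by induction that in exact arithmetic the first $k$ components of $x^{(k)}$ are zero. Hence $x^{(n)} = x^*$.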

Matlab programs: Newt1.m, fN1.m, fN1p.m
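Because $J(x)$ is unit lower bidiagonal, the linear system of each Newton step can be solved by forward substitution in $O(n)$ operations. The following Python sketch with hypothetical names (not Newt1.m) exploits this and reproduces the iterates listed above for $n = 4$.

```python
import math


def f(x):
    """f_1(x) = x_1, f_i(x) = cos(x_{i-1}) + x_i - 1 (i = 2, ..., n)."""
    return [x[0]] + [math.cos(x[i - 1]) + x[i] - 1.0 for i in range(1, len(x))]


def newton_step(x):
    """One Newton step; J(x) is unit lower bidiagonal with J[i][i-1] = -sin(x_{i-1}),
    so J delta = -f(x) is solved by forward substitution."""
    fx = f(x)
    delta = [-fx[0]]
    for i in range(1, len(x)):
        # row i: -sin(x_{i-1}) * delta_{i-1} + delta_i = -f_i(x)
        delta.append(-fx[i] + math.sin(x[i - 1]) * delta[i - 1])
    return [xi + di for xi, di in zip(x, delta)]


x = [-1.0, 1.0, -1.0, 1.0]          # x^(0) for n = 4
iterates = [x]
for k in range(4):
    x = newton_step(x)
    iterates.append(x)
for k in range(1, 5):
    print(k, [round(v, 4) for v in iterates[k]])
```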

Chapter 4

Numerical solution of Ordinary Differential Equations

Explicit one-step methods

We consider initial value problems (IVPs) of the form

$$y' = f(x, y), \quad y(x_0) = y_0 \qquad (f: \mathbb{R}\times\mathbb{R}^\ell \to \mathbb{R}^\ell;\ x \in \mathbb{R},\ y \in \mathbb{R}^\ell), \qquad (4.1)$$

where

$$f(x, y) = [f_1(x, y), \dots, f_\ell(x, y)]^T$$

is continuous on the open domain

$$D = \{(x, y) : |x - x_0| < \alpha_x,\ \|y - y_0\|_\infty < \alpha_y\} \subset \mathbb{R}^{\ell+1}. \qquad (4.2)$$

Here $\alpha_x$ and $\alpha_y$ are positive constants, and there exists a constant $L > 0$ such that

$$\|f(x, y) - f(x, z)\|_\infty \le L\|y - z\|_\infty \qquad ((x, y), (x, z) \in D). \qquad (4.3)$$

Then for all $(x_0, y_0) \in D$, there exists a unique solution $y(x) = [y_1(x), \dots, y_\ell(x)]^T$ of the IVP on some interval, that is,

$$y'(x) = f(x, y(x)), \qquad x \in [x_0, B]. \qquad (4.4)$$

We seek the approximate solution at discrete points (nodes)

$$x_0 = t_0 < t_1 < \dots < t_j < \dots < t_N = b \qquad (4.5)$$

of the interval $[x_0, b]$ $(b \le B)$.


Definition 46 The set $\{t_i\}_{i=0}^N$ is said to be a mesh or grid of the interval $[x_0, b]$. The quantity $h_i = t_{i+1} - t_i > 0$ is called the $i$th stepsize. The mesh is called equidistant if

$$t_i = t_0 + ih \quad (i = 0, 1, \dots, N), \qquad h = \frac{b - t_0}{N}. \qquad (4.6)$$

The approximation of the exact solution $y(x)$ at the point $t_i$ is denoted by $y_i$. Clearly, $y(t_0) = y_0$.

The explicit Euler method

A characteristic property of the Cauchy problem is that if $y(x)$ is known at a point $x$, then its derivative $y'(x) = f(x, y(x))$ is also known.

Using the component-wise linearizations (tangent lines)

$$y_i(x + h) \approx y_i(x) + hy_i'(x) = y_i(x) + hf_i(x, y(x)) \qquad (i = 1, \dots, \ell),$$

we have the approximation

$$y(x + h) = \begin{bmatrix} y_1(x + h)\\ \vdots\\ y_i(x + h)\\ \vdots\\ y_\ell(x + h) \end{bmatrix} \approx y(x) + hy'(x) = y(x) + hf(x, y(x)).$$

If only an approximate value $\hat{y} \approx y(x)$ is known at a point $x$, then the above formula changes to

$$y(x + h) \approx \hat{y} + hf(x, \hat{y}).$$

The basic idea of the Euler method is the following.

At the point $t_1 = t_0 + h_0$, approximate $y(t_1)$ by the tangent "line" at the point $(x_0, y_0)$:

$$y(t_0 + h_0) \approx y_0 + h_0y'(t_0) = y_0 + h_0f(t_0, y_0) =: y_1.$$

Using the approximation $y_1$ at the point $t_1$ and the same argument, we obtain the approximation

$$y(t_2) \approx y(t_1) + h_1f(t_1, y(t_1)) \approx y_1 + h_1f(t_1, y_1) =: y_2.$$

So we obtain the recursion

$$y_{i+1} = y_i + h_if(t_i, y_i) \qquad (i = 0, 1, \dots, N - 1), \qquad (4.7)$$

where $y_i \approx y(t_i)$.

This is called the explicit Euler method.

For the scalar ODE $y' = y$, $y(1) = 3$ (exact solution $y(x) = 3e^{x-1}$), we obtain the following figure (test_euler1.m):

[Figure: explicit Euler method; exact solution and Euler polygon for $t \in [1, 3]$.]

The points marked by rhombi are the approximate solutions $y_i$ ($t_i = 1 + 0.5i$, $i = 0, 1, 2, 3, 4$), while the blue line shows the exact solution.
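The following Python sketch reproduces the Euler polygon of this example. It is not test_euler1.m, and the function name is hypothetical. The sketch handles a scalar $y$. For systems, $y$ and $f(x, y)$ would be vectors, for example lists updated component-wise.

```python
import math


def euler(f, t0, y0, h, N):
    """Explicit Euler method (4.7) on the equidistant mesh t_i = t0 + i*h (scalar y)."""
    ts, ys = [t0], [y0]
    for i in range(N):
        ys.append(ys[-1] + h * f(ts[-1], ys[-1]))
        ts.append(t0 + (i + 1) * h)
    return ts, ys


ts, ys = euler(lambda x, y: y, 1.0, 3.0, 0.5, 4)     # y' = y, y(1) = 3
for t, y in zip(ts, ys):
    print(t, y, 3 * math.exp(t - 1))                 # approximation vs exact
```

Here each step multiplies the approximation by $1 + h = 1.5$, so $y_4 = 3\cdot 1.5^4 = 15.1875$, while the exact value is $y(3) = 3e^2 \approx 22.17$.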

Definition 47 The quantity

$$T(y(x), h) = y(x + h) - (y(x) + hf(x, y(x)))$$

is called the local error of the Euler method at the point $x$.

Definition 48 The error $e_i = y_i - y(t_i)$ is called the global error at the point $t_i$ $(i = 0, 1, \dots, N)$.

Consider the magnitude of the local error $T(y(x), h)$. Assume that $y(x) \in C^2[x_0, b]$. Then

$$y(x + h) = y(x) + hy'(x) + R_1(x, h),$$

where

$$\|R_1(x, h)\|_\infty \le h^2K_2, \qquad 2K_2 = \max_{1\le i\le\ell}\ \max_{x\in[x_0, b]}|y_i''(x)|. \qquad (4.8)$$

Hence, using $y'(x) = f(x, y(x))$,

$$T(y(x), h) = (y(x) + hy'(x) + R_1(x, h)) - (y(x) + hf(x, y(x))) = R_1(x, h),$$

which implies

$$\|T(y(x), h)\|_\infty \le K_2h^2 \qquad (x, x + h \in [x_0, b]).$$

Since $e_0 = y_0 - y(x_0) = 0$, the following result holds.

Theorem 49 If $y(x) \in C^2[x_0, b]$, then for the global error of the Euler method we have

$$\max_{1\le i\le N}\|e_i\|_\infty \le (b - x_0)K_2e^{L(b - x_0)}\max_{0\le i\le N-1}h_i. \qquad (4.9)$$

The result means that the global error of the Euler method is bounded by a constant multiple of the greatest stepsize. If the mesh sequence $\{t_i^{(N)}\}_{i=0}^N$ is such that $\max_{0\le i\le N-1}h_i^{(N)} \to 0$ for $N \to \infty$, then

$$\max_{1\le i\le N}\|e_i\|_\infty \to 0, \qquad N \to \infty. \qquad (4.10)$$

Thus the convergence order of the Euler method is 1.

The nature of convergence is illustrated in the next figure. It shows the exact solution $y(x) = x^4 + x^2$ and the numerical solutions (by Euler's method) of the problem

$$y' = 2y/x + 2x^3, \qquad y(1) = 2$$

for equidistant grids with stepsizes $h = 0.5$ and $h = 0.05$ (test_euler2.m).

[Figure: explicit Euler method; exact solution and numerical solutions with $h = 0.5$ and $h = 0.05$ for $t \in [1, 3]$.]
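The first-order behaviour can be checked numerically with the following Python sketch. It is a hypothetical stand-in for test_euler2.m, whose source is not shown. The sketch computes the maximal nodal error of Euler's method for this problem and several stepsizes. For small $h$, halving the stepsize should roughly halve the error. The coarse grid $h = 0.5$ is still far from that asymptotic regime.

```python
def euler_max_error(h):
    """Max nodal error of explicit Euler for y' = 2y/x + 2x^3, y(1) = 2 on [1, 3];
    exact solution y(x) = x^4 + x^2."""
    N = round(2.0 / h)
    x, y, err = 1.0, 2.0, 0.0
    for i in range(N):
        y = y + h * (2.0 * y / x + 2.0 * x ** 3)
        x = 1.0 + (i + 1) * h
        err = max(err, abs(y - (x ** 4 + x ** 2)))
    return err


for h in (0.5, 0.05, 0.01, 0.005):
    print(h, euler_max_error(h))
```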

Explicit one-step methods

There are several methods that are more efficient than the Euler method. One of these is the class of explicit one-step methods of the form

$$y_{i+1} = y_i + h_i\Phi(t_i, y_i, h_i) \qquad (i = 0, 1, \dots, N - 1). \qquad (4.11)$$

The function $\Phi(x, y, h)$ $(\Phi: \mathbb{R}\times\mathbb{R}^\ell\times\mathbb{R} \to \mathbb{R}^\ell)$, which depends on $f(x, y)$, is called the increment function. It is assumed that $\Phi$

- is continuous in each variable,

- satisfies the Lipschitz condition

$$\|\Phi(x, y, h) - \Phi(x, z, h)\|_\infty \le K\|y - z\|_\infty \qquad ((x, y), (x, z) \in D), \qquad (4.12)$$

where $K \ge 0$ is a constant and $|h| \le \hat{h}$, and

- satisfies

$$\Phi(x, y, 0) = f(x, y). \qquad (4.13)$$

The last condition is called the consistency condition.

The Euler method corresponds to the special case $\Phi(x, y, h) = f(x, y)$.
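The following Python sketch treats the increment function $\Phi$ as a parameter. The names are hypothetical. Besides Euler's $\Phi = f$, it includes Heun's method. Heun's method is not part of these notes; it is shown only as another consistent increment function ($\Phi(x, y, 0) = f(x, y)$), and it is known to be of order 2. The usage compares the errors at $x = 1$ for $y' = y$, $y(0) = 1$, with $h = 0.01$ and $h = 0.005$.

```python
import math


def one_step(phi, f, t0, y0, h, N):
    """Explicit one-step method (4.11), y_{i+1} = y_i + h * Phi(t_i, y_i, h),
    on the equidistant mesh t_i = t0 + i*h; returns y_N (scalar y)."""
    t, y = t0, y0
    for i in range(N):
        y = y + h * phi(f, t, y, h)
        t = t0 + (i + 1) * h
    return y


def phi_euler(f, x, y, h):
    return f(x, y)                        # Euler: Phi(x, y, h) = f(x, y)


def phi_heun(f, x, y, h):
    # Heun's method (not from the notes); consistent: Phi(x, y, 0) = f(x, y)
    k1 = f(x, y)
    k2 = f(x + h, y + h * k1)
    return 0.5 * (k1 + k2)


f = lambda x, y: y
for phi in (phi_euler, phi_heun):
    e1 = abs(one_step(phi, f, 0.0, 1.0, 0.01, 100) - math.e)
    e2 = abs(one_step(phi, f, 0.0, 1.0, 0.005, 200) - math.e)
    print(phi.__name__, e1 / e2)    # about 2 for Euler, about 4 for Heun
```

Passing $f$ into $\Phi$ reflects the remark that $\Phi$ depends on $f(x, y)$. The driver itself does not need to know which method it runs.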

Definition 50 The local error of explicit one-step methods at the point $x$ is defined by

$$T(y(x), h) = y(x + h) - (y(x) + h\Phi(x, y(x), h)). \qquad (4.14)$$

Definition 51 The one-step method is said to be of order $p$ if there exists a constant $K_p > 0$ such that

$$\|T(y(x), h)\|_\infty \le K_ph^{p+1}, \qquad x, x + h \in [x_0, b]. \qquad (4.15)$$

The quantity

$$e_i = y_i - y(t_i) \qquad (4.16)$$

is called the global error at the point $t_i$ $(i = 0, 1, \dots, N)$.

If the consistency condition $\Phi(x, y, 0) = f(x, y)$ holds, then $T(y(x), h) = o(h)$, that is,

$$\frac{T(y(x), h)}{h} = \frac{y(x + h) - y(x)}{h} - \Phi(x, y(x), h) \to y'(x) - f(x, y(x)) = 0 \qquad (h \to 0).$$

If, in addition, we assume that $y \in C^2$ and

$$\|\Phi(x, y, h_1) - \Phi(x, z, h_2)\| \le K\|y - z\| + K_2|h_1 - h_2| \qquad (K, K_2 \ge 0;\ (x, y), (x, z) \in D;\ |h_1|, |h_2| \le \hat{h}), \qquad (4.17)$$

then $T(y(x), h) = O(h^2)$ follows, using $\Phi(x, y(x), 0) = f(x, y(x)) = y'(x)$, from

$$\begin{aligned}
\|T(y(x), h)\| &= \|(y(x + h) - y(x) - h\Phi(x, y(x), 0)) + h[\Phi(x, y(x), 0) - \Phi(x, y(x), h)]\|\\
&\le \|y(x + h) - y(x) - hy'(x)\| + h\|\Phi(x, y(x), 0) - \Phi(x, y(x), h)\|\\
&\le O(h^2) + K_2h^2 = O(h^2).
\end{aligned}$$

The convergence of explicit one-step methods

By the definitions and condition (4.12) we have

$$\begin{aligned}
\|y_{i+1} - y(t_{i+1})\| &= \|y_i + h_i\Phi(t_i, y_i, h_i) - [y(t_i) + h_i\Phi(t_i, y(t_i), h_i) + T(y(t_i), h_i)]\|\\
&\le \|y_i - y(t_i) + h_i[\Phi(t_i, y_i, h_i) - \Phi(t_i, y(t_i), h_i)]\| + \|T(y(t_i), h_i)\|\\
&\le (1 + h_iK)\|y_i - y(t_i)\| + \|T(y(t_i), h_i)\|,
\end{aligned} \qquad (4.18)$$

that is,

$$\|e_{i+1}\| \le (1 + h_iK)\|e_i\| + \|T(y(t_i), h_i)\| \qquad (i = 0, 1, \dots, N - 1). \qquad (4.19)$$

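Lemma 52 For the solution of the inequality

$$\delta_{i+1} \le \alpha_i\delta_i + \beta_i \qquad (\delta_i, \alpha_i, \beta_i \ge 0;\ i = 0, 1, \dots, n) \qquad (4.20)$$

we have

$$\delta_{n+1} \le \left(\prod_{j=0}^n\alpha_j\right)\delta_0 + \sum_{i=0}^n\left(\prod_{j=i+1}^n\alpha_j\right)\beta_i, \qquad n \ge 0. \qquad (4.21)$$

Proof. For $n = 0$, the recursion gives

$$\delta_1 \le \alpha_0\delta_0 + \beta_0,$$

while the closed formula (with the convention $\prod_{j=l}^i f(j) = 1$ if $i < l$) gives

$$\left(\prod_{j=0}^0\alpha_j\right)\delta_0 + \sum_{i=0}^0\left(\prod_{j=i+1}^0\alpha_j\right)\beta_i = \alpha_0\delta_0 + \beta_0.$$

Assume the statement for some integer $n \ge 0$. For $n + 1$, using $\alpha_{n+1} \ge 0$, we have

$$\begin{aligned}
\delta_{n+2} &\le \alpha_{n+1}\delta_{n+1} + \beta_{n+1}\\
&\le \alpha_{n+1}\left[\left(\prod_{j=0}^n\alpha_j\right)\delta_0 + \sum_{i=0}^n\left(\prod_{j=i+1}^n\alpha_j\right)\beta_i\right] + \beta_{n+1}\\
&= \left(\prod_{j=0}^{n+1}\alpha_j\right)\delta_0 + \sum_{i=0}^{n+1}\left(\prod_{j=i+1}^{n+1}\alpha_j\right)\beta_i,
\end{aligned}$$

which was to be proved.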
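As a numerical sanity check of Lemma 52, the following Python sketch with hypothetical names runs the recursion with equality in (4.20) and compares the result with the closed form (4.21). For equality the two must agree. The usage also applies the choice $\alpha_j = 1 + h_jK$ used below and checks the resulting bound of type (4.24) with made-up data.

```python
import math


def recursion(alpha, beta, delta0):
    """delta_{i+1} = alpha_i * delta_i + beta_i (equality in (4.20)); returns delta_{n+1}."""
    d = delta0
    for a, b in zip(alpha, beta):
        d = a * d + b
    return d


def closed_form(alpha, beta, delta0):
    """Right-hand side of (4.21); math.prod of an empty list is 1."""
    n = len(alpha) - 1
    total = math.prod(alpha) * delta0
    for i in range(n + 1):
        total += math.prod(alpha[i + 1:]) * beta[i]
    return total


alpha, beta = [1.1, 0.9, 1.3, 1.05], [0.2, 0.0, 0.5, 0.1]
print(recursion(alpha, beta, 2.0), closed_form(alpha, beta, 2.0))

# made-up one-step data: h_j = 0.1, K = 2, local errors 0.01
hs, K = [0.1] * 10, 2.0
bound = closed_form([1 + h * K for h in hs], [0.01] * 10, 0.0)
print(bound, math.exp(K * sum(hs)) * sum([0.01] * 10))   # (4.21) vs. (4.24)-type bound
```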

If we apply the Lemma with $\delta_j = \|e_j\|$, $\alpha_j = 1 + h_jK$ and $\beta_j = \|T(y(t_j), h_j)\|$, then we obtain the inequality

$$\|e_{n+1}\| \le \left[\prod_{j=0}^n(1 + h_jK)\right]\|e_0\| + \sum_{i=0}^n\left[\prod_{j=i+1}^n(1 + h_jK)\right]\|T(y(t_i), h_i)\|. \qquad (4.22)$$

Using the inequality $1 + x \le e^x$ $(x \ge 0)$, we obtain $1 + h_jK \le e^{Kh_j}$ and

$$\prod_{j=i+1}^n(1 + h_jK) \le \prod_{j=i+1}^ne^{Kh_j} = e^{K\sum_{j=i+1}^nh_j} \le e^{K(b - x_0)}. \qquad (4.23)$$

Substituting into (4.22), we obtain the estimate

$$\|e_{n+1}\| \le e^{K(b - x_0)}\left(\|e_0\| + \sum_{i=0}^n\|T(y(t_i), h_i)\|\right). \qquad (4.24)$$

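If the method is of order $p$, that is, $\|T(y(t_i), h_i)\| \le d_ph_i^{p+1}$ with $d_p = K_p$ from (4.15), then

$$\begin{aligned}
\max_{1\le i\le N}\|e_i\| &\le e^{K(b - x_0)}\left(\|e_0\| + \sum_{i=0}^{N-1}d_ph_i^{p+1}\right)\\
&\le e^{K(b - x_0)}\left(\|e_0\| + d_p\left(\max_{0\le i\le N-1}h_i\right)^p\sum_{i=0}^{N-1}h_i\right)\\
&\le e^{K(b - x_0)}\left(\|e_0\| + (b - x_0)d_p\left(\max_{0\le i\le N-1}h_i\right)^p\right).
\end{aligned} \qquad (4.25)$$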

Theorem 53 If the one-step method (4.11) is of order $p$ and $y_0 = y(t_0)$, then the convergence is also of order $p$, that is,

$$\max_{1\le i\le N}\|e_i\| \le (b - x_0)d_pe^{K(b - x_0)}\left(\max_{0\le i\le N-1}h_i\right)^p. \qquad (4.26)$$

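The result means that the global error is bounded by a constant multiple of the $p$th power of the greatest stepsize. If the mesh sequence $\{t_i^{(N)}\}_{i=0}^N$ is such that $\max_{0\le i\le N-1}h_i^{(N)} \to 0$ for $N \to \infty$, then

$$\max_{1\le i\le N}\|e_i\| = O\left(\left(\max_{0\le i\le N-1}h_i\right)^p\right) \to 0, \qquad N \to \infty. \qquad (4.27)$$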

For the explicit Euler method, $\|T(y(x), h)\| \le K_2h^2$ if $y \in C^2$. Hence its convergence order is 1.

In general, a method of order $p$ ($p \ge 1$) can have convergence order $p$ only if the exact solution