Guidelines for the Development and Assessment of Program Learning Outcomes

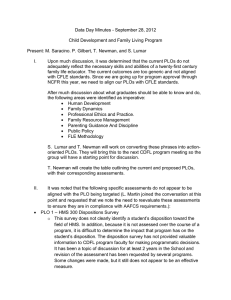

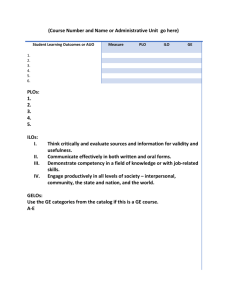
